Dedicated and bare metal cloud servers have countless configurations, and selecting the right one can become overwhelming. It is critical to determine a server build that meets your demands.

Choosing between a single and a dual processor setup affects performance, scalability, and cost. Knowing the key differences between the two makes that choice simpler.

This article explores the differences between single and dual-processor servers to help you make an informed decision.

Differences Between a Processor, Core, and Threads

In the early days of computing, single-core CPUs were the only available choice. Performance depended largely on clock speed, where a higher clock speed meant faster task execution.

Computing demands grew, and multi-core processors and threads appeared on the market. The innovation enabled processing multiple tasks simultaneously, and multi-core CPUs became a new standard for most systems.

High-performance computing servers utilize multiple processors on a single motherboard. These setups are intended for demanding workloads and, being specialized, are less common than single-processor systems.

The sections below explain the differences between CPUs (processors), cores, and threads.

What Is a CPU?

A CPU (Central Processing Unit) is the hardware that executes instructions from software and coordinates work with other hardware. It is often referred to as the "brain" of the computer. A CPU performs calculations, processes data, and manages I/O operations.

A CPU works through a repeating cycle of fetching an instruction, decoding it, and executing it. To carry out tasks, it communicates with other parts of the computer, such as memory and storage. A processor's speed and efficiency largely determine system performance, especially in CPU-bound workloads where the processor is the limiting factor.
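
The cycle described above can be illustrated with a toy model. This is a minimal sketch, not a description of how a real CPU is built: the three-instruction set (`LOAD`, `ADD`, `HALT`) and the `run` function are hypothetical names invented for this illustration.

```python
def run(program):
    """Run a toy program and return the final accumulator value."""
    accumulator = 0
    pc = 0  # program counter: index of the next instruction

    while True:
        instruction = program[pc]      # fetch the next instruction
        pc += 1
        opcode, operand = instruction  # decode it into opcode and operand
        if opcode == "LOAD":           # execute the decoded operation
            accumulator = operand
        elif opcode == "ADD":
            accumulator += operand
        elif opcode == "HALT":
            return accumulator
        else:
            raise ValueError(f"unknown opcode: {opcode}")


# Hypothetical program: load 2, add 3, then stop.
program = [("LOAD", 2), ("ADD", 3), ("HALT", None)]
result = run(program)
print(result)
```

Each pass through the loop is one fetch, decode, and execute step. A higher clock speed corresponds to running this loop more times per second.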

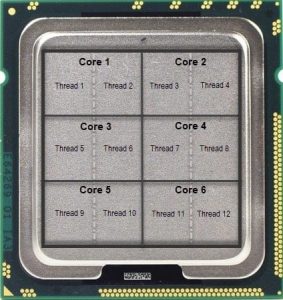

What Is a CPU Core?

A core is a physical component that acts as a processor inside a CPU chip. Every core inside a CPU can process a task independently. The more cores a CPU has, the more tasks it can process simultaneously.

Compared to single-core CPUs, a multi-core architecture improves performance and processing speed. Because concurrent tasks are spread across cores, running several of them at once does not slow the system down the way it would on a single core.

What Are CPU Threads?

CPU threads are the paths a CPU uses to execute instructions. A single core can support multiple hardware threads, which lets it work on more than one task by using execution resources that a single thread would leave idle. The operating system sees each thread as a separate logical processor. This technique is known as simultaneous multithreading (SMT). Intel's implementation is branded Hyper-Threading, while AMD uses the term SMT.

For example, if a CPU has six cores with two threads per core, there are 12 paths for information to be processed simultaneously. However, CPU threads don't offer the same performance improvements as additional cores do, because the threads on one core share that core's execution resources.
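
The arithmetic in the example above can be written out as a short sketch. The helper name `logical_processors` is an assumption made for illustration; `os.cpu_count()` is the standard-library call that reports how many logical processors the operating system sees on the machine running the script.

```python
import os


def logical_processors(cores, threads_per_core):
    """Return the number of hardware threads (logical processors)."""
    return cores * threads_per_core


# The six-core example from the text, with two threads per core.
example_threads = logical_processors(cores=6, threads_per_core=2)
print(example_threads)  # 12

# Logical processors visible to the OS on this machine.
# The call may return None if the count cannot be determined.
print(os.cpu_count())
```

Note that the reported count includes hardware threads, so it is usually higher than the number of physical cores when SMT is enabled.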

Single vs. Dual CPU Server

The choice between a single and a dual CPU server depends on the requirements, workload, and available budget. Each configuration caters to different use cases. The table below summarizes the key differences between single and dual CPU servers:

| Feature | Single CPU Server | Dual CPU Server |
|---|---|---|
| Definition | One CPU socket with limited core count and processing power. | Two CPU sockets with doubled core count and processing power. |
| Design | Simple and cost-efficient for basic workloads. | Complex with NUMA support. Intended for intensive workloads. |
| Performance | Intended for smaller workloads and working within the system's limits. | Suitable for high-precision tasks, resource-heavy applications, and virtual machines (VMs). |
| Use cases | Application servers, small web servers, DNS hosting, dev or test environments. | HPC, database management, server clusters, and virtualization. |
| Price | Lower costs due to fewer hardware requirements. | Significant upfront costs due to a dual CPU setup, which requires additional RAM and specialized motherboards. |

Single CPU servers are simple and affordable. They are suitable for smaller workloads and constrained budgets. On the other hand, dual CPU servers are better suited for intensive workloads due to improved performance and vertical scaling.

The sections below provide an in-depth overview of the features listed in the table.

Definition

The fundamental difference between single and dual-processor servers is the number of physical processor units on the motherboard.

Single CPU servers have one processor socket and can house a single processor. These servers are suitable for workloads with moderate processing power and limited parallelism requirements.

Dual CPU servers have two sockets and can house two processors simultaneously. They are suitable for environments that require multitasking, parallelism, and additional processing power.

Design

Architectural design differences are significant, and they determine how suitable each server is for different workloads.

Single CPU servers have a straightforward design with one socket. The server is limited to the processor's built-in resources with limited expansion options. Typically, there are fewer RAM slots and PCIe expansion options than with dual CPU servers.

Dual CPU servers feature two sockets, each with its own dedicated resources, such as directly attached memory. They rely on technologies such as NUMA (non-uniform memory access) to optimize resource allocation between the two processors. There is also more room for expansion thanks to additional RAM slots and PCIe lanes, resulting in greater scalability for HPC workloads and other resource-intensive tasks.
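
On Linux, the NUMA layout of a running server can be inspected through sysfs. The sketch below assumes the standard `/sys/devices/system/node` directory, where each `nodeN` entry contains a `cpulist` file. The function name `numa_nodes` is hypothetical, and the script prints nothing on systems without that directory.

```python
from pathlib import Path


def numa_nodes(base="/sys/devices/system/node"):
    """Map each NUMA node name to its CPU list string, e.g. {"node0": "0-15"}.

    Returns an empty dict when the directory is missing (for example on
    non-Linux systems or kernels without NUMA support).
    """
    root = Path(base)
    if not root.is_dir():
        return {}

    nodes = {}
    for node_dir in sorted(root.glob("node[0-9]*")):
        cpulist = node_dir / "cpulist"
        if cpulist.is_file():
            nodes[node_dir.name] = cpulist.read_text().strip()
    return nodes


for name, cpus in numa_nodes().items():
    print(f"{name}: CPUs {cpus}")
```

A dual CPU server typically reports at least two nodes, one per socket. Software that keeps a process and its memory on the same node avoids slower cross-socket memory access.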

Performance

The performance differences between single and dual CPU servers depend on the workload and on how well the software utilizes the available resources.

Single CPU servers handle most workloads well. High-performance CPUs like the Intel® Core™ i9 series provide high clock speeds, up to 24 cores, and advanced features. Modern CPUs are versatile and can handle moderate parallelism. However, being limited to a single CPU means these servers are best suited for smaller workloads.

Dual CPU servers outperform single processor ones at multi-threaded and parallelized workloads. They often use a matched CPU pair with additional performance boost options. For example, a pair of 5th Gen Intel Xeon Scalable processors offers a greater overall core count and memory capacity than a single processor of the same model. This makes dual CPU servers well suited to highly parallelized tasks and intensive workloads.
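
How much a second processor helps depends on how much of the workload can run in parallel. One common way to estimate this is Amdahl's law, which is not part of the comparison above and is used here only as a rough model. The sketch assumes ideal scaling and ignores NUMA and memory bandwidth effects, and the core counts (32 and 64) are placeholder values.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Theoretical speedup over one core according to Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Compare a hypothetical 32-core single CPU with a 64-core dual CPU setup.
for p in (0.50, 0.95):
    single = amdahl_speedup(p, 32)
    dual = amdahl_speedup(p, 64)
    print(f"parallel={p:.0%}: 32 cores -> {single:.2f}x, 64 cores -> {dual:.2f}x")
```

When only half of the work is parallel, doubling the core count barely changes the result, because the serial half dominates. The gain from the second CPU grows as the parallel fraction approaches 100%.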

Use Cases

Both single and dual CPU servers have use cases that differ based on workload intensity.

Single CPU servers are ideal for tasks that don't require extensive processing power or parallelism. Common use cases include:

- Web hosting. Supports small and medium-sized websites or content management systems (CMS) with modest processing requirements.

- Development. Runs staging, development, and test environments used by software teams.

- DNS hosting. Manages domain name resolution for small-scale deployments.

- Light virtualization. Hosts several VMs with limited and isolated workloads.

Dual CPU servers are better suited for high-performance, resource-intensive, and mission-critical applications. For example:

- HPC. Powers simulations, calculation-heavy workloads, and data modeling.

- Artificial Intelligence. Runs AI/ML applications that train complex models.

- Databases. Handles large-scale databases with many simultaneous queries.

- Virtualization. Runs extensive virtual environments with multiple VMs assigned to dedicated cores.

- Server clusters. Supports enterprise-level applications and scalability for clustered environments.

Price

The cost difference between single and dual CPU servers comes from the differences in hardware complexity. Knowing these differences is helpful when choosing the most cost-efficient option for the intended workload.

Single CPU servers are more affordable and ideal for businesses with budget constraints. Costs are lower mainly because of:

- Simpler hardware. Upfront costs are lower because there is one CPU socket, fewer RAM slots, and a simpler cooling system.

- Lower operational costs. Fewer CPUs mean lower power consumption, which reduces energy bills.

Dual CPU servers have higher upfront and operational costs due to complex hardware and advanced scalability options. Factors that contribute to the increased price are:

- Complex hardware. The additional CPU and a motherboard that supports a dual-socket architecture increase hardware costs. These systems are also usually equipped with significantly more memory, which further raises expenses.

- Advanced cooling. Dual CPU systems produce more heat and typically need a more capable cooling solution (in high-density deployments, sometimes liquid immersion cooling).
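
The operational side of the cost difference can be estimated with simple arithmetic. In the sketch below, the average power draw figures (250 W and 450 W) and the electricity price (0.15 per kWh) are placeholders chosen for illustration, not measurements of any real server. The function name `annual_energy_cost` is also hypothetical.

```python
def annual_energy_cost(avg_watts, price_per_kwh, hours_per_year=24 * 365):
    """Estimate yearly electricity cost for a server running continuously."""
    kwh_per_year = avg_watts / 1000 * hours_per_year
    return kwh_per_year * price_per_kwh


# Placeholder inputs: replace with measured power draw and your local tariff.
single_cost = annual_energy_cost(avg_watts=250, price_per_kwh=0.15)
dual_cost = annual_energy_cost(avg_watts=450, price_per_kwh=0.15)

print(f"single CPU: {single_cost:.2f} per year")
print(f"dual CPU:   {dual_cost:.2f} per year")
print(f"difference: {dual_cost - single_cost:.2f} per year")
```

The estimate covers only the server's own power draw. Cooling overhead in the data center adds to the total and is not modeled here.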

Note: Read more about the challenges and solutions in data center sustainability.

Single vs. Dual Processor Server: How to Choose

Selecting the right server configuration depends on multiple factors, such as workload demands, budget constraints, and operational considerations. There is no universal formula for choosing a server, but the following guidelines can help determine the best option for you:

- Purpose. Create a clear outline of what the server is for and what the software requires. Identify the required processing power, memory, and other hardware constraints.

- Workload. Plan and anticipate workloads during peak and high-demand times. Ensure the server has sufficient resources to carry out tasks while avoiding over-provisioning.

- Costs. Evaluate the costs of running the IT infrastructure and ensure they don't outweigh the benefits it provides.

- Expectations. If providing a service, ensure the server meets the client's needs, especially if there is a service level agreement (SLA). Downtime or performance issues can impact customer satisfaction.
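
The workload guideline can be turned into a rough first-pass check. This is only a sketch under stated assumptions, not a sizing formula: the 25% headroom, the core counts, and the names `cores_needed` and `smallest_fit` are all hypothetical, and real sizing also has to account for memory, storage, and I/O.

```python
import math


def cores_needed(peak_busy_cores, headroom=0.25):
    """Cores to provision: peak demand plus a safety margin, rounded up."""
    return math.ceil(peak_busy_cores * (1 + headroom))


def smallest_fit(peak_busy_cores, options, headroom=0.25):
    """Return the name of the smallest option that covers the need, or None."""
    need = cores_needed(peak_busy_cores, headroom)
    candidates = [(cores, name) for name, cores in options.items() if cores >= need]
    return min(candidates)[1] if candidates else None


# Hypothetical configurations to choose from.
options = {"single CPU (24 cores)": 24, "dual CPU (48 cores)": 48}

choice = smallest_fit(peak_busy_cores=18, options=options)
print(choice)
```

Picking the smallest configuration that still covers peak demand plus headroom reflects the advice to have sufficient resources while avoiding over-provisioning. A result of `None` means neither option is large enough, which points toward scaling out across several servers.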

Conclusion

This guide explained the key differences between single and dual-processor servers. Due to their versatility and lower cost, single CPU servers hold a large portion of today's market. Dual CPU servers are best suited for enterprise environments, data centers, and intensive workloads.

Next, learn more about how data center cooling can reduce operational costs and prevent power outages.