Amidst all the hype surrounding artificial intelligence (AI), many AI-related buzzwords are incorrectly used interchangeably. The synonymous use of the terms AI and machine learning (ML) is a common example of this unfortunate terminology mix-up.

This article provides an in-depth comparison of AI and machine learning, two buzzwords currently dominating business dialogues. Read on to learn exactly where these two technologies overlap and what sets them apart.

What Is Artificial Intelligence?

Artificial intelligence is a branch of computer science focused on creating systems capable of performing tasks that typically require human intelligence. This simulation of human-like capabilities enables AI to perform tasks that conventional, non-AI software cannot easily handle, such as:

- Generating brand-new content, designs, or solutions by following instructions.

- Predicting future trends and behaviors.

- Recognizing intricate patterns in the provided data.

- Improving performance over time without additional programming.

- Operating in dynamic and unstructured environments.

AI-based systems use mathematical algorithms to process large amounts of data, identify patterns within inputs, and make decisions or predictions based on available data. However, before a learning-based AI system is ready for real-world use, it must first go through extensive training.

AI systems are trained on massive data sets that contain examples the model can learn from. During training, the system adjusts its internal parameters to reduce its errors on the training data. Once sufficiently trained, the AI can process new, previously unseen data in the same format as the data it was trained on.
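To make the idea of "adjusting parameters" concrete, here is a minimal sketch in plain Python, assuming the simplest possible model: a straight line `y = w * x + b` fitted with gradient descent on mean squared error. The function names, toy data, learning rate, and epoch count are illustrative choices, not part of any specific AI product.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Adjust parameters w and b to reduce mean squared error on the training data."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to lower the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(w, b, x):
    """Apply the trained parameters to a new, previously unseen input."""
    return w * x + b


# Hypothetical training data generated from the relationship y = 2x + 1
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [2 * x + 1 for x in train_x]

w, b = train_linear_model(train_x, train_y)
print(round(w, 2), round(b, 2))        # learned parameters, close to 2 and 1
print(round(predict(w, b, 10.0), 1))   # prediction for an input outside the training set
```

Real systems have far more parameters and rely on frameworks to compute gradients, but the loop follows the same idea: predict, measure the error, and nudge the parameters to shrink it.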

One of the most impactful advantages of AI technologies is that we can use them to automate repetitive tasks. Here are some common uses of AI across various industries:

- Analyzing medical images (e.g., X-rays, MRIs) and patient data to diagnose diseases.

- Assessing and predicting financial risks based on historical data.

- Recommending products to users based on browsing histories, preferences, and purchase behavior.

- Analyzing transaction patterns to detect fraudulent activities.

- Enabling autonomous vehicles to navigate, recognize objects, and make driving decisions.

- Grading assignments and exams.

- Generating music, art, and articles based on the user's guidelines.

- Screening resumes, scheduling interviews, and assisting in the recruitment process.

Our article on artificial intelligence examples provides an extensive look at how AI is used across different industries. You can also check out our post on the use of AI in business to see the most common ways different teams use AI to speed up and improve work.

Types of AI

Artificial intelligence is commonly divided into two types based on capability: narrow (weak) AI and general (strong) AI.

Narrow AI is built to handle a specific task or a limited set of tasks within a defined context. This form of AI performs exceptionally well on well-defined, repetitive activities. Some common examples of narrow AI include:

- Speech recognition in voice assistants like Siri or Alexa.

- Spam filters used by email services to identify and filter out unwanted emails.

- Recommendation algorithms used by streaming platforms.

- Image recognition software in self-driving cars.

- Chatbots and virtual assistants used for customer service on websites.

- Predictive text and autocomplete functions in smartphones and word processors.

- Stock trading algorithms that analyze market data and execute trades.

Narrow AI operates within the scope defined by its algorithms, rules, and training data. This type of AI is highly specialized and cannot perform tasks outside that scope. Another common name for narrow AI is artificial narrow intelligence (ANI).

On the other hand, general AI refers to a hypothetical AI system that exhibits universal human-like intelligence. Unlike narrow AI, general AI would possess the ability to understand, learn, and apply knowledge across different domains. As a result, strong AI would be able to perform cognitive tasks without requiring specialized training.

General AI remains a theoretical concept and has not yet been achieved. If we ever develop it, strong AI systems would be able to perform a wide range of tasks at a level comparable to or exceeding human capabilities. Another name for general AI is artificial general intelligence (AGI).

Check out the SpyFu case study to see how transferring AI workloads to pNAP's Bare Metal Cloud helped our client reduce cloud costs by 50%.

What Is Machine Learning?

Machine learning is a subset of AI focused on developing algorithms that enable computers to learn from provided data. Training these algorithms enables us to create machine learning models, programs that ingest previously unseen input data and produce a certain output.

Supervised machine learning models typically perform one of two broad types of tasks:

- Classification tasks that require the model to assign provided data to a category (e.g., whether an email is spam or a legitimate message).

- Regression tasks that require the model to predict a continuous numerical value (e.g., a house's price or next quarter's sales revenue).
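The sketch below illustrates the difference in output type, assuming k-nearest neighbors (k-NN) as the algorithm because it can handle both tasks: for classification it takes a majority vote over the neighbors' labels, and for regression it averages their values. The data sets and function names are hypothetical.

```python
from collections import Counter


def k_nearest(train, query, k):
    """Return the k (feature, target) pairs whose feature is closest to the query."""
    return sorted(train, key=lambda pair: abs(pair[0] - query))[:k]


def knn_classify(train, query, k=3):
    """Classification: majority vote over the neighbors' labels -> a discrete class."""
    labels = [label for _, label in k_nearest(train, query, k)]
    return Counter(labels).most_common(1)[0][0]


def knn_regress(train, query, k=3):
    """Regression: average of the neighbors' targets -> a continuous number."""
    values = [value for _, value in k_nearest(train, query, k)]
    return sum(values) / len(values)


# Hypothetical data: number of links in an email -> label
emails = [(0, "legitimate"), (1, "legitimate"), (2, "legitimate"),
          (8, "spam"), (9, "spam"), (12, "spam")]
# Hypothetical data: house size in square meters -> price in thousands
houses = [(50, 150.0), (60, 180.0), (70, 210.0), (120, 360.0), (130, 390.0)]

print(knn_classify(emails, 10))  # a class label
print(knn_regress(houses, 65))   # a numeric estimate
```

The same neighbor search feeds both functions; only the final step (voting vs. averaging) decides whether the model classifies or regresses.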

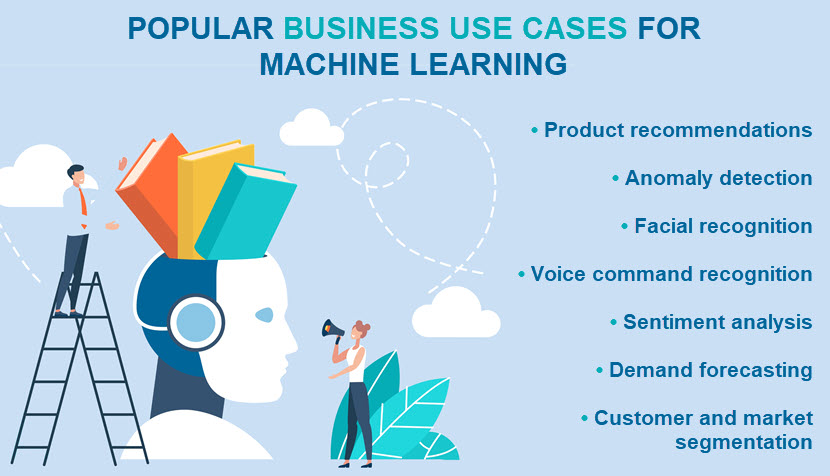

Although ML might appear limited, companies apply machine learning to a broad array of tasks. The most common applications of ML models include:

- Classifying files into different categories or classes.

- Analyzing data to uncover hidden trends or anomalies.

- Making continuous predictions, such as predicting house prices or potential sales revenue.

- Grouping similar data points into clusters (e.g., customer or market segmentation).

There are four main types of machine learning:

- Supervised learning. This type of machine learning uses labeled data during model training. Each piece of input data is paired with a corresponding output, and the model learns to map inputs to their correct outputs.

- Unsupervised learning. This type of ML relies on unlabeled data during training. The model must find patterns and relationships without relying on predefined input-output labels.

- Semi-supervised learning. This strategy involves the combined use of labeled and unlabeled training data.

- Reinforcement learning. This type of machine learning allows the algorithm to interact with an environment and receive feedback in the form of rewards or penalties. The model learns by figuring out how to maximize cumulative rewards over time.
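As an illustration of reinforcement learning, here is a minimal tabular Q-learning sketch. The five-cell corridor environment, its rewards, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for readability; the update rule itself is standard Q-learning.

```python
import random

# Hypothetical environment: a corridor of cells 0..4. The agent starts in cell 0,
# reaching cell 4 ends the episode with reward +1, and every other move costs -0.01.
N_STATES = 5
ACTIONS = (-1, 1)  # move left or move right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: estimate each action's long-term value from rewards."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Target = immediate reward + discounted value of the best next action
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
# Greedy policy: the highest-valued action in each non-terminal cell
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should move right (`1`) in every cell, because the shortest path to the goal collects the highest cumulative reward.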

Our supervised vs. unsupervised learning article provides an in-depth look at the two most common methods of "teaching" ML models to perform new tasks.

Types of Machine Learning Algorithms

There are several categories of ML algorithms based on their learning approach and the nature of the task they perform. Here are the most common types of machine learning algorithms:

- Regression algorithms. These algorithms predict a continuous output based on one or more input variables. The most popular regression algorithms are linear, polynomial, ridge, and lasso regression.

- Classification algorithms. These algorithms classify input data into predefined classes. The most popular algorithms of this type are support vector machines (SVM), decision trees, random forests, and K-nearest neighbors (KNN).

- Clustering algorithms. These algorithms group similar data points into clusters to find natural groupings in the data. The most common clustering algorithms are K-means, hierarchical clustering, and DBSCAN (Density-Based Spatial Clustering of Applications with Noise).

- Dimensionality reduction algorithms. These algorithms reduce the number of features in data while preserving as much information as possible. The most common algorithms of this type are principal component analysis (PCA), t-Distributed Stochastic Neighbor Embedding (t-SNE), and linear discriminant analysis (LDA).

- Reinforcement learning algorithms. These algorithms interact with an environment and gather feedback in the form of rewards or penalties. Common examples of reinforcement learning algorithms are Q-learning, deep Q-networks, policy gradient methods, and actor-critic methods.

- Deep learning algorithms. These algorithms use deep neural networks (DNNs) to model complex patterns in data. Common examples of deep learning algorithms are convolutional neural networks (CNNs), recurrent neural networks (RNNs), long short-term memory (LSTM) networks, and generative adversarial networks (GANs).
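To show what a clustering algorithm does in practice, here is a minimal K-means sketch (Lloyd's algorithm) in plain Python. The customer data and the choice of `k=2` are hypothetical; production code would typically use a library implementation with smarter initialization.

```python
import random


def kmeans(points, k, iterations=100, seed=0):
    """Cluster 2-D points into k groups with Lloyd's algorithm (standard K-means)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for x, y in points:
            nearest = min(range(k), key=lambda i: (x - centroids[i][0]) ** 2 + (y - centroids[i][1]) ** 2)
            clusters[nearest].append((x, y))
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for i, cluster in enumerate(clusters):
            if cluster:
                mean_x = sum(p[0] for p in cluster) / len(cluster)
                mean_y = sum(p[1] for p in cluster) / len(cluster)
                new_centroids.append((mean_x, mean_y))
            else:
                new_centroids.append(centroids[i])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customer data: (visits per year, average spend per visit)
customers = [(1, 20), (2, 25), (1, 22), (20, 200), (22, 210), (21, 190)]
centroids, clusters = kmeans(customers, k=2)
for center, members in zip(centroids, clusters):
    print(center, members)
```

No labels are provided at any point; the two customer segments emerge purely from the distances between data points.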

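A major downside of deep learning is that training models can take a long time and demands substantial computing power. Deep learning frameworks such as PyTorch and TensorFlow help by providing optimized, GPU-accelerated implementations that can significantly speed up the training process.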

What Is the Difference Between AI and Machine Learning?

ML is a subset of AI, so the two fields overlap considerably, but the terms are not interchangeable. The table below provides an in-depth overview of the differences between artificial intelligence and machine learning:

| Point of Comparison | Artificial Intelligence | Machine Learning |
|---|---|---|
| Definition | A broad field of computer science focused on creating systems capable of performing tasks that typically require human intelligence. | A subset of AI that uses algorithms to enable systems to learn from input data and extract knowledge from it. |
| Scope | Encompasses various subfields, including ML, natural language processing (NLP), robotics, expert systems, and computer vision. | Concerned only with developing machine learning algorithms and models. |
| Goal | Create systems that mimic human cognitive functions. | Develop models that can generalize from data, recognize patterns, and make data-driven predictions or decisions. |
| Outputs | Depends on the use case (predictions, decisions, new content, etc.). | Model predictions, such as class labels, probability scores, or numerical values. |
| Typical Applications | Autonomous vehicles, personal assistants (e.g., Siri, Alexa), game playing (e.g., AlphaGo), robotics, medical diagnosis systems. | Spam filtering, recommendation systems, speech recognition, predictive analytics, fraud detection. |
| Data Dependency | Can function with or without large amounts of data, depending on the use case. | Highly data-dependent; requires large data sets to train models effectively. |
| Learning Ability | Includes both learning (ML) and non-learning methods (rule-based systems and logic-based approaches). | Learns from data to improve model performance over time. |
| Level of Human Intervention | Often requires significant human intervention to design rules, features, and logic. | Requires human intervention for data labeling, model selection, and parameter tuning. |
| Current Research Focus | Understanding the ethical implications of AI, experimenting with different cognitive architectures, and improving explainability. | Optimizing model performance, improving scalability, and dealing with overfitting. |

AI vs. Machine Learning: Objectives

The primary objective of AI is to develop machines that mimic human cognitive abilities, such as:

- Reasoning.

- Learning.

- Problem-solving.

- Perception.

- Understanding natural language.

- Understanding context.

- Case-by-case decision-making.

On the other hand, the primary objective of ML is to enable computers to learn from and make predictions or decisions based on data. The goal is to create systems that automatically detect patterns, extract insights, and generalize from data to perform tasks such as classification, regression, and clustering.

AI vs. Machine Learning: Learning Methods

AI encompasses a variety of learning methods. These methods include:

- Symbolic learning (logic-based approaches). Symbolic learning involves using explicitly defined rules, logic, and symbolic representations to perform reasoning and decision-making. These systems rely on human-crafted knowledge bases and inference rules to solve problems and make decisions.

- Sub-symbolic learning (data-driven approaches). Sub-symbolic learning involves using statistical and computational techniques to learn patterns and representations directly from data. This approach relies on neural networks and ML models that improve performance through experience.

- Hybrid learning approaches. This learning method combines symbolic and sub-symbolic learning. For example, you could combine rule-based systems with neural networks to leverage the strengths of both.
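The following sketch contrasts the three approaches on a toy spam-filtering problem. It is a simplified illustration under stated assumptions: the "free money" rule stands in for expert knowledge, and a single learned link-count threshold stands in for the data-driven component (a real sub-symbolic system would more likely use a neural network or another ML model). All names and data are hypothetical.

```python
# Symbolic: an explicit, human-written rule.
def symbolic_is_spam(email):
    return "free money" in email["text"].lower()


# Sub-symbolic (data-driven): learn a link-count threshold from labeled examples.
def learn_threshold(examples):
    """Return the threshold that misclassifies the fewest labeled training emails."""
    candidates = sorted({email["links"] for email, _ in examples})

    def errors(threshold):
        return sum((email["links"] >= threshold) != is_spam for email, is_spam in examples)

    return min(candidates, key=errors)


# Hybrid: flag an email if either the expert rule or the learned rule fires.
def hybrid_is_spam(email, threshold):
    return symbolic_is_spam(email) or email["links"] >= threshold


# Hypothetical labeled training data: (email, is_spam)
training = [
    ({"text": "Lunch at noon?", "links": 0}, False),
    ({"text": "Project update", "links": 1}, False),
    ({"text": "Limited offer", "links": 5}, True),
    ({"text": "Click here now", "links": 7}, True),
]
threshold = learn_threshold(training)
print(threshold)
print(hybrid_is_spam({"text": "Claim your FREE MONEY today", "links": 0}, threshold))
```

The expert rule catches a case the learned threshold would miss (zero links), while the learned threshold covers cases no one wrote a rule for, which is the motivation behind hybrid designs.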

On the other hand, machine learning focuses specifically on data-driven approaches to learning. The primary learning methods in ML include:

- Supervised learning (i.e., learning from labeled data).

- Unsupervised learning (i.e., learning from unlabeled data).

- Semi-supervised learning (i.e., learning from a mix of labeled and unlabeled data).

- Reinforcement learning (i.e., learning by interacting with an environment and receiving feedback in the form of rewards or penalties).

While highly beneficial, AI also introduces considerable problems. Our article on artificial intelligence dangers outlines the most notable risks of this cutting-edge tech.

AI vs. Machine Learning: Implementation

Implementing rule-based AI systems starts with defining a comprehensive set of rules and a knowledge base. This initial step requires significant input from domain experts who translate their knowledge into formal rules.
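A minimal sketch of how such rules can be used once they are written down, assuming a simple forward-chaining inference engine: it keeps applying any rule whose conditions are all satisfied until no new facts appear. The troubleshooting rules and fact names are hypothetical.

```python
# Hypothetical knowledge base: each rule is (set of required facts, concluded fact)
RULES = [
    ({"ping_fails", "link_light_off"}, "cable_problem"),
    ({"cable_problem"}, "replace_network_cable"),
    ({"ping_fails", "link_light_on"}, "check_ip_configuration"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule when all of its premises are already known facts
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"ping_fails", "link_light_off"}, RULES)
print(sorted(derived))
```

No training data is involved: the system's behavior comes entirely from the rules the experts wrote, and adding knowledge means adding rules.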

When implementing more advanced AI systems, like those involving computer vision, NLP, or autonomous decision-making, a combination of different AI techniques is often necessary, such as:

- Symbolic AI for reasoning.

- Neural networks for pattern recognition.

- Probabilistic methods for dealing with uncertainty.

- Evolutionary algorithms for optimization and adapting to changing environments.

- Fuzzy logic for handling imprecision and reasoning with uncertain or vague information.

- Swarm intelligence for solving problems through the collective behavior of decentralized agents.
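As one example from this list, here is a minimal fuzzy logic sketch, assuming triangular membership functions and a weighted-average (zero-order Sugeno-style) combination of two rules. The temperature ranges and fan speeds are hypothetical.

```python
def triangular(x, left, peak, right):
    """Membership degree (0 to 1) of x in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fan_speed(temp_c):
    """Rules: IF temperature is cool THEN speed is 20%; IF temperature is warm THEN speed is 80%."""
    cool = triangular(temp_c, 10, 18, 26)   # hypothetical "cool" set, degrees Celsius
    warm = triangular(temp_c, 18, 26, 34)   # hypothetical "warm" set, degrees Celsius
    total = cool + warm
    if total == 0:
        return 0.0  # no rule fires outside the modeled range (an assumption)
    # Weighted average of rule outputs, weighted by how strongly each rule applies
    return (cool * 20 + warm * 80) / total


print(fan_speed(22))
```

At 22 degrees the temperature is half "cool" and half "warm," so the controller blends the two rule outputs instead of switching abruptly between them.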

The implementation process often involves integrating various AI components and ensuring they work together seamlessly. This integration is often complex since it involves different technologies and algorithms that interact and complement each other.

Implementing ML focuses more on data-driven approaches. The first step in ML implementation is data collection and preprocessing. This step involves gathering large amounts of data relevant to the problem you're trying to solve and cleaning it to ensure it's of high quality.

Next, you select an appropriate ML algorithm. Depending on the problem (e.g., classification, regression, clustering), you choose an algorithm that suits the nature of the available data and your objectives.

Once you select an algorithm, the next step is training the model. This process involves feeding the preprocessed data into the model and allowing it to learn the patterns and relationships within the data. During training, you also evaluate the model on a separate validation set to tune its settings and confirm that it generalizes rather than memorizing the training data.

After training, the model is tested on a separate data set to evaluate its accuracy and generalization capability. If the model performs well, it is ready for deployment.
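The steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the data is synthetic, preprocessing is limited to dropping a corrupted record and shuffling, k-nearest neighbors stands in for "an appropriate algorithm" (its "training" amounts to storing the training set), and the validation set is used to choose `k` before a single final evaluation on the test set.

```python
import random
from collections import Counter


def knn_predict(train, x, k):
    """Predict a label by majority vote among the k training points closest to x."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, eval_data, k):
    """Fraction of eval_data points that the k-NN model labels correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in eval_data)
    return hits / len(eval_data)


# 1. Collect and preprocess: synthetic one-feature data with one corrupted record
rng = random.Random(42)
raw = [(rng.gauss(0, 1), "A") for _ in range(60)] + [(rng.gauss(3, 1), "B") for _ in range(60)]
raw.append((None, "A"))
data = [(x, y) for x, y in raw if x is not None]  # drop the record with a missing value
rng.shuffle(data)

# 2. Split into training (60%), validation (20%), and test (20%) sets
n = len(data)
train = data[: int(0.6 * n)]
val = data[int(0.6 * n): int(0.8 * n)]
test = data[int(0.8 * n):]

# 3. Select and train: pick the k with the best validation accuracy
best_k = max([1, 3, 5, 7, 9], key=lambda k: accuracy(train, val, k))

# 4. Test once on held-out data to estimate generalization
test_acc = accuracy(train, test, best_k)
print(best_k, round(test_acc, 2))
```

Keeping the test set untouched until the very end is the design choice that makes the final accuracy an honest estimate of how the model will perform after deployment.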

AI vs. Machine Learning: Requirements

AI systems have a broad range of needs. Developers must have extensive domain knowledge if the AI relies on rule-based systems that require experts to create rules and knowledge bases. These systems also require logic and reasoning frameworks to structure intelligent behavior.

Computational resources for AI systems vary a lot. Some systems run on moderate resources, but more complex systems, like those using neural networks or running extensive simulations, require a lot of computational power. These systems often need specialized hardware and advanced forms of computing (e.g., HPC or GPU computing).

AI also demands diverse programming skills and algorithm knowledge. Developers must use different techniques to build models, and systems often require a mix of several methods to handle perception, reasoning, and learning tasks.

On the other hand, the most critical necessity for machine learning is data. The success of ML models depends heavily on the amount and quality of the training data.

Like other AI technologies, ML requires significant computational resources. Training complex and deep models demands powerful GPUs or TPUs and large volumes of memory.

Human expertise is another vital requirement for machine learning. ML projects typically require data scientists skilled in statistics and data analysis. These data scientists are responsible for various manual tasks, including:

- Preprocessing data.

- Selecting suitable models.

- Tuning the model's parameters.

- Overseeing the learning process during training.

Human expertise is also essential for effective feature engineering and for interpreting the model's results. Additionally, ML practitioners must have a good understanding of various tools and frameworks, such as PyTorch or TensorFlow.

Check out our GPU servers page to see how well AI and ML workloads run on dual Intel Max 1100 GPUs.

Is It Better to Learn AI or Machine Learning?

Deciding whether to learn AI or ML depends on your interests, career goals, and the kind of work you want to do. Both fields offer exciting opportunities and are central to the future of technology, so you can't really make a bad choice here.

Here are a few points that might help break the tie if you're unsure whether to focus primarily on AI or ML:

- AI covers a broader range of topics, including ML, robotics, expert systems, NLP, and more. The broader scope makes this field harder to master, but it also leads to more diverse career opportunities.

- ML has a considerably more data-driven focus. Machine learning is a great option if you enjoy working with data, statistics, and algorithms.

- AI requires more learning outside traditional computer science.

- ML requires you to familiarize yourself with fewer techniques and fields than if you decide to learn AI.

- If you're interested in fields like psychology, neuroscience, and computer science, AI stands out as a more natural choice.

If you're still unsure about the correct choice, we recommend starting with ML, as it is a foundational component of AI. Learning about ML will give you a strong base from which to explore the broader aspects of AI later on in your career.

Can I Learn AI Without Machine Learning?

You can learn many areas of AI without focusing on machine learning, although you will miss out on a fundamental part of modern AI technologies. Here are a few AI-related areas that do not have a heavy ML focus until you start working on more advanced projects:

- Symbolic AI (logic-based approaches). These systems rely on predefined rules to make decisions rather than on ML models.

- Classical AI techniques. AI techniques like A* search, Dijkstra's algorithm, and other graph search methods don't rely on ML.

- Expert systems. These systems emulate the decision-making abilities of a human expert by following a set of rules provided by domain experts. In most use cases, there is no need to set up an ML model.

- Automated reasoning. This field involves developing algorithms that solve problems through logical inference, theorem proving, and constraint satisfaction problems (CSPs).
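To show that classical search needs no training data, here is a minimal sketch of Dijkstra's algorithm using Python's standard `heapq` module. The road network is hypothetical; A* search follows the same pattern but adds a heuristic estimate of the remaining distance to each node's priority.

```python
import heapq


def dijkstra(graph, source):
    """Shortest distances from source in a graph with non-negative edge weights."""
    dist = {source: 0}
    queue = [(0, source)]  # priority queue of (distance so far, node)
    while queue:
        d, node = heapq.heappop(queue)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter path to this node was already found
        for neighbor, weight in graph.get(node, []):
            new_d = d + weight
            if new_d < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_d
                heapq.heappush(queue, (new_d, neighbor))
    return dist


# Hypothetical road network: node -> list of (neighbor, distance)
roads = {
    "A": [("B", 4), ("C", 1)],
    "C": [("B", 2), ("D", 5)],
    "B": [("D", 1)],
}
print(dijkstra(roads, "A"))
```

The algorithm finds that reaching B via C (1 + 2) beats the direct road (4), purely by systematic exploration rather than by learning from examples.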

You can learn and implement many aspects of AI without diving deeply into machine learning. However, considering the growing importance and applicability of ML in AI, having some knowledge of ML would enhance your overall understanding of AI.

Can AI Replace Machine Learning?

AI and ML are complementary rather than mutually exclusive, so AI does not need to replace ML (or vice versa).

Machine learning is one of the most powerful tools in the AI toolkit. ML enables AI systems to learn from data and improve over time, which is crucial for many modern AI applications, including:

- Image recognition.

- Natural language processing.

- Forecasting tasks.

- Sentiment analysis.

- Autonomous driving.

- Fraud detection.

So, while some AI systems might not use ML, many advanced AI applications rely heavily on ML. The relationship between the two is more about integration and complementarity than replacement.

You can also learn about neoclouds, high-performance cloud platforms optimized for AI/ML training, among other workloads.

Now You Know What Sets the Two Apart

Knowing the difference between AI and machine learning is vital if you plan to use either of the two technologies at your company. This knowledge also helps identify which business processes can benefit most from each technology.

A clear understanding of what sets AI and ML apart enables you to make informed decisions about which technologies to invest in and how to implement them effectively. It also supports better communication with stakeholders and ensures alignment with organizational goals.