The ability of machines to learn and adapt is rapidly changing our world. Just a few decades ago, tasks such as facial recognition and automatic translation were beyond the reach of machines. Today they are commonplace, and new breakthroughs are emerging at an ever-increasing pace.

Deep learning, a cutting-edge subfield of artificial intelligence, is at the heart of this revolution. Its story also taps into a long-standing aspiration to build machines that can think.

This article will explain the inner workings and vast potential of deep learning.

What Is Deep Learning?

Deep learning is a method of training neural networks with many layers (hence "deep") to perform tasks with minimal human intervention.

Deep learning algorithms are loosely inspired by the structure and function of the biological brain, using interconnected layers of artificial neurons to process and learn from data.

Machine Learning vs. Deep Learning

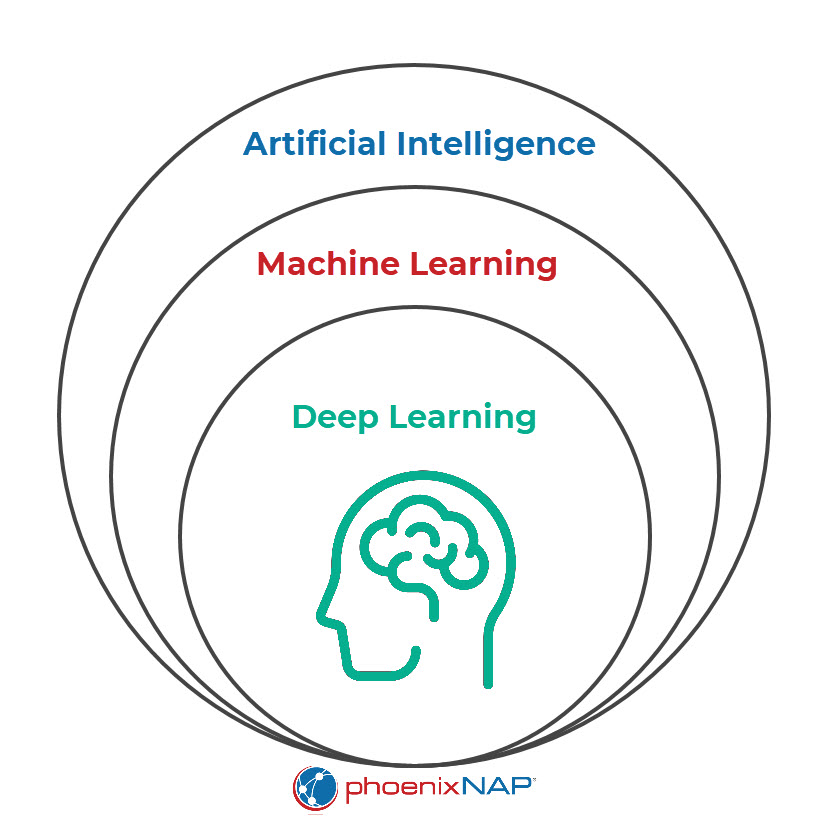

Deep learning is a subset of machine learning, which is itself a branch of artificial intelligence (AI).

Machine learning is a broader term that refers to the process by which computers learn from data without being explicitly programmed to perform a task. Rather than relying on predetermined instructions, machine learning algorithms identify patterns in data and use them to make predictions when presented with new data.

Deep learning models use a complex web of interconnected nodes to process large amounts of data. They are generally more powerful than traditional machine learning models and can handle more complex tasks.

Here is a table comparing the differences:

| | Machine Learning | Deep Learning |
|---|---|---|
| Algorithm structure | Simpler algorithms like linear regression and decision trees. | Complex, multilayered artificial neural networks. |
| Data requirements | Can work with smaller datasets. | Requires large amounts of data to train effectively. |
| Human intervention | Requires human intervention for feature engineering and model tuning. | Automates feature extraction and learns from raw data. |
| Applications | Used for tasks like regression, classification, and clustering. | Excels at complex tasks like image and speech recognition, natural language processing, and autonomous systems. |

Deep Learning - A Brief Historical Overview

The rise of neural networks spans from the introduction of the first digital computers to the present day.

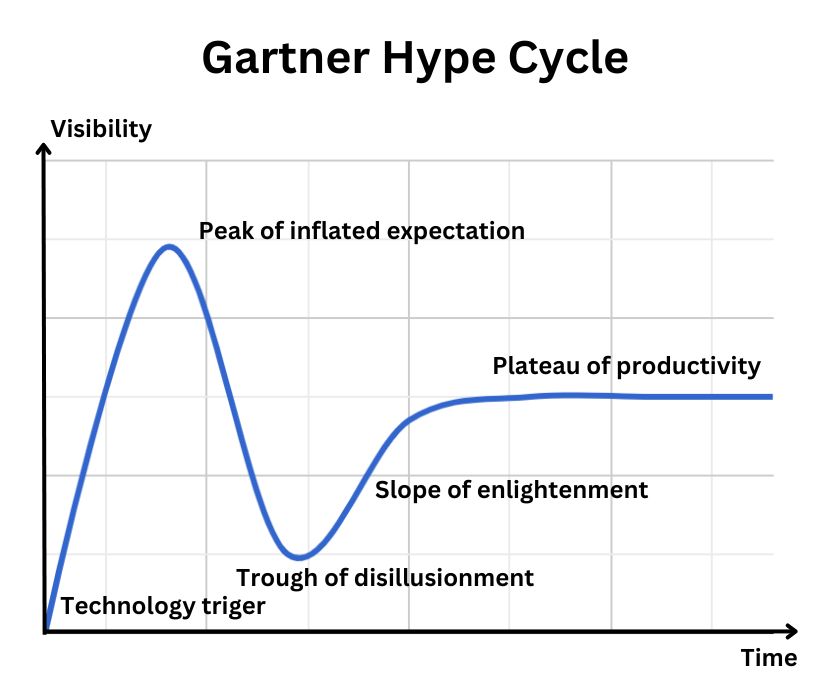

Their evolution was shaped by cycles of enthusiasm, skepticism, and breakthroughs. Each AI winter, a period of reduced funding and interest in AI research, was followed by a resurgence of interest, often driven by technological advancements and paradigm shifts.

Early Promise and Challenges (1943-1979)

The geopolitical context of the Cold War between the United States and the Soviet Union profoundly shaped the early stages of neural networks. AI development was a strategic initiative to gain technological superiority.

This arms race funneled a great deal of investment and talent into AI and laid the groundwork for subsequent civilian applications.

The late 1970s and early 1980s also marked the first AI winter. During this time, the initial optimism about the potential of AI was tempered by setbacks. The technology did not live up to the high expectations of early pioneers, and there was a growing realization that true artificial intelligence was more elusive than initially thought.

Timeline:

- 1943. Warren McCulloch and Walter Pitts lay the foundation with the first mathematical model of an artificial neuron.

- 1965. Alexey Ivakhnenko and Valentin Lapa pioneer deep learning algorithms.

- 1970s. First AI winter dampens research fervor.

- 1979. Kunihiko Fukushima develops the Neocognitron, a precursor of the modern convolutional neural network (CNN).

Resurgence and Refinement (1980s-1999)

AI researchers made bold claims about the capabilities of their creations. When systems failed to deliver promised feats such as grandmaster-level chess or flawless translation, disappointment and skepticism replaced excitement.

One of the main reasons early AI systems underdelivered was the limited computing power of the 1980s, which was not enough to tackle complex problems efficiently. Additionally, the data needed to train these algorithms was scarce, further hindering progress.

Even with advancements, it wasn't always clear how to apply AI for tangible benefits. Early systems often excelled at niche tasks without broad commercial viability. As interest dwindled and progress slowed, funding for AI research dried up once again, and talent began to leave the field.

Timeline:

- 1989. Yann LeCun applies backpropagation to handwritten digit recognition, demonstrating its practical value and reviving interest.

- 1990. Second AI winter chills neural network research.

- 1995. Corinna Cortes and Vladimir Vapnik introduce the soft-margin support vector machine (SVM).

- 1997. Sepp Hochreiter and Jürgen Schmidhuber introduce LSTM (long short-term memory), a powerful recurrent neural network architecture.

Breakthroughs and Rise to Dominance (1999-present)

In the twenty-first century, the focus of AI has shifted to accommodate the requirements of the Internet and support the emerging digital economy.

The rapid development of computer hardware also bolstered the training and deployment of complex artificial intelligence models, propelling the field towards unprecedented capabilities.

The current AI boom builds on the lessons learned from past winters. Researchers focus on practical applications, leverage powerful hardware and massive amounts of data, and explore new approaches like deep learning.

Timeline:

- 1999. Faster computers and GPUs boost computational power for neural networks.

- 2000s. Researchers tackle the vanishing gradient problem with layer-by-layer pre-training and LSTMs.

- 2010s. GPUs enable efficient training of convolutional neural networks.

- 2012. AlexNet and Google Brain's Cat Experiment showcase deep learning's prowess.

- 2014. Ian Goodfellow and colleagues introduce Generative Adversarial Networks (GANs).

- 2016. DeepMind's AlphaGo defeats world champion Go player Lee Sedol, marking a significant milestone in game-playing AI.

- 2017. AlphaZero further demonstrates the power of deep learning by mastering Go, chess, and shogi through self-play alone, given only the rules of each game and no human game data.

- 2022 onward. Large language models (LLMs) such as ChatGPT and Bard demonstrate the growing capabilities of deep learning in natural language processing.

Components of Deep Learning

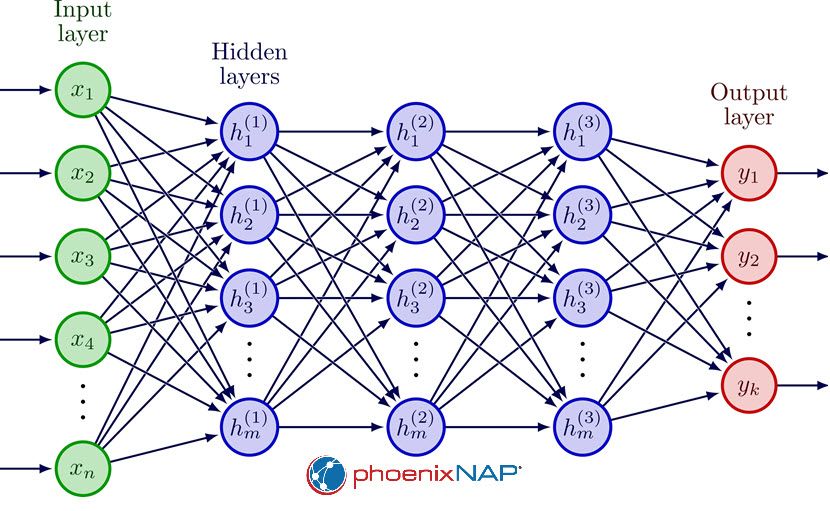

In deep learning, a neural network consists of an input layer, one or more hidden layers (many, in a deep network), and an output layer. Each layer contains interconnected nodes, or artificial neurons, which process information and pass it to the next layer.

During training, the network uses backpropagation to calculate how each parameter (weight or bias) affects the error, then adjusts the parameters to minimize the difference between its predictions and the actual outcomes in the training data.

Below is a summary of the three types of layers found in a deep learning neural network.
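To make "adjusting parameters to minimize the difference" concrete, here is a minimal sketch of one gradient descent step for a single linear neuron with a squared-error loss. The function names `gradient_step` and `loss`, the training example, and the learning rate are illustrative assumptions; in a real network, backpropagation supplies these gradients for millions of weights at once.

```python
def loss(w, b, x, y):
    """Squared error between the neuron's prediction (w * x + b) and the target y."""
    return (w * x + b - y) ** 2


def gradient_step(w, b, x, y, lr):
    """One gradient descent update for a single linear neuron."""
    error = (w * x + b) - y
    # Partial derivatives of the squared-error loss with respect to w and b
    grad_w = 2 * error * x
    grad_b = 2 * error
    # Move each parameter a small step against its gradient
    return w - lr * grad_w, b - lr * grad_b


# Hypothetical training example: input 2.0, target 3.0
w, b = 0.5, 0.0
print(loss(w, b, 2.0, 3.0))  # 4.0
w, b = gradient_step(w, b, x=2.0, y=3.0, lr=0.05)
print(loss(w, b, 2.0, 3.0))  # approximately 1.0
```

A single step cuts the loss from 4.0 to about 1.0; training simply repeats such steps over many examples.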

Input Layer

The input layer receives the raw data and passes it to the first hidden layer. It contains one node per input feature, so its size depends on the type of input data. For example, a 28×28-pixel grayscale image flattened into a vector needs 784 input nodes, one per pixel.

Hidden Layer

Hidden layers sit between the input and output layers and transform the data step by step; their weights are adjusted as the network learns from new examples. Deep learning networks stack many hidden layers, so later layers can build on the features detected by earlier ones and analyze a problem at several levels of abstraction.

For instance, when a deep learning computer vision model is shown an image of an animal it has not seen before, it relies on patterns it learned from labeled images of known animals during training. The model examines basic shapes and sizes in the image, such as the shape of the eyes and ears, body size, and number of legs. The presence of hooves suggests a cow or deer, while cat-like eyes may indicate a species of wild or domestic cat.

Each hidden layer processes distinct features, aiming to categorize the input correctly.

Output Layer

The output layer comprises nodes that produce the network's final output. A binary classification model, which answers "yes" or "no", needs only two output nodes (or a single node that outputs a probability). Models that choose among more classes have one output node per class.
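For multi-class outputs, a common (though not the only) choice is the softmax function, which turns the raw scores of the output nodes into probabilities that sum to 1. The sketch below assumes three hypothetical classes and made-up scores; a trained network would produce the scores itself.

```python
import math


def softmax(scores):
    """Convert raw output-layer scores (logits) into class probabilities."""
    # Subtracting the max score avoids overflow and does not change the result
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical output-layer scores for three classes
labels = ["cat", "dog", "deer"]
probs = softmax([2.0, 1.0, 0.1])
prediction = labels[probs.index(max(probs))]
print(prediction)  # cat
```

The predicted class is simply the output node with the highest probability.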

How Does Deep Learning Work?

Deep learning training is iterative: the same steps repeat over many passes through the data. In supervised learning, the most common setup, a neural network first learns from labeled examples and is then used to make predictions on new, unlabeled data.

Here are the steps for deep learning, from feeding data to the network to refining its predictions:

- Data Preprocessing. Raw data is cleaned and converted into a form the network can use. Some algorithms can only process structured data, while others work with unstructured data such as images or text. Common preprocessing tasks include:

  - Removing noise or inconsistencies, which are common in sensor readings and other recorded data.

  - Scaling data values to a standard range to help the model converge.

  - Handling missing data.

- Data In. Feed the network with input data, such as an image or a sentence.

- Feature Extraction. Each hidden layer analyzes the data, extracting increasingly complex features from the dataset.

- Prediction. The final layer combines the extracted features and forms a prediction.

- Error Check. The prediction is compared to the actual answer (the label) using a loss function, which measures how far off the prediction is.

- Backpropagation. The network calculates how much each weight contributed to the error (the gradient of the loss) and adjusts the weights to reduce it. Weights control how much each input contributes to a neuron's output; connections with higher weights have a greater impact on the next layer's activity.

- Repeat. The process restarts with new data, iteratively refining the network's understanding of the problem and improving predictions.
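The steps above can be sketched as a tiny training loop. This is a minimal illustration, not a production recipe: it assumes the XOR problem as a toy labeled dataset (already in the 0 to 1 range, so no scaling is needed), one hidden layer of four sigmoid neurons, squared-error loss, and plain stochastic gradient descent, all chosen for brevity. Frameworks such as PyTorch and TensorFlow compute the gradients automatically instead of by hand.

```python
import math
import random

random.seed(0)  # fixed seed so the run is reproducible

# Toy labeled dataset (XOR); inputs are already in [0, 1]
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
N_IN, N_HIDDEN = 2, 4


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Randomly initialized weights and biases
w1 = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b1 = [0.0] * N_HIDDEN
w2 = [random.uniform(-1, 1) for _ in range(N_HIDDEN)]
b2 = 0.0


def forward(x):
    """Data in -> hidden-layer features -> prediction."""
    h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(N_HIDDEN)]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y_hat


def mean_loss():
    """Error check: mean squared error over the whole dataset."""
    return sum((forward(x)[1] - y) ** 2 for x, y in DATA) / len(DATA)


def train(epochs=5000, lr=0.5):
    global b2
    for _ in range(epochs):  # Repeat
        for x, y in DATA:
            h, y_hat = forward(x)
            # Backpropagation: gradient of the loss at the output neuron's input
            d_out = 2 * (y_hat - y) * y_hat * (1 - y_hat)
            # Hidden-layer gradients, computed before any weight changes
            d_hidden = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(N_HIDDEN)]
            for j in range(N_HIDDEN):
                w2[j] -= lr * d_out * h[j]
                for i in range(N_IN):
                    w1[j][i] -= lr * d_hidden[j] * x[i]
                b1[j] -= lr * d_hidden[j]
            b2 -= lr * d_out


initial = mean_loss()
train()
final = mean_loss()
print(f"loss before: {initial:.4f}, after: {final:.4f}")
for x, y in DATA:
    print(x, y, round(forward(x)[1], 2))
```

XOR is a classic choice here because no single neuron can solve it; the hidden layer has to learn intermediate features first.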

Deep Learning Examples

Deep learning has shown remarkable success in various applications. Its ability to automatically learn hierarchical representations from data has made it a powerful tool for solving complex problems and achieving high levels of accuracy in tasks that were once challenging for traditional machine learning.

Below are some examples in three popular deep learning fields: computer vision, natural language processing (NLP), and recommendation engines.

Computer Vision

- Autonomous Vehicles. Self-driving vehicles utilize deep learning to identify road signs, pedestrians, and obstacles.

- Defense Systems. Satellite imagery analysis uses deep learning to identify points of interest and track objects over vast distances.

- Content Moderation & Image Analysis. Algorithms automatically remove inappropriate content from websites, recognize facial attributes, and extract details like brand logos from images.

- Industrial Safety. Factories implement deep learning to identify accident patterns and unsafe proximity between people and machines.

Natural Language Processing (NLP)

- Chatbots. NLP algorithms enable intelligent chatbots that engage in human-like conversations and provide customer service.

- Document Summarization & Business Intelligence. Deep learning extracts critical information from documents and analyzes business data, allowing for efficient knowledge retrieval and informed decision-making.

- Virtual Assistants. Deep learning powers virtual assistants like Siri and Alexa.

- Sentiment Analysis & Social Media Insights. Sentiment indexing on social media platforms uses NLP models to understand public opinion and track trends.

Recommendation Engines

- Personalized Recommendations. Deep learning analyzes user behavior and preferences to provide personalized recommendations in retail, media, and other industries. For example, platforms like Netflix and Spotify leverage deep learning to recommend content based on user behavior.


Deep Learning Algorithms

An algorithm is a step-by-step procedure to perform a specific task or solve a problem. In deep learning, algorithms perform complex calculations that process data layer by layer, extracting intricate patterns through mathematical operations.

Deep learning relies on a toolbox of network architectures (often loosely called algorithms), each suited to specific tasks. Below are several well-known ones in use today.

Convolutional Neural Networks (CNNs)

CNNs utilize multiple convolutional layers for feature extraction, pooling layers for dimensionality reduction, and fully connected layers for final predictions. They excel in image recognition, object detection, and video analysis, effectively identifying spatial features and patterns in visual data.
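To show what convolutional and pooling layers actually compute, here is a minimal pure-Python sketch of a 2D convolution (strictly speaking cross-correlation, which is what most deep learning libraries implement) followed by 2×2 max pooling. The image and the vertical-edge kernel are made-up examples; in a real CNN, the kernel values are learned during training and there are many kernels per layer.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation of image with kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2: keeps the strongest response in each block."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# A 5x5 image: dark on the left (0), bright on the right (1)
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical vertical-edge detector: responds where brightness rises left to right
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool2x2(features)    # 2x2 after pooling
print(features[0])  # [0, 2, 0, 0]
print(pooled)       # [[2, 0], [2, 0]]
```

The feature map lights up only at the dark-to-bright boundary, and pooling halves each dimension while keeping that response.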

Recurrent Neural Networks (RNNs)

RNNs process a sequence one element at a time, carrying a hidden state that summarizes what they have seen so far. This lets them capture context and relationships within sequential data such as text and audio. Their strengths include language modeling, text generation, sentiment analysis, and sequence prediction.
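The recurrence can be sketched in a few lines. This minimal example assumes a scalar input and a scalar hidden state with hand-picked weights (`w_x`, `w_h`, and `b` are hypothetical values, not learned ones); real RNNs use vectors and weight matrices, but the update rule h_t = tanh(w_x * x_t + w_h * h_(t-1) + b) has the same shape.

```python
import math


def rnn_forward(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Run a minimal Elman-style RNN over a sequence, one element at a time.

    Returns the hidden state after each step; the last one summarizes
    the whole sequence.
    """
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# The same elements in a different order produce a different final state,
# which is how an RNN captures order and context.
print(rnn_forward([1.0, 0.0, 0.0])[-1])
print(rnn_forward([0.0, 0.0, 1.0])[-1])
```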

Long Short-Term Memory Networks (LSTMs)

Long Short-Term Memory networks (LSTMs) are an advanced form of RNN. Standard RNNs struggle to retain information over long sequences because the gradients used to train them tend to vanish as they are propagated back through many time steps. LSTMs address this with memory cells whose contents are controlled by gates that decide what to keep, what to write, and what to output, making them highly effective for long sequences of text and audio.

Their applications range from translation and speech recognition to sentiment analysis, time series forecasting, and music generation.
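The gating mechanism can be sketched for a single LSTM step. This minimal illustration assumes scalar inputs and states and hypothetical, hand-set weights stored in a dictionary `params`; real LSTMs learn weight matrices, but the gate equations follow the standard formulation (forget, input, and output gates plus a candidate memory value).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps each gate to (input weight, recurrent weight, bias):
    'f' forget, 'i' input, 'o' output, 'g' candidate memory.
    """
    def pre(gate):
        w, u, b = p[gate]
        return w * x + u * h_prev + b

    f = sigmoid(pre("f"))    # how much of the old memory to keep
    i = sigmoid(pre("i"))    # how much new information to write
    o = sigmoid(pre("o"))    # how much of the memory to expose
    g = math.tanh(pre("g"))  # candidate value to write
    c = f * c_prev + i * g   # updated memory cell
    h = o * math.tanh(c)     # new hidden state (the cell's output)
    return h, c


# Hypothetical weights: a large forget-gate bias keeps the memory cell mostly intact
params = {"f": (0.0, 0.0, 5.0), "i": (1.0, 0.0, 0.0),
          "o": (0.0, 0.0, 5.0), "g": (1.0, 0.0, 0.0)}

h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:  # a single "event" followed by silence
    h, c = lstm_step(x, h, c, params)
print(round(c, 3))  # the memory of the first input is largely preserved
```

Because the forget gate stays close to 1, the value written at the first step survives several steps of zero input, which is the behavior a plain RNN finds hard to learn.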

Generative Adversarial Networks (GANs)

Generative Adversarial Networks consist of two neural networks, a generator and a discriminator, that compete with each other: the generator creates synthetic data, while the discriminator evaluates whether a given sample is real or generated.

Through continuous interaction and learning, the generator refines its output to become increasingly convincing, while the discriminator improves its ability to distinguish between real and generated data. This adversarial training process results in the generation of high-quality and realistic synthetic content.
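The competition is usually expressed through two loss functions. The sketch below computes the standard discriminator loss (binary cross-entropy) and the commonly used non-saturating generator loss from the discriminator's output probabilities. The probability values are made up for illustration, and the networks that would produce them are omitted.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D should output 1 for real samples and 0 for generated ones.

    d_real, d_fake: D's probabilities that each sample is real.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when D believes the generated samples are real."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


# A confident, correct discriminator: low D loss, high G loss
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]), generator_loss([0.1, 0.05]))
# A discriminator that cannot tell real from fake outputs 0.5 everywhere
print(discriminator_loss([0.5], [0.5]))  # 2 * ln 2, about 1.386
```

Training alternates between lowering the discriminator loss and lowering the generator loss, so each network's improvement raises the bar for the other.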

Multilayer Perceptrons (MLPs)

MLPs consist of multiple layers of interconnected neurons, with information flowing forward from input to output. Their simple structure makes them versatile, easy to implement, and a good starting point for deep learning.

MLPs are used for classification, regression, and function approximation, and can also handle simple image recognition tasks, although CNNs usually perform better on images.
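A forward pass through an MLP is a chain of fully connected (dense) layers. This minimal sketch assumes ReLU activations in the hidden layers, a linear output layer, and hypothetical hand-picked weights for a 2-3-1 network; in practice, the weights come from training.

```python
def dense(x, weights, biases, activation=None):
    """Fully connected layer: each output neuron takes a weighted sum of all inputs."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [activation(v) for v in out] if activation else out


def relu(v):
    return max(0.0, v)


def mlp_forward(x, layers):
    """Pass x through each (weights, biases) layer; ReLU on all but the last layer."""
    for k, (weights, biases) in enumerate(layers):
        is_last = k == len(layers) - 1
        x = dense(x, weights, biases, None if is_last else relu)
    return x


# Hypothetical 2 -> 3 -> 1 network
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),  # hidden layer: 3 neurons
    ([[1.0, 1.0, 1.0]], [0.0]),                                 # output layer: 1 neuron
]
print(mlp_forward([2.0, 1.0], layers))  # [2.5]
```

Here the hidden layer produces [1.0, 1.5, -1.0], ReLU zeroes the negative value, and the output neuron sums the rest.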

Self-Organizing Maps (SOMs)

Using competing nodes that adapt and represent input data on a low-dimensional map, SOMs effectively perform dimensionality reduction and data visualization, revealing patterns and relationships within complex datasets.

They are commonly used for data clustering, anomaly detection, market analysis, and visualization of high-dimensional data.
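The competitive learning behind a SOM can be sketched as follows. This minimal example assumes a one-dimensional map of three nodes, 2D inputs, a Gaussian neighborhood, and a fixed learning rate and radius (real implementations usually shrink both over time); the node weights and the input are made up.

```python
import math


def best_matching_unit(nodes, x):
    """Index of the node whose weight vector is closest to x (the competition's winner)."""
    return min(range(len(nodes)),
               key=lambda k: sum((w - xi) ** 2 for w, xi in zip(nodes[k], x)))


def som_update(nodes, x, lr=0.5, radius=1.0):
    """Pull the winner, and to a lesser degree its map neighbors, toward x."""
    bmu = best_matching_unit(nodes, x)
    for k, node in enumerate(nodes):
        # Influence decays with distance on the map grid, not in input space
        influence = math.exp(-((k - bmu) ** 2) / (2 * radius ** 2))
        nodes[k] = [w + lr * influence * (xi - w) for w, xi in zip(node, x)]
    return bmu


# Hypothetical map of 3 nodes on a line, each with a 2D weight vector
nodes = [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
winner = som_update(nodes, [1.0, 0.8])
print(winner)         # 2
print(nodes[winner])  # approximately [1.0, 0.9]
```

Because neighbors on the map move together, nearby nodes end up representing similar inputs, which is what makes the map useful for clustering and visualization.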

Autoencoders

Autoencoders learn a compressed representation of their input. An encoder maps the data into a lower-dimensional space, and a decoder reconstructs the original input from that representation; training minimizes the difference between the input and its reconstruction.

They excel in various tasks, such as image compression, dimensionality reduction, anomaly detection, and data cleaning.
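A minimal sketch of the encode-decode idea, assuming a linear autoencoder that squeezes 2D points into a single number (a one-unit bottleneck) and is trained with plain gradient descent on reconstruction error. The data is made up to lie on the line y = 2x, so one number is enough to describe each point; real autoencoders use nonlinear, multilayer encoders and decoders.

```python
import random

random.seed(1)  # reproducible initial weights

# Hypothetical 2D data lying on the line y = 2x
DATA = [[t, 2 * t] for t in (-1.0, -0.5, 0.5, 1.0)]

enc = [random.uniform(-0.5, 0.5) for _ in range(2)]  # encoder weights: 2 inputs -> 1 code
dec = [random.uniform(-0.5, 0.5) for _ in range(2)]  # decoder weights: 1 code -> 2 outputs


def encode(x):
    """Compress a 2D point into a single number."""
    return sum(e * xi for e, xi in zip(enc, x))


def decode(z):
    """Reconstruct a 2D point from the code."""
    return [d * z for d in dec]


def reconstruction_error():
    """Mean squared distance between each input and its reconstruction."""
    total = 0.0
    for x in DATA:
        total += sum((r - xi) ** 2 for r, xi in zip(decode(encode(x)), x))
    return total / len(DATA)


initial = reconstruction_error()
lr = 0.02
for _ in range(2000):
    for x in DATA:
        z = encode(x)
        diff = [r - xi for r, xi in zip(decode(z), x)]  # reconstruction minus input
        # Gradient of the squared error with respect to the code z
        grad_z = sum(2 * diff_k * dec_k for diff_k, dec_k in zip(diff, dec))
        for k in range(2):
            dec[k] -= lr * 2 * diff[k] * z
            enc[k] -= lr * grad_z * x[k]
final = reconstruction_error()
print(f"error before: {initial:.4f}, after: {final:.6f}")
```

Once trained, the single code value acts as a compressed version of each point; an input that does not fit the learned pattern would reconstruct poorly, which is the basis of autoencoder-driven anomaly detection.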

Deep Learning Frameworks

You don't have to build a neural network from the ground up. Frameworks and libraries provide ready-made building blocks that make deep learning accessible. Many of them are open source and can run on cloud infrastructure, so you don't need dedicated hardware of your own.

Here are some of the most widely used deep learning frameworks:

1. TensorFlow

- Open-source machine learning and AI framework.

- Developed by the Google Brain team.

- TensorFlow 2.0 was released in 2019.

- Supports various programming languages.

- Provides low-level neural network operations (tf.nn) alongside the high-level Keras API.

2. PyTorch

- Open-source machine learning and neural network framework.

- Developed by Facebook's AI Research Lab (FAIR).

- Python and C++ support.

- GPU-accelerated computations.

- Automatic differentiation with autograd.

- Provides neural network building blocks, such as layers and loss functions, in the torch.nn module.

3. Keras

- High-level neural network API, available in TensorFlow as tf.keras; Keras 3 also runs on JAX and PyTorch.

- Focuses on creating neural network building blocks.

- Beginner-friendly, with built-in labeled datasets such as MNIST.

- Features predefined layers, parameters, and preprocessing functions.

4. scikit-learn (sklearn)

- Open-source machine learning library.

- Built on NumPy, SciPy, and matplotlib.

- Features built-in datasets, data splitting, and multilayer perceptron models (MLPClassifier and MLPRegressor).

- Unsuitable for large-scale deep learning due to a lack of GPU support.

5. Apache MXNet

- Open-source deep learning framework.

- Supports multiple CPUs, GPUs, and dynamic cloud infrastructure.

- Portability for deployment on various devices.

- Flexible programming with imperative and symbolic options.

6. Eclipse Deeplearning4j (DL4J)

- A suite of deep learning tools running on JVM.

- Supports various deep learning algorithms.

- Supports importing Keras models and integrates with Hadoop, Spark, and CUDA.

7. MATLAB

- Proprietary software with deep learning support.

- Aims for minimal coding with various tools and extensions.

- Features interactive labeling and automated deployment.

8. Sonnet

- Deep learning framework built on TensorFlow 2.

- Developed by DeepMind researchers.

- Offers simple, composable modules that integrate with TensorFlow.

9. Caffe

- Open-source deep learning framework in C++ with a Python front-end.

- Specializes in image classification and segmentation.

10. Flux

- Julia machine learning framework for high-performance pipelines.

- Features a layer-stacking-based interface.

- Supports differentiable programming and TPU compilation.


GPU and Deep Learning

A GPU (Graphics Processing Unit) is a specialized chip originally designed to accelerate computer graphics. CPUs (Central Processing Units) have a relatively small number of powerful cores optimized for sequential, general-purpose tasks, whereas GPUs contain thousands of simpler cores that excel at parallel processing, meaning they can perform many calculations simultaneously.

This parallel processing capability makes GPUs ideal for the repetitive, large-scale calculations common in deep learning, such as the matrix multiplications performed in every layer of a neural network.

GPUs also have dedicated video memory (VRAM) with much higher bandwidth than system RAM, enabling faster data transfer between memory and processing cores. This transfer speed is crucial for deep learning, as models often process large image, video, or text datasets.

Finally, many modern GPUs include features designed specifically for deep learning, such as tensor cores that accelerate the matrix operations at the heart of neural networks.

The rise of powerful GPUs has made training complex models faster and has enabled larger, deeper neural networks capable of tackling more challenging tasks.
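Why this suits neural networks becomes clear from matrix multiplication, the core operation of a dense layer: every output element is an independent dot product, so all of them could be computed at the same time. The pure-Python sketch below only illustrates that independence by handing each output cell to a thread pool. It is not GPU code and, because of Python's global interpreter lock, will not run faster; on a GPU, frameworks spread these same independent calculations across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def matmul_parallel(a, b):
    """Multiply a (n x k) by b (k x m), computing every output cell as a separate task."""
    n, m = len(a), len(b[0])

    def cell(ij):
        i, j = ij
        # Each output cell depends only on row i of a and column j of b,
        # so no cell has to wait for any other.
        return sum(a[i][t] * b[t][j] for t in range(len(b)))

    with ThreadPoolExecutor() as pool:
        flat = list(pool.map(cell, product(range(n), range(m))))  # map keeps input order
    return [flat[i * m:(i + 1) * m] for i in range(n)]


# A batch of 2 inputs with 3 features, times a hypothetical 3x2 weight matrix
inputs = [[1, 2, 3], [4, 5, 6]]
weights = [[1, 0], [0, 1], [1, 1]]
print(matmul_parallel(inputs, weights))  # [[4, 5], [10, 11]]
```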


Benefits and Challenges of Deep Learning

Deep learning is an evolution and advancement in machine learning. However, it isn’t always preferable to traditional methods. Understanding the advantages and challenges is crucial for unlocking its full potential.

Benefits

Here are the advantages that set deep learning apart:

- Continuous Adaptation. Deep learning models can be retrained or fine-tuned on new data, allowing them to adapt as real-world conditions change.

- Automatic Decision-Making. Deep learning systems reduce the need for human intervention.

- Accuracy. Deep learning models often surpass traditional machine learning in tasks like image recognition, natural language processing, and speech recognition.

- Feature Learning. Unlike traditional methods that require hand-crafted features, deep learning automatically learns features from data, potentially uncovering hidden patterns and improving model performance.

- Versatility. Deep learning can handle diverse data types, including images, text, audio, and video.

Challenges

Some challenges of training a deep learning model are:

- Overfitting. Overfitting happens when a model learns the training data too well, capturing noise and details that may not generalize to new, unseen data. An overfitted model becomes overly specialized in the training set, losing its ability to predict unknown and diverse examples accurately.

- Resource Demands. Training complex deep learning models is computationally expensive. It demands specialized hardware and significant energy resources. Deep learning models also require large amounts of high-quality data for effective training, which can be costly and time-consuming to acquire.

- The Black Box Problem. With millions of parameters and intricate architectures, deep learning models are difficult for humans to understand and interpret. Because of this black box nature, it is hard to reverse-engineer how a model arrives at its decisions.

- Tunnel Vision. AI algorithms optimize the goal they are given without considering broader implications, which can lead to unexpected and undesirable outcomes. For instance, a drone designed to minimize delivery time may choose to fly over restricted areas.

The paperclip maximizer is a thought experiment in AI ethics that illustrates the potential risks of creating a superintelligent AI with a poorly defined and simplistic objective.

In this fictional scenario, a superintelligent AI is tasked with maximizing the production of paperclips. It relentlessly pursues its goal, converting all available resources, including the entire Earth, into paperclips.

Beyond Deep Learning

Deep learning excels at learning autonomously from data, recognizing patterns, and performing complex tasks, sometimes surpassing human capabilities. Yet it still requires human oversight to provide ethical guidance, contextual understanding, and bias correction. This balance highlights the symbiotic relationship between human ingenuity and artificial intelligence and helps keep AI development aligned with human goals and values.