A Tensor Processing Unit (TPU) is specialized hardware, developed by Google, that significantly accelerates machine learning (ML) workloads. Built to handle the computationally intensive operations of deep learning algorithms, TPUs can execute large-scale ML models faster and more efficiently than general-purpose CPUs and, for many workloads, GPUs.

What Is a Tensor Processing Unit?

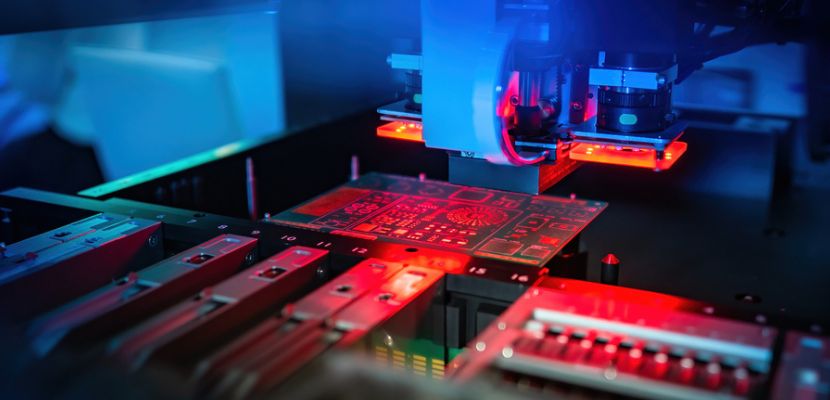

A TPU is an application-specific integrated circuit (ASIC) developed specifically for accelerating machine learning tasks. It is optimized for the high-volume, parallel computations characteristic of deep learning models, particularly those involving tensors, which are multidimensional data arrays. TPUs improve the performance and power efficiency of machine learning computations, making them highly effective for both the training and inference phases of deep learning models.
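To make "multidimensional data arrays" concrete, here is a minimal sketch in JAX, one of the frameworks that can target TPUs. The variable names and shapes are illustrative assumptions, not taken from any particular model. The final line lists whatever devices JAX can see, so on a machine without a TPU it reports CPU (or GPU) devices instead.

```python
import jax
import jax.numpy as jnp

# Tensors of increasing rank (number of dimensions).
scalar = jnp.array(3.0)             # rank 0, shape ()
vector = jnp.arange(4.0)            # rank 1, shape (4,)
matrix = jnp.ones((2, 3))           # rank 2, shape (2, 3)
images = jnp.zeros((8, 32, 32, 3))  # rank 4: hypothetical batch of 8 RGB images

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("images", images)]:
    print(name, "rank:", t.ndim, "shape:", t.shape)

# Devices visible to JAX; the output depends on the machine it runs on.
print(jax.devices())
```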

Tensor Processing Unit Architecture

The architecture of a TPU is tailored for the efficient processing of tensor operations, which are fundamental to many machine learning algorithms. Critical components of a TPU include:

- Matrix multiplier unit. At the heart of the TPU, this unit is optimized for quickly performing large matrix operations, which are common in machine learning workloads. This capability is crucial for accelerating the core computations of neural networks: the forward and backward passes during training, and the forward pass during inference.

- Vector processing unit. This unit enhances the TPU's ability to perform operations on vectors—arrays of data that represent quantities such as features or predictions—thereby streamlining tasks such as activation function computations and other element-wise operations critical to machine learning algorithms.

- High-bandwidth memory. This type of memory allows for the rapid movement of large datasets and model parameters into and out of the processing units, which is essential for maintaining high throughput and efficiency, especially when dealing with complex models and large volumes of data.

- Custom interconnects. These links provide rapid data transfer within a TPU and between TPUs, enabling scalability for large models and datasets. They support distributed processing, allowing multiple TPUs to work together on a single computational problem, which is particularly beneficial for training very large models or processing datasets that exceed the capacity of a single TPU.
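The components above can be related to a few lines of JAX. This is a rough sketch under stated assumptions: the layer sizes, the function names `dense` and `shard_mean`, and the axis name `"devices"` are all hypothetical. The matrix multiply is the kind of work the matrix multiplier unit targets, the bias add and ReLU are element-wise work of the kind the vector unit handles, and the cross-device average is a collective that, on a multi-TPU system, would travel over the interconnect. How the compiler actually schedules each operation is up to the compiler. On a machine with one device, the code still runs with a single shard.

```python
import jax
import jax.numpy as jnp


def dense(x, w, b):
    """One dense layer: matrix multiply, then element-wise bias add and ReLU."""
    y = x @ w                  # large matrix multiply
    return jax.nn.relu(y + b)  # element-wise operations


# jit compiles the function with XLA for whichever backend is available.
dense_jit = jax.jit(dense)

key = jax.random.PRNGKey(0)
kx, kw, ks = jax.random.split(key, 3)
x = jax.random.normal(kx, (128, 256))  # hypothetical batch of 128 inputs
w = jax.random.normal(kw, (256, 512))  # hypothetical weight matrix
b = jnp.zeros((512,))

out = dense_jit(x, w, b)
print(out.shape)  # (128, 512)


def shard_mean(x_shard, w, b):
    """Mean activation on one device, then averaged across all devices."""
    local = jnp.mean(dense(x_shard, w, b))
    return jax.lax.pmean(local, axis_name="devices")  # cross-device collective


n = jax.local_device_count()
xs = jax.random.normal(ks, (n, 16, 256))  # one shard of 16 rows per device

# Shard the inputs along axis 0; broadcast the weights and bias to every device.
parallel_mean = jax.pmap(shard_mean, axis_name="devices",
                         in_axes=(0, None, None))
means = parallel_mean(xs, w, b)
print(means.shape)  # (n,): every device holds the same global mean
```

`jax.pmap` is used here because it is compact. Newer JAX code often expresses the same idea with `jax.jit` and explicit sharding instead.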

Tensor Processing Unit Advantages and Disadvantages

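TPUs excel at tasks like training massive machine learning models, but they are specialized hardware that is programmed through a small set of ML frameworks (chiefly TensorFlow, JAX, and PyTorch via PyTorch/XLA), and they can be expensive compared to CPUs and GPUs.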

Advantages

Here are the benefits of TPUs:

- High performance and efficiency. TPUs accelerate machine learning workflows, offering significant speed and efficiency improvements over general-purpose CPUs and GPUs on the dense matrix computations that dominate deep learning.

- Energy efficiency. TPUs typically consume less power than general-purpose processors for the same ML computations, which lowers operating costs for large-scale ML operations.

- Optimized for machine learning. With a design focused on the specific needs of tensor calculations and deep learning models, TPUs provide optimized performance for these applications.

Disadvantages

These are the drawbacks of TPUs:

- Flexibility. Being specialized hardware, TPUs are less flexible than CPUs and GPUs for general-purpose computing tasks.

- Availability and cost. Access to TPUs is limited, since they are offered mainly through Google Cloud rather than sold as off-the-shelf hardware, and they can cost more than more widely used computing resources.

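- Complexity in programming and integration. Leveraging the full potential of TPUs requires specialized knowledge, such as how the compiler maps a model onto the hardware and how to structure tensor shapes and input pipelines so the chip stays busy.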
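One example of the specialized knowledge involved, assuming JAX as the framework: compiled functions are specialized to input shapes, so feeding a new shape triggers a fresh trace and compilation. The sketch below counts traces with a Python side effect, which runs only during tracing, and then pads an input to a fixed length so it reuses an existing compilation. The helper `pad_to` and the sizes are hypothetical.

```python
import jax
import jax.numpy as jnp

trace_count = 0


@jax.jit
def scale(x):
    global trace_count
    trace_count += 1  # executes only while JAX traces, not on cached calls
    return x * 2.0


scale(jnp.ones((4,)))  # first call: traced and compiled for shape (4,)
scale(jnp.ones((4,)))  # same shape and dtype: cached version reused
scale(jnp.ones((8,)))  # new shape: traced and compiled again
print(trace_count)     # 2


def pad_to(x, size):
    """Zero-pad a 1-D array on the right up to a fixed length."""
    return jnp.pad(x, (0, size - x.shape[0]))


# A length-5 input padded to length 8 reuses the (8,) compilation.
padded_result = scale(pad_to(jnp.ones((5,)), 8))
print(trace_count)     # still 2
```

Padding variable-length inputs to a small set of fixed sizes is a common way to limit recompilation. The caller then has to ignore the padded positions in the result.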

Tensor Processing Unit Use Cases

TPUs are used across a variety of fields that require the processing of large datasets and complex machine learning models, including:

- Image recognition and processing. Speeding up the training and inference phases of convolutional neural networks (CNNs).

- Natural language processing (NLP). Supporting large-scale models like transformers for language understanding and generation.

- Autonomous vehicles. Accelerating the real-time processing required for the perception and decision-making components of self-driving cars.

- Healthcare. Enabling faster and more efficient analysis of medical imagery and genetic data for personalized medicine and diagnostics.

- Scientific research. Processing vast amounts of data from experiments and simulations, especially in fields such as physics and astronomy.

- Financial services. Analyzing large datasets for risk assessment, fraud detection, and algorithmic trading, where speed and accuracy significantly impact outcomes.