Neural networks enable us to build machine learning (ML) models that are loosely modeled on the human brain. These networks make decisions by imitating, in simplified form, how biological neurons work together to process information, weigh options, and arrive at conclusions.

This decision-making capability lets neural networks adapt to changing and novel inputs, a critical trait for cutting-edge AI models like ChatGPT and DALL-E.

This article takes you through everything you must know about neural networks. Jump in to learn how these networks work and see whether your organization would benefit from setting up and training an in-house neural network.

Check out our intro to deep learning if you are new to the concept of training neural networks.

What Is a Neural Network?

A neural network is a computational model in which interconnected nodes (called neurons or units) collaborate to analyze data and make predictions. Another common name for a neural network is an artificial neural network (ANN).

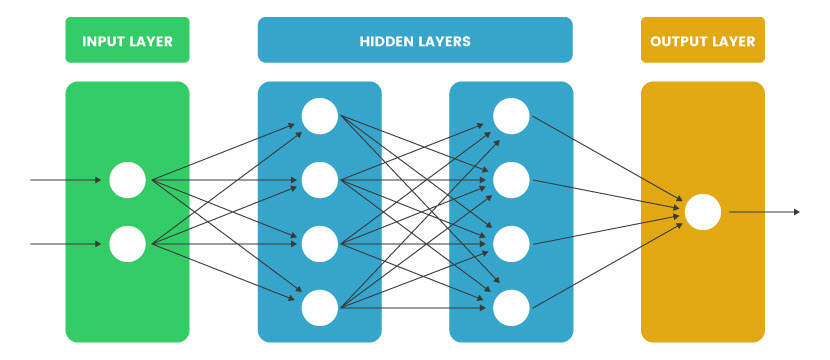

Every ANN consists of nodes organized in three types of layers:

- Input layer. Raw data enters a neural network through the input layer. Nodes in this layer hold the input values and pass them along to the next layer.

- Hidden layer(s). These layers analyze the output from the previous layer, process it, and pass it to the next layer. Most neural networks have more than one hidden layer.

- Output layer. This layer operates at the end of an ANN. Output layers receive inputs from the last hidden layer and produce the network's final prediction.

Regardless of the layer in which they operate, each node in an ANN processes inputs received from nodes in the previous layer. Each node applies a formula to the received data and then checks the formula's output against a threshold that the network adjusts during training. The node then does one of two things:

- If the output exceeds the current threshold, the node passes data to the next layer.

- If the output falls below the current threshold, the node does not pass the data to the next layer.

This process adds non-linearity to the network's decision-making process. Non-linearity makes ANNs highly effective at computer vision, image and speech recognition, natural language processing (NLP), and advanced robotics.
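
The pass-or-block behavior described above can be sketched in a few lines of Python. This is a minimal illustration rather than how production frameworks implement nodes: the function name `node_output`, the example weights, and the fixed threshold of 0.8 are all assumptions made for the example.

```python
def node_output(inputs, weights, threshold):
    """Return the weighted sum if it exceeds the threshold, else 0.0.

    Returning 0.0 stands in for "the node does not pass data on".
    """
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return weighted_sum if weighted_sum > threshold else 0.0


# Hypothetical node with three inputs and a threshold of 0.8.
weights = [0.5, 0.5, 0.5]
print(node_output([1, 0, 1], weights, threshold=0.8))  # sum is 1.0, above the threshold
print(node_output([1, 0, 0], weights, threshold=0.8))  # sum is 0.5, below the threshold
```

Because the output is cut off below the threshold, it is no longer a straight-line function of the inputs, which is where the non-linearity comes from.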

Types of Neural Networks

There are two broad categories of ANNs based on the number of hidden layers: shallow and deep neural networks. Shallow ANNs have only one hidden layer, while deep neural networks (DNNs or deep nets) have two or more hidden layers.

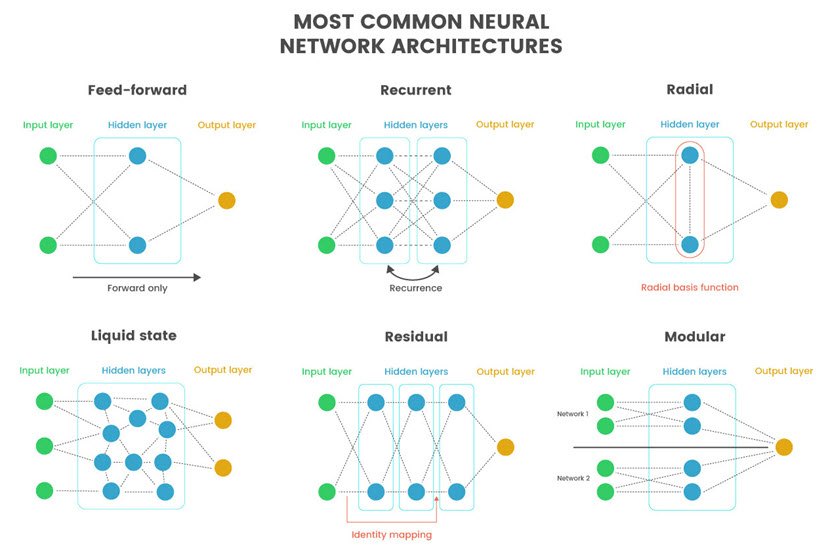

There are also different types of neural network architectures. Here are the most common ones:

- Feedforward neural networks (FNNs). FNNs direct data only in one direction, from input nodes through hidden layers to output nodes. There are no cycles or loops. FNNs are suitable for binary classification and regression tasks that involve no sequential data and have relatively simple input-output relationships.

- Recurrent neural networks (RNNs). RNNs contain loops that feed a layer's output from one step back in as input at the next step, which lets the network retain information about earlier inputs. RNNs are well-suited for sequential data processing tasks, such as time series prediction, NLP, or speech recognition.

- Radial basis function networks (RBFNs). The hidden layer in an RBFN applies a radial basis function to the input. These functions compute their output based on the distance between the input data and specific centers associated with each function. RBFNs are often used for function approximation, classification, and clustering tasks.

- Liquid state neural networks (liquid state machines). Nodes in a liquid state machine are randomly connected to each other. These networks are excellent at real-time processing of spatiotemporal data, such as sensory data from robots.

- Residual neural networks. This type of neural network architecture allows data to skip layers via shortcut (skip) connections that perform identity mapping. The residual design is beneficial for very deep networks with many hidden layers.

- Modular neural networks. This architecture integrates two or more neural networks that do not interfere with each other's activities. Modular ANNs are suitable for tasks where the problem can be decomposed into smaller, more manageable sub-problems.
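
To make a few of these architectures more concrete, here is a rough Python sketch of the core idea behind three of them: a Gaussian radial basis function, a residual (skip) connection, and a single recurrent step. The function names, the choice of a Gaussian for the RBF, the scalar RNN state, and all numeric values are assumptions chosen for brevity; real implementations operate on matrices and learn their parameters.

```python
import math


def rbf(x, center, width=1.0):
    """Gaussian radial basis function: output depends only on distance to the center."""
    squared_distance = sum((a - c) ** 2 for a, c in zip(x, center))
    return math.exp(-squared_distance / (2 * width ** 2))


def residual_block(x, layer):
    """Add the layer's output to its unchanged input (the skip connection)."""
    return [a + b for a, b in zip(x, layer(x))]


def rnn_step(x, hidden, w_input, w_hidden):
    """One recurrent step: the new state mixes the current input with the previous state."""
    return math.tanh(w_input * x + w_hidden * hidden)


print(rbf([0.0, 0.0], [0.0, 0.0]))  # input sits exactly on the center
print(residual_block([1.0, 2.0], lambda v: [2 * a for a in v]))

hidden = 0.0
for x in [1.0, 0.5, -0.5]:  # a short, made-up input sequence
    hidden = rnn_step(x, hidden, w_input=0.8, w_hidden=0.5)
print(hidden)
```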

Deep nets with 100+ hidden layers have significant benefits, but these ANNs are not easy to set up and train. Our guide to deep neural networks provides an in-depth look at how DNNs work.

How Do Neural Networks Work?

In a fully connected (dense) neural network, every node in layer N is connected to all nodes in layer N-1 and layer N+1. Nodes within the same layer are not connected to each other in most designs.
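
One way to picture this connectivity is as a list of weight rows, one row per node, where each row holds one weight for every output of the previous layer. The sketch below assumes that layout; `dense_layer` and the numbers are hypothetical and only meant to show that every node sees every input.

```python
def dense_layer(inputs, weight_rows, thresholds):
    """Compute one fully connected layer.

    Each row of weight_rows belongs to one node and holds one weight
    per input, so every node sees every output of the previous layer.
    """
    return [
        sum(x * w for x, w in zip(inputs, row)) - threshold
        for row, threshold in zip(weight_rows, thresholds)
    ]


# Two inputs feeding a layer of three nodes (values are illustrative).
layer_output = dense_layer(
    inputs=[1.0, 2.0],
    weight_rows=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    thresholds=[0.0, 0.0, 0.0],
)
print(layer_output)  # [1.0, 2.0, 3.0]
```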

Each node in a neural network operates in its own sphere of knowledge and only knows the following factors:

- Input data received from neighboring nodes.

- Weights, which are numerical values that determine how much influence inputs have on the output.

- A threshold, which is a parameter subtracted from the weighted sum of inputs. Many texts use an equivalent bias term instead, which is added to the sum.

Let's look at a simple example of a node that decides whether you should go hiking (≥1 for Yes, ≤0 for No). Our node accounts for three inputs:

- Is the weather good? (Yes: 1, No: 0)

- Are the trails crowded? (Yes: 0, No: 1)

- Is there a risk of encountering wildlife? (Low: 1, High: 0)

Based on today's conditions, nodes in the previous layer provide the following inputs:

- X1 = 1 (sunny day)

- X2 = 0 (crowded trails)

- X3 = 1 (low wildlife activity)

Weights determine the significance of each X. Larger weights signify a variable of greater importance to the outcome. Here are the weights for our example:

- W1 = 5 (you love hiking in the sun)

- W2 = 3 (you prefer solitude on trails)

- W3 = 4 (you're concerned about encountering animals)

The last thing we need is a threshold. For our example, we assume a threshold value of 4. Our node then uses a simple formula ((X1*W1) + (X2*W2) + (X3*W3) - threshold value) to calculate its output:

- (1*5) + (0*3) + (1*4) - 4 = 5

In this scenario, the output is 5, which is higher than 1, so our node's output indicates a strong inclination to go for a hike.
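
The same calculation can be written as a short Python snippet. The function name `hiking_node` and the "stay home" label for the No outcome are assumptions added for illustration; the inputs, weights, and threshold are the ones from the example.

```python
def hiking_node(inputs, weights, threshold):
    """Weighted sum of the inputs minus the threshold."""
    return sum(x * w for x, w in zip(inputs, weights)) - threshold


inputs = [1, 0, 1]    # X1 sunny, X2 crowded trails, X3 low wildlife activity
weights = [5, 3, 4]   # W1, W2, W3
threshold = 4

output = hiking_node(inputs, weights, threshold)
decision = "go hiking" if output >= 1 else "stay home"
print(output, decision)  # 5 go hiking
```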

Network admins do not arbitrarily set the values of weights and thresholds. Instead, a neural network learns these values from data during training, repeatedly adjusting weights and thresholds to produce better outputs.
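
As a rough illustration of how a node can learn its own weights and threshold, the sketch below uses the classic perceptron learning rule on a toy data set. This is one simple learning rule chosen for the example, not the method modern deep networks use (those rely on gradient-based training), and the names `predict` and `train_node`, the learning rate, and the data are all assumptions.

```python
def predict(inputs, weights, threshold):
    """Return 1 if the weighted sum minus the threshold is positive, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) - threshold
    return 1 if total > 0 else 0


def train_node(samples, epochs=20, learning_rate=1.0):
    """Adjust weights and threshold with the perceptron learning rule."""
    weights = [0.0] * len(samples[0][0])
    threshold = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(inputs, weights, threshold)
            # Move each weight toward the target; the threshold moves the opposite way.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            threshold -= learning_rate * error
    return weights, threshold


# Toy data: the node should fire only when both inputs are 1 (logical AND).
samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, threshold = train_node(samples)
print([predict(x, weights, threshold) for x, _ in samples])  # [0, 0, 0, 1]
```

The node starts with all-zero parameters and only changes them when it makes a mistake, so the final values come entirely from the data.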

Benefits of Neural Networks

The main benefits of using neural networks stem from their capability to learn complex patterns, adapt to new data, and generalize well across different tasks. Here are the main benefits of neural networks:

- Flexibility. Neural networks can perform a wide range of tasks, including classification, regression, clustering, pattern recognition, time series forecasting, anomaly detection, and more.

- Adaptability. Neural networks learn from data and adapt their internal structure to capture complex patterns and relationships in inputs. Over time, they become better at whatever task you assign them to.

- Non-linearity. Neural networks can model non-linear relationships in data, which allows them to handle complex and highly dimensional data effectively.

- Generalization. A well-trained neural network can generalize well to unseen data. Regularization, dropout, and data augmentation techniques help neural networks deal with previously unseen data sets.

- Automation. Once adequately trained, neural networks can automate decision-making processes, reducing the need for human intervention during time-sensitive, time-consuming, and repetitive tasks.

- Parallel processing. A neural network performs computations in parallel across multiple nodes and layers. That way, the network ensures efficient processing of large volumes of data and faster training times.

- Feature learning. Neural networks extract relevant features from raw data, reducing the need for manual feature engineering.

- Noise resistance. When properly trained, neural networks become highly resistant to noisy data and input variability, making them suitable for use cases with imperfect or incomplete data.

- High fault tolerance. The corruption or failure of one or more nodes in a network does not necessarily stop the generation of output. Because what the network has learned is spread across many nodes and weights, the quality of the ANN's output tends to degrade gradually rather than fail outright.

- Scalability. Neural networks easily scale to handle larger data sets and more complex problems. A network scales vertically by adding more layers or nodes, but you can also scale horizontally if you want to experiment with modular ANNs.

Our horizontal vs. vertical scaling comparison outlines the differences between the two strategies and helps pick an optimal scaling model for your use case.

Disadvantages of Neural Networks

While neural networks offer numerous advantages, they also come with a few must-know drawbacks. Here are the most notable challenges you're likely to face when training and using a neural network:

- Data dependency. A neural network's performance heavily depends on the quality and quantity of the training data. Obtaining adequate data is often costly and time-consuming, especially for domains with scarce data.

- Overfitting during training. Neural networks are prone to overfitting. This issue occurs when the model learns to memorize the training data instead of generalizing to unseen data.

- Hyperparameter tuning. Admins must set numerous hyperparameters during ANN training, including learning rate, batch size, regularization strength, dropout rates, and activation functions. Finding a good set of hyperparameters is time-consuming and often requires extensive testing.

- Interpretability. Understanding how a neural network arrives at a particular prediction or decision is often difficult. This lack of interpretability is a significant drawback in use cases that require transparency and accountability.

- Debugging. Neural networks, especially deep nets with many layers, are highly complex and challenging to understand and interpret. Teams often struggle to diagnose and debug issues.

- Adversarial attacks. Neural networks are susceptible to adversarial attacks. These attacks occur when a malicious actor makes small alterations to input data to cause the model to make incorrect predictions.

- Resource requirements. Training a neural network is computationally intensive and requires substantial resources. Most adopters must invest in costly GPU or TPU accelerators. Neural networks also require significant memory and storage resources to handle large data sets.

Solve the issue of neural networks' computational demands with high-performance computing (HPC) servers. The high parallelism and specialized hardware accelerators make HPC servers an ideal infrastructure for training a neural network.

What Are Neural Networks Used For?

Prime use cases for a neural network involve processes that operate according to strict patterns and deal with large amounts of data. If the data set is too large for a human to make sense of in a reasonable time frame, the process is likely an excellent candidate for ANN adoption.

Here are a few common use cases for a neural network:

- Image classification. Identifying objects in images is a popular use case for a neural network. Examples include classifying images to detect anomalies and identifying physical threats in live camera footage.

- Chatbots. Natural language processing models enable chatbots to engage in realistic conversations and provide assistance in various domains.

- Autonomous vehicles. Self-driving cars use neural networks to detect pedestrians, recognize traffic signs and signals, and navigate complex road environments.

- Speech recognition. Speech recognition systems use ANNs to convert spoken language into text. This feature enables users to give voice commands to smartphones, virtual assistants, and hands-free controls.

- Recommendation systems. Many organizations use a neural network to power recommendation engines that analyze user preferences to suggest personalized content or services.

- Fraud detection. Many fraud detection systems use ANNs to identify suspicious patterns or anomalies in financial transactions. These systems help detect signs of credit card fraud, identity theft, and money laundering.

- Gesture recognition. Neural networks are excellent at recognizing and interpreting hand gestures captured by cameras or sensors. This capability enables users to interact with devices and interfaces through hand motions.

- Medical diagnosis. Healthcare providers often use neural networks to analyze medical images (MRI scans, X-rays, histopathology slides, etc.) and assist in disease diagnosis and treatment planning.

- Predictive maintenance. Neural networks can analyze sensor data from industrial machinery and equipment to predict potential failures or maintenance needs. An ANN enables proactive maintenance strategies that minimize downtime and optimize operational efficiency.

Learn more about the role of artificial intelligence in business.

How to Train a Neural Network

The first step in training a neural network is to gather relevant training data. For example, to create an ANN that identifies the faces of actors, the initial data set would include tens of thousands of pictures of actors, non-actors, masks, and statues.

Data sets often require some preprocessing, which involves tasks such as:

- Scaling numerical data to a standard range, typically between 0 and 1 or -1 and 1.

- Resizing images.

- Splitting text into smaller, more manageable units (so-called tokens).
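
Two of these preprocessing steps are easy to sketch in plain Python. The helpers below are illustrative assumptions: `min_max_scale` rescales numbers to the 0 to 1 range, and `tokenize` is a deliberately naive whitespace tokenizer (real NLP pipelines typically use subword tokenizers).

```python
def min_max_scale(values):
    """Rescale numbers linearly so the smallest maps to 0 and the largest to 1."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # all values equal; avoid dividing by zero
    return [(v - low) / (high - low) for v in values]


def tokenize(text):
    """Split text into lowercase word tokens on whitespace."""
    return text.lower().split()


print(min_max_scale([10, 20, 30]))        # [0.0, 0.5, 1.0]
print(tokenize("Neural networks learn"))  # ['neural', 'networks', 'learn']
```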

Next, adopters divide the prepared data into three sets that play a different role during training:

- Training sets for training the model.

- Validation sets for monitoring the ANN's performance during training.

- Test sets for evaluating the model's post-training performance.
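
A three-way split like this might look as follows in Python. The 70/15/15 proportions, the fixed seed, and the function name `split_dataset` are assumptions for the example; the right proportions depend on how much data you have.

```python
import random


def split_dataset(samples, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle the samples and split them into training, validation, and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    train_end = round(len(shuffled) * train_frac)
    val_end = train_end + round(len(shuffled) * val_frac)
    return shuffled[:train_end], shuffled[train_end:val_end], shuffled[val_end:]


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Shuffling before splitting helps ensure that each set reflects the overall data distribution instead of the order in which the data was collected.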

Once all three data sets are ready, the training process can start. The training data set enters the model iteratively, and the network continuously updates its weights and thresholds to achieve better outputs. Admins must monitor the model's performance on the validation set during training to prevent overfitting.
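
One common way to act on validation results is early stopping: halt training once the validation loss stops improving. The sketch below assumes you already have a list of per-epoch validation losses; the function name `find_stopping_point`, the `patience` parameter, and the loss values are made up for illustration.

```python
def find_stopping_point(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs, which is a common guard against overfitting.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Made-up validation losses: they improve, then start rising again.
print(find_stopping_point([0.9, 0.7, 0.6, 0.65, 0.7, 0.8]))  # (2, 4)
```

In this example, the model from epoch 2 would be kept, since later epochs only made validation performance worse.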

Most adopters experiment with different parameters during training. Tweaking the learning rate, batch size, and network architecture is the go-to way to improve performance on the validation set. Admins also often test different:

- Regularization methods (e.g., L1 and L2 regularization, dropout).

- Optimization algorithms (e.g., Adam, RMSprop, SGD).

- Techniques for handling imbalanced data (e.g., oversampling, undersampling, SMOTE).
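
To show what L1 and L2 regularization actually add to the training objective, here is a small sketch of the two penalty terms. The function names, the `strength` parameter, and the example weights and loss are assumptions; frameworks usually expose these penalties as built-in options.

```python
def l1_penalty(weights, strength):
    """L1 regularization term: strength times the sum of absolute weights."""
    return strength * sum(abs(w) for w in weights)


def l2_penalty(weights, strength):
    """L2 regularization term: strength times the sum of squared weights."""
    return strength * sum(w * w for w in weights)


weights = [1.0, -2.0, 3.0]
data_loss = 0.25  # hypothetical loss measured on a training batch
print(data_loss + l1_penalty(weights, strength=0.5))  # 3.25
print(data_loss + l2_penalty(weights, strength=0.5))  # 7.25
```

Both penalties grow with the size of the weights, so minimizing the combined loss nudges the network toward smaller weights and, in many cases, less overfitting.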

Once training is complete, admins evaluate the model's performance on the test set to assess its generalization ability and ensure it performs well on unseen data. If the trained model passes these tests, the ANN is ready for deployment into the production environment. After deployment, teams typically keep monitoring the network and retrain or fine-tune it as it encounters real-life inputs and new data.
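
Test-set evaluation often starts with a simple metric such as accuracy. The snippet below is a minimal sketch with hypothetical predictions and labels; real projects usually track additional metrics (precision, recall, and so on), especially when the data is imbalanced.

```python
def accuracy(predictions, labels):
    """Fraction of test examples where the prediction matches the true label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# Hypothetical model outputs and true labels for four held-out test examples.
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))  # 0.75
```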

Thinking about setting up an in-house neural network? Use one of these deep learning frameworks to speed up the training of your new ANN.

A No-Brainer Investment (For the Right Use Case)

Despite requiring considerable investments in training data and hardware, ANNs are revolutionizing the way businesses deal with time-consuming and duplicative tasks. They can identify patterns in large datasets, helping companies streamline operations and improve efficiency.

If your use case involves repetitive patterns and large amounts of data, investing in a neural network is an obvious choice. Neural networks automate routine workflows, reduce errors, and provide faster, more accurate insights over time.