Understanding the theory behind machine learning (ML) is vital, but the only way to get truly good at ML is to actually build machine learning models. If you feel like you're at the point where the only way to improve is to get some hands-on experience, this is the right article for you.

This post presents 30 machine learning projects that will help you develop practical skills you cannot acquire through study alone. We've organized projects into three difficulty levels, so you will find suitable project ideas regardless of whether you're a relative beginner or an ML developer with some experience.

Why Start a Machine Learning Project?

If you are still developing your skills, starting a machine learning project will give you a taste of real-world ML development. Here's an overview of what you get by completing the machine learning projects featured in this article:

- Practical experience. Machine learning projects allow you to apply theoretical knowledge to real-world problems. You will solidify your understanding of algorithms, data processing, and model deployment.

- Portfolio development. Each completed project expands your portfolio. Once you start looking for a job, you will have something tangible to show during interviews, which will help you stand out from other candidates.

- Problem-solving skills. Working on a project challenges you to solve problems ML developers face on a regular basis (limited data, computational resources, the need for interpretability, etc.). Familiarity with these challenges will be valuable once you land your first job.

- Continuous growth. Like all artificial intelligence technologies, machine learning is a rapidly evolving field. Projects help you keep up with the latest tools and libraries and identify the ML areas in which you need improvement.

If you are a newcomer to ML, we recommend you start with the basics before diving into any projects. Our introductions to artificial intelligence and neural networks are a great starting point.

Tools and Methodologies Required for Machine Learning Projects

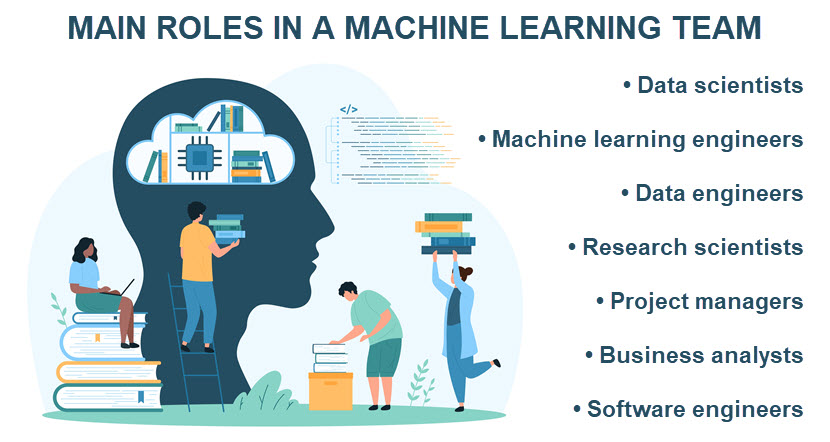

Machine learning is a broad field that requires familiarity with a diverse set of concepts and technologies. Below is a high-level overview of what you'll need to master to become an ML developer:

- Programming languages. ML developers must know at least one AI programming language, although familiarity with multiple languages is recommended. Python is the most popular choice due to its simplicity and extensive libraries. Julia, R, Java, and Scala are also common choices.

- ML libraries. Machine learning libraries and frameworks are building blocks for developing, training, and deploying machine learning models.

- Data visualization tools. Tools like Matplotlib, Seaborn, and Tableau help you understand data patterns, trends, and model performance.

- Data storage and management tools. Effective management and storage of large data sets are crucial in machine learning.

- Version control systems (VCS). VCS tools like Git enable you to track changes, collaborate with colleagues, and manage code versions.

- Integrated Development Environments (IDEs). IDEs like PyCharm or VS Code and notebooks like Jupyter provide a structured environment for writing, testing, and debugging ML code.

- Cloud platforms. Cloud computing platforms offer services for storing data, running machine learning models, and scaling projects to production environments.

As for methodologies, every ML developer must know how to:

- Handle and transform raw data (data cleaning, feature engineering, dimensionality reduction, etc.).

- Augment data to handle projects with limited data availability.

- Split data into training, validation, and test sets to ensure robust model evaluation.

- Select appropriate algorithms and fine-tune them based on the problem and data characteristics.

- Evaluate model performance using accuracy, precision, recall, and F1-score metrics.

- Adjust the hyperparameters of models (e.g., learning rate, regularization strength) to optimize performance.

- Understand deployment tools (e.g., Docker or TensorFlow Serving) and CI/CD pipelines.

- Monitor post-deployment model performance.

- Keep data safe throughout a model's lifecycle.
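
To make two of the items above concrete, here is a minimal sketch of splitting data into training, validation, and test sets and of computing accuracy, precision, recall, and F1-score for a binary classifier. It uses only the Python standard library. The function names, the 70/15/15 split, and the toy labels are assumptions chosen for illustration; in practice you would likely rely on a library such as Scikit-learn.

```python
import random


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle rows, then cut them into train, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def binary_metrics(y_true, y_pred):
    """Return accuracy, precision, recall, and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy data: 100 row indices and eight made-up labels/predictions.
train, val, test = train_val_test_split(range(100))
metrics = binary_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1, 1, 0])
print(len(train), len(val), len(test))
print(metrics)
```

Fixing the seed keeps the split reproducible, which makes it easier to compare models trained at different times.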

Does ML not look like the right fit for your interests? Check out our DevOps roadmap to see whether becoming a DevOps specialist would be a better career choice.

How to Start a Machine Learning Project

Every ML project is unique, but most require you to go through similar initial steps. Here's an overview of what developers normally do when they start working on a new machine learning project:

- Understand the problem domain. The first step is to identify the problem (classification, regression, clustering, etc.) you want to solve with the model you're building.

- Identify relevant data sources. Determine where you can obtain the data necessary for the project. Most ML models require large amounts of training data, so many projects fail at this step when teams discover they do not have enough data to train the model.

- Perform exploratory data analysis (EDA). Before diving into modeling, developers always analyze the available data to understand its structure, identify patterns, spot potential outliers, and recognize missing values.

- Choose a language. The next step is to decide what programming language you'll use for your new machine learning project.

- Select appropriate libraries and frameworks. Depending on the project scope, you might need libraries to get the job done. Libraries are essential as they save you from having to write extensive code from scratch.

- Outline the steps. Create a high-level plan that details the steps you'll take during the project, from data preprocessing to model evaluation.

- Allocate resources. Determine the computational resources you'll need during the project. You should also consider whether you will need any additional team members or expertise at this point.

Spending adequate time on these steps enables developers to establish a solid foundation for the upcoming machine learning project. A solid basis helps avoid potential pitfalls during development and increases the likelihood of building a model that performs effectively in real-world settings.

While all projects in this article offer guidance and provide the necessary data sets, you should still go through the initial steps outlined above when starting a new project. This approach builds up good habits and ensures you do not skip steps when you start working on real-life projects.

Machine Learning Projects for Beginners

Beginner machine learning projects focus on basic data manipulation, simple models, and essential ML libraries. Let's dive into 10 beginner-friendly machine learning projects ideal for aspiring developers with little to no practical experience in ML.

Predicting Taxi Fares Using Random Forests

This project involves predicting the best locations and times to earn the highest taxi fares based on the New York City taxi data set. You'll leverage the tidyverse suite for data processing and visualization while experimenting with various machine learning strategies.

The project starts by tasking you with cleaning and exploring the provided data set. The data is processed using the tidyverse package in R, which simplifies the tasks of data manipulation and visualization.

Next, you apply tree-based models, including decision trees and random forests, to predict where and when taxi drivers can earn the highest fares. Here is the prerequisite knowledge for this machine learning project:

- Basics of R programming.

- Data cleaning and manipulation with tidyverse.

- A solid understanding of decision trees and random forests.

- Basic data visualization techniques.

Completing this project will improve your ability to handle real-world data sets and provide practical experience in debugging and optimizing code for different contexts. This project can also be adapted to other data sets, such as the Bike Sharing Demand data set.

Predicting Home Values Using Zillow's Economic Data

This project involves building a house price prediction model using Zillow's extensive data set, which includes factors like average income, crime rates, and proximity to amenities.

The project will guide you in exploring how factors such as income levels and the number of nearby schools influence housing prices. You will be asked to apply XGBoost, a powerful gradient boosting algorithm, to create a model that predicts home values and offers insights into where to buy or rent a home.

Here is what you need to complete this project:

- Solid Python programming skills, especially data manipulation techniques with the Pandas library.

- An understanding of the XGBoost gradient boosting technique.

- Experience with data visualization tools like Matplotlib or Seaborn.

By completing this project, you will gain skills in handling real-world data, refining predictive models, and drawing actionable insights from complex data sets. You will also gain a deeper understanding of real estate market dynamics and the practical applications of machine learning in property valuation.

Predicting Product Sales with Regression Models

This project involves using historical sales data from BigMart to build a regression model that predicts future sales for 1,559 products across 10 different outlets.

The data set contains sales data from 2013 for outlets located in different cities. It includes various attributes, such as:

- Product type.

- Weight.

- Visibility.

- Store location.

Your goal is to develop a regression model that predicts sales figures based on these attributes. To get this project done, you will need a strong grasp of:

- Python programming (especially with Pandas and NumPy libraries).

- Basic concepts of regression analysis.

- Data preprocessing techniques, including handling missing values and outliers.

- A sound understanding of feature engineering and model evaluation metrics.

This project grants practical experience in applying machine learning to predict sales. You will also enhance your regression modeling skills and learn to extract actionable business insights from data.
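
As a rough illustration of the preprocessing and regression steps described above, the sketch below fills a missing value with the column mean and fits a one-feature least-squares line. The numbers are made up and are not taken from the BigMart data set, and the helper names are hypothetical. A real solution would use several features and a library such as Pandas or Scikit-learn.

```python
import math
from statistics import mean


def impute_mean(values):
    """Replace None entries with the mean of the known values."""
    fill = mean(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def fit_line(x, y):
    """Ordinary least squares for a single feature: y = slope * x + intercept."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def rmse(y_true, y_pred):
    """Root mean squared error, a common regression metric."""
    return math.sqrt(mean((t - p) ** 2 for t, p in zip(y_true, y_pred)))


# Toy data (assumed): product weight with one missing value, and sales.
weights = [1.0, 2.0, None, 4.0, 5.0]
sales = [12.0, 14.0, 16.0, 18.0, 20.0]

x = impute_mean(weights)
slope, intercept = fit_line(x, sales)
error = rmse(sales, [slope * xi + intercept for xi in x])
print(slope, intercept, error)
```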

Building a Music Recommendation System

This project involves creating a music recommendation system using the provided data set. The goal is to predict which new songs or artists a person might enjoy based on their past listening habits.

The key task in this project is to build a predictive model that provides data-based music recommendations. You'll use classification algorithms like logistic regression and decision trees to solve this problem.

Here are the prerequisites for this machine learning project:

- Proficiency in Python.

- Experience in data manipulation using Pandas.

- Basic understanding of classification algorithms.

- Skills in data preprocessing, including handling time-series data.

For a greater challenge, you can experiment with neural networks to enhance the prediction accuracy and capture more complex patterns in user behavior.

Classifying Song Genres Using Audio Features

This project focuses on classifying songs into two genres, Hip-Hop and Rock, based on audio features. You'll use techniques like normalization, Principal Component Analysis (PCA), and various classification algorithms.

You'll begin by exploring the data set and identifying correlations between the audio features. Then, you'll normalize the data using Scikit-learn's StandardScaler to ensure all features contribute equally to the model.

Then, you'll apply PCA to reduce the dimensionality of the data, making it easier to visualize and interpret. Finally, you'll train and validate models using logistic regression and decision tree algorithms.
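
The normalize, reduce, and classify workflow described above can be sketched as a Scikit-learn pipeline. This example runs on synthetic data from `make_classification`, not on the project's audio features, and the number of PCA components and cross-validation folds are arbitrary assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a table of audio features (assumption).
X, y = make_classification(
    n_samples=300, n_features=8, n_informative=4, random_state=0
)

# Scale features, project onto 4 principal components, then classify.
model = make_pipeline(
    StandardScaler(),
    PCA(n_components=4),
    LogisticRegression(max_iter=1000),
)

# 5-fold cross-validation gives one accuracy score per fold.
scores = cross_val_score(model, X, y, cv=5)
mean_accuracy = scores.mean()
print(scores, mean_accuracy)
```

Wrapping the scaler and PCA in the pipeline means they are refit on each training fold, so no information from the validation fold leaks into preprocessing.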

Here is what you'll need to have to complete this ML project successfully:

- A solid knowledge of the Scikit-learn library.

- Basic knowledge of data preprocessing, including normalization and PCA.

- Understanding of logistic regression and decision trees.

- Familiarity with class balancing and cross-validation techniques.

By completing this project, you will gain practical experience in working with audio data. You will also get better at essential machine learning techniques and learn how to address class imbalances and basic overfitting problems.

Predicting Credit Card Approvals

This project involves building a predictive model that automates credit card approval decisions. You will also have to optimize model performance through hyperparameter tuning and effective data preprocessing.

You'll work with a realistic data set containing information on credit card applicants, focusing on features such as income, employment status, and credit history.

You'll implement logistic regression to build your predictive model. To enhance the model's accuracy, you'll use GridSearchCV for automatic hyperparameter optimization, pushing your understanding of model tuning beyond simple parameter settings.
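
Here is a minimal sketch of tuning a logistic regression model with GridSearchCV. The data is synthetic, and the grid of `C` values (the inverse regularization strength) is an assumption chosen for illustration; the actual project may search over different parameters.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for preprocessed applicant data (assumption).
X, y = make_classification(n_samples=200, n_features=6, random_state=0)

pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# "clf__C" addresses the C parameter of the pipeline step named "clf".
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}

# Every candidate is scored with 5-fold cross-validation.
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

best_c = search.best_params_["clf__C"]
print(best_c, search.best_score_)
```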

Here is what you need to complete this project:

- Basic knowledge of the Scikit-learn library.

- Techniques for handling missing data and processing categorical features.

- Knowledge of feature scaling and data balancing.

- A solid understanding of logistic regression.

- Knowledge of hyperparameter optimization with GridSearchCV.

During this project, you'll learn to handle missing values, process categorical variables, and scale features to prepare the data for modeling.

Classifying Iris Flowers

The Iris Flowers classification project is a classic introductory machine learning problem. The project uses a simple yet powerful Iris data set to teach beginners the fundamentals of classification.

The goal is to use basic machine learning algorithms to classify Iris flowers into three species (setosa, versicolor, and virginica) using the petal and sepal measurements as features. Here is what this project requires you to have:

- A solid knowledge of Python programming.

- A fundamental grasp of data loading and handling concepts.

- An understanding of simple classification algorithms, such as logistic regression and decision trees.

Although simple, this project lays a strong foundation for understanding key concepts in classification problems. For those looking to expand their skills, you can experiment with more advanced algorithms or even incorporate deep learning techniques.
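
A minimal sketch of this classification task, using the copy of the Iris data set bundled with Scikit-learn and a shallow decision tree. The 80/20 split and the tree depth are assumptions, not requirements of the project.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()  # 150 flowers, 4 measurements each, 3 species

# Hold out 20% of the flowers, keeping species proportions equal.
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, stratify=iris.target, random_state=0
)

clf = DecisionTreeClassifier(max_depth=3, random_state=0)
clf.fit(X_train, y_train)

test_accuracy = clf.score(X_test, y_test)
print(list(iris.target_names), test_accuracy)
```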

Predicting Wine Quality

This project aims to predict the quality of wine based on its chemical properties. The goal is to create an ML model that evaluates wine quality by exploring factors like acidity, alcohol content, and pH levels.

You will start by analyzing the Wine Quality data set, which contains detailed information on the chemical attributes of different wines. Your task is to visualize the data and identify which features most strongly influence wine quality.

After selecting relevant features, you will build and fine-tune a machine learning model. Then, you must adjust hyperparameters to achieve the highest possible accuracy. Here is a high-level overview of the prerequisites for this project:

- Basic data preprocessing and visualization techniques.

- Familiarity with regression and classification algorithms.

- Experience with hyperparameter tuning for model optimization.

Completing this project provides valuable experience in handling real-world data, exploring feature importance, and optimizing models to improve predictive accuracy.

Predicting Stock Prices with Time Series Forecasting

This project is ideal for aspiring data scientists or ML engineers interested in the finance domain. You'll build a model to predict future stock prices by analyzing a company's past performance and various economic indicators.

During this project, you'll build a time series forecasting model that predicts stock price movements over a six-month period based on fundamental financial indicators like:

- Volatility indices.

- Prices.

- Macroeconomic factors.

You'll explore various time series models to identify trends, seasonal variations, and potential anomalies in the data. To complete the project, you will need solid knowledge of:

- Time series analysis and forecasting methods.

- Techniques for data preprocessing and feature engineering.

- Moving Average, Exponential Smoothing, and ARIMA models.

Completing this project provides practical experience in financial data analysis and time series forecasting.
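
Two of the methods listed above, the moving average and simple exponential smoothing, are short enough to sketch in plain Python. The price series is made up, and the window size and smoothing factor `alpha` are arbitrary assumptions.

```python
def moving_average(series, window):
    """Mean of each run of `window` consecutive values."""
    return [
        sum(series[i - window + 1:i + 1]) / window
        for i in range(window - 1, len(series))
    ]


def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: s_t = alpha * x_t + (1 - alpha) * s_{t-1}."""
    smoothed = [series[0]]
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


prices = [10.0, 12.0, 14.0, 16.0, 18.0]  # toy closing prices (assumption)

ma3 = moving_average(prices, 3)
ses = exponential_smoothing(prices, 0.5)
print(ma3)  # [12.0, 14.0, 16.0]
print(ses)  # [10.0, 11.0, 12.5, 14.25, 16.125]
```

A larger `alpha` makes the smoothed series react faster to recent prices, while a smaller one produces a steadier curve.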

Predicting Blood Donations with AutoML

This project focuses on predicting whether someone will donate blood based on data from a mobile blood donation drive in Taiwan. You'll leverage the power of automated machine learning (AutoML) to streamline the model-building process.

You'll begin by processing raw data collected from blood donation drives at various universities. The data is fed into TPOT, a Python-based AutoML tool, which automatically explores hundreds of potential machine learning pipelines to find the most effective one for this data set.

After identifying the optimal pipeline, you'll manually implement and fine-tune the model using normalized features to optimize performance.

Here is what you need to tackle this machine learning project:

- A good understanding of data preprocessing techniques.

- Familiarity with TPOT or similar AutoML tools.

- A grasp of supervised learning fundamentals.

By completing this project, you'll gain insight into the capabilities of AutoML tools, learn how to handle and preprocess real-world data, and enhance your skills in model optimization.

Learn the difference between supervised and unsupervised machine learning, two of the most common strategies for "teaching" machines to perform new tasks.

Intermediate-Level Machine Learning Projects

Intermediate machine learning projects build on the basics and require more data processing, advanced algorithms, and a deeper understanding of model evaluation. Below are 10 project ideas ideal for developers who have mastered the fundamentals and are ready to tackle more challenging problems.

Building a Movie Recommender System

In this project, you will create a personalized movie recommendation system that suggests relevant films to viewers. You will use the MovieLens data set, which includes over 1,000,000 movie ratings for nearly 4,000 movies by more than 6,000 users.

You'll start by exploring the data set, which contains valuable information on user preferences and movie metadata. The project authors recommend creating a word cloud visualization of movie titles to better understand the provided data set.

From there, you'll dive into constructing a recommendation engine and experiment with collaborative filtering techniques like matrix factorization, as well as with content-based filtering.
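
To show the idea behind matrix factorization, here is a small sketch that learns user and item factor vectors with stochastic gradient descent. The ratings are a made-up toy set, not MovieLens data, and the hyperparameters (`k`, `lr`, `reg`, `epochs`) are assumptions. Production systems typically use a dedicated library.

```python
import math
import random


def init_factors(n_rows, k, rng):
    """Small positive random factors, one vector of length k per row."""
    return [[rng.uniform(0.0, 0.5) for _ in range(k)] for _ in range(n_rows)]


def predict(P, Q, u, i):
    """Predicted rating: dot product of user and item factor vectors."""
    return sum(pu * qi for pu, qi in zip(P[u], Q[i]))


def rmse(P, Q, ratings):
    squared = sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings)
    return math.sqrt(squared / len(ratings))


def factorize(ratings, n_users, n_items, k=2, lr=0.05, reg=0.02, epochs=500, seed=0):
    """Fit factors on the observed ratings only, with L2 regularization."""
    rng = random.Random(seed)
    P = init_factors(n_users, k, rng)
    Q = init_factors(n_items, k, rng)
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


# Toy (user, item, rating) triples; missing pairs are unrated (assumption).
ratings = [
    (0, 0, 5), (0, 1, 4), (0, 3, 1), (1, 0, 4), (1, 2, 1), (2, 1, 1),
    (2, 2, 5), (2, 3, 4), (3, 0, 1), (3, 2, 4), (3, 3, 5),
]

P0, Q0 = factorize(ratings, 4, 4, epochs=0)  # untrained starting point
P, Q = factorize(ratings, 4, 4)
rmse_before, rmse_after = rmse(P0, Q0, ratings), rmse(P, Q, ratings)
print(rmse_before, rmse_after)
print(predict(P, Q, 1, 1))  # estimate for a pair the user never rated
```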

As this is a more complex undertaking, we recommend diving into this machine learning project only if you have a solid knowledge of:

- Fundamentals of recommendation systems.

- Collaborative and content-based filtering.

- Matrix factorization.

- Data visualization skills, which are crucial during initial data set exploration.

Completing this project will give you experience in building recommendation systems, enhance your understanding of user behavior analysis, and expose you to the challenges of large-scale data processing.

Analyzing Movie Similarities Based on Plot Summaries

In this project, you are tasked with developing a clustering model that groups similar movies based on their plot summaries.

You'll start by collecting and combining plot summary data from IMDB and Wikipedia. The text data will require some preprocessing before analysis. You'll then transform the text using the TfidfVectorizer, a technique that converts text into numerical features.

With the processed data, you'll apply the KMeans clustering algorithm to group movies based on plot similarities. The final step is to visualize the clustering results with a dendrogram, which will help illustrate the relationships between different movie clusters.
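
The vectorize-then-cluster steps can be sketched with Scikit-learn as follows. The four plot summaries are invented placeholders, and the choice of two clusters is an assumption. The dendrogram step is not shown.

```python
from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer

# Invented plot summaries standing in for the IMDB/Wikipedia text.
plots = [
    "A crew of astronauts travels through space to save the planet.",
    "Astronauts stranded in space fight to return to their planet.",
    "A detective investigates a murder in a rainy city.",
    "A city detective hunts a killer after a brutal murder.",
]

# Convert each summary into a TF-IDF weighted term vector.
vectorizer = TfidfVectorizer(stop_words="english")
tfidf = vectorizer.fit_transform(plots)

# Group the vectors into two clusters.
km = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = km.fit_predict(tfidf)
print(tfidf.shape, labels)
```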

To achieve all of that, you must be familiar with the following areas:

- Basics of Natural Language Processing (NLP).

- Text preprocessing techniques (especially tokenization and stemming).

- TfidfVectorizer for text feature extraction.

- KMeans clustering algorithms.

- Data visualization with dendrograms.

By completing this project, you'll gain hands-on experience in NLP and clustering.

Identifying Emerging Trends in Machine Learning

This project leverages text processing and Latent Dirichlet Allocation (LDA) to uncover the most-talked-about topics in machine learning from a vast collection of NIPS conference papers.

Your goal is to use text analysis and LDA to identify and visualize emerging trends in ML research by processing and analyzing the content of NIPS papers. To start, you will perform text analysis on a large data set of NIPS papers, with a focus on extracting meaningful terms and phrases. You'll then process this text data to create word clouds that provide an overview of frequently occurring terms.

Afterward, the data will be ready for LDA analysis, which will discover insights and patterns within the research papers. Finally, you'll analyze and interpret topics to identify the most significant trends in machine learning research.

To complete this project, you need a firm grasp of the following areas:

- Text processing techniques, including tokenization and stop word removal.

- Word cloud creation for visual data representation.

- Fundamentals of Latent Dirichlet Allocation.

Completing this project will enhance your skills in text analysis and topic modeling. You will also gain valuable insights into the direction of current machine learning research.

Forecasting Walmart Sales

This project involves building a predictive model that forecasts future sales based on Walmart's sales data set, which includes weekly sales data for 98 products across 45 outlets.

You will start by importing the Walmart sales data set and conducting EDA to understand its structure and identify key patterns. After preparing the data (i.e., merging data sets and applying grouping functions), you will plot time-series graphs to analyze sales trends.

Next, you'll develop and fit sales forecasting models to the training data. By comparing these models against test data, you will optimize them by selecting the most relevant features to improve accuracy.

This machine learning project involves many complex components, requiring extensive knowledge to complete successfully. Here is what you must be familiar with:

- Multiple EDA techniques.

- Time-series analysis and visualization techniques.

- The ARIMA model for time-series forecasting.

- Data preparation and feature selection techniques.

- Model evaluation and optimization techniques.

By completing this project, you'll gain practical experience in building sales forecasting models, one of the most common use cases for ML.

Predicting Income Levels Using Census Data

This project tasks you with using the provided Adult Census Income data set to build a machine learning model that predicts whether an individual's income exceeds $50K per year based on attributes such as:

- Education level.

- Marital status.

- Work hours.

The provided data set offers a rich collection of both numerical and categorical variables, but it also has missing values. These missing values provide an excellent challenge for building a robust classifier in a realistic setting.

You will begin by exploring the data set and preparing it for modeling. Next, you'll experiment with different machine learning algorithms to build a classifier, comparing performance levels to identify the optimal build. Finally, you will optimize the model for accuracy.

To do all of that, you will need solid knowledge of the following areas:

- Data preprocessing (especially techniques for handling missing values).

- Feature selection and engineering techniques.

- Building and evaluating classification models.

- Model optimization and performance tuning.

By completing this project, you'll gain experience in dealing with real-world data complexities and learn how to build effective classifier models.
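
One simple way to handle missing values in a mix of numerical and categorical columns is to fill them with the median and the most common value, respectively, and then one-hot encode the categories. The sketch below does this in plain Python. The rows, column names, and the use of `None` as the missing-value marker are assumptions, not the format of the actual data set.

```python
from collections import Counter
from statistics import median


def impute(rows, numeric_cols, categorical_cols):
    """Fill None with the column median (numeric) or mode (categorical)."""
    fills = {}
    for col in numeric_cols:
        fills[col] = median(r[col] for r in rows if r[col] is not None)
    for col in categorical_cols:
        counts = Counter(r[col] for r in rows if r[col] is not None)
        fills[col] = counts.most_common(1)[0][0]
    return [{c: (fills[c] if v is None else v) for c, v in r.items()} for r in rows]


def one_hot(rows, col):
    """Replace a categorical column with one 0/1 column per category."""
    categories = sorted({r[col] for r in rows})
    encoded = []
    for r in rows:
        new_row = {k: v for k, v in r.items() if k != col}
        for cat in categories:
            new_row[f"{col}={cat}"] = 1 if r[col] == cat else 0
        encoded.append(new_row)
    return encoded


# Toy rows (assumed) with one gap in each column.
rows = [
    {"hours": 40, "education": "Bachelors"},
    {"hours": None, "education": "HS-grad"},
    {"hours": 50, "education": None},
    {"hours": 20, "education": "HS-grad"},
]

clean = impute(rows, ["hours"], ["education"])
encoded = one_hot(clean, "education")
print(encoded)
```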

Classifying Insects Using Image Data

This project focuses on using image data to train a Support Vector Machine (SVM) model that can distinguish between honeybees and bumblebees based on visual characteristics.

You'll start by manipulating and processing bee images to extract key features that can be used for classification. You will then apply techniques such as StandardScaler for normalization and PCA for dimensionality reduction. These steps prepare the data set for modeling.

Afterward, you will train the SVM model on prepared data and validate its performance to ensure accurate classifications. Here is an overview of everything you must know to complete this machine learning project:

- Image processing and feature extraction.

- Data normalization using StandardScaler.

- Dimensionality reduction with PCA.

- Techniques for building and training SVM classifiers.

This project provides valuable experience in handling and processing image data. You will also learn how to build a classifier model capable of image recognition tasks.

Recognizing Emotions in Speech

This project focuses on developing a machine learning model that can recognize emotions in speech by processing audio files. Using the Librosa library, you will extract relevant features from sound files and train a model to classify emotions accurately.

You will begin by loading and processing sound files, after which you will extract audio features such as Mel-frequency cepstral coefficients (MFCCs) using Librosa. You will use these features to train a multilayer perceptron (MLP) classifier with the help of the Scikit-learn library.

Here is an overview of what areas you must have experience in to complete this machine learning project:

- Basic understanding of sound file formats and handling.

- Audio processing with Librosa.

- A solid knowledge of the Scikit-learn library.

- Feature extraction techniques.

- MLP classifier training and validation.

This project enhances your machine learning skills in audio processing and provides a solid foundation for exploring deep learning models that process audio files.

Predicting Bike Ride Demand

In this project, you will explore and evaluate different machine learning methodologies to forecast bike ride demand.

The project involves preprocessing data, selecting appropriate features, and experimenting with various models to find the best prediction strategy. The project expects you to have a sound understanding of the following fields:

- Time series forecasting.

- Feature selection and engineering.

- Data preprocessing techniques.

- Model evaluation and tuning.

By completing this project, you'll gain insights into demand forecasting for transportation services and get experience in handling complex data sets.

Analyzing Market Baskets

This project requires you to identify patterns in customer purchases to recommend complementary products and optimize store layouts for increased sales.

You'll discover how buying one product can boost the chances of buying another related one. By analyzing historical transaction data, you'll uncover associations between items, which can be used to:

- Create personalized recommendations.

- Design effective cross-selling strategies.

- Arrange products more effectively in stores.

To effectively meet the requirements of this machine learning project, you will need a sound understanding of the following areas:

- Data mining techniques.

- Association rule learning (e.g., Apriori algorithm).

- Data preprocessing and analysis.

- Customer behavior analysis.

By working on this project, you'll gain valuable experience in creating models that make data-driven decisions.
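
Association rule learning rests on three measures: support, confidence, and lift. The sketch below computes them for a handful of made-up transactions and lists the item pairs that meet a minimum support threshold. It is not a full Apriori implementation, which would also prune larger candidate itemsets level by level, and the function names are hypothetical.

```python
from itertools import combinations


def support(itemset, transactions):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Estimated P(consequent | antecedent)."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)


def lift(antecedent, consequent, transactions):
    """Confidence relative to the consequent's baseline support."""
    return confidence(antecedent, consequent, transactions) / support(
        consequent, transactions
    )


def frequent_pairs(transactions, min_support):
    """All item pairs whose support reaches min_support."""
    items = sorted(set().union(*transactions))
    return [
        pair for pair in combinations(items, 2)
        if support(pair, transactions) >= min_support
    ]


# Toy baskets (assumption).
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk"},
]

pairs = frequent_pairs(transactions, min_support=0.5)
conf = confidence({"bread"}, {"milk"}, transactions)
rule_lift = lift({"bread"}, {"milk"}, transactions)
print(pairs, conf, rule_lift)
```

A lift above 1 suggests the two items are bought together more often than chance would predict, while a lift below 1 suggests the opposite.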

Analyzing the Impact of Climate Change on Bird Populations

In this project, you will explore how changing climate patterns affect bird sightings over time by leveraging ML techniques on ecological data. You will use logistic regression to analyze bird sighting data in conjunction with climate variables.

You'll start by cleaning and organizing the bird sightings and climate data. You'll prepare the data for spatial analysis, focusing on regions where climate shifts have been most significant.

Then, you will use the caret package to create pseudo-absences to balance the data, after which you will train generalized linear models with elastic net regularization (glmnet). Finally, you'll visualize the results, mapping bird population changes across four decades to identify trends and hotspots of climate impact.

To get this project done, you will need a strong grasp of:

- Logistic regression and generalized linear models (GLM).

- Data cleaning and preparation for spatial analysis.

- The caret package for model training.

- Hyperparameter tuning techniques.

- Techniques for visualizing spatial and temporal data.

By completing this project, you'll gain valuable experience in ecological modeling. You'll also enhance your skills in predictive modeling and spatial analytics.

Our article on regression algorithms offers an in-depth look at how machine learning models use these algorithms to make data-driven predictions.

Advanced Machine Learning Projects

Advanced machine learning projects are ideal for developers with a solid understanding of all major ML fields. Below are 10 machine learning projects perfect for seasoned developers looking to test their skills.

Forecasting Demand for Inventory Management

This project focuses on developing a demand forecasting model that helps businesses manage their inventories.

You'll begin by analyzing historical sales data to identify patterns and trends that influence customer demand. You'll then apply various machine learning algorithms to build a predictive model. The resulting model will forecast future demand, offering an output businesses could use to improve inventory management.

Here are the knowledge requirements for this machine learning project:

- Experience with multiple machine learning algorithms (Bagging, Boosting, XGBoost, GBM, and SVM).

- Familiarity with time series forecasting.

- Experience with data analysis and preprocessing.

- A solid understanding of inventory management principles.

This project provides hands-on experience in demand forecasting, which is a highly sought-after skill in the ML market.

Building a Rick Sanchez AI Chatbot

This project involves creating and fine-tuning an AI chatbot that mimics Rick Sanchez from the "Rick and Morty" TV show.

You'll process and transform the Rick and Morty dialogue data set to fine-tune DialoGPT, a large-scale pretrained response generation model from Microsoft. By leveraging the Hugging Face Transformers library, you'll develop an AI that can generate responses in Rick Sanchez's unique conversational style.

To execute this project, you should have sound experience in the following fields:

- Natural language processing.

- Transformer architectures.

- The Hugging Face library.

- Data preprocessing and transformation techniques.

- Model fine-tuning.

Working on this project provides hands-on experience with using transformer models and building AI chatbots with personalized conversational abilities.

Interpreting American Sign Language (ASL) Using Deep Learning

This project involves building a Convolutional Neural Network (CNN) using the Keras library to classify images of ASL gestures. The goal is to develop a robust model that accurately recognizes ASL signs from images.

You'll start by visualizing and analyzing the image data set to understand its distribution and the challenges it poses. Next, you'll preprocess the images to optimize them for the CNN.

After compiling and training the model, you'll focus on identifying wrong predictions. This step is crucial as it allows you to refine the model iteratively to boost its accuracy.
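The error-analysis step can be sketched without any deep learning library: once you have the true and predicted labels, list the misclassified samples and count which sign pairs the model confuses most often. The function names and the sample labels below are hypothetical and serve only to show the idea.

```python
from collections import Counter


def find_misclassified(true_labels, predicted_labels):
    """Return the indices of samples where the prediction differs from the label."""
    return [
        i
        for i, (truth, pred) in enumerate(zip(true_labels, predicted_labels))
        if truth != pred
    ]


def most_confused_pairs(true_labels, predicted_labels, top_n=3):
    """Count (true, predicted) pairs among the errors, most frequent first."""
    pairs = Counter(
        (truth, pred)
        for truth, pred in zip(true_labels, predicted_labels)
        if truth != pred
    )
    return pairs.most_common(top_n)


# Hypothetical labels for seven test images
true_labels = ["A", "B", "M", "N", "M", "S", "M"]
predicted_labels = ["A", "B", "N", "N", "N", "S", "M"]

wrong = find_misclassified(true_labels, predicted_labels)
confused = most_confused_pairs(true_labels, predicted_labels)
```

Reviewing the images at the indices in `wrong`, grouped by the pairs in `confused`, often shows whether the fix is more data, different augmentation, or a change to the architecture.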

Here are the requirements for this machine learning project:

- A sound understanding of CNNs and deep learning.

- Solid familiarity with the Keras and TensorFlow libraries.

- Experience with image preprocessing techniques.

- Ability to analyze model performance and make smart iterative improvements.

Completing this project provides practical experience in building and optimizing a deep learning model for image classification tasks.

Forecasting Stock Market Prices with GRU Models

This project focuses on using Gated Recurrent Units (GRUs) to build a deep learning model for predicting stock prices by analyzing trends and seasonality in extensive historical data.

You'll begin by exploring time series data to identify key trends, patterns, and seasonal fluctuations in stock prices. This analysis will guide your data preprocessing decisions as you prepare the data for model training.
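A typical preprocessing pipeline for a recurrent model splits the series chronologically, scales it with statistics taken from the training portion only, and slices it into fixed-length windows. The sketch below shows that sequence in plain Python. The function names, the 80/20 split, the window length, and the sample prices are all assumptions for illustration.

```python
def chronological_split(series, train_fraction=0.8):
    """Split without shuffling so the test set lies strictly in the future."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


def fit_min_max(train):
    """Compute scaling bounds on the training data only to avoid leakage."""
    return min(train), max(train)


def scale(values, lo, hi):
    """Min-max scale values using bounds fitted on the training data."""
    span = (hi - lo) or 1.0  # guard against a constant series
    return [(v - lo) / span for v in values]


def make_windows(values, window):
    """Pair each run of `window` consecutive values with the value that follows it."""
    inputs, targets = [], []
    for i in range(len(values) - window):
        inputs.append(values[i:i + window])
        targets.append(values[i + window])
    return inputs, targets


# Hypothetical closing prices (illustrative numbers only)
prices = [100.0, 102.0, 101.0, 105.0, 107.0, 106.0, 110.0, 112.0, 111.0, 115.0]

train, test = chronological_split(prices)
lo, hi = fit_min_max(train)
train_scaled = scale(train, lo, hi)
x_train, y_train = make_windows(train_scaled, window=3)
```

The windows in `x_train` and targets in `y_train` are the shape of data a GRU expects once converted to tensors. Test data should be scaled with the same `lo` and `hi`.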

Next, you'll implement a GRU model using PyTorch, a Python-based deep learning library with built-in recurrent layers for sequential data. Throughout the project, you'll have access to a GitHub codebase for guidance and reference.

Here are the prerequisites for this machine learning project:

- Knowledge of time series analysis and forecasting techniques.

- Experience with GRUs and sequential data modeling.

- Familiarity with PyTorch.

- Understanding of data preprocessing for time series.

By completing this project, you will gain practical experience in building and fine-tuning GRU-based forecasting models.

Creating Multilingual Automatic Speech Recognition (ASR)

This project involves fine-tuning the Wav2Vec2 XLS-R model to develop an automatic speech recognition system. Your goal is to create a robust ASR model that accurately transcribes multilingual speech using state-of-the-art transformer models.

You'll start by understanding the audio and transcription data set, then tokenize the text, extract audio features, and preprocess the audio files to prepare them for model training.

Next, you'll set up a trainer and a Word Error Rate (WER) function, load pre-trained models, tune hyperparameters, and train the ASR model. Finally, you'll evaluate the model's performance and deploy it using the Hugging Face platform.
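Word Error Rate is the word-level edit distance between the reference transcript and the model's output, divided by the number of words in the reference. The sketch below is a minimal standalone version. The function name is our own, and real projects usually rely on an existing metrics library and normalize casing and punctuation first.

```python
def word_error_rate(reference, hypothesis):
    """Return (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)
```

For example, `word_error_rate("the cat sat on the mat", "the cat sat on mat")` counts one deleted word out of six. Note that WER can exceed 1.0 when the output contains many inserted words.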

Here is what you will need to complete this machine learning project:

- Familiarity with transformer-based models, especially Wav2Vec2.

- Experience with audio data preprocessing and feature extraction.

- Knowledge of fine-tuning pre-trained models.

- Proficiency with the Hugging Face platform.

Completing this project provides valuable skills in building and deploying multilingual ASR systems. You will get hands-on experience in fine-tuning transformer models for speech transcription.

Generating Music with Neural Networks

This project focuses on creating music with neural networks by training an LSTM model using MIDI files. The goal is to generate original music compositions by learning from existing musical data.

You'll begin by exploring MIDI files and learning about the structure of notes and chords. Using the Music21 library, you'll preprocess this data to prepare it for training. You'll then build the LSTM model architecture with Keras, incorporating checkpoints, loss functions, and optimization algorithms to improve the model's training process.
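Once notes and chords have been extracted from the MIDI files, the preprocessing usually comes down to mapping each distinct symbol to an integer and slicing the result into fixed-length input sequences with a next-note target. The sketch below assumes the symbols are already available as strings. The function names and the dot-joined chord notation are hypothetical choices for this example.

```python
def encode_notes(notes):
    """Map each distinct note or chord symbol to an integer ID."""
    vocabulary = sorted(set(notes))
    note_to_int = {symbol: index for index, symbol in enumerate(vocabulary)}
    encoded = [note_to_int[symbol] for symbol in notes]
    return encoded, note_to_int


def make_sequences(encoded, sequence_length):
    """Pair each run of sequence_length symbols with the symbol that follows it."""
    inputs, targets = [], []
    for i in range(len(encoded) - sequence_length):
        inputs.append(encoded[i:i + sequence_length])
        targets.append(encoded[i + sequence_length])
    return inputs, targets


# Hypothetical symbols; "C4.E4.G4" stands for a chord in this sketch
notes = ["C4", "E4", "G4", "C4.E4.G4", "E4", "G4", "C5"]

encoded, note_to_int = encode_notes(notes)
inputs, targets = make_sequences(encoded, sequence_length=3)
```

Before training, the inputs are typically reshaped to the layout the LSTM layer expects and the targets are one-hot encoded over the vocabulary.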

After training the model on MIDI files, you'll use random inputs to predict new notes, which will be sequenced together to create complete musical pieces. Finally, you'll convert these sequences into MP3 files.

This machine learning project requires the following prerequisites:

- Excellent data preprocessing skills.

- Knowledge of LSTM networks and sequential data processing.

- Familiarity with MIDI files, notes, and chords.

- Experience with Keras for model building.

- Understanding of model evaluation.

This project provides practical experience in using neural networks for creative tasks.

Stylizing Faces with GANs

This project tasks you with using generative adversarial network (GAN) inversion and fine-tuning a pre-trained model to create stylized images from a single input face. The goal is to create a model that generates realistic, artistic renditions of faces in a chosen style.

You'll start by understanding various GAN architectures, particularly those used for image stylization. You'll then create a data set that pairs images in both original and desired styles. Using GAN inversion, you will generate new images from the original input, fine-tuning a pre-trained StyleGAN model to produce realistic and artistic face stylizations.

Here is what you need to complete this project:

- Knowledge of GAN architectures and image generation.

- Experience with StyleGAN and GAN inversion techniques.

- Proficiency in data set collection and preparation.

- An excellent sense for model fine-tuning.

By completing this project, you gain hands-on experience in using GANs for creative and artistic image transformations.

Providing Personalized Fashion Recommendations

This project requires developers to build a recommendation system that combines NLP and computer vision (CV) techniques to create personalized fashion recommendations for H&M customers. To make recommendations, the model will leverage:

- Past transactions.

- Customer data.

- Product metadata.

You'll start by exploring the provided data set packed with customer information, transaction history, and product attributes. You'll use this data to create a baseline model that predicts recommendations based on text and categorical features.
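A baseline built on categorical features can be as simple as scoring unseen products by how much their attributes overlap with what a customer has already bought. The sketch below uses Jaccard similarity for that purpose. It is one possible baseline rather than the project's prescribed method, and the catalog, attribute names, and function names are invented for illustration.

```python
def jaccard(a, b):
    """Share of attributes two sets have in common (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(purchased_ids, catalog, top_k=2):
    """Rank products the customer has not bought by attribute overlap with their history."""
    profile = set().union(*(catalog[pid] for pid in purchased_ids))
    scores = [
        (jaccard(profile, attributes), pid)
        for pid, attributes in catalog.items()
        if pid not in purchased_ids
    ]
    # Highest similarity first; ties broken by product ID for a stable order
    scores.sort(key=lambda item: (-item[0], item[1]))
    return [pid for _, pid in scores[:top_k]]


# Hypothetical product metadata expressed as sets of categorical attributes
catalog = {
    "p1": {"type:dress", "color:black", "dept:womens"},
    "p2": {"type:dress", "color:red", "dept:womens"},
    "p3": {"type:hoodie", "color:black", "dept:mens"},
    "p4": {"type:socks", "color:white", "dept:kids"},
}

recommendations = recommend(["p1"], catalog)
```

A baseline like this gives you a score to beat when you later add text embeddings and image features.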

Once you establish the baseline, you'll enhance your model by integrating NLP and CV techniques. This step will allow the model to process both textual descriptions and visual elements of the products.

Here are the prerequisites for this machine learning project:

- Proficiency in NLP and computer vision.

- Experience with deep learning and recommendation systems.

- High familiarity with data preprocessing and feature engineering.

- Ability to analyze and interpret customer and product data.

Completing this project will enable you to acquire advanced skills in building a hybrid recommendation system that integrates NLP and CV techniques.

Developing a MuZero-Based Reinforcement Learning Agent for Atari 2600

This project involves building and training a reinforcement learning agent using the MuZero algorithm to play Atari 2600 games. The focus is on understanding and implementing the MuZero architecture to create a highly competitive agent.

The process starts by learning about the MuZero algorithm, which combines aspects of reinforcement learning, tree search, and model-based planning. You'll then either create a new architecture or modify an existing one to optimize your agent's performance.

This project is heavily math-focused. While proposed solutions are available, achieving a top global rank will demand an original, well-tuned approach. Here is an overview of what you need to achieve a high rank on the leaderboard:

- Excellent understanding of reinforcement learning algorithms.

- Strong proficiency in Python and mathematical concepts.

- Familiarity with MuZero and related architectures.

- Experience in model development, training, and validation.

Working on this project grants expertise in implementing advanced reinforcement learning techniques. You will enhance your ability to create high-performing agents capable of excelling in complex gaming environments.

Building and Deploying an End-to-End Machine Learning System with MLOps

This project focuses on creating an end-to-end ML system using machine learning operations (MLOps) tools. Your goal is to build, deploy, and automate a machine learning pipeline.

You'll start by building a location image classifier with TensorFlow, then deploy it using Streamlit as the front end. You'll containerize the application with Docker and manage its deployment using Kubernetes. The project also involves setting up CI/CD pipelines using Google Cloud Build, integrating GitHub for version control, and deploying the final model on Google Cloud.

Here is what you need to successfully complete this project:

- Proficiency in TensorFlow and model deployment.

- Knowledge of Docker, Kubernetes, and CI/CD pipelines.

- Familiarity with cloud platforms.

- Experience with MLOps tools and practices.

By completing this project, you will gain hands-on experience in creating and automating end-to-end machine learning systems. You'll learn how to orchestrate data, manage model versioning, and automate entire workflows, from development to production.

Advanced machine learning models that process vast amounts of data are notoriously compute-heavy. Check out our GPU servers page to see how well ML workloads run on dual Intel Max 1100 GPUs.

Start Building Your ML Portfolio

Pursuing a career in machine learning is a smart decision, but you will need various skills to succeed in that role. Use the machine learning projects in this article to hone your expertise and build a nice-looking portfolio.

Because these projects mirror real-world work, completing them will help ensure you're ready to contribute at a high level once you land a job as an ML developer. They also demonstrate practical experience to potential employers and strengthen your confidence in applying ML concepts.