While most discussions surrounding artificial intelligence (AI) focus on its positives, concerns about the unethical use of this technology are increasingly entering the conversation. As a result, we're seeing a growing interest in establishing rules that dictate how companies should and should not use AI technologies.

This article provides an in-depth look at AI ethics, a field focused on defining frameworks that ensure responsible and sustainable use of AI systems. Jump in to see how clearly defined guidelines help ensure AI does not lead to more societal harm than economic good.

While critical, ethical concerns are not the only problem with rapid AI development. Check out our article on artificial intelligence risks if you want an in-depth look at all the dangers of this technology.

What Are AI Ethics?

AI ethics focuses on the moral implications and responsibilities associated with developing and deploying AI systems. A closely related term is an AI code of ethics (sometimes called an AI value platform), a policy document in which an organization formally states how it will use AI responsibly.

The primary goal of AI ethics is to ensure that AI technologies are developed and used in ways that align with fundamental human values. AI ethics encompasses a wide range of topics, with the following five areas considered the primary focuses:

- General fairness.

- Accountability for actions.

- Transparency of how models make decisions.

- The right to data privacy.

- The impact of AI on humanity and ecology.

As of August 2024, there are no worldwide regulations that legally enforce the ethical use of AI. Outside the EU, most organizations are currently expected to define and abide by their own guidelines for the responsible use of AI.

Considerations for AI ethics should be integrated into the design and development process from the outset. For example, bias detection and mitigation techniques should be applied from the start of algorithm development instead of being treated as a post-deployment fix.

AI Ethics Principles

AI ethics principles are specific guidelines designed to ensure that AI systems are fair, transparent, and beneficial to humans. Each organization interested in AI ethics defines its own principles, but here are a few widely recognized ones:

- Non-discrimination and bias mitigation. AI systems must not perpetuate or exacerbate existing biases and discrimination.

- Explainability. AI systems must be understandable and interpretable by users. Decision-making processes should be transparent and explainable.

- Equal opportunity. AI technologies must provide equal opportunities and benefits to all individuals, regardless of their background.

- Input data privacy. AI systems must respect individuals' rights to privacy and handle personal data responsibly and securely.

- Accountability. Organizations must establish clear lines of responsibility for the outcomes of their AI systems. There should also be mechanisms to address and rectify any harm caused by AI.

- Security guarantees. AI systems must operate safely under all expected conditions. Systems must be able to withstand attacks and avoid causing unintended harm.

- Consent. Data collection and usage must be based on informed consent, with clear information provided to users about how AI models use their data.

- Oversight. There should be meaningful human oversight of AI systems to ensure they align with human values and ethical standards.

- Human-centricity. AI systems must respect and promote human dignity and autonomy.

- Environmental impact. AI technologies must be developed and used in environmentally sustainable ways and with a minimal ecological footprint.

The principles of AI ethics must also apply to machine learning (ML) systems. ML models process vast amounts of data during the "learning" process, which makes these systems highly susceptible to data privacy and bias issues.

Why Are AI Ethics Important?

Recent studies indicate that more than 82% of companies are either utilizing or exploring the adoption of AI. Without ethical guidelines, organizations using AI would have free rein to do whatever they want with AI systems, regardless of the consequences.

Here's an overview of why adopting and adhering to AI ethics is so important:

- Ethical guidelines. AI ethics are crucial as they establish a framework for the responsible and beneficial use of these technologies.

- Responsible innovation. AI ethics provide guidelines that ensure AI development maintains a balance between innovation and the potential impacts on humanity.

- Public trust. A strong ethical foundation is crucial for building and maintaining public trust in AI systems. AI technologies are more likely to gain wide acceptance and support if they are used ethically.

- Protection of fundamental human rights. By incorporating ethical considerations into models, we ensure AI systems uphold human dignity, privacy, and autonomy.

- Sustainability. Considerations for long-term environmental impacts promote the creation of AI that is both environmentally responsible and beneficial to future generations.

A universal understanding of AI ethics also fosters global collaboration and shared responsibility. This aspect of AI ethics will be crucial in the coming years as governments worldwide grapple with the rapid evolution of AI technologies.

Ethical Concerns of Artificial Intelligence

AI raises several ethical issues that must be addressed to ensure its responsible and beneficial use. Below is a close look at the most notable ethical concerns surrounding the use of artificial intelligence.

Bias and Fairness

Bias in AI refers to systematic errors in the outputs of AI systems that unfairly disadvantage certain groups of people. These issues can manifest in several ways, including gender, racial, age, and socioeconomic biases. Biases can originate from multiple sources, including:

- Training data bias (i.e., if the training data contains biases or is not representative of the entire population).

- Algorithmic bias (i.e., when bias is unintentionally introduced through design choices, such as which features the model uses, what objective it optimizes, or how different classes and samples are weighted during training).

- User interaction bias (i.e., when human interactions with the model cause the system to adapt to feedback that reflects biased human behavior).
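One way to catch training data bias before a model is trained is to compare how often each group appears in the data set with its share of the population the system will serve. The Python sketch below is a minimal illustration, assuming records are plain dictionaries with a demographic attribute and that trustworthy reference shares (for example, census figures) are available. The function names and the 10-point tolerance are hypothetical choices, not a standard.

```python
from collections import Counter


def representation_gaps(records, reference_shares, attribute):
    """Return observed share minus expected share for each group.

    records: list of dicts holding a demographic attribute (hypothetical format).
    reference_shares: dict mapping group -> expected share of the population.
    """
    counts = Counter(record[attribute] for record in records)
    total = len(records)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


def underrepresented(gaps, tolerance=0.1):
    """List groups whose share falls short of the reference by more than the tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Toy data set: group "B" makes up 20% of records but 50% of the reference population.
records = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
gaps = representation_gaps(records, {"A": 0.5, "B": 0.5}, "group")
print(underrepresented(gaps))  # ['B']
```

A representative data set is necessary but not sufficient: a model trained on balanced data can still produce biased outputs, so a check like this complements, rather than replaces, monitoring of the model's actual decisions.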

Here are a few areas in which bias poses the most significant risks to AI implementations:

- Healthcare. Biases in diagnostic tools, recommendations, and patient care management can result in misdiagnoses or unequal access to medical resources.

- Hiring and employment. Biased AI recruitment tools can inadvertently favor specific demographics over others.

- Criminal justice. Biased AI algorithms in predictive policing, sentencing, and parole decisions can lead to unjust treatments and punishments.

- Finance. Biased AI systems in lending and credit scoring can discriminate against certain socioeconomic groups, affecting their access to loans and financial services.

- Education. AI learning tools and admission systems can reinforce existing educational disparities if they are biased against specific student populations.

Addressing bias in AI requires a mix of different strategies. Current go-to precautions are diverse and representative training data sets, transparency in algorithm design, and continuous monitoring of deployed models.
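Continuous monitoring usually means tracking fairness metrics on a deployed model's decisions. The sketch below computes one such metric, the disparate impact ratio (the lowest group approval rate divided by the highest), for a batch of decisions. The 0.8 threshold is an assumption borrowed from the "four-fifths rule" used as a rule of thumb in U.S. employment guidance; which metric and threshold fit a given system is a policy decision, and the (group, approved) data format is hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns group -> approval rate."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so this metric shows no disparity
    return min(rates.values()) / highest


def audit_batch(decisions, threshold=0.8):
    """Return the ratio and whether it clears the (assumed) threshold."""
    ratio = disparate_impact_ratio(decisions)
    return ratio, ratio >= threshold


# Toy batch: group A is approved 60% of the time, group B only 30%.
decisions = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
ratio, passed = audit_batch(decisions)
print(f"ratio={ratio:.2f}, passed={passed}")  # ratio=0.50, passed=False
```

Running a check like this on every batch of production decisions, and alerting when it fails, is one concrete form the "continuous monitoring" precaution can take.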

Data Privacy

AI systems require large volumes of data during model training. In many use cases, this data includes sensitive personal information such as health records, financial details, and personal communications.

Ensuring personal information is safe from unauthorized access, use, and disclosure is a priority in ethical AI. Threats to data privacy are a concern throughout the lifecycle of AI systems:

- Data collection. During the initial data collection, model admins must ensure they gather data ethically and with the consent of involved individuals.

- Data usage. Once collected, data must only be used in ways that respect individuals' privacy rights. AI systems should not use personal data for purposes beyond those for which it was initially collected without obtaining additional consent.

- Data storage. Ensuring that collected data is stored securely is paramount to preventing unauthorized access, breaches, and leakage.
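The data usage rule above (no new purposes without new consent) can be enforced in code by storing the purposes each person agreed to alongside their data and filtering on them before any processing. The following is a minimal Python sketch, assuming a hypothetical record type with a consented_purposes field; real consent management also has to handle withdrawal, expiry, and audit trails.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalRecord:
    """Hypothetical record that carries the purposes its subject consented to."""
    subject_id: str
    data: dict
    consented_purposes: set = field(default_factory=set)


def split_by_consent(records, purpose):
    """Separate records usable for `purpose` from those that need fresh consent first."""
    allowed, needs_consent = [], []
    for record in records:
        if purpose in record.consented_purposes:
            allowed.append(record)
        else:
            needs_consent.append(record)
    return allowed, needs_consent


records = [
    PersonalRecord("u1", {"age": 34}, {"service_delivery", "model_training"}),
    PersonalRecord("u2", {"age": 51}, {"service_delivery"}),
]
allowed, pending = split_by_consent(records, "model_training")
print([r.subject_id for r in allowed], [r.subject_id for r in pending])  # ['u1'] ['u2']
```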

Ensuring user data is not misused or exposed requires several precautions. Here are the most effective ones:

- Data minimization. Organizations should only collect data they require for model training and minimize the retention period to reduce privacy risks.

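- Federated learning. This technique trains a shared model across multiple decentralized devices or servers, each holding its own local data set. Participants exchange only model updates, so raw personal data does not have to be pooled in one place.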

- Anonymization and pseudonymization. Transforming data to protect identities while retaining its utility for analysis is a standard measure.

- In-use encryption. Techniques such as homomorphic encryption enable computation on encrypted data without decrypting it, which protects data privacy during processing.
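Data minimization and pseudonymization are often applied together before data reaches a training pipeline. The Python sketch below keeps only an allowlist of fields and replaces the direct identifier with a keyed hash (HMAC-SHA256) from the standard library. The field names, the allowlist, and the hard-coded key are hypothetical placeholders; in practice, the key would live in a secrets manager, separate from the data.

```python
import hashlib
import hmac

# Hypothetical placeholder key: load it from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Hypothetical allowlist: only the fields this particular training task needs.
FIELDS_NEEDED = {"age_band", "diagnosis_code"}


def pseudonymize_id(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same pseudonym, so records can still be
    linked. Unlike a plain hash, someone without the key cannot rebuild the
    mapping by hashing a list of known identifiers.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_and_pseudonymize(record: dict) -> dict:
    """Drop every field not on the allowlist and swap the ID for a pseudonym."""
    reduced = {name: value for name, value in record.items() if name in FIELDS_NEEDED}
    reduced["pid"] = pseudonymize_id(record["patient_id"])
    return reduced


record = {"patient_id": "P-001", "name": "Jane Doe", "age_band": "40-49", "diagnosis_code": "E11"}
print(minimize_and_pseudonymize(record))
```

Pseudonymized data can still count as personal data under regulations such as the GDPR, because whoever holds the key can re-link records to individuals. Full anonymization requires removing that possibility, which usually costs some analytical utility.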

In addition to technical measures, granting users control over their data is essential. Transparent usage policies, easy-to-understand consent mechanisms, and opt-out options are vital to ethical AI.

Learn about private AI, a concept that companies seek to adopt to ensure their data is processed and insights are generated with strict privacy and security controls.

Fraud and Misuse

Fraud and potential misuse are pressing concerns in AI ethics, especially as the technology becomes more sophisticated and pervasive. These issues can have widespread and damaging effects on individuals, organizations, and societies.

Malicious actors are increasingly using AI to commit fraud. Here are a few standard tactics:

- Deepfakes. AI-generated videos or audio that convincingly mimic real people enable threat actors to perform identity theft and manipulate targets into sharing sensitive information.

- Phishing attacks. AI can craft highly personalized phishing emails that are more likely to deceive the targeted recipients.

- Automated scams. AI chatbots can be used to execute scams at scale. Systems automatically interact with potential victims and extract sensitive information.

Artificial intelligence can also contribute to the creation and spreading of misinformation. AI algorithms can generate fake news articles, social media posts, and images that can be distributed widely and rapidly to sway public opinion. AI systems on social media can also inadvertently amplify misinformation by promoting content that generates high engagement regardless of its accuracy.

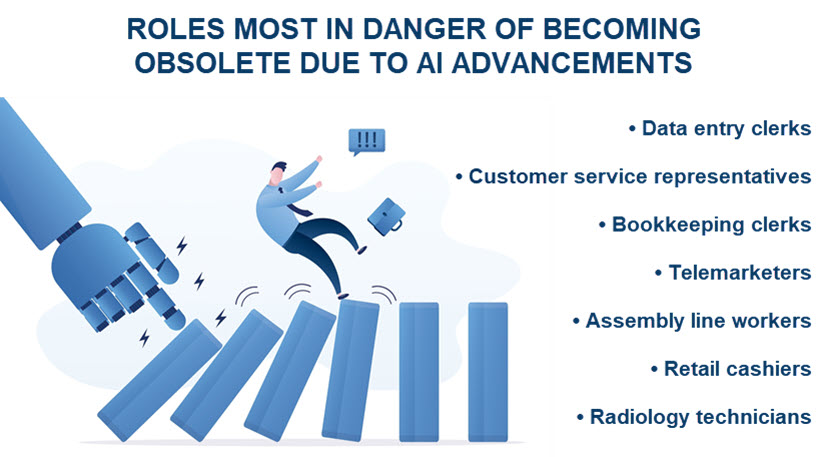

Job Displacement

Job displacement is a significant concern due to the rapid advancement of AI technologies, which are fundamentally altering the job market. Here are the main concerns within this area of AI ethics:

- Economic inequality. AI-driven automation is likely to exacerbate economic inequality by disproportionately affecting low- and middle-skill jobs. Workers in these roles may find it challenging to transition to new employment opportunities, which would widen existing disparities.

- Social disruption. The loss of jobs due to AI may potentially lead to social unrest and instability. Communities dependent on specific industries may face significant challenges if those industries undergo rapid AI-driven transformation.

- Loss of purpose. Work is often a source of personal fulfillment and identity, so job displacement can lead to a loss of purpose for individuals whose roles are replaced by AI.

Ensuring a fair transition for displaced workers is imperative to AI ethics. Affected workers should have access to retraining, education, and support to help them adapt to new roles and industries. Promoting growth in sectors such as software development, data analysis, and AI ethics will be crucial in offsetting job losses in other areas.

As for businesses, they have a responsibility to consider the long-term impacts of AI on their workforce. Organizations must take proactive steps to support employees through AI-related transitions.

Environmental Impacts

Environmental impacts are a significant concern in AI ethics due to the substantial resources required to develop, train, and deploy AI systems. These concerns encompass the following areas:

- Energy consumption. Training and running AI models, especially large-scale deep learning algorithms, require significant computational power. Models demand substantial amounts of energy, contributing to carbon emissions and exacerbating climate change.

- Electronic waste. Rapid advancements in AI technology can lead to shorter hardware lifecycles, contributing to electronic waste. Disposing of outdated AI hardware can be environmentally damaging if not managed properly.

- Resource usage. The production of hardware necessary for AI, such as GPUs and specialized chips, involves the extraction of finite natural resources, including rare earth elements and other critical minerals. Mining and processing these materials can have severe environmental impacts.

Companies can mitigate the environmental impact of AI by using renewable energy sources for their data centers and server rooms. Transitioning to solar, wind, and other clean energy sources will be vital to reducing carbon emissions. Advanced cooling systems, energy-efficient hardware, and sustainable design practices will also be key factors.
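A rough way to reason about a training run's footprint is to multiply the hardware's power draw by its running time, scale that by the data center's power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy), and then multiply by the carbon intensity of the electricity supply. The Python sketch below applies that back-of-the-envelope formula. Every number in it is a hypothetical placeholder, not a measurement, and real estimates also need to account for idle time, networking, storage, and hardware manufacturing.

```python
def training_energy_kwh(num_gpus, avg_power_kw, hours, pue):
    """Estimated facility energy: accelerator draw x runtime, scaled by PUE."""
    return num_gpus * avg_power_kw * hours * pue


def emissions_kg_co2e(energy_kwh, grid_intensity_kg_per_kwh):
    """Emissions = energy used x carbon intensity of the electricity supply."""
    return energy_kwh * grid_intensity_kg_per_kwh


# Hypothetical run: 8 GPUs averaging 0.4 kW each for 100 hours in a facility with a PUE of 1.2.
energy = training_energy_kwh(num_gpus=8, avg_power_kw=0.4, hours=100, pue=1.2)

# Hypothetical grid intensities (kg CO2e per kWh) for a fossil-heavy vs. a low-carbon supply.
for label, intensity in [("fossil-heavy grid", 0.4), ("low-carbon supply", 0.05)]:
    print(f"{label}: {emissions_kg_co2e(energy, intensity):.1f} kg CO2e from {energy:.0f} kWh")
```

With these placeholder values, the same run produces one-eighth of the emissions on the low-carbon supply, which is the arithmetic behind the renewable-energy recommendation above. Lowering PUE through better cooling shrinks the energy term itself.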

Model Transparency

Transparency and explainability refer to the extent to which AI decision-making mechanisms are understandable and accessible to humans. Here are the leading causes of low transparency in AI systems:

- Model complexities. Many advanced AI models, particularly complex deep neural networks (DNNs), operate as "black boxes" with internal workings that are not easily interpretable. This complexity makes it difficult to understand how models make specific decisions.

- The communication gap. Translating complex technical details into easily understandable explanations is inherently tricky.

- Lack of standardization. Different AI models require different approaches and techniques to achieve transparency and explainability.

- Disclosure of secrets. Providing detailed explanations of AI decision-making processes often reveals sensitive information about the model, which poses privacy and security risks.

Despite these issues, ensuring AI models have high levels of transparency is vital. Users who understand how an AI system makes decisions are more likely to trust its outputs. This trust is crucial in areas like healthcare and finance, where decisions have significant real-life consequences.
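Model-agnostic explainability techniques help even when a model's internals are opaque. One of the simplest is permutation feature importance: shuffle one input feature across the data set and measure how much the model's accuracy drops. The Python sketch below applies it to a hypothetical, deliberately simple credit-approval rule; with a real black-box model, you would call its prediction function instead. Permutation importance describes which features a model relies on overall, not why it made one particular decision, so it is usually combined with other methods.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(predict(row) == label for row, label in zip(rows, labels)) / len(labels)


def permutation_importance(predict, rows, labels, features, n_repeats=10, seed=0):
    """Average accuracy drop when each feature's values are shuffled across rows.

    A larger drop means the model relies more heavily on that feature.
    """
    rng = random.Random(seed)  # fixed seed keeps the audit reproducible
    baseline = accuracy(predict, rows, labels)
    importances = {}
    for feature in features:
        drops = []
        for _ in range(n_repeats):
            column = [row[feature] for row in rows]
            rng.shuffle(column)
            shuffled_rows = [{**row, feature: value} for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled_rows, labels))
        importances[feature] = sum(drops) / n_repeats
    return importances


# Hypothetical model: approves applicants whose income exceeds 50; it never reads zip_code.
def predict(row):
    return row["income"] > 50


rows = [{"income": income, "zip_code": f"Z{i}"} for i, income in enumerate(range(10, 101, 10))]
labels = [predict(row) for row in rows]  # toy labels that match the model exactly
print(permutation_importance(predict, rows, labels, ["income", "zip_code"]))
```

Here zip_code scores zero because the toy model ignores it. In a real audit, a high score for a feature like zip code could signal that the model is using it as a proxy for a protected attribute.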

Stakeholders in AI Ethics

Expecting each organization to define and enforce AI ethics guidelines independently is not a long-term solution. Instead, stakeholders are needed to set and enforce these guidelines at a regional or international level. Here are the types of stakeholders that can take on this responsibility:

- Policymakers. Governments can create and implement policies and regulations that govern the use of AI.

- International organizations. Entities like the United Nations (UN) and the International Organization for Standardization (ISO) can develop international standards for AI.

- Regulatory agencies. Bodies such as the European Commission and the U.S. Federal Trade Commission (FTC) can develop guidelines and enforce AI ethics within their respective jurisdictions.

- Ethics and research institutions. We can also rely on research centers and think-tank organizations to conduct studies and develop ethical AI frameworks.

While these entities dictate universal AI ethics, individual companies require in-house stakeholders to enforce rules. Here are the different roles commonly involved with this responsibility:

- C-level executives. Many CEOs and founders personally set the strategic vision and priorities for AI ethics. CTOs are also commonly involved with AI ethics since they already oversee the technological direction and implementation of AI systems.

- AI ethics officers. Some companies have dedicated roles or teams solely in charge of AI ethics.

- Legal and compliance teams. These teams monitor and enforce internal and external compliance, making them a common choice for ensuring adherence to AI ethics.

Some companies also rely on external consultants to define and help align with AI ethics. These external experts advise on best practices and help companies navigate complex ethical issues related to AI.

AI Ethics Standards and Regulations

Several initiatives have emerged over the last few years that seek to create a balanced and ethical approach to AI development.

The EU AI Act

The EU AI Act is the first-ever comprehensive legal framework on AI. It governs how AI systems are developed, placed on the market, and used in the EU, and it also applies to providers based outside the EU whose systems are used there. The goal of this act is to ensure AI systems respect fundamental human rights, safety, and ethical principles.

The EU AI Act categorizes systems into different risk levels:

- Minimal risk. AI systems within this category have no obligations under the AI Act as they pose minimal risk to EU citizens' rights and safety. AI systems like AI-enabled recommender systems and spam filters fall into this category.

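- Limited risk. AI systems in this category, such as chatbots, are subject to transparency obligations. For example, users must be informed that they are interacting with a machine.

- High risk. AI systems within this category must comply with strict requirements. Examples of high-risk systems include AI used in recruitment (such as CV-sorting software), credit scoring, and safety components of products such as robot-assisted surgery.

- Unacceptable risk. The EU AI Act bans systems that threaten people's fundamental rights, such as applications that manipulate human behavior.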

High-risk AI systems must meet the following requirements before they can be put on the market:

- Adequate risk assessment and mitigation systems.

- Activity logs that ensure traceability of results.

- High-quality data sets.

- Clear and adequate end-user information.

- Detailed documentation on the system's workings and its purpose.

- High level of overall robustness and security.

- Appropriate human oversight.
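Of these requirements, activity logging translates most directly into code. The Python sketch below appends one JSON line per decision with a timestamp, the model version, a digest of the inputs, the output, and the human reviewer, if any. The field names and the choice to store a SHA-256 digest instead of raw inputs are assumptions for illustration; the AI Act does not prescribe this format, and a production log would also need tamper protection and a retention policy.

```python
import hashlib
import io
import json
from datetime import datetime, timezone


def log_decision(log_file, model_version, inputs, output, reviewer=None):
    """Append one JSON line per decision so each result can be traced later."""
    # Hash the canonicalized inputs rather than copying raw (possibly personal) data into the log.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "output": output,
        "human_reviewer": reviewer,
    }
    log_file.write(json.dumps(entry) + "\n")
    return entry


# Usage with an in-memory file; a real system would write to append-only storage.
# "credit-model-1.3" and "analyst-7" are hypothetical identifiers.
log = io.StringIO()
log_decision(log, "credit-model-1.3", {"income": 52, "term_months": 36}, "approved", reviewer="analyst-7")
print(log.getvalue())
```

Because the inputs are canonicalized before hashing, an auditor holding the original input record can recompute the digest and confirm which logged decision it produced.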

The EU AI Act also prohibits certain AI practices, such as social scoring and, with narrow exceptions for law enforcement, real-time remote biometric identification in publicly accessible spaces.

The EU AI Act entered into force on August 1, 2024. Its prohibitions on unacceptable-risk AI practices apply from February 2, 2025, while most rules for high-risk AI systems apply from August 2, 2026.

Other Notable AI Frameworks

While the EU AI Act is the only official regulation of its kind, some organizations have developed frameworks that companies can voluntarily use as a basis for ethical AI.

UNESCO's Recommendation on the Ethics of Artificial Intelligence

UNESCO created a framework for the ethical use of AI. Here are the key principles of UNESCO's Recommendation on the Ethics of Artificial Intelligence:

- AI must respect human rights and dignity.

- AI must benefit all of humanity, promoting inclusivity and equality.

- Systems must be transparent and have clear accountability mechanisms.

- AI should contribute to environmental sustainability.

The Recommendation encourages all UNESCO member states to adopt these principles in their national AI policies.

IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

The IEEE has also developed a framework for ethical AI practices. Here are the core tenets of the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems:

- AI must prioritize human well-being and societal benefit.

- The development of AI use cases must be transparent.

- AI admins must ensure open communication about the model's processes and outcomes.

- Systems must be designed to be robust and safe, mitigating potential risks.

- Ethical considerations must be integrated into the design and deployment stages of AI.

Unlike UNESCO's Recommendation, which is addressed to the governments of member states, the IEEE's initiative aims to guide the engineers and organizations that create and use AI technologies worldwide.

OECD AI Principles

The Organisation for Economic Co-operation and Development (OECD) has established its own set of AI principles. Here's what the OECD AI Principles suggest organizations should adhere to:

- AI should contribute to global sustainable development and general well-being.

- Organizations creating AI systems must design them to respect human rights, fairness, and equity.

- AI actors should provide transparency and responsible disclosure.

- Systems relying on AI in any fashion must function robustly and safely.

- Organizations must be accountable for the outcomes and impacts of their AI systems.


The Future of AI Ethics

As different regions develop their own AI ethics guidelines, expect to see efforts to harmonize these standards to create a more cohesive global framework. International organizations like the UN, OECD, and ISO are likely to play a central role during this process.

Any global framework is likely to insist on integrating ethical considerations early in the AI development lifecycle. Expect future frameworks to require building precautions directly into the algorithms of AI models. The primary focuses of these precautions will likely be:

- Ensuring AI systems do not perpetuate or exacerbate existing biases.

- Protecting privacy rights during data collection and usage.

- Making AI decision-making processes understandable to users.

Governments will likely establish permanent regulatory bodies responsible for AI ethics, similar to financial regulatory authorities. These bodies will be responsible for monitoring and enforcing ethical standards in specific industries.

However, any regulatory body responsible for international supervision of AI ethics will face considerable challenges as it navigates the following factors:

- Different cultural perspectives on privacy, fairness, and transparency.

- Varying levels of regulatory maturity and enforcement capabilities.

- Diverse political ideologies and governance structures.

- Variations in technological infrastructure and capabilities across countries.

- Jurisdictional conflicts and disputes over authority.

Expect more efforts to increase public understanding of AI ethics as well, such as education programs and public awareness campaigns that help people become more informed about AI technologies.

Whatever ethical guidelines we set will need to be continuously updated to keep pace with technological advancements and emerging ethical issues. Ongoing evaluation and iterative review processes will be essential to adapting ethical principles to new AI applications and use cases.

Now's the Time to Pave the Way for Ethical AI

AI technologies are poised to transform the world at a speed unprecedented since the invention of the printing press nearly six hundred years ago. Whether this transformation is beneficial or detrimental largely depends on our ability to establish ethical guidelines for AI quickly. If we fail to establish these rules in time, the negative consequences of AI may outweigh its benefits.