Supervised and unsupervised learning are the two primary types of machine learning (ML). Both methods enable you to build ML models that learn from and adapt to input data. However, the two strategies train models in significantly different ways. Supervised and unsupervised learning also excel at different use cases, so choosing the correct learning method is vital when training an ML model.

This article explains the main differences between supervised and unsupervised machine learning. By the end, you'll have the information you need to make an informed decision about which learning technique best suits your ML use case.

Machine learning is a crucial subset of artificial intelligence (AI). Without ML, we could only create AI systems that rely on rule-based systems and symbolic reasoning.

What Is Supervised Machine Learning?

Supervised learning is a type of machine learning in which an ML model is trained on labeled input and output data. Labeled data consists of corresponding input-output pairs:

- Inputs (features). Inputs are the variables or attributes that the model uses to make predictions.

- Outputs (labels). Outputs are the target variables that the model aims to predict.

In labeled data, each input is associated with the correct output. For example, in a data set of medical images, each image would be associated with an output indicating whether the input picture contains signs of illness.

The goal of supervised learning is for the ML model to learn the relationship between inputs and outputs (a so-called mapping). During training, the model learns from labeled data by iteratively making predictions on inputs, comparing them with the correct answers, and adjusting its parameters to reduce the error.
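
The predict-compare-adjust loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production training routine: the toy data set, the `train` function, and the learning rate are all assumptions made for the example, and the model is a single straight line fitted with gradient descent.

```python
# Labeled data: each input x is paired with the correct output y (here y = 2x + 1).
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
labels = [1.0, 3.0, 5.0, 7.0, 9.0]

def train(inputs, labels, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by repeatedly predicting and correcting (hypothetical helper)."""
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(epochs):
        # 1. Make predictions with the current parameters.
        predictions = [w * x + b for x in inputs]
        # 2. Compare the predictions with the known labels.
        errors = [p - y for p, y in zip(predictions, labels)]
        # 3. Adjust the parameters along the gradient of the mean squared error.
        grad_w = 2 * sum(e * x for e, x in zip(errors, inputs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(inputs, labels)
print(round(w, 2), round(b, 2))  # approximately 2.0 and 1.0
```

The learned parameters recover the rule hidden in the labels, which is the "mapping" the model is meant to find.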

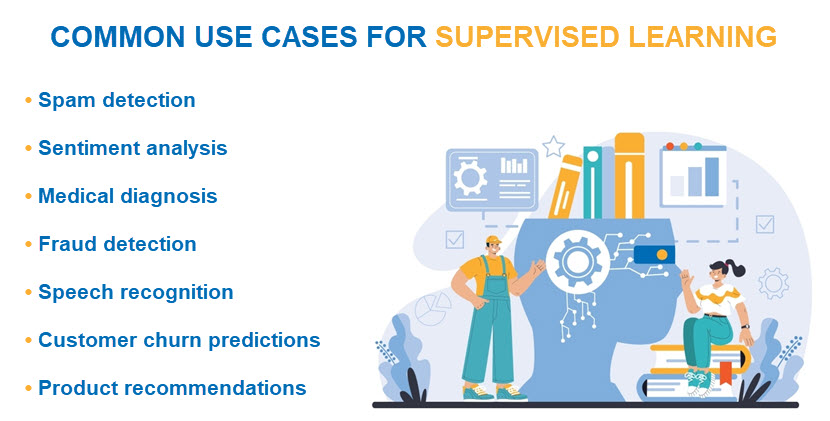

Once trained on enough labeled examples, the ML model makes reliable predictions on new, previously unseen data. Supervised learning is the go-to method of training for ML models that perform one of two tasks:

- Classification. Supervised learning is excellent at training models to classify data into two (binary classification) or multiple (multiclass classification) categories. A common example of ML classification is deciding whether an email is potentially loaded with malware.

- Regression. The other type of task suitable for supervised machine learning is predicting a numeric outcome based on specific indicators. The output here is a continuous value, such as price or probability.

Models trained with supervised machine learning can be highly accurate in their assigned tasks. However, you need a large, labeled data set to train a model this way, and this data can be challenging, expensive, or impossible to gather for certain use cases.

Types of Supervised Learning

Supervised learning encompasses a variety of methods for training models to classify data and make predictions. Here are the most common supervised learning techniques:

- Linear regression. This algorithm finds linear relationships between the input features and corresponding outputs. Linear regression is commonly used for sales forecasts or to predict continuous values such as house prices.

- Logistic regression. This algorithm estimates the probability that a given input belongs to a particular class. This type of regression uses a logistic function to map any real-valued number to a value between 0 and 1, which is interpreted as a probability. Logistic regression is often used for binary classification tasks.

- Decision trees. Decision trees are algorithms for classification and regression that split the data into subsets based on specific criteria. The splits occur at nodes that lead to different branches, and the branches lead to additional nodes or leaves until the model reaches a final prediction.

- Random forests. Random forests combine multiple decision trees to solve problems. The final prediction is made by averaging the predictions of all trees (regression) or by majority voting (classification). Random forests reduce the risk of overfitting, an issue in which a model fits the noise in its training data and therefore performs poorly on new data.

- Support Vector Machines (SVM). SVM works by finding the best boundary (or hyperplane) that separates different classes of data points. The goal is to maximize the distance between the closest points of each class and the boundary. SVM is commonly used for image classification and text categorization.

- Neural networks. These networks consist of layers of interconnected neurons. They learn complex patterns in data through backpropagation, a process during which the network adjusts its parameters based on prediction errors. Neural networks are excellent at image and speech recognition as they capture intricate data patterns.
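
Two of the mechanisms in the list above are small enough to show directly: the logistic function used by logistic regression, and the way a random forest combines the outputs of its trees. The sketch below assumes the per-tree predictions are already available; the function names are illustrative and do not come from any particular library.

```python
import math
from collections import Counter
from statistics import mean

def logistic(z):
    """Map any real number z to a value strictly between 0 and 1."""
    # Two algebraically equal forms avoid overflow for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def forest_classify(tree_votes):
    """Classification: return the class predicted by the most trees."""
    return Counter(tree_votes).most_common(1)[0][0]

def forest_regress(tree_outputs):
    """Regression: return the average of the trees' numeric predictions."""
    return mean(tree_outputs)

print(logistic(0.0))                             # 0.5
print(forest_classify(["spam", "ham", "spam"]))  # spam
print(forest_regress([10.0, 12.0, 14.0]))        # 12.0
```

A logistic output above a chosen threshold (commonly 0.5) is then treated as the positive class.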

Our guides to neural networks and deep nets (DNNs) provide an in-depth look at how neural networks work and what kinds of systems they enable us to create.

Supervised Learning Advantages

Supervised learning offers several benefits that make this technique a popular choice for data-driven decision-making and predictive analytics. Here are the main advantages of supervised learning:

- High accuracy. Supervised learning enables ML models to achieve high predictive accuracy. Models learn from labeled examples, which allows them to generalize well to previously unseen data of the same format as the training data.

- Wide range of applications. Supervised learning is applicable to a variety of regression and classification tasks. This versatility makes supervised learning useful in various fields, including finance, healthcare, marketing, and more.

- Interpretability. Some supervised models, such as linear regression and decision trees, are relatively easy to interpret, whereas others (for example, deep neural networks) are much harder to explain. Where it is available, this interpretability allows adopters to understand how predictions are made, which is crucial for mission-critical decision-making and highly regulated industries.

- Guided learning. Since the model learns from labeled data, the training process is guided by known outcomes. As a result, models typically converge toward a good solution faster than with approaches that lack a direct error signal.

- Simple evaluations. Supervised learning allows for straightforward model performance evaluation using accuracy, precision, recall, and F1-score metrics. Additionally, cross-validation techniques help ensure the model generalizes well to new data.

- Incremental learning. Supervised learning models can be continuously improved by retraining with new data. Adopters get to enhance the model's performance over time as more labeled data becomes available.

Remember that all the benefits of supervised learning rest on the availability of high-quality labeled data. Without sufficient training data quality or quantity, no amount of supervised learning will enable you to build reliable ML models.
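
The evaluation metrics named above are simple to compute once true labels and predictions sit side by side. The following sketch assumes a binary task in which `1` marks the positive class; the `classification_metrics` helper and the sample labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # correct labels
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]  # model predictions
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # every metric equals 0.75 for this sample
```

Here the model makes one false positive and one false negative out of eight examples, which is why all four metrics land on the same value.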

Supervised Learning Disadvantages

While supervised learning offers many advantages, this type of machine learning also has a few must-know drawbacks. Here are the main challenges of supervised learning:

- Labeled data availability. Supervised learning requires a significant amount of labeled data to train the model effectively. Acquiring and labeling this data is time-consuming, expensive, and labor-intensive.

- Label quality and consistency. The performance of supervised learning models heavily depends on the quality of the labels. Inconsistent or incorrect labeling leads to poor model performance and unreliable predictions.

- Feature engineering. Poorly chosen features (input variables) often significantly degrade model performance. Adopters require considerable expertise to avoid mistakes while preparing training data.

- Scalability issues. Supervised learning algorithms often struggle to scale with very large data sets. Scalability issues are common both in terms of memory and computational power.

- Inability to discover new patterns. Supervised learning is constrained by the patterns present in the training data. A trained model cannot discover new patterns or structures beyond the provided labels.

- High computational cost. Training supervised learning models with large data sets or complex algorithms is computationally expensive and time-consuming. Most adopters cannot afford to run the necessary hardware for supervised learning in-house.

Our Bare Metal Cloud (BMC) enables you to deploy powerful GPU instances (dual Intel Max 1100 GPUs) ideal for demanding ML and AI tasks. As an extra benefit, our GPU servers also make it simple to set up an ML environment.

What Is Unsupervised Machine Learning?

Unsupervised learning is a type of machine learning where the model is trained on unlabeled data, in which inputs do not have corresponding output labels. The goal is to enable the model to learn patterns and structures from unlabeled data without explicit human guidance.

Since the model only receives input data, unsupervised learning algorithms attempt to identify hidden data patterns, correlations, and structures on their own. This approach provides insights into intrinsic properties of the data that may not be apparent to a human analyst.

Unsupervised learning models do not predict a predefined label. Instead, they group data or describe its structure. Models trained with unsupervised learning excel at the following tasks:

- Clustering. This data mining technique groups similar data points into clusters based on their similarities or differences.

- Dimensionality reduction. Reducing data dimensionality requires the ML model to reduce the number of features (i.e., variables) while preserving as much information in the input data as possible.

- Association. The goal of association tasks is to discover relationships or associations between variables in the data.

- Anomaly detection. Detecting anomalies requires the ML model to identify rare items and events within the provided data.
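
To make the association task from the list above concrete, the sketch below counts how often items appear together in a handful of shopping baskets. The `support` and `confidence` measures are the standard ones used in market basket analysis; the transactions themselves are invented for the example.

```python
# Each transaction is the set of items bought together (made-up data).
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "butter"},
    {"bread", "butter", "jam"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t) / len(transactions)

def confidence(antecedent, consequent, transactions):
    """How often the consequent appears in transactions that contain the antecedent."""
    base = support(antecedent, transactions)
    if base == 0:
        return 0.0
    return support(set(antecedent) | set(consequent), transactions) / base

print(support({"bread", "butter"}, transactions))       # 0.6
print(confidence({"bread"}, {"butter"}, transactions))  # about 0.75
```

No labels are involved: the rule "bread often goes with butter" emerges purely from co-occurrence in the input data.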

While supervised learning models are typically more accurate on tasks with a known target, unsupervised learning does not require adopters to label data before processing inputs. For example, an unsupervised learning model can cluster images by the objects they contain (e.g., people, animals, buildings) without being told what those objects are ahead of time.

Types of Unsupervised Learning

Below is an overview of the most commonly used unsupervised learning algorithms and techniques:

- K-means clustering. This algorithm partitions data into k clusters, where k is a number chosen in advance. Each data point is assigned to the nearest cluster center, and the cluster centers are recalculated iteratively. K-means clustering is helpful for market segmentation, image compression, and document clustering.

- Hierarchical clustering. This unsupervised learning technique builds a hierarchy of clusters either through a bottom-up approach (agglomerative) or a top-down approach (divisive). Hierarchical clustering is beneficial for document organization and social network analysis.

- DBSCAN. The DBSCAN algorithm groups data points that are closely packed and marks points that lie alone as outliers. DBSCAN effectively discovers clusters of varying shapes and sizes, so many adopters use the algorithm for spatial data analysis and noise filtering.

- Principal Component Analysis (PCA). PCA transforms data into a set of uncorrelated components that maximize variance. This process reduces data dimensionality. PCA is widely used in gene expression analysis, image compression, and exploratory data analysis.

- Isolation forests. This technique builds an ensemble of trees by randomly selecting a feature and splitting the data. The algorithm then detects anomalies by looking for points that require fewer splits to isolate. Isolation forests are often used in fraud detection and network security.

- One-class SVM. This method learns a boundary that separates normal data points from outliers. One-class SVM is beneficial for high-dimensional data and is often applied in anomaly detection tasks, such as detecting manufacturing defects or credit card fraud.
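
As an illustration of the first technique in the list, here is a minimal k-means sketch in plain Python (it relies on `math.dist`, available from Python 3.8). The `kmeans` function, the random initialization from existing points, and the toy data are assumptions made for this example; library implementations add smarter initialization and multiple restarts.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Alternate between assigning points to the nearest center and recomputing centers."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k randomly chosen data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its members.
        new_centers = []
        for i, members in enumerate(clusters):
            if members:
                new_centers.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centers.append(centers[i])  # keep an empty cluster's center in place
        if new_centers == centers:
            break  # assignments can no longer change
        centers = new_centers
    return centers, clusters

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centers, clusters = kmeans(points, k=2)
print(sorted(centers))
```

On this well-separated data, the two centers settle near the middle of each group even though the algorithm never sees a label.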

Check out our article on the market's top AI processors if you plan to run some compute-heavy AI or ML workloads on in-house hardware.

Unsupervised Learning Advantages

Unsupervised learning offers significant benefits in scenarios where supervised learning is not an option due to the lack of labeled training examples. Here are the main advantages of unsupervised learning:

- No need for labeled data. Unsupervised learning does not require labeled data, which is often expensive and time-consuming to obtain.

- Discovery of hidden patterns. Unsupervised learning algorithms are excellent at identifying hidden patterns, correlations, and structures in data. This capability leads to the discovery of insights and relationships that may not be apparent through manual analysis.

- Dimensionality reduction. Unsupervised learning algorithms are ideal for reducing data dimensionality while preserving essential information and overall data integrity.

- Anomaly and outlier detection. Unsupervised learning is effective at detecting anomalies and outliers in the provided data. This capability is helpful in various applications, such as fraud detection, network security, and quality control.

- Model pre-training. Many ML adopters use unsupervised learning for pre-training models. Once the model identifies the underlying patterns in the data, the adopter feeds it with labeled data for fine-tuning. This approach, which often relies on self-supervised learning objectives, significantly improves model performance, particularly in the fields of natural language processing (NLP) and computer vision.

- Cost-effectiveness. Eliminating the need for labeled data reduces the cost of ML training. Adopters can start model training sooner because they bypass expensive and time-consuming data labeling.
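
The dimensionality reduction benefit listed above can be illustrated by projecting two-dimensional points onto a single axis. The sketch below finds the first principal component with power iteration, a simple stand-in for the full eigendecomposition that PCA libraries typically use; the function name and the data are assumptions made for the example.

```python
import math

def first_principal_component(rows, iterations=200):
    """Return the direction of greatest variance and the data projected onto it."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeated multiplication converges to the dominant eigenvector,
    # provided the starting vector is not orthogonal to it.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    projected = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, projected

rows = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8), (5.0, 5.1)]
direction, projected = first_principal_component(rows)
print(direction)  # both components close to 0.71, i.e. roughly the y = x diagonal
print(projected)  # one number per point instead of two
```

Because the points lie almost on a line, a single coordinate along that line preserves nearly all of the variation in the original two features.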

Check out our article on IT cost reductions to see the most effective strategies for lowering IT-related expenses.

Unsupervised Learning Disadvantages

While unsupervised learning has several unique advantages, this ML type also has a few notable problems. Here are the main disadvantages of unsupervised learning:

- Lack of interpretability. The results of unsupervised learning are often hard to interpret. The absence of labels makes it challenging to understand the meaning and significance of identified clusters and patterns.

- No clear metrics. Evaluating the performance of unsupervised learning models is challenging due to the lack of ground truth labels for comparison. Standard evaluation metrics, such as accuracy and precision, cannot be computed without labels, so adopters must use less intuitive metrics like silhouette scores and the Davies-Bouldin index.

- Resource-intensity. Many unsupervised learning algorithms are computationally intensive as they must process vast volumes of noisy data. Adopters often encounter scalability issues when working with large data sets.

- Overfitting problems. Unsupervised machine learning often suffers from overfitting as models train on noisy, unlabeled data. Correct regularization techniques and careful model selection are necessary to improve generalization performance.

- Dependence on feature quality. The effectiveness of unsupervised learning heavily depends on the quality of the features used. Poorly chosen features lead to poor clustering or pattern recognition.
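
The silhouette score mentioned in the list above needs only the data points and their cluster assignments, which is why it works without ground truth labels. Below is a minimal sketch that assumes at least two clusters and Euclidean distance; the helper name and the sample points are illustrative.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette over all points; values near 1 indicate tight, well-separated clusters."""
    scores = []
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points)
               if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)  # common convention for a cluster with a single point
            continue
        a = sum(own) / len(own)  # mean distance to the point's own cluster
        b = min(                 # mean distance to the nearest other cluster
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == other)
            / labels.count(other)
            for other in set(labels) if other != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
good = silhouette_score(points, [0, 0, 0, 1, 1, 1])   # clusters match the two groups
mixed = silhouette_score(points, [0, 1, 0, 1, 0, 1])  # clusters cut across the groups
print(good > mixed)  # True
```

Comparing scores across candidate clusterings, as done here, is more informative than reading a single score in isolation.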

Our article on artificial intelligence examples presents the most popular uses of AI technologies across different industries.

Supervised vs. Unsupervised Machine Learning

The table below provides a supervised vs. unsupervised learning comparison that highlights the respective strengths and limitations of both ML types.

| Point of Comparison | Supervised Machine Learning | Unsupervised Machine Learning |
|---|---|---|
| Input Data Requirements | Requires labeled data. | Requires unlabeled data. |
| Primary Goal | Classify data or make predictions. | Group data points or find hidden structures in input data. |
| Model Outcome | Specific predictions or classifications. | Data groupings. |
| Most Popular Algorithms and Techniques | Linear regression, logistic regression, decision trees, and neural networks. | K-means clustering, hierarchical clustering, and DBSCAN. |
| Most Common Applications | Image classification, spam detection, medical diagnosis, credit scoring, and sentiment analysis. | Customer segmentation, anomaly detection, market basket analysis, dimensionality reduction, and feature learning. |
| Human Supervision | Requires human intervention to provide labeled training data. | Does not require human intervention or explicit guidance. |
| Main Advantage | High accuracy. | Ability to discover hidden patterns in input data. |
| Main Disadvantage | Requires large amounts of labeled data. | Evaluation challenges due to the absence of labels. |
| Go-To Evaluation Metrics | Accuracy, precision, recall, and F1-score. | Silhouette score and the Davies-Bouldin index. |
| Feature Engineering Importance | Vital for improving performance. | Crucial for meaningful clustering and pattern identification. |
| Adaptability | Adapts only to well-defined, specific tasks. | Adapts to exploratory tasks and feature learning. |
| Use in Pre-Training | Not common, as models directly use labeled data for training. | Commonly used to pre-train models, which are then fine-tuned with supervised learning. |
| Data Noise Sensitivity | Handles noise if the labeled data is accurate. | Struggles with noise, as there are no labels to guide the learning process. |

How to Choose Between Supervised and Unsupervised Learning

The right learning method for your ML model depends on the available training data and the intended use of the model. Go with supervised learning if your use case meets the following criteria:

- The goal of the ML model is to predict an outcome or classify data based on input features.

- You have a labeled data set where each data point has an associated label (e.g., class, category, value).

- You need to evaluate the model's performance using clear metrics (e.g., accuracy, precision, recall).

On the other hand, unsupervised learning is the right option when your use case meets the following criteria:

- The goal of the ML model is to discover hidden patterns or structures that human analysts were unable to identify.

- You have vast amounts of unlabeled data that are either impossible or impractical to label.

- You want to reduce the number of features in your data or identify unusual events in the input.

Remember that you can also opt for semi-supervised machine learning. This approach is a happy medium in which the adopter uses a training data set that combines both labeled and unlabeled examples.

Semi-supervised learning helps improve model performance without the need for extensive labeling efforts. For example, a radiologist could label a small subset of CT scans for diseases so the machine can more accurately predict which patients require medical attention. The remaining millions of images stay unlabeled, but a few thousand labeled images help the model improve its accuracy.
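
One simple way to combine labeled and unlabeled examples is self-training, in which a model fitted on the few labeled points assigns pseudo-labels to the unlabeled ones. The sketch below is only one of several semi-supervised strategies and is not tied to the CT scan scenario: the nearest-centroid model, the two-dimensional toy features, and the class names "A" and "B" are all assumptions made for illustration.

```python
import math

def centroid(points):
    """Mean position of a list of equally sized coordinate tuples."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))

def self_train(labeled, unlabeled):
    """Pseudo-label unlabeled points one at a time, most confident first."""
    labeled = list(labeled)
    remaining = list(unlabeled)
    while remaining:
        # Fit: one centroid per class from everything labeled so far.
        by_class = {}
        for point, label in labeled:
            by_class.setdefault(label, []).append(point)
        centroids = {label: centroid(pts) for label, pts in by_class.items()}

        def nearest_label(p):
            return min(centroids, key=lambda label: math.dist(p, centroids[label]))

        # Treat the point closest to any centroid as the most confident prediction.
        best = min(remaining, key=lambda p: math.dist(p, centroids[nearest_label(p)]))
        labeled.append((best, nearest_label(best)))
        remaining.remove(best)
    return labeled

labeled = [((1.0, 1.0), "A"), ((9.0, 9.0), "B")]  # the small hand-labeled subset
unlabeled = [(1.5, 1.2), (8.5, 9.2), (2.0, 2.5), (7.5, 8.0)]
result = dict(self_train(labeled, unlabeled))
print(result[(2.0, 2.5)], result[(7.5, 8.0)])  # A B
```

Pseudo-labels can reinforce early mistakes, so practical setups usually accept only predictions above a confidence threshold and validate against held-out labeled data.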

Choose the Right Learning Method and Get Your ML Project off to a Good Start

The choice between supervised and unsupervised machine learning depends on the specific goals of your ML project and the available training data. Choosing the wrong option is a costly mistake, so use what you learned here to clearly define whether your upcoming ML model is a better fit for supervised or unsupervised learning.