In 2022, the world created 97 zettabytes of data. Recent estimates project we will reach 175 zettabytes by 2025. To put these figures into perspective, if you were to print out 175 zettabytes of text, the amount of paper you would use could cover planet Earth 10 times.

Most of this information is stored in data centers – buildings dedicated to housing computer servers. These servers power the digital world, running everything from websites and social media platforms to streaming services and cloud computing applications.

Data centers are the backbone of the internet and are essential to our way of life. However, they are not sustainable. Data centers consume 3% of the world's electricity and produce 2% of its greenhouse gases – more than the aviation industry.

The data center industry has grown exponentially since its inception, but this rapid growth is straining our planet's resources and generating a significant amount of waste. With the sector predicted to grow further, how do we reconcile this expansion with the impending environmental crisis?

This article explores what data center sustainability is, why it's essential, and how we can achieve it.

What Does Data Center Sustainability Mean?

Data center sustainability is the practice of designing, operating, and maintaining data centers in a way that minimizes their environmental impact.

Simply put, sustainability is about using less and wasting less to preserve the future of our planet and its resources for the next generation.

How is Data Center Sustainability Measured?

By quantifying sustainability, we can identify areas for improvement and implement meaningful changes.

Here are the most common metrics for measuring data center sustainability.

Power Usage Effectiveness (PUE)

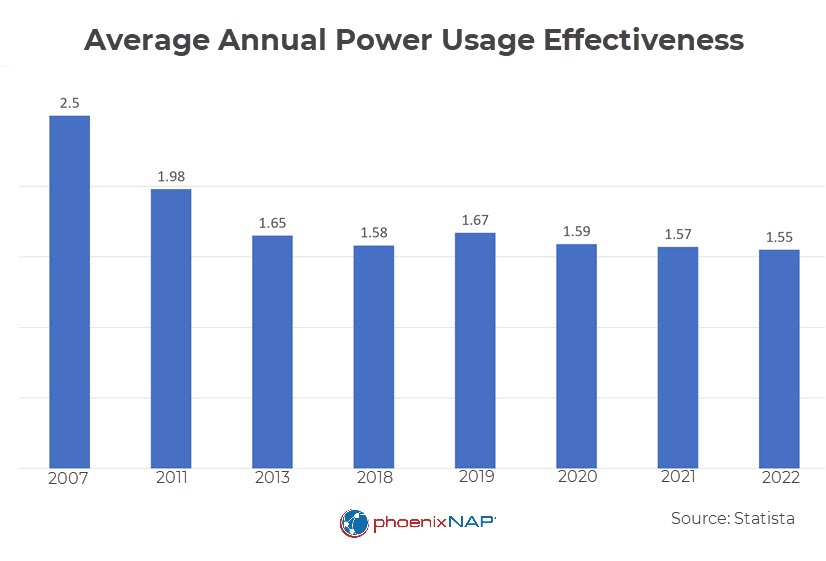

Power Usage Effectiveness (PUE) measures how efficiently a data center uses power. It is calculated by dividing the total energy consumed by the data center by the energy consumed by the IT equipment. A lower PUE indicates greater efficiency.

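For example, a PUE of 1.2 means that for every kilowatt-hour delivered to IT equipment, the facility draws another 0.2 kilowatt-hours for non-IT loads such as cooling systems and lighting. That overhead equals 20% of the IT energy, or about 17% of total energy (0.2 / 1.2). A PUE of 1.0 would be ideal, but some energy always goes to non-IT loads and losses, even with the most efficient equipment and practices.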
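
The calculation is simple enough to sketch in a few lines of Python. The function names and the meter readings below are hypothetical; the sketch only shows how PUE is derived and how the same value can be expressed either as overhead relative to IT energy or as a share of total energy.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def non_it_share_of_total(pue_value: float) -> float:
    """Fraction of total facility energy that goes to non-IT loads (cooling, lighting, losses)."""
    return 1 - 1 / pue_value


# Hypothetical annual meter readings: 1,200 MWh for the whole facility, 1,000 MWh for IT gear.
ratio = pue(total_facility_kwh=1_200_000, it_equipment_kwh=1_000_000)
print(f"PUE: {ratio:.2f}")
print(f"Non-IT overhead relative to IT energy: {ratio - 1:.0%}")
print(f"Non-IT share of total energy: {non_it_share_of_total(ratio):.1%}")
```

With these inputs the sketch reports a PUE of 1.20, an overhead of 20% relative to IT energy, and a non-IT share of about 16.7% of total energy.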


Carbon Footprint

The carbon footprint of a data center is the total amount of greenhouse gases it emits. Most data centers don't burn fossil fuels on site, except during grid outages, when they may run diesel backup generators. However, the electricity they draw from coal- and natural gas-fired power plants still counts toward their carbon footprint.

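For example, a data center that consumes 100 megawatt-hours of electricity annually from a grid emitting about 0.43 metric tons of CO2 per megawatt-hour would have a carbon footprint of about 43 metric tons of CO2. If the same electricity came entirely from coal-fired plants, which emit roughly 1 metric ton of CO2 per megawatt-hour, the footprint would be more than twice as large.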
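
Estimating the footprint of purchased electricity comes down to multiplying energy consumed by an emission factor. The sketch below assumes illustrative emission factors: 0.43 t CO2/MWh reproduces the 43-ton figure in the example, and roughly 1 t CO2/MWh is typical of coal-fired generation. These are assumptions for illustration, not official grid data.

```python
def carbon_footprint_tonnes(energy_mwh: float, emission_factor_t_per_mwh: float) -> float:
    """Emissions attributable to purchased electricity: energy consumed x emission factor."""
    return energy_mwh * emission_factor_t_per_mwh


# Illustrative emission factors in metric tons of CO2 per MWh (assumptions, not official data).
EMISSION_FACTORS_T_PER_MWH = {
    "grid mix (example)": 0.43,
    "coal-fired generation (approx.)": 1.0,
    "wind or solar (operational only)": 0.0,
}

annual_energy_mwh = 100  # the consumption figure used in the example
for source, factor in EMISSION_FACTORS_T_PER_MWH.items():
    tonnes = carbon_footprint_tonnes(annual_energy_mwh, factor)
    print(f"{source}: {tonnes:.0f} t CO2 per year")
```

The point of the comparison is that the same 100 MWh can carry very different footprints depending on where the electricity comes from.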

Water Usage

Many data centers use water to cool their servers through evaporative cooling, which works by spraying water into the air so that it evaporates and absorbs heat. Water usage fluctuates seasonally, with lower consumption in winter and higher demand in summer: as outdoor temperatures rise, cooling systems need more energy and water to regulate the facility's internal air temperature.

Monitoring water usage is relatively simple: it involves tracking the amount of water used over a given period. Efficiency is measured with a metric called Water Usage Effectiveness (WUE), calculated by dividing a data center's total water consumption by the energy consumed by its IT equipment and usually expressed in liters per kilowatt-hour (L/kWh). The lower the WUE, the more efficiently the data center uses water.
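
As a rough sketch, WUE can be computed from two meter readings per period. The monthly figures below are hypothetical and chosen only to illustrate the seasonal pattern described above; the `wue` helper is an illustrative name, not a standard API.

```python
def wue(site_water_liters: float, it_energy_kwh: float) -> float:
    """Water Usage Effectiveness: liters of water per kWh of IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return site_water_liters / it_energy_kwh


# Hypothetical monthly readings: (liters of water consumed, kWh used by IT equipment).
monthly_readings = {
    "January": (900_000, 720_000),
    "July": (1_800_000, 750_000),
}

for month, (water_liters, it_kwh) in monthly_readings.items():
    print(f"{month}: WUE = {wue(water_liters, it_kwh):.2f} L/kWh")
```

Here January comes out at 1.25 L/kWh and July at 2.40 L/kWh: similar IT energy, but warmer weather drives more evaporative cooling.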

Waste Production

Data centers generate a significant amount of waste, including electronic waste (e-waste), construction waste, and food waste.

Of these three types, e-waste is the most problematic because disused equipment contains lead, cadmium, mercury, arsenic, and other hazardous materials. As e-waste degrades, these substances can leach into soil and water, posing a severe threat to human health and the environment.

The Current State of Data Center Sustainability

Alongside the growth of workloads, data center efficiency has improved remarkably. The average PUE worldwide is about 1.55 and has steadily decreased for over a decade. Between 2015 and 2021, data center electricity consumption increased by an estimated 10% to 60%, while workloads grew by 260% and internet traffic by 440%.
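
A back-of-the-envelope way to see what these figures imply is to divide energy growth by workload growth. This sketch simplifies by treating "workload" as a single comparable unit over the whole period, which is an assumption; the helper name is illustrative.

```python
def energy_per_unit_workload(energy_growth_pct: float, workload_growth_pct: float) -> float:
    """Energy per unit of workload at the end of a period, relative to the start."""
    return (1 + energy_growth_pct / 100) / (1 + workload_growth_pct / 100)


WORKLOAD_GROWTH_PCT = 260  # 2015-2021 workload growth quoted in the text
for energy_growth_pct in (10, 60):  # low and high ends of the quoted electricity growth
    ratio = energy_per_unit_workload(energy_growth_pct, WORKLOAD_GROWTH_PCT)
    print(f"+{energy_growth_pct}% energy -> {ratio:.2f}x energy per unit of work "
          f"({1 - ratio:.0%} less)")
```

Under that simplification, energy per unit of workload fell by roughly 56% to 69% over the period.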

Additionally, data center operators used 50% more renewable power in 2023 and were collectively the largest corporate buyers of renewable energy, accounting for over half of all corporate renewable energy purchases in the U.S. Many operators have pledged to become carbon neutral by 2030, with some even building their own renewable energy infrastructure.

Despite the positive trend, the current state of sustainability is mixed, with significant challenges ahead.

Energy Consumption

In the United States, data centers consume around 90 billion kilowatt-hours annually, about 2% of the nation's electricity consumption. This already vast figure is expected to grow in the coming years, driven by demand for resource-intensive technologies like artificial intelligence.

While data centers are essential to the modern way of life, their energy consumption strains an already fragile electrical grid. This is especially concerning given other pressures on the grid, such as rising energy demand, climate change, the spread of electric vehicles, and natural gas prices.

Water Consumption

Reliable statistics on data center water usage are scarcer, as half of data center operators worldwide do not collect water usage data. However, a study from 2018 estimated that U.S. data centers used 5.13 billion cubic meters of water annually. The average data center has a WUE of 1.8 L/kWh, meaning it uses 1.8 liters of water for every kilowatt-hour of energy consumed by its IT equipment.

Data center water usage is especially problematic in water-stressed regions, as it strains local resources and deprives communities of access to clean water.

Waste Production

Humans generate 50 million tons of e-waste annually, more than the combined weight of all commercial airliners ever manufactured. Only 20% of this waste is formally recycled.

Data centers are major contributors to e-waste, but they are not alone in creating this problem. In the U.S., no federal law requires individuals to recycle their discarded electronics, and many of these devices end up in household trash. To address this challenge, we must implement policies that incentivize recycling and educate people about the environmental impact of e-waste.

How Is Sustainability Achieved in Data Centers?

The data center industry has many avenues to diminish its environmental impact.

Here are the elements of a sustainable data center.

Renewable Energy

Data centers can significantly reduce their carbon footprint by incorporating renewable energy sources such as solar and wind.

The transition to renewables is also increasingly cost-effective. The price of renewable energy has steadily decreased over the years, and it is now cheaper than fossil fuels in many places worldwide. Research suggests that renewables will soon become the cheapest form of energy for almost all applications. Furthermore, abandoning fossil fuels by 2050 could save the global economy trillions of dollars.

Here are the two primary methods for implementing renewables:

- Direct connection to renewable sources. Data centers can connect directly to renewable energy sources, such as solar panels or wind turbines. The direct connection can reduce the data center's reliance on the grid and lower its carbon footprint.

- Purchase of renewable energy certificates (RECs). RECs let you support renewable energy without generating electricity yourself. Each REC represents one megawatt-hour of electricity generated from a renewable source and delivered to the grid, so buying RECs pays for that renewable generation. By supporting renewable generation, RECs help reduce the overall carbon intensity of electricity, which benefits everyone, including data centers.

In addition to these two methods, data center operators can shift workloads among data centers based on how much renewable electricity each region's grid can supply. For example, if a data center in one region is experiencing a shortage of renewables, the operators can move workloads to another region with surplus renewable energy.
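
The core decision can be sketched as: among regions with enough spare capacity, pick the one whose grid currently has the highest renewable share. This is a minimal illustration under assumed inputs; the `Region` type, region names, shares, and capacities are all hypothetical, and real schedulers also weigh latency, data residency, and cost.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Region:
    name: str
    renewable_share: float  # current fraction of grid supply from renewables (0.0-1.0)
    spare_capacity: int     # workload units the region can still accept


def pick_region(regions: list[Region], demand: int) -> Optional[Region]:
    """Among regions with room for `demand`, return the one with the highest renewable share."""
    candidates = [r for r in regions if r.spare_capacity >= demand]
    if not candidates:
        return None
    return max(candidates, key=lambda r: r.renewable_share)


# Hypothetical snapshot of three regions.
regions = [
    Region("region-a", renewable_share=0.35, spare_capacity=500),
    Region("region-b", renewable_share=0.80, spare_capacity=120),
    Region("region-c", renewable_share=0.65, spare_capacity=900),
]

for demand in (100, 300):
    target = pick_region(regions, demand)
    print(demand, "->", target.name if target else "no region available")
```

A small job goes to region-b, which has the greenest grid; a larger job that region-b cannot absorb falls back to region-c.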

Computing Efficiency

Increased computing efficiency has been a critical driver of data center sustainability, and it will continue to play a vital role.

Here are some specific examples of how computing efficiency improves data center sustainability:

- Server virtualization lets multiple operating systems run on a single physical server, reducing the number of servers needed and saving energy and money. A software layer called a hypervisor sits on top of the physical hardware and runs several virtual machines, each with its own operating system and independent workloads. Virtual servers also enable dynamic resource allocation, allowing data centers to adjust resource usage quickly and avoid overprovisioning.

- Server consolidation involves combining multiple workloads onto a single server. Like virtualization, consolidation reduces the number of physical servers needed.
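
Consolidation is essentially a bin-packing problem. The sketch below uses the classic first-fit decreasing heuristic, our choice of algorithm for illustration rather than any particular vendor's method, on hypothetical CPU demands expressed as a percentage of one server. Real consolidation also has to account for memory, I/O, peak load, and safety headroom.

```python
def consolidate(demands: list[int], capacity: int = 100) -> list[list[int]]:
    """First-fit decreasing: place each workload, largest first, on the first server
    with enough spare capacity; open a new server only when none fits."""
    servers: list[list[int]] = []  # workloads assigned to each server
    spare: list[int] = []          # remaining capacity of each server
    for demand in sorted(demands, reverse=True):
        if demand > capacity:
            raise ValueError(f"workload of {demand} exceeds server capacity {capacity}")
        for i, free in enumerate(spare):
            if demand <= free:
                servers[i].append(demand)
                spare[i] -= demand
                break
        else:  # no existing server had room
            servers.append([demand])
            spare.append(capacity - demand)
    return servers


# Hypothetical CPU demand (% of one server) of ten lightly loaded physical servers.
demands = [20, 15, 30, 10, 25, 5, 40, 10, 20, 15]
packed = consolidate(demands)
print(f"{len(demands)} servers -> {len(packed)} servers: {packed}")
```

In this example, ten mostly idle machines fit onto two, and the other eight could be powered down.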

Cloud Computing

Cloud computing is the on-demand delivery of IT resources and applications over the internet. Instead of building and maintaining their own data centers, organizations can access computing resources from a cloud provider.

Big, professionally operated data centers are much more efficient and sustainable than small on-premises data centers for several reasons:

- Management. Cloud providers have the expertise and experience to manage data centers efficiently and minimize energy consumption.

- Equipment. Cloud providers use the latest energy-efficient equipment and technologies.

- Economies of scale. Cloud providers spread the cost of efficient infrastructure across many customers, achieving economies of scale that small on-premises data centers cannot match.

Cooling

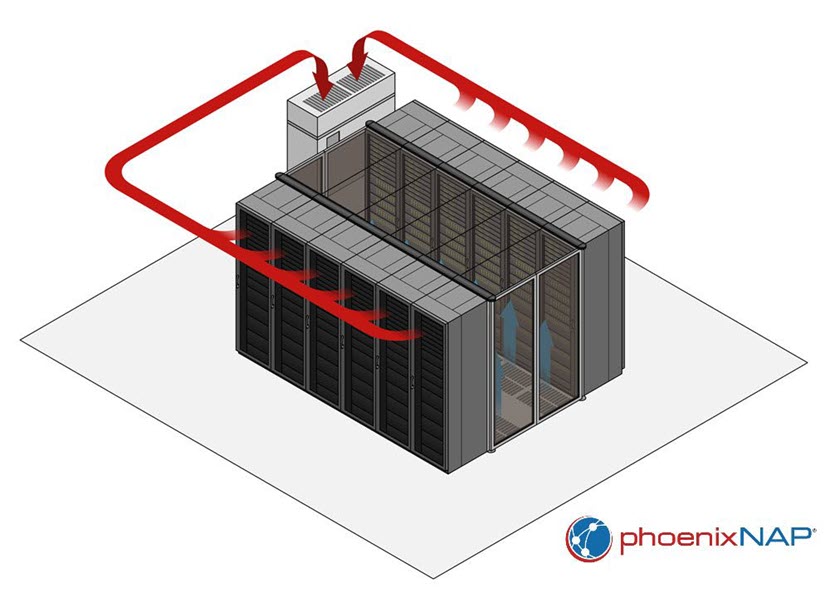

Electronic devices generate heat during operation. Keeping the server room temperature between 68 and 71 degrees Fahrenheit (20 to 22 degrees Celsius) is essential for server reliability and performance. As computer components become faster and smaller, the heat they generate intensifies and becomes more concentrated. Cooling and ventilation are vital and account for up to 40% of a data center's total energy consumption. Inadequate cooling not only damages equipment but also contributes to the e-waste problem.

Traditionally, data centers use mechanical air cooling. Fans push air across the servers and other equipment; the air absorbs heat and is then exhausted from the room or cooled and recirculated. Air cooling is the standard because it is inexpensive and easy to implement. However, even with modern advancements, it remains the least efficient and effective of the methods described here.

Another method is evaporative cooling, which uses water to cool the air around servers. As water evaporates, it absorbs heat from the surrounding air, causing the air to cool. This is the same principle that makes your skin feel cooler when you sweat. Evaporative cooling is more efficient than pure air cooling, but it comes at the cost of consuming water, a precious resource.

Liquid Cooling

As chips become more powerful, air cooling becomes less effective at removing heat. Data center operators are now looking to liquid cooling, which uses coolant to absorb and transfer heat away from servers.

Some innovative cooling technologies show great potential to make data centers more efficient and environmentally friendly.

- Direct-to-chip liquid cooling circulates coolant directly over the CPU and other heat-generating server components to dissipate heat. The coolant flows through a cold plate attached to the chip, absorbing the heat. The heated coolant then flows through a piping network to a heat exchanger, which releases the heat into the environment. Direct-to-chip cooling is much more efficient than air cooling because liquids conduct heat far better than air and can carry much more heat per unit volume.

- Immersion cooling involves submerging computer components in a coolant that conducts heat but not electricity. Immersing servers in a dielectric fluid such as mineral oil eliminates the need for fans and other moving parts, enabling efficient deployment of high-density applications that would be difficult to cool using traditional methods. Additionally, the coolant can be recycled, further reducing the environmental impact of the cooling process.
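
Besides conducting heat better, water carries far more heat per unit volume than air. A quick way to see this is the heat balance Q = density × specific heat × volumetric flow × temperature rise, solved for the flow needed to remove a given load. The fluid properties below are rounded textbook values near room temperature; the 1 kW load and 10 K temperature rise are hypothetical.

```python
# Approximate properties near room temperature (rounded textbook values).
FLUIDS = {
    "air": {"density_kg_m3": 1.2, "specific_heat_j_per_kg_k": 1005.0},
    "water": {"density_kg_m3": 998.0, "specific_heat_j_per_kg_k": 4186.0},
}


def required_flow_m3_per_s(heat_w: float, fluid: str, temp_rise_k: float) -> float:
    """Volumetric flow needed to carry heat_w watts away with a coolant temperature
    rise of temp_rise_k, from Q = density * specific_heat * flow * temp_rise."""
    props = FLUIDS[fluid]
    return heat_w / (props["density_kg_m3"] * props["specific_heat_j_per_kg_k"] * temp_rise_k)


HEAT_W = 1_000.0    # hypothetical 1 kW of server heat
TEMP_RISE_K = 10.0  # hypothetical 10 K rise in coolant temperature

air = required_flow_m3_per_s(HEAT_W, "air", TEMP_RISE_K)
water = required_flow_m3_per_s(HEAT_W, "water", TEMP_RISE_K)
print(f"air:   {air * 1000:.1f} L/s")
print(f"water: {water * 1000:.3f} L/s")
print(f"air needs roughly {air / water:,.0f} times the volume flow of water")
```

With these assumed values, air needs on the order of 3,500 times the volumetric flow of water to remove the same heat, which is why liquid systems can cool dense hardware with compact plumbing.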

Artificial Intelligence

Artificial intelligence (AI) and machine learning (ML) are transforming the data center. They can optimize cooling, workload placement, and failure prediction, resulting in significant energy savings and reduced environmental impact.

Automatic Cooling

One cutting-edge development is AI-assisted automatic cooling. AI algorithms can quickly detect and respond to temperature changes throughout a data center, ensuring optimal temperature and minimizing energy consumption.

Optimized Workload Placement

AI algorithms can also optimize workload placement. By automatically placing workloads on the most appropriate servers based on their requirements and resource availability, AI can ensure that workloads run efficiently and that fewer servers sit underused, reducing overall energy consumption.

Failure Prediction and Prevention

AI and ML can predict and prevent hardware failures in data centers. By analyzing data from sensors, logs, and other sources, AI algorithms identify patterns and trends that indicate an impending failure. This early warning lets operators intervene before hardware fails, preventing its premature destruction.
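
Production systems typically rely on trained ML models. As a much simpler statistical stand-in, the sketch below flags sensor readings that deviate sharply from their recent history using a rolling z-score. The window size, threshold, and drive-temperature readings are hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates from the mean of the preceding `window`
    readings by more than `threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical hourly drive-temperature readings (deg C); the final spike might
# precede a failure and warrant inspection.
temps = [38.0, 38.5, 38.2, 38.4, 38.1, 38.3, 38.6, 38.2, 44.9]
print(flag_anomalies(temps))
```

Only the final reading is flagged. A learned model would replace the fixed threshold with patterns drawn from many sensors and past failures.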

Energy Optimization

AI and ML algorithms can optimize energy usage in data centers by monitoring energy consumption and workload data. AI finds ways to reduce energy use, for example, by turning off idle servers or adjusting the temperature of the data center.
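
AI-driven systems learn when and which servers can safely be switched off. The sketch below replaces that learning with a fixed utilization threshold, purely to show the energy arithmetic. The `Server` type, server names, threshold, and power figures are hypothetical, and the sketch assumes any remaining work is migrated elsewhere before shutdown.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    avg_utilization: float  # average CPU utilization over the observation window (0.0-1.0)
    idle_power_w: float     # measured power draw when the server is idle


def power_down_candidates(fleet: list[Server], threshold: float = 0.05) -> list[Server]:
    """Servers whose average utilization stays below the threshold."""
    return [s for s in fleet if s.avg_utilization < threshold]


def annual_savings_kwh(candidates: list[Server], hours: float = 8760) -> float:
    """Energy saved per year if each candidate were switched off instead of idling."""
    return sum(s.idle_power_w for s in candidates) * hours / 1000


# Hypothetical fleet snapshot.
fleet = [
    Server("srv-01", avg_utilization=0.02, idle_power_w=100),
    Server("srv-02", avg_utilization=0.45, idle_power_w=150),
    Server("srv-03", avg_utilization=0.01, idle_power_w=120),
]
idle = power_down_candidates(fleet)
print([s.name for s in idle], f"{annual_savings_kwh(idle):.1f} kWh/year")
```

Two nearly idle servers drawing 220 W together would waste about 1,927 kWh a year if left running.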

Location and Energy Sourcing

Favorable climate conditions, such as low ambient temperatures, naturally reduce the need for cooling and ventilation, which translates to lower water consumption and reduced greenhouse gas emissions.

Data centers in hot climates work harder to keep servers cool, which increases wear and tear on the equipment, shortens its lifespan, and leads to more frequent failures. In contrast, building data centers in cooler climates extends the lifespan of equipment and reduces e-waste.

In addition to climate, the mix of local power plants greatly influences a data center’s environmental footprint. Data centers that rely on fossil fuel-powered electrical grids have a much higher carbon footprint than those that rely on renewable energy.

E-waste Management

Data centers can minimize e-waste by adopting a sustainable lifecycle strategy.

This approach includes:

- Extending the lifespan of equipment through regular maintenance and proactive care.

- Monitoring the cooling efficiency to lower the risk of prematurely discarding quality equipment.

- Implementing smart thermostats to balance temperature maintenance with energy efficiency.

- Repurposing older hardware into backups instead of letting it deteriorate and discarding it.

- Designing new equipment with future reuse in mind.

- Reusing and recycling as much as possible.

- Partnering with waste management companies that operate a 'zero waste to landfill' policy and avoid overseas shipping, which generates high levels of carbon emissions and often leads to dumping.

Benefits of the Sustainability Approach

Consumers are increasingly favoring brands that are committed to environmental sustainability. Four out of five people say they are more likely to do business with a company that focuses on sustainability.

Furthermore, 71% of employees and job seekers find environmentally sustainable companies more appealing. Over two-thirds are more likely to apply for and accept positions with organizations that prioritize environmental and social responsibility.

Finally, as the cost of resources and electricity rises, it makes economic sense to go green. For example, electricity constitutes up to 70% of a data center’s overall operational expenses. Wasting less reduces costs and improves profitability.

Sustainable Data Centers: A Beacon of Hope for a Greener Future

Technological advancement and preserving our planet's precious resources need not be at odds, and sustainable data centers are the bellwether for a more harmonious relationship between these two aspirations.

So far, we have made significant progress in laying the foundation for sustainable data centers. This foundation includes increased accessibility of affordable renewable energy and revolutionary advancements in energy, cooling, and computing efficiency.

While this is a positive development, we have more work ahead of us. Climate change is an existential threat, and the next decade will be crucial in our efforts to reduce its impact. Carbon-free and sustainable data centers will play a vital role in this endeavor and are a critical milestone in our journey toward environmental responsibility.