Kubernetes relies on a clustered architecture to provide distributed workload execution and enable dynamic resource scaling. Clusters provide an operational context for container orchestration and offer a flexible and resilient deployment environment.

This article provides an overview of the essential aspects of Kubernetes cluster architecture and offers recommendations for effective cluster management.

What Is a Kubernetes Cluster?

A Kubernetes cluster is a distributed system of nodes that work together to run containerized applications. It abstracts underlying infrastructure and enables automated deployment, scaling, and lifecycle management.

Distributing workloads across nodes makes applications more resilient to failures and supports high availability and fault tolerance. This approach reduces the complexity of large-scale container deployments, allowing developers to focus on the application rather than on infrastructure management.

Kubernetes Cluster Components

Every Kubernetes cluster consists of two primary components: a control plane and one or several worker nodes. The control plane manages the cluster, while the worker nodes run the containerized applications.

A single-node control plane is suitable for testing and development. Production environments require multi-node control planes for high availability and fault tolerance.
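The need for multiple control plane nodes comes largely from etcd. etcd uses the Raft consensus algorithm and accepts writes only while a majority of its members (a quorum) is available. The short calculation below shows how many member failures an etcd cluster of a given size can survive. It assumes each control plane node runs one etcd member, as in the common stacked layout. The function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest majority of etcd members that must agree on a write."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a quorum is still available."""
    return members - quorum(members)


# Print quorum size and fault tolerance for small cluster sizes.
for n in (1, 2, 3, 4, 5):
    print(f"{n} member(s): quorum={quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

An even member count adds no fault tolerance over the next-smaller odd count. Four members tolerate one failure, just as three do. This is why odd sizes such as three or five are the usual choice for production control planes.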

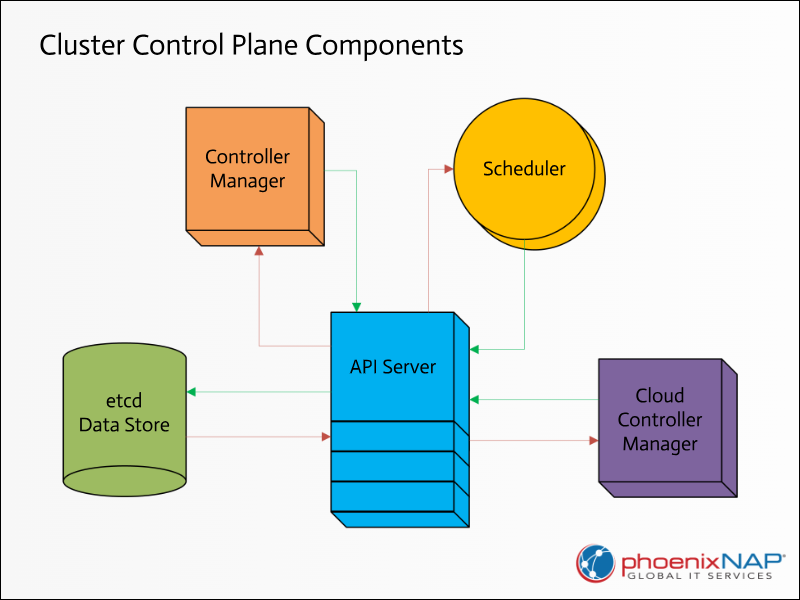

The following components make up a control plane:

- API server (kube-apiserver). The control plane's front end that exposes the Kubernetes API and enables interaction with the cluster.

- etcd. A consistent, distributed key-value store that holds all cluster data (e.g., configuration, state, and metadata).

- Scheduler (kube-scheduler). The component that watches for newly created pods with no assigned node and assigns each pod to a node based on resource requirements, constraints, and other factors.

- Controller manager (kube-controller-manager). The component that runs various controller processes (e.g., replication, node, and endpoint controllers) and ensures that the state of the cluster matches the desired state.

- Cloud controller manager (cloud-controller-manager). An optional control plane component present only in clusters that integrate with a cloud provider. It handles cloud-specific functions, such as provisioning load balancers, managing node lifecycle, and configuring network routes.
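The following toy simulation shows how the controller manager and the scheduler divide the work. It is not the real implementation. A ReplicaSet-style controller compares the desired and actual pod counts and creates or deletes pods. The scheduler then binds each unscheduled pod to a node with enough free CPU. Several pieces are simplifying assumptions: the `Node` and `Pod` classes, the `reconcile` and `schedule` functions, and the "most free CPU wins" scoring rule. The real kube-scheduler runs a pipeline of filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    allocatable_cpu: int    # millicores the node offers to pods
    requested_cpu: int = 0  # millicores already requested by bound pods

    def free_cpu(self) -> int:
        return self.allocatable_cpu - self.requested_cpu


@dataclass
class Pod:
    name: str
    cpu_request: int            # millicores
    node: Optional[str] = None  # None means Pending (not yet scheduled)


def schedule(pod: Pod, nodes: list[Node]) -> None:
    """Scheduler sketch: filter nodes that fit the request, then score by free CPU."""
    feasible = [n for n in nodes if n.free_cpu() >= pod.cpu_request]
    if not feasible:
        return  # no node fits, so the pod stays Pending
    best = max(feasible, key=Node.free_cpu)
    best.requested_cpu += pod.cpu_request
    pod.node = best.name


def reconcile(desired: int, pods: list[Pod], nodes: list[Node], cpu_request: int) -> None:
    """Controller sketch: converge the actual pod count toward the desired count."""
    while len(pods) < desired:
        pods.append(Pod(f"web-{len(pods)}", cpu_request))
    while len(pods) > desired:
        removed = pods.pop()  # toy policy: remove the most recently added pod
        for n in nodes:
            if n.name == removed.node:
                n.requested_cpu -= removed.cpu_request
    for pod in pods:
        if pod.node is None:
            schedule(pod, nodes)


nodes = [Node("node-a", 2000), Node("node-b", 1000)]
pods: list[Pod] = []
reconcile(desired=3, pods=pods, nodes=nodes, cpu_request=500)
for p in pods:
    print(p.name, "->", p.node)
```

Calling `reconcile` again with the same inputs changes nothing once the counts match and every pod is bound. That idempotence is what allows real controllers to run in a continuous loop.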

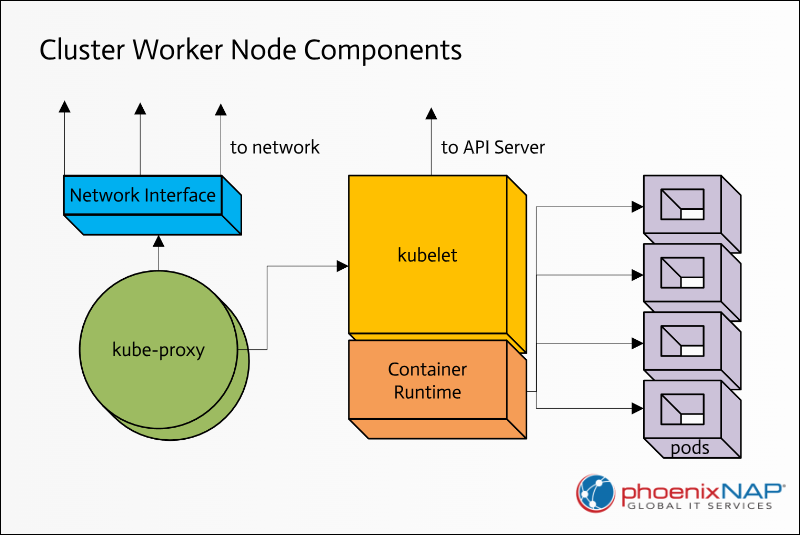

Each worker node consists of the components below:

- kubelet. An agent that receives pod specifications from the API server and manages the lifecycle of the containers within the pods.

- kube-proxy. A network proxy that maintains network rules necessary for pods to communicate over a network. It handles network routing and load balancing for services.

- Container runtime. The component that runs containers and manages their resources. Common runtimes include containerd and CRI-O; Docker Engine can still be used through the cri-dockerd adapter.

- Pods. The smallest deployable units in Kubernetes. Each pod hosts one or more containers that share network and storage resources.
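Here is one concrete case of the load balancing kube-proxy performs. In iptables mode, kube-proxy writes one rule per backend pod and chooses among them at random. Rule i (counting from 0) of n matches with probability 1/(n - i). Connections that skip the earlier rules therefore still end up spread evenly across all backends. The sketch below checks that arithmetic with exact fractions. It models only the probabilities, not real iptables rules, and the function name is hypothetical.

```python
from fractions import Fraction


def effective_share(n: int) -> list[Fraction]:
    """Chance that a new connection lands on each of n backends when
    rule i (0-based) matches with probability 1/(n - i) and unmatched
    traffic falls through to the next rule."""
    shares = []
    remaining = Fraction(1)         # probability of reaching rule i at all
    for i in range(n):
        match = Fraction(1, n - i)  # the last rule has probability 1
        shares.append(remaining * match)
        remaining *= 1 - match
    return shares


print(effective_share(3))
```

For three backends, each share works out to exactly 1/3, even though the first rule matches with probability 1/3 and the second with 1/2.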

How to Create a Kubernetes Cluster

Creating a Kubernetes cluster involves different procedures depending on the project's needs and the selected deployment method.

The table below describes the most common approaches:

| Method | Process | Advantages | Considerations |
|---|---|---|---|
| Managed Kubernetes services, e.g., Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS) | Use the cloud provider's console or CLI to define the cluster configuration. The cloud provider handles the control plane and node management. | Simplified setup and management with automatic scaling and updates. Integration with other cloud services. High availability. | Cloud-specific features and APIs create vendor dependencies. Often more expensive than self-managed clusters, especially for large-scale deployments. Limited customization. |
| kubeadm (self-managed Kubernetes) | Manually set up the control plane and worker nodes. Use kubeadm to initialize the cluster and join nodes to it. | More control over cluster configuration. Suitable for on-premises deployments or custom environments. | Increased operational overhead. Requires a deeper understanding of Kubernetes components. |
| Minikube/Kind (local development) | Install the tool and run its cluster creation command. Minikube creates a local Kubernetes cluster on the machine. Kind runs cluster nodes as Docker containers. | Simple setup of a single-node Kubernetes cluster for local development. Ideal for testing and experimentation. | Not suitable for production. |
| Docker Desktop | Enable Kubernetes in the Docker Desktop application, which then creates a local Kubernetes cluster. | Very easy way to run a single-node Kubernetes cluster. Good for local development. | Not suitable for production. |

Manual Cluster Deployment

Each managed service provider has its own procedure for setting up a cluster. A manual (self-managed) deployment generally involves the following steps:

- Infrastructure Preparation. This step involves provisioning bare metal servers, virtual machines, or cloud instances that comprise the cluster. Additionally, cluster networking and security settings need to be configured.

- Component Installation. The Kubernetes components that must be installed include:

  - kubelet. A node agent for communication with the control plane.

  - kubeadm. A tool that helps automate the installation and configuration of core Kubernetes components.

  - kubectl. A CLI tool that allows users to interact with the Kubernetes API server.

- Cluster Initialization. The procedure includes initializing the control plane and adding worker nodes to the cluster.

- Network Configuration. Choosing and installing a Container Network Interface (CNI) plugin enables communication between pods within the cluster. Network policies, ingress controllers, and service meshes are then configured to control traffic and provide external connectivity.

- Verification. Checking cluster functionality with kubectl commands, such as kubectl get nodes.
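Scripted verification often starts from `kubectl get nodes -o json`. That command returns a `NodeList` in which each node reports a `Ready` condition under `status.conditions`. The sketch below parses that structure and lists nodes that are not ready. The embedded `SAMPLE` document is a trimmed, hypothetical example. To check a live cluster, replace it with real `kubectl` output.

```python
import json

# Trimmed, hypothetical example of `kubectl get nodes -o json` output.
SAMPLE = """
{
  "kind": "NodeList",
  "items": [
    {"metadata": {"name": "control-plane-1"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
    {"metadata": {"name": "worker-1"},
     "status": {"conditions": [{"type": "MemoryPressure", "status": "False"},
                               {"type": "Ready", "status": "False"}]}}
  ]
}
"""


def not_ready_nodes(node_list: dict) -> list[str]:
    """Return the names of nodes whose Ready condition is not "True"."""
    bad = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = next((c for c in conditions if c.get("type") == "Ready"), None)
        if ready is None or ready.get("status") != "True":
            bad.append(node["metadata"]["name"])
    return bad


if __name__ == "__main__":
    problems = not_ready_nodes(json.loads(SAMPLE))
    print("all nodes Ready" if not problems else f"not Ready: {', '.join(problems)}")
```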


Working With a Kubernetes Cluster

The tasks performed in a Kubernetes project depend on a person's role (e.g., app developer, operator, or cluster administrator). The general workflow involves the following steps:

- Developing an application. An app developer writes application code.

- Containerizing the application. The developer creates a container image and pushes it to a registry.

- Defining a deployment. An operator writes a YAML manifest that describes how the application should be deployed (e.g., as a Deployment or StatefulSet) and specifies container images, resource requests, and other settings. Services are defined to expose the application within the cluster or externally.

- Deploying the application. The operator uses kubectl to deploy the application to the cluster.

- Managing the application. During the application's lifecycle, a cluster administrator uses kubectl to monitor, scale, and update the application.

- Monitoring. The administrator uses logging and monitoring tools to track application and cluster health.
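A minimal Deployment manifest helps illustrate the "Defining a deployment" step. Kubernetes accepts manifests in JSON as well as YAML, so the sketch below builds one with Python's standard `json` module. The app name, image reference, port, replica count, and resource values are placeholders, not recommendations.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment; the selector must match the pod labels."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            # Requests inform scheduling; limits cap usage.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"},
                                "limits": {"memory": "256Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference; replace it with an image from your registry.
    manifest = deployment_manifest("web", "registry.example.com/web:1.0", 3)
    print(json.dumps(manifest, indent=2))
```

You can redirect the output to a file such as `web-deployment.json` (a hypothetical name). The operator then deploys it with `kubectl apply -f web-deployment.json`.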

Cluster Management Tools

The sections below introduce tools that improve Kubernetes cluster management, from initial setup to maintenance and security.

Configuration Management

The following tools streamline Kubernetes application configuration and deployment by automating processes through declarative methods and Git-based workflows:

- Helm. A package manager for Kubernetes, with a CLI that simplifies defining, installing, and upgrading Kubernetes apps.

- Kustomize. A configuration transformation tool that helps users edit YAML configurations without having to modify the base YAML files.

- Argo CD. A GitOps continuous delivery tool for Kubernetes. It automates application deployment by keeping the app's live state synchronized with the desired state defined in a Git repository.

- Flux. A GitOps tool for Kubernetes that automates the deployment and management of applications. It supports configuration management tools such as Helm and Kustomize.
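The core idea behind Kustomize is to apply environment-specific changes as a separate overlay while the base stays untouched. That idea can be sketched as a recursive merge of two dictionaries. This is a simplification: Kustomize itself uses strategic merge patches and JSON patches, which merge lists such as `containers` by key rather than replacing them outright. The `apply_overlay` function and the base and overlay values are hypothetical.

```python
import copy


def apply_overlay(base: dict, overlay: dict) -> dict:
    """Return a new dict with overlay values merged into a copy of base.
    Nested dicts merge recursively; any other overlay value replaces the
    base value. The base argument itself is never modified."""
    result = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = apply_overlay(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


base = {
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {"replicas": 1},
}
# Hypothetical production overlay: more replicas and an extra label.
prod_overlay = {"metadata": {"labels": {"env": "prod"}}, "spec": {"replicas": 5}}

prod = apply_overlay(base, prod_overlay)
print(prod)
```

Because `base` is copied rather than edited, the same base can feed several overlays (for example, staging and production) without drifting.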

Networking and Security

To secure and manage communication within Kubernetes, the tools below provide advanced network policies, application-layer visibility, and service mesh functionalities:

- Calico. A tool that provides networking and network policy capabilities, allowing users to secure communication between workloads.

- Cilium. A cloud-native networking and security tool. Cilium enables Layer 7 (application layer) visibility and security, meaning it can understand and control network traffic based on application protocols like HTTP, gRPC, and Kafka.

- Istio. A service mesh for connecting, securing, and controlling microservices. It uses Envoy proxies, typically deployed as sidecars alongside each microservice.
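Calico and Cilium both enforce standard Kubernetes NetworkPolicy objects. The sketch below models the core ingress rules for pods in a single namespace:

- A pod that no policy selects accepts all traffic.
- A selected pod accepts traffic only from sources that at least one selecting policy allows.
- Policies are additive.

The sketch covers only `podSelector` with `matchLabels`. It omits namespace selectors, IP blocks, ports, egress, and the rule that always admits traffic from the pod's own node. The dictionary layout is a simplified assumption, not the full API schema.

```python
def matches(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every selector key/value must be present.
    An empty selector matches every pod."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(src_labels: dict, dst_labels: dict, policies: list[dict]) -> bool:
    """Decide whether a pod with src_labels may connect to a pod with dst_labels."""
    selecting = [p for p in policies if matches(p["podSelector"], dst_labels)]
    if not selecting:
        return True  # destination is not isolated: all ingress allowed
    for policy in selecting:
        for rule in policy.get("ingress", []):
            peers = rule.get("from")
            # A rule with an empty or missing "from" allows any source.
            if not peers or any(matches(peer["podSelector"], src_labels) for peer in peers):
                return True
    return False  # isolated, and no rule allows this source


# Hypothetical policy: only pods labeled role=frontend may reach app=db pods.
policies = [{
    "podSelector": {"app": "db"},
    "ingress": [{"from": [{"podSelector": {"role": "frontend"}}]}],
}]
print(ingress_allowed({"role": "frontend"}, {"app": "db"}, policies))  # True
print(ingress_allowed({"role": "batch"}, {"app": "db"}, policies))     # False
print(ingress_allowed({"role": "batch"}, {"app": "cache"}, policies))  # True: not selected
```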

Automation and Infrastructure as Code (IaC)

The following infrastructure and configuration tools automate repetitive IT tasks and free up time for cluster administrators:

- Terraform. An IaC platform for defining and managing infrastructure (e.g., servers, databases, networks) using code that describes the desired state.

- Crossplane. An open-source add-on that enables users to manage infrastructure from various cloud providers and services using the Kubernetes API.

- Ansible. A tool that automates repetitive IT tasks. Unlike many configuration management tools, Ansible does not require agents to be installed on the managed systems.
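Terraform and Crossplane share a desired-state model. Each compares what the code declares with what actually exists, then derives the actions needed to close the gap. The sketch below computes such a plan from two dictionaries keyed by resource name. The `plan` function, resource names, and attributes are hypothetical. Real tools also track state, resolve dependencies, and decide whether a change can happen in place or needs a replacement.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare declared resources with existing ones and list the required actions."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(k for k in desired.keys() & actual.keys() if desired[k] != actual[k]),
        "delete": sorted(actual.keys() - desired.keys()),
    }


# Hypothetical resources: declared in code vs. currently provisioned.
desired = {
    "vm-web": {"size": "large"},
    "vm-db": {"size": "xlarge"},
    "lb-public": {"port": 443},
}
actual = {
    "vm-web": {"size": "small"},
    "vm-db": {"size": "xlarge"},
    "vm-legacy": {"size": "small"},
}

print(plan(desired, actual))
```

Once the plan has been applied, running it again should produce three empty lists. That property is what makes repeated runs safe.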

Cluster Monitoring

The tools presented in this section improve observability by providing metrics, dashboards, log aggregation, centralized logging, and unified monitoring:

- Prometheus. A system monitoring and alerting toolkit for cloud-native environments. It is primarily used for collecting time-series data like CPU usage, memory utilization, and request latency.

- Grafana. A data visualization and monitoring tool. It enables users to build dashboards that combine data from various sources, such as Prometheus and Loki.

- Loki. A highly available, scalable multi-tenant system for log aggregation. Unlike traditional log aggregation systems that index the contents of logs, Loki indexes only metadata (labels).

- EFK stack. A combination of tools for centralized log management. EFK consists of three separate tools: Elasticsearch (a RESTful search and analytics engine), Fluentd (a data collector), and Kibana (a visualization tool).

- Datadog. A monitoring and security platform for cloud applications. It provides tools to monitor the health and performance of applications and infrastructure.
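Many of the metrics Prometheus collects are counters. A counter only increases until its process restarts, at which point it resets to zero. Turning a counter into a per-second rate therefore means handling those resets. The sketch below shows the basic idea on hypothetical (timestamp, value) samples. It is a simplification: PromQL's `rate()` also extrapolates to the edges of the query window, so its results can differ slightly.

```python
def counter_rate(samples: list[tuple[float, float]]) -> float:
    """Average per-second increase of a counter over the given samples.
    A drop in value is treated as a reset: the new value counts as the
    increase since the reset, because the counter restarted from zero."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        increase += curr - prev if curr >= prev else curr
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed


# Hypothetical request-counter samples scraped every 15 seconds;
# the process restarted between t=15 and t=30.
samples = [(0, 100), (15, 130), (30, 10), (45, 40)]
print(counter_rate(samples))
```

Here the total increase is 30 + 10 + 30 = 70 requests over 45 seconds. Ignoring the reset would yield a negative rate.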

Maintaining Kubernetes Clusters

Kubernetes clusters require consistent maintenance to preserve operational stability. Below is a general task list for a Kubernetes cluster administrator:

- Cluster setup and maintenance.

  - Setting up and configuring the Kubernetes cluster.

  - Managing node resources and scaling.

  - Upgrading and patching the cluster.

- Networking configuration.

  - Setting up network policies and ingress controllers.

  - Managing service discovery and load balancing.

- Storage management.

  - Configuring persistent volumes and storage classes.

  - Managing storage access for applications.

- Cluster security.

  - Implementing RBAC (Role-Based Access Control).

  - Securing cluster communication.

  - Managing secrets and configurations.

- Monitoring and logging.

  - Setting up monitoring tools.

  - Configuring log aggregation.

- Troubleshooting.

  - Diagnosing and resolving cluster issues.

  - Fixing application-related problems.
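RBAC, listed under cluster security above, grants permissions through Role rules that combine API groups, resources, and verbs. There are no deny rules: permissions are additive, and a request is allowed if any bound rule matches it. The sketch below checks requests against rules in that style. The rule dictionaries mirror the `apiGroups`, `resources`, and `verbs` fields of a Role's `rules` entries. The functions and the example role are hypothetical. Real authorization also considers namespaces, resource names, subresources, and RoleBindings.

```python
def rule_matches(rule: dict, api_group: str, resource: str, verb: str) -> bool:
    """A rule matches when each field lists the requested value or "*"."""
    def allows(values: list[str], value: str) -> bool:
        return "*" in values or value in values

    return (allows(rule["apiGroups"], api_group)
            and allows(rule["resources"], resource)
            and allows(rule["verbs"], verb))


def is_allowed(rules: list[dict], api_group: str, resource: str, verb: str) -> bool:
    """RBAC is additive (no deny rules): any matching rule grants access."""
    return any(rule_matches(r, api_group, resource, verb) for r in rules)


# Hypothetical read-only role for pods (core group "") and Deployments ("apps").
viewer_rules = [
    {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "list"]},
]

print(is_allowed(viewer_rules, "", "pods", "list"))              # True
print(is_allowed(viewer_rules, "apps", "deployments", "delete"))  # False
```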

Conclusion

This article summarized the architecture and operational procedures related to Kubernetes clusters. It also listed essential tools for working in a cluster environment.

For a deeper dive into the underlying structure of Kubernetes, read Understanding Kubernetes Architecture with Diagrams.