Introduction

Modern processors are built to handle increasingly complex workloads, and understanding their architecture is crucial for optimizing performance. Two fundamental concepts in CPU specifications are cores and threads.

This text will explain the differences between CPU cores and threads, how they interact, and their impact on overall performance.

CPU Threads vs. Cores: Overview

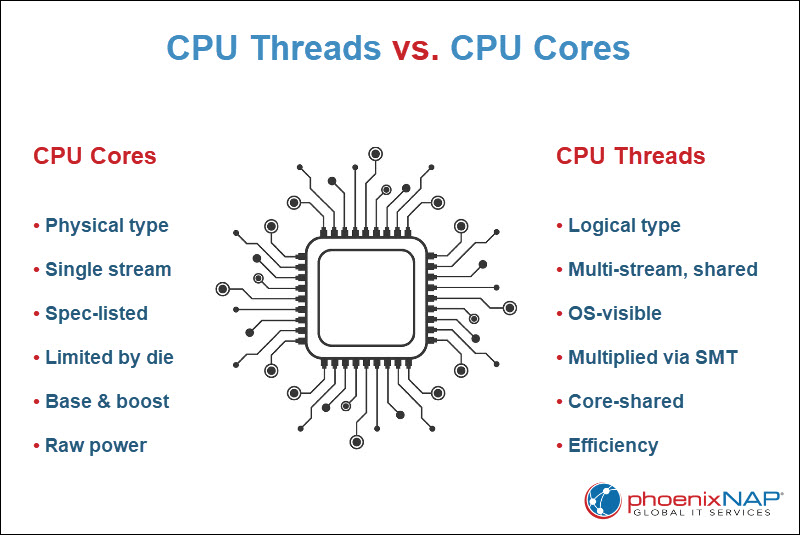

CPU cores and threads are fundamental elements that define how a processor executes tasks. Cores are the physical processing units that carry out instructions, while threads allow each core to work on multiple instruction streams concurrently.

The following table compares cores and threads across several key features to highlight their differences and roles in CPU performance:

| Feature | CPU Cores | CPU Threads |
|---|---|---|
| Type | Physical processing unit. | Logical execution unit. |
| Instruction handling | One instruction stream per core without SMT. Reads, decodes, and executes instructions. | Allow a core to manage multiple instruction streams concurrently. Increase efficiency but do not double performance. |
| Visibility | Physically present on the CPU die. Listed in CPU specifications. | Not always listed explicitly. The count depends on support for technologies such as Hyper-Threading. |
| Count | Limited by the physical space available on the chip. | Typically double the number of cores with two-way Hyper-Threading or Simultaneous Multithreading (SMT); equal to the core count without it. |
| Speed | Base and boost clock speeds determine how fast instructions are processed. | Run at the core's speed, but an individual thread often executes more slowly because it shares the core's resources. |
| Impact on performance | A higher core count generally increases raw processing power. | More threads improve efficiency in parallel workloads but do not double performance. |

CPU Threads vs. Cores: In-Depth Comparison

While cores and threads are closely related, each plays a distinct role in CPU performance. Understanding how they differ across specific features helps in choosing the right processor for your workload and optimizing system efficiency.

The following sections break down each key aspect of cores and threads.

Type

CPU cores are the physical processing units inside the processor. Each core independently executes instructions and contributes to the CPU's overall computing power. Some modern CPUs combine different core types, such as high-performance cores and efficiency cores, but all of them are physical units.

However, threads are logical constructs. They represent separate execution paths that a core handles, enabled by technologies like Hyper-Threading or Simultaneous Multithreading (SMT). Threads allow a single core to manage multiple instruction streams, improving parallel task handling without adding extra physical cores.

Instruction Handling

Without SMT, each physical core handles one instruction stream at a time. The core reads, decodes, and executes instructions from the operating system or applications, providing the raw computational power of the processor.

Note: While a CPU core processes one thread's instruction stream at a time, some modern superscalar designs can execute multiple instructions in a single cycle.

On the other hand, threads let a core manage multiple instruction streams concurrently. This improves CPU utilization because the core can execute instructions from another thread while one thread is stalled, for example while it waits for data from memory. The result is better multitasking and higher performance in parallel workloads.

For example, multi-threaded applications and systems running several programs at once benefit from threads keeping cores active. While threads do not double a core's performance, they help maintain smooth execution and better overall efficiency.
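The following Python sketch makes the stall argument concrete using a deliberately simplified cycle model, which is an assumption and not how any real core is built. Each hypothetical thread is a string of `C` (needs the execution unit for one cycle) and `S` (stalled for one cycle, for example waiting on memory). Stalls elapse on their own, and the core issues at most one compute cycle per clock from whichever thread is ready. The function name `simulate_core` is illustrative only.

```python
from typing import Optional


def simulate_core(threads: list[str]) -> tuple[int, int]:
    """Toy model of one core running one or more hardware threads.

    Each hypothetical thread is a string of 'C' (needs the execution unit
    for one cycle) and 'S' (stalled for one cycle, e.g. waiting on memory).
    Stalls elapse on their own; the core executes at most one 'C' per cycle.
    Returns (total_cycles, busy_cycles).
    """
    n = len(threads)
    pcs = [0] * n  # position of each thread's next operation

    def current_op(i: int) -> Optional[str]:
        return threads[i][pcs[i]] if pcs[i] < len(threads[i]) else None

    total = busy = 0
    turn = 0  # round-robin starting point so no thread is starved
    while any(current_op(i) is not None for i in range(n)):
        total += 1
        # Issue one compute cycle from the first ready thread, round-robin.
        chosen = None
        for k in range(n):
            i = (turn + k) % n
            if current_op(i) == "C":
                chosen = i
                break
        # Stalled threads make progress whether or not the core is busy.
        stalled = [i for i in range(n) if current_op(i) == "S"]
        if chosen is not None:
            busy += 1
            pcs[chosen] += 1
            turn = (chosen + 1) % n
        for i in stalled:
            pcs[i] += 1
    return total, busy


if __name__ == "__main__":
    work = "CCSSSSCC"  # hypothetical: compute, wait on memory, compute
    print(simulate_core([work]))        # one thread on the core
    print(simulate_core([work, work]))  # two threads sharing the core
```

In this model, one thread keeps the core busy for 4 of 8 cycles. Two threads finish both instruction streams in 11 cycles instead of the 16 needed to run them back to back, with 8 busy cycles. Utilization improves, but the speedup is well short of 2x, which matches the point that threads do not double a core's performance.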

Visibility

Visibility in CPU terms refers to whether cores or threads are apparent to users and software.

CPU cores are physical components inside the processor. Each core is a distinct unit on the CPU die, although it is not visible to the naked eye. Core counts are listed in processor specifications, sometimes on the retail box, and in the operating system, which makes it easy to determine a CPU's physical processing capacity.

Threads, by contrast, have no separate physical presence on the die. The operating system and software see each hardware thread as a logical processor and, if Hyper-Threading or SMT is supported, schedule work onto multiple threads per core.
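To see the difference between the physical and logical views from software, here is a minimal Python sketch. `os.cpu_count()` is a standard-library call that reports logical CPUs (hardware threads). The parser assumes the x86 Linux layout of `/proc/cpuinfo`, where each logical CPU has `processor`, `physical id`, and `core id` fields. Other platforms, and some ARM systems, may omit those fields. The function name `count_from_cpuinfo` is hypothetical.

```python
import os


def count_from_cpuinfo(text: str) -> tuple[int, int]:
    """Return (physical_cores, logical_cpus) parsed from /proc/cpuinfo text.

    Assumes the x86 Linux layout: one blank-line-separated block per logical
    CPU, each with 'processor', 'physical id' (socket), and 'core id' fields.
    Returns 0 physical cores if those fields are missing.
    """
    logical = 0
    cores: set[tuple[str, str]] = set()
    physical_id = core_id = None
    for line in text.splitlines() + [""]:  # trailing "" closes the last block
        if not line.strip():
            # End of one logical CPU's block: record its (socket, core) pair.
            if physical_id is not None and core_id is not None:
                cores.add((physical_id, core_id))
            physical_id = core_id = None
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical += 1
        elif key == "physical id":
            physical_id = value
        elif key == "core id":
            core_id = value
    return len(cores), logical


if __name__ == "__main__":
    # os.cpu_count() reports logical CPUs, i.e. hardware threads.
    print("Logical CPUs (os.cpu_count):", os.cpu_count())
    try:
        with open("/proc/cpuinfo") as f:
            cores, logical = count_from_cpuinfo(f.read())
        print(f"Physical cores: {cores}, logical CPUs: {logical}")
    except OSError:
        print("/proc/cpuinfo is not available on this system")
```

Several logical CPUs that share the same (`physical id`, `core id`) pair are hardware threads of one physical core. That pairing is how the logical count can exceed the core count.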

Count

The number of CPU cores determines how many tasks a processor can handle simultaneously at the hardware level. Older or simpler CPUs often have one, two, or four cores, which limits how many tasks they can process at once. Modern consumer processors typically have between two and 24 cores, while workstation and server CPUs commonly have 32, 64, or more cores per socket.

Some high-end server processors, such as the AMD EPYC 9965 with 192 cores per socket, enable extreme levels of parallel processing for data centers, AI workloads, and large-scale virtualization. More cores improve performance for workloads that can be parallelized, such as video rendering, scientific simulations, or hosting multiple virtual machines, each with its own virtual CPUs (vCPUs).

Threads further increase the CPU's ability to manage concurrent tasks. A core with SMT can handle two or more threads; with the common two-way implementation, this doubles the number of instruction streams the CPU manages in parallel. The operating system schedules these threads to keep the core's processing units utilized, which boosts efficiency.
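The thread count follows from simple arithmetic: physical cores multiplied by hardware threads per core, summed over each core type when a CPU mixes core types. The sketch below assumes two-way SMT where SMT is present. The hybrid configuration it uses (8 two-thread performance cores plus 16 single-thread efficiency cores) is a hypothetical example, and `logical_processors` is an illustrative name.

```python
def logical_processors(core_groups: list[tuple[int, int]]) -> int:
    """Total logical processors across groups of identical cores.

    Each (cores, threads_per_core) pair describes one core type:
    threads_per_core is 1 without SMT and 2 with common two-way SMT.
    """
    total = 0
    for cores, threads_per_core in core_groups:
        if cores < 0 or threads_per_core < 1:
            raise ValueError(f"invalid core group: {(cores, threads_per_core)}")
        total += cores * threads_per_core
    return total


if __name__ == "__main__":
    print(logical_processors([(8, 2)]))           # 8 cores with two-way SMT -> 16
    print(logical_processors([(192, 2)]))         # 192 cores, assuming SMT enabled -> 384
    print(logical_processors([(8, 2), (16, 1)]))  # hypothetical hybrid CPU -> 32
```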

Speed

The speed of a CPU core is determined by its clock rates, expressed as base and boost (or turbo) frequencies:

- The base clock frequency. The standard operating speed of the core.

- The boost clock frequency. Temporarily increased speed under demanding workloads.

For a given core design, higher clock speeds mean the core processes more instructions per second, which improves single-threaded performance.
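As a rough, simplified model that ignores stalls and frequency changes, a core's instruction throughput is its clock frequency multiplied by its average instructions per cycle (IPC). Superscalar designs can reach an IPC above 1. The two cores below are hypothetical and show why clock speed alone does not settle which core is faster:

```latex
\begin{aligned}
\text{instructions per second} &= f_{\text{clock}} \times \mathrm{IPC} \\
\text{Core A: } 4.0 \times 10^{9}\ \text{cycles/s} \times 2.0\ \text{instructions/cycle} &= 8.0 \times 10^{9}\ \text{instructions/s} \\
\text{Core B: } 5.0 \times 10^{9}\ \text{cycles/s} \times 1.5\ \text{instructions/cycle} &= 7.5 \times 10^{9}\ \text{instructions/s}
\end{aligned}
```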

Threads run at the core speed, but since multiple threads share the same resources, each thread doesn't always achieve the full performance of a core running alone. Actual performance per thread often depends on how efficiently the workload uses shared execution units, cache, and memory bandwidth.

Impact on Performance

Cores have a direct impact on raw processing power. More cores allow a CPU to run more tasks truly in parallel, which increases performance in multi-threaded applications and workloads that split across multiple cores.

Threads improve efficiency by letting a core keep working when one task stalls and would otherwise leave the core idle. While they do not match the performance gain of adding extra cores, threads complement cores by boosting multitasking and parallel workload efficiency.
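How much extra cores help depends on how much of a workload actually splits across them. Amdahl's law is a standard model, not part of the original text. It estimates the speedup on `n` cores when a fraction `p` of the work parallelizes perfectly and the rest stays serial. The function below is a minimal sketch of that formula. The 90% parallel fraction in the usage loop is an assumed value.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # part that runs on one core regardless
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed workload: 90% of the work can be split across cores.
    for n in (1, 2, 4, 8, 16, 64):
        print(n, round(amdahl_speedup(0.9, n), 2))
```

With a 90% parallel fraction, the speedup can never exceed 10x, however many cores are added. This is why the serial part of a workload matters as much as the core count.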

How Do CPU Threads and Cores Work Together?

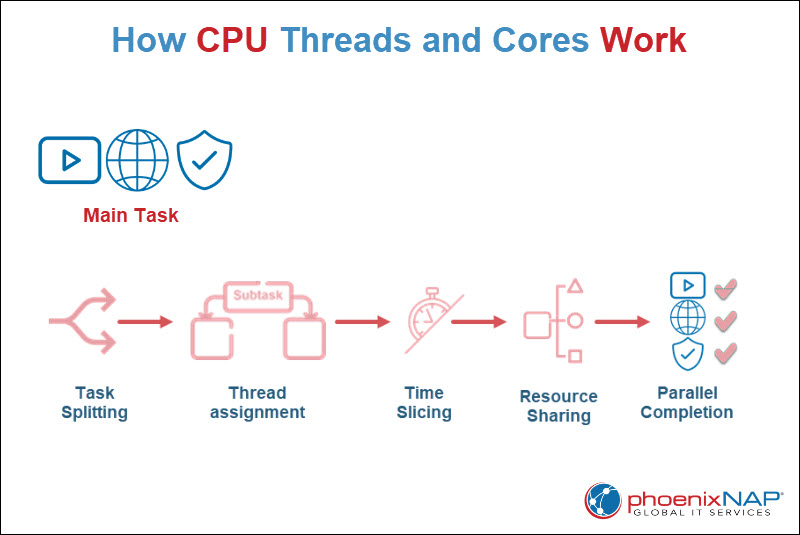

CPU cores and threads work in tandem to maximize processing efficiency. Cores handle the actual execution of instructions, while threads allow each core to manage multiple tasks concurrently.

For example, suppose a user wants to run a video rendering application, a browser with multiple tabs, and a background antivirus scan simultaneously.

To do that, each core handles a main task, such as rendering a video frame or processing a browser tab, while threads allow the cores to divide work into subtasks and remain active.

The process includes:

1. Task splitting. The operating system or application breaks each main task into smaller subtasks that run independently. For example, a video frame is divided into multiple sections to be processed simultaneously.

2. Thread assignment. Each subtask is assigned to a thread. If a core supports multiple threads, it handles more than one subtask concurrently.

3. Time slicing. The operating system schedules each thread in short intervals and rapidly switches between them, for example when one subtask is waiting for data or resources.

4. Resource sharing. Threads on the same core share its execution units and caches, while each hardware thread keeps its own architectural state, such as its registers. This allows efficient use of the core's hardware while executing multiple instruction streams.

5. Parallel completion. As threads complete their subtasks, the results are combined, so the main task finishes faster than if the core processed each subtask sequentially.

This mechanism allows cores and threads to work together efficiently, which improves performance and maintains smooth operation when multiple applications run simultaneously.
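The task-splitting steps above can be sketched with Python's standard `concurrent.futures` module. The "frame" here is a hypothetical list of pixel brightness values, and `process_section` is a made-up stand-in for real rendering work. Time slicing and resource sharing (steps 3 and 4) are handled by the OS and the hardware, not by this code. In CPython, the global interpreter lock prevents pure-Python CPU-bound code in threads from running in parallel. Real CPU-bound work would therefore typically use `ProcessPoolExecutor`, which has the same interface.

```python
from concurrent.futures import ThreadPoolExecutor


def split(frame: list[int], parts: int) -> list[list[int]]:
    """Step 1 (task splitting): divide the frame into roughly equal sections."""
    size = max(1, -(-len(frame) // parts))  # ceiling division, at least 1
    return [frame[i:i + size] for i in range(0, len(frame), size)]


def process_section(section: list[int]) -> list[int]:
    """Hypothetical per-section work: brighten each pixel, capped at 255."""
    return [min(255, value + 40) for value in section]


def render_frame(frame: list[int], workers: int = 4) -> list[int]:
    sections = split(frame, workers)
    # Step 2 (thread assignment): each section becomes a task for a worker thread.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in submission order, so sections stay in place.
        results = pool.map(process_section, sections)
        # Step 5 (parallel completion): combine the partial results.
        return [pixel for section in results for pixel in section]


if __name__ == "__main__":
    frame = list(range(0, 256, 16))  # 16 hypothetical pixel values
    print(render_frame(frame))
```

Because `pool.map` preserves input order, the combined result matches what a sequential loop over the sections would produce. Only the scheduling of the work differs.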

Time Slicing

Time slicing is an operating system scheduling method that allocates CPU time to each thread in short intervals. With simultaneous multithreading, the hardware can execute instructions from multiple threads in the same cycle by sharing execution resources. When there are more runnable threads than the hardware can execute at once, or SMT is unavailable, the OS uses time slicing to alternate execution, creating the appearance that all the threads are running at once.

This keeps cores from sitting idle while a thread waits to complete an operation or access resources, as long as other threads are ready to run. The result is higher CPU utilization and smooth multitasking.

Even though threads do not have the full power of a physical core, this rapid scheduling helps maintain high overall system performance.
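Time slicing can be illustrated with a classic round-robin scheduler. This is a simplified model, not how any particular OS scheduler works. The sketch below runs hypothetical threads on one logical CPU: each thread runs for at most one time slice (the quantum) and then goes to the back of the run queue if it still has work left. It ignores blocking and priorities. The thread names reuse the earlier example scenario, and the time units are arbitrary.

```python
from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> list[tuple[str, int, int]]:
    """Simulate round-robin time slicing on one logical CPU.

    bursts maps a hypothetical thread name to the CPU time it still needs.
    Returns the timeline as (thread, start, end) slices.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    ready = deque(bursts.items())  # run queue in arrival order
    timeline = []
    clock = 0
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run for at most one time slice
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            # Slice expired before the thread finished: back of the queue.
            ready.append((name, remaining - run))
    return timeline


if __name__ == "__main__":
    work = {"render": 5, "browser": 3, "antivirus": 2}
    for name, start, end in round_robin(work, quantum=2):
        print(f"{start:>2}-{end:<2} {name}")
```

Each thread makes steady progress in short turns instead of waiting for the others to finish, which is what creates the appearance of simultaneous execution on a single logical CPU.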

Which Is Better: Cores or Threads?

Since cores and threads serve distinct purposes, neither is technically better. The optimal choice depends on the workload and software optimization.

The following table presents the main differences, showing which scenarios benefit most from more cores or more threads:

| Feature | More Cores | More Threads |
|---|---|---|
| Strength | Enables multiple independent tasks to run simultaneously. | Handles multiple instruction streams per core, keeping cores busy. |
| Typical workloads | High-demand parallel tasks, such as video rendering, 3D modeling, scientific simulations, and running multiple virtual machines. | Multitasking and lighter parallel tasks, such as office apps, web browsing, or background processes. |
| Performance effect | Increases raw computational power. | Improves utilization of existing cores without adding raw computational power. |
| Scalability | Adding more cores directly increases the CPU's ability to process independent tasks in parallel. | Gains are limited by the core's shared resources and diminish when threads compete for the same execution units or cache. |
| Cost and power | More cores increase power consumption and CPU cost. | SMT requires relatively little extra die area and power compared with additional cores, so its efficiency gains come at a low cost. |

Conclusion

This text explained what CPU threads and cores are and how they differ across several criteria. It also showed how they work together using a practical example. Finally, it discussed whether CPU cores or threads are better and in which situations.

Next, learn what load balancing is and how to determine the Linux load average.