The popularity of AI development has influenced recent advances in computational hardware. While GPUs regularly receive updates for deep learning and scientific computing, the specific requirements of large language models (LLMs) demanded a new type of hardware, the Language Processing Unit (LPU).

This article explains the differences between LPUs and GPUs, examining their architectural designs, operational principles, and specific use cases.

What Is LPU?

The Language Processing Unit (LPU) is a specialized microprocessor class catering to the computational requirements of large language models (LLMs). Unlike general-purpose processors, LPUs excel in sequential data processing and running bandwidth-hungry applications.

The design of LPUs prioritizes efficiency for inference workloads, e.g., generating text or processing natural language inputs. The LPU excels at real-time AI by handling long sequences of operations with low latency and high throughput.

How Does LPU Work?

LPUs use a specialized parallel architecture (e.g., systolic array) tailored to the matrix multiplication and vector operations necessary for developing LLMs. Because LLMs need constant access to their parameters, LPUs feature special on-chip memory and connections to minimize the time spent fetching data.

LPUs improve the speed using two essential techniques:

- Sparse attention optimization, i.e., reducing the number of unnecessary computations.

- Tensor parallelism, i.e., distributing a single tensor across multiple processing cores.

The stream-based operational model of an LPU has data flowing through a series of processing elements, each performing a pre-defined task.

LPU Practical Applications

The primary LPU function is AI inference. LPUs can process user queries and generate responses with significantly lower latency than a general-purpose GPU. Practical LPU applications include:

- Generative AI services. Powering chatbots, content creation tools, and conversational AI agents.

- Natural language understanding (NLU). Enhancing search engines, sentiment analysis tools, and language translation services.

- Real-time transcription and summarization. Processing and summarizing spoken language in real-time.

- Code generation and debugging. Accelerating AI assistants that write and analyze software code.

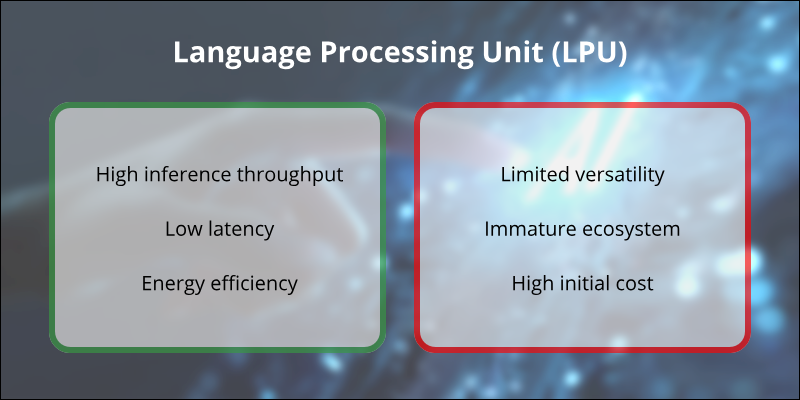

LPU Advantages

The advantages of LPUs include:

- High inference throughput. By minimizing data movement and optimizing for specific LLM operations, LPUs process more tokens per second than a general-purpose processor.

- Low latency. The focus on sequential processing reduces the time between a request and a response, which is crucial for real-time applications.

- Energy efficiency. LPUs consume less power per inference task compared to GPUs, making them a viable option for data centers, where they reduce operational costs and environmental impact.

LPU Disadvantages

Despite their strengths, LPUs have several limitations, such as:

- Limited versatility. LPUs are not general-purpose processors. They are ineffective for tasks like graphics rendering, scientific simulations, or deep learning.

- Immature ecosystem. The LPU market and software ecosystem are in an early stage of growth. As a result, using them in software development can be challenging (limited tool support, a smaller community of developers).

- High initial cost. Specialized hardware often carries a high development and manufacturing cost, which can make LPUs more expensive to acquire than a comparable GPU in the short term.

What Is GPU?

A Graphics Processing Unit (GPU) is an electronic chip designed to manipulate and alter memory to accelerate image creation. Initially developed for graphics rendering, GPUs have evolved into powerful parallel processors capable of handling a wide range of computational tasks.

The GPU architecture is based on a massive number of cores optimized to perform the same operation on multiple data points simultaneously. This parallel processing capability makes them ideal for tasks that can be broken down into thousands of independent, identical computations.

How Does GPU Work?

GPUs operate on the principle of massively parallel computing. They consist of hundreds or thousands of smaller, dedicated cores grouped into streaming multiprocessors (SMs). Each core can execute a single instruction on a different piece of data. This architecture is perfect for single instruction, multiple data (SIMD) workloads.

When a program is executed on a GPU, the workload is divided into thousands of threads. Each thread performs the same operation (such as a matrix multiplication or a pixel shader calculation) on a different element of the input data.

The GPU's memory hierarchy, including high-speed on-chip caches and dedicated VRAM (Video Random Access Memory), ensures that data can reach these cores at a pace that matches their computational speed.

GPU Practical Applications

GPUs are versatile processors with applications in the following fields:

- Deep learning training. The parallel nature of GPUs makes them the standard for training complex deep neural networks requiring trillions of floating-point operations.

- Scientific computing. GPUs accelerate simulations in fields like physics, chemistry, and meteorology, modeling everything from protein folding to weather patterns.

- Gaming and graphics rendering. Powering realistic visuals in video games and professional rendering applications.

- Data analytics and cryptography. GPUs accelerate complex data queries, machine learning models, and cryptographic hashing.

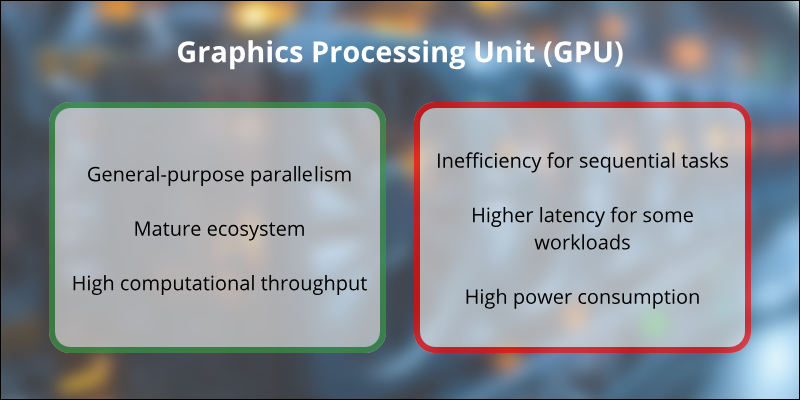

GPU Advantages

The key strength of GPUs is their versatility. Other advantages include:

- General-purpose parallelism. GPUs can handle many parallel workloads, from training AI models to rendering complex 3D scenes.

- Mature ecosystem. The GPU market has a well-established software and hardware ecosystem, including extensive libraries like CUDA and ROCm, and a large community of developers.

- High computational throughput. GPUs offer excellent raw floating-point performance, making them the superior choice for compute-intensive tasks, especially training large models from scratch.

GPU Disadvantages

The general-purpose nature of GPUs presents the following drawbacks:

- Inefficient for sequential tasks. GPUs are not optimized for sequential processing. A single-threaded task does not benefit from the parallel architecture and may even run slower than on a CPU.

- Higher latency for specific workloads. While GPUs excel at throughput, their design can introduce higher latency for specific inference tasks, especially those requiring frequent data fetching or non-uniform data access.

- Power consumption. GPUs, especially high-end models, can be power-hungry, leading to significant thermal management challenges and higher operational costs.

LPU vs. GPU: Differences

The fundamental difference between an LPU and a GPU is their purpose. A GPU is a general-purpose parallel processor, while an LPU is a specialized processor for language model inference.

The table below provides a quick overview of their key differences:

| LPU | GPU | |

|---|---|---|

| Primary use case | LLM inference. | General-purpose parallel computing (training, graphics). |

| Architectural focus | Sequential and sparse computations. | Massively parallel SIMD computations. |

| Workload type | High-throughput, low-latency inference. | High-throughput, compute-intensive tasks. |

| Memory access | Optimized for on-chip memory access, with minimal data movement. | High-bandwidth VRAM for massive data processing. |

| Efficiency | High energy efficiency per inference task. | High energy efficiency for parallel tasks. |

| Programming model | Software-defined, deterministic assembly line. | Hardware-controlled, non-deterministic scheduling. |

| Data types and precision | Optimized for lower precision inference (e.g., INT8). | Versatile, supporting a wide range of precisions (FP64, FP32, FP16). |

| Scalability | Linear, synchronous scaling via deterministic networking. | Complex, multi-layered scaling with network contention. |

| Design goals | Minimizing latency and maximizing sequential throughput. | Maximizing parallel throughput and graphics rendering. |

| Ecosystem maturity | Young, with a limited number of vendors and tools. | Mature, with a vast ecosystem and extensive tool support. |

Primary Use Case

LPUs are a relatively new class of chip for LLM inference, i.e., the process of using a pre-trained large language model to generate a response. They are optimized for the sequential, context-heavy tasks dealing with understanding and generating human language, making them highly efficient for applications like chatbots and AI-powered writing assistants.

A GPU, on the other hand, was developed for general-purpose parallel computing. Parallel processing capability makes GPUs incredibly versatile and powerful for a wide range of tasks beyond graphics, including machine learning training, scientific simulations, and video rendering.

Architecture

The LPU's architecture is a highly specialized pipeline. It uses a systolic array or a similar dataflow design optimized for the specific matrix multiplications and attention mechanisms within transformer models. Built for model parallelism and sequential dataflow, LPUs minimize the time data spends traveling between memory and the processor.

The GPU's architecture is a collection of thousands of tiny, general-purpose cores designed for parallel execution of simple tasks. GPUs are useful in data parallelism scenarios, where they apply the same operation to many different data points simultaneously.

Workload

The LPU's workload is characterized by sequential, dependency-heavy computations, such as those found in autoregressive language model inference. Generating a new word depends on the previous word, creating a sequential chain of operations that LPUs can handle with low latency.

A GPU's workload features a high degree of independent, parallelizable computations. It is ideal for training a neural network, where each neuron's weights can be updated in parallel.

Memory

LPUs are designed to minimize external memory access. They incorporate larger on-chip caches and specialized interconnects to keep the massive model parameters and intermediate activations as close to the processing units as possible. These hardware features reduce the latency when fetching data from off-chip memory.

GPUs rely on high-bandwidth external memory (VRAM) to feed their thousands of cores. Their design assumes that the data being processed is large and can be accessed in parallel.

Performance

LPUs can offer better performance in terms of tokens per second and reduced latency, particularly for real-time LLM applications. Their specialized architecture avoids the overhead and inefficiencies of a GPU executing a sequential, dependency-heavy task.

GPUs offer superior raw computational power for model training, especially for large-scale models from scratch. Their ability to perform trillions of floating-point operations per second in parallel makes them unrivaled.

Efficiency

In terms of power efficiency per operation, LPUs are designed to be more efficient for their specific workload. By eliminating unnecessary components and optimizing the data path, they can perform an LLM inference task with less power consumption than a general-purpose GPU.

A GPU's efficiency is high for general-purpose parallel tasks, but this efficiency diminishes when faced with workloads that don't fit its massively parallel model, such as LLM inference.

Programming Model

LPUs often use a software-defined, deterministic approach, where a compiler statically schedules all operations, including memory access and data flow. This assembly line model eliminates contention and guarantees a predictable execution time.

In GPUs, the hardware scheduler manages thread execution and resource allocation. The potential side effects include non-deterministic timing and potential for performance variability.

Data Types and Precision

Because LPUs specialize in inference, they often optimize for lower-precision data types (INT8, INT4) to maximize throughput and energy efficiency. While this can impact training accuracy, it is a suitable trade-off for inference with fixed model weights.

GPUs are versatile and support a wide range of data types, including high-precision floating-point numbers (FP64, FP32) for scientific computing and training, as well as lower-precision types (FP16, INT8).

Scalability

LPUs are designed for linear, synchronous scalability. Their deterministic network allows multiple chips to function as a single, larger pipeline, with the compiler orchestrating data movement between them with predictable timing. This property simplifies the scaling process and maintains low latency even across a large number of chips.

GPUs typically scale using complex interconnects like NVLink and PCIe, creating a multi-layered network that can introduce communication overhead and non-deterministic latencies. This network can be challenging to manage as the number of GPUs increases.

Design Goals

The LPU was designed from the ground up with the specific goal of minimizing latency and maximizing sequential throughput for language model inference. Every architectural choice, from on-chip memory to the deterministic pipeline, serves this single purpose.

The GPU's initial design focused on graphics, with the primary goal of rendering polygons and pixels in parallel. Its evolution for AI has centered on adapting this parallel processing capability to general-purpose scientific and deep learning tasks.

Ecosystem Maturity

The LPU ecosystem is still in its infancy. It is dominated by a few key players, and its software support still is not as extensive or as universally compatible as that of the GPU. This fact can make development and deployment more challenging for early adopters.

The GPU ecosystem, pioneered by companies like NVIDIA, has been established for decades. It includes a vast array of software libraries (CUDA, cuDNN), a large community of developers, and a wide selection of hardware from multiple vendors.

LPU vs. GPU: How to Choose

Selecting between an LPU and a GPU depends on the specific application requirements. It's not a matter of one being inherently superior, but rather a choice of the right tool for the job.

Choose an LPU:

- For real-time LLM inference. If the application requires low latency and high throughput for conversational AI, chatbots, or other generative text tasks, an LPU can offer better performance and energy efficiency.

- For cost-sensitive and power-constrained LLM environments. Its lower power consumption per inference task can lead to significant long-term savings in a data center.

- For competitive advantage. While the ecosystem is less mature, early adoption could lead to a competitive advantage in specific LLM-centric applications.

Choose a GPU:

- For AI model training. Its raw parallel processing power and mature software ecosystem are essential for training large models efficiently.

- For multi-purpose computing. If the hardware needs to handle a variety of tasks, including graphics, scientific simulations, and both AI training and inference, the GPU's flexibility is a significant asset.

Conclusion

After reading this comparison, you are equipped to decide whether to choose LPUs or GPUs for your AI development project. The article covered the architectural design and operational principles of GPUs and LPUs, provided direct comparison in the key categories, and offered tips on how to choose the right chip.

Next, read why you should use GPUs for deep learning.