Kubernetes networking offers a framework for providing pods, services, and external resources with a flexible and scalable way to exchange data. By abstracting the underlying network infrastructure, Kubernetes makes it easy for applications deployed in the cluster to communicate securely and reliably.

Aside from powering intra-cluster networking, Kubernetes helps users expose applications to clients outside the cluster. To achieve this, Kubernetes uses the concept of Ingress.

This guide will provide essential information about the types and benefits of Kubernetes Ingress, alongside practical examples.

What is Kubernetes Ingress?

Kubernetes Ingress is a resource that defines the rules for routing external HTTP and HTTPS traffic into the cluster. It is a Kubernetes API object that manages external access to services by specifying the host, path, and other necessary information. Administrators define Ingress using YAML manifests, the kubectl CLI, or Kubernetes API calls.

Ingress allows for the flexible creation of HTTP and HTTPS routing rules and provides cluster services with:

- Load balancing.

- Name-based virtual hosting.

- SSL/TLS termination (decryption).

By acting as a single entry point to the cluster, Ingress simplifies application management and the troubleshooting of routing problems.

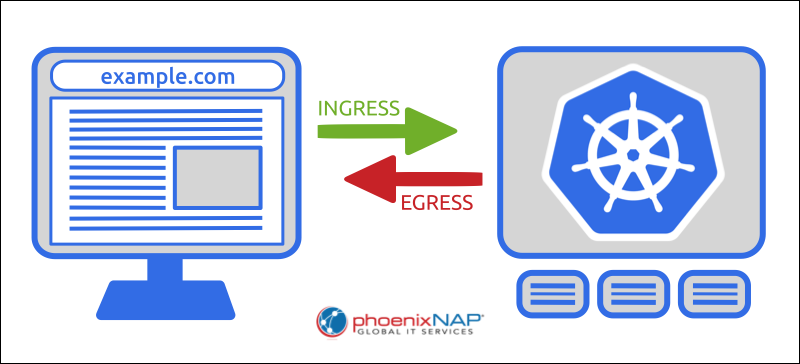

Ingress vs. Egress

Kubernetes network policies (NetworkPolicy objects) let administrators create separate sets of rules for incoming and outgoing pod traffic. The terms ingress and egress distinguish between the two traffic directions:

- Ingress is traffic coming into the cluster or a pod. The Ingress resource described in this guide routes external traffic into the cluster, while the ingress rules of a NetworkPolicy control which sources may reach a pod.

- Egress is traffic leaving a pod or the cluster. Egress traffic is usually initiated by a pod and forwarded to a destination such as an external service; the egress rules of a NetworkPolicy control which destinations are allowed.
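To make the two directions concrete, below is a minimal NetworkPolicy sketch that restricts both incoming and outgoing traffic for one group of pods. The policy name, the `app: example-app` and `role: frontend` labels, the CIDR block, and the port numbers are hypothetical placeholders chosen for illustration.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: example-network-policy   # hypothetical name
  namespace: default
spec:
  # Pods this policy applies to (hypothetical label)
  podSelector:
    matchLabels:
      app: example-app
  # Enforce rules in both directions
  policyTypes:
    - Ingress
    - Egress
  # Ingress: only pods labeled role=frontend may connect, on TCP 8080
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 8080
  # Egress: selected pods may only open connections to this
  # (hypothetical) address range on TCP 5432
  egress:
    - to:
        - ipBlock:
            cidr: 10.0.0.0/24
      ports:
        - protocol: TCP
          port: 5432
```

NetworkPolicy rules are only enforced if the cluster's network plugin supports them. Also, once egress is restricted like this, the selected pods can no longer reach the cluster DNS service unless an additional egress rule allows port 53.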

What is a Kubernetes Ingress Controller?

A Kubernetes Ingress controller is an implementation of the Ingress API that performs load balancing and reverse proxy functions in the Kubernetes cluster. The controller interprets the rules in Ingress resource specifications and puts them into effect. It watches the cluster for changes to those resources and reconfigures itself dynamically so that incoming traffic keeps being routed correctly.

An Ingress controller works like the built-in controllers that manage Deployments, ReplicaSets, and StatefulSets: it tracks resources in the cluster and ensures that their actual state matches the desired state defined by the user. Unlike those controllers, however, an Ingress controller is not started automatically with the cluster and must be deployed separately.

More than one Ingress controller can run inside a single cluster. Additionally, multiple implementations of Ingress controllers exist, such as NGINX Ingress Controller, Kong Ingress, HAProxy, and Traefik. These controllers offer features like traffic splitting and path-based routing, and they differ in the network protocols, authentication methods, and load-balancing algorithms they support.
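When several controllers share a cluster, each Ingress can state which controller should handle it through the `spec.ingressClassName` field, which references an IngressClass object. The sketch below assumes the NGINX Ingress Controller (ingress-nginx), which registers the controller name `k8s.io/ingress-nginx`; other controllers use their own names, so check the controller's documentation. The `class-example` Ingress name is hypothetical.

```yaml
# IngressClass that binds the name "nginx" to the ingress-nginx controller
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
# Ingress that opts into that class; controllers of other classes ignore it
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: class-example            # hypothetical name
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: example-service
      port:
        number: 80
```

Adding the annotation `ingressclass.kubernetes.io/is-default-class: "true"` to an IngressClass makes it the default for Ingress resources that do not set `ingressClassName`.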

How Does Kubernetes Ingress Work?

Exposing cluster services externally using Kubernetes Ingress is a multi-step process. The following section explains the mechanism behind Ingress and lists the usual steps necessary to expose a service.

1. Creating an Ingress resource. The user creates an Ingress resource and provides configuration details. The necessary information includes host names, paths, and the services that need external access.

2. Deploying an Ingress controller. The user installs an Ingress controller in the cluster; an Ingress resource has no effect until a controller is running. Once operational, the controller watches the API server and reacts to changes in Ingress resources by updating its routing rules and reconfiguring itself.

3. Proxying and load balancing. The Ingress controller is the point of contact for external requests coming into the cluster. Traffic reaches the controller through a load balancer or a DNS entry that points to the controller's IP address. From there, the controller acts as a reverse proxy and load balancer for incoming requests.

4. Routing. The controller inspects each incoming request, including its host name, path, and (for controllers that support it) header attributes, and selects the target service based on the Ingress resource rules.

5. SSL/TLS termination. Optionally, the Ingress controller can terminate SSL/TLS connections, decrypting HTTPS traffic with a certificate stored in a Kubernetes Secret.

6. Communicating with the service. Once the controller determines which service the client requested, it proxies the request to that service, which then handles it.
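TLS termination (step 5) is configured in the `tls` section of an Ingress, which references a Secret of type `kubernetes.io/tls` holding the certificate and private key. A minimal sketch, assuming a Secret named `service-a-tls` (a hypothetical name) already exists in the same namespace as the Ingress, for example one created with `kubectl create secret tls service-a-tls --cert=<cert-file> --key=<key-file>`:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-ingress-example      # hypothetical name
spec:
  # Terminate TLS for this host using the certificate in the Secret
  tls:
    - hosts:
        - service-a.example.com
      secretName: service-a-tls  # hypothetical Secret name
  rules:
    - host: service-a.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-a
                port:
                  number: 80
```

The controller decrypts HTTPS requests for service-a.example.com and typically forwards them to service-a over plain HTTP unless it is configured to re-encrypt them.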

Types of Ingress

The appropriate Ingress type depends on the design and size of the cluster and the number of services it exposes. The following sections describe the most common types.

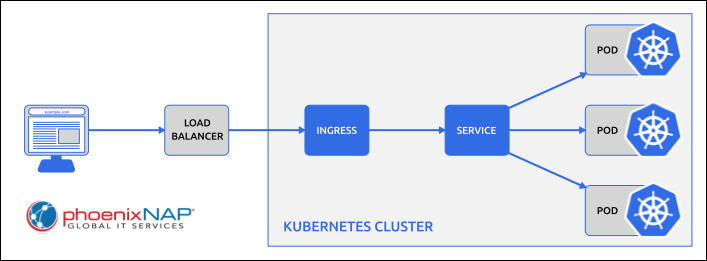

Single Service Ingress

Single Service Ingress (also known as Simple Ingress) is the basic Ingress setup for exposing a single service to external traffic. In this setup, an Ingress is directly associated with a service, as shown in the diagram below.

This type of Ingress connection removes the need to configure multiple host names or apply path-based routing.

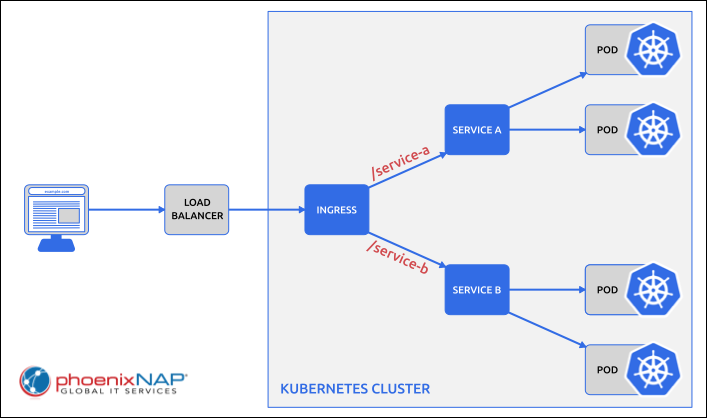

Fanout Ingress

When an Ingress needs to expose more than one service in the cluster, a fanout Ingress routes traffic from a single IP address to each service based on the requested URL path.

The diagram above shows an example of a simple Fanout Ingress. The Ingress forwards the request to the required service based on its HTTP URL, e.g., example.com/service-a. This type allows routing traffic to more than one service using the same IP address.

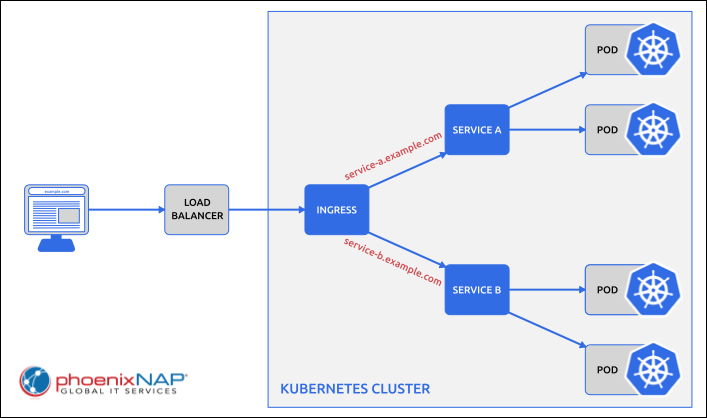

Name-based Virtual Hosting

Name-based Virtual Hosting (NBVH) Ingress routes HTTP traffic to more than one hostname at the same IP address. NBVH allows users to employ different domain names to host multiple services or websites on a single IP address and port. The diagram below illustrates how NBVH works.

NBVH Ingress reads the Host header of each HTTP request to route traffic to the required service. For example, a client request to service-a.example.com would be forwarded to Service A, while a request to service-b.example.com would reach Service B, even though both hostnames resolve to the same IP address.

Difference Between Ingress, ClusterIP, NodePort, and LoadBalancer

Ingress is one of many ways to expose a service in the cluster. Other standard techniques include using ClusterIP, NodePort, and LoadBalancer service types.

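- ClusterIP, the default service type, exposes a service on an internal cluster IP address that is reachable only from inside the cluster. It is the usual choice for internal traffic between services and is also useful during development and testing or for internal tools such as dashboards.

- NodePort exposes a service on a static port (from the 30000-32767 range by default) on the IP address of every node. While NodePort is generally not recommended as the entry point for production traffic, it is frequently used in development and testing when there is no need for load balancing, i.e., when exposing a single service.

- LoadBalancer is the only method of the three that can replace an Ingress, since it is suitable for production and exposes services to external traffic by provisioning an external load balancer, typically from the cloud provider. However, each LoadBalancer service usually gets its own load balancer and external IP address, whereas a single Ingress can route traffic to many services.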
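For comparison, the three service types differ mainly in the `type` field of a Service manifest. The sketch below shows one Service per type; the Service names, the `app: example-app` selector, container port 8080, and node port 30080 are hypothetical values.

```yaml
# ClusterIP (default): reachable only from inside the cluster
apiVersion: v1
kind: Service
metadata:
  name: example-clusterip
spec:
  type: ClusterIP
  selector:
    app: example-app
  ports:
    - port: 80          # port on the cluster IP
      targetPort: 8080  # port the pods listen on (assumed)
---
# NodePort: additionally reachable on <node-IP>:30080 on every node
apiVersion: v1
kind: Service
metadata:
  name: example-nodeport
spec:
  type: NodePort
  selector:
    app: example-app
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30080   # must fall inside the node port range
---
# LoadBalancer: asks the cloud provider for an external load balancer
apiVersion: v1
kind: Service
metadata:
  name: example-loadbalancer
spec:
  type: LoadBalancer
  selector:
    app: example-app
  ports:
    - port: 80
      targetPort: 8080
```

Ingress backends usually point at ClusterIP services like the first one, because the Ingress controller itself is the component exposed to external traffic.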

Kubernetes Ingress Benefits

The concept of Ingress improves Kubernetes networking in several important ways, from simplifying configuration to allowing for greater flexibility and extensibility. By configuring Ingress in a cluster, users can:

- Simplify the external connectivity management. Ingress allows users to set up, fine-tune, and control access to their services.

- Define routing rules based on hostnames and URL paths. This gives users finer control over where each request goes.

- Set up built-in load balancing. Running multiple pod replicas behind a service improves scalability and availability. With built-in load-balancing support, Ingress spreads traffic across those replicas, making better use of available resources and helping the application handle large traffic volumes.

- Simplify access to services. Ingress standardizes how services are exposed to external clients and abstracts away the details of individual services. Therefore, clients can reach the services they need via a consistent interface of hostnames and paths.

- Encrypt and decrypt HTTPS traffic. SSL/TLS termination at the Ingress encrypts traffic between clients and the cluster and centralizes certificate management. This is not end-to-end encryption by default: traffic between the controller and the backend services is unencrypted unless the controller is configured to re-encrypt it.

- Adopt flexible and extensible routing strategies. A variety of supported Ingress Controllers, and the ability to run more than one controller in the cluster, allow users to create the design that fits their use case.
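The load-balancing benefit relies on a service that selects several pod replicas. A minimal sketch, assuming a hypothetical Deployment named `example-app` running three replicas of the public `nginx` image behind the `example-service` Service that the Ingress examples below use as a backend:

```yaml
# Three identical pods labeled app=example-app (hypothetical workload)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: example-app
  template:
    metadata:
      labels:
        app: example-app
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
---
# Service that groups all pods with the app=example-app label
apiVersion: v1
kind: Service
metadata:
  name: example-service
spec:
  selector:
    app: example-app
  ports:
    - port: 80
      targetPort: 80
```

An Ingress that routes to example-service spreads requests across the three pods; the exact balancing algorithm depends on the Ingress controller in use.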

Kubernetes Ingress Examples

The most common way to introduce Ingress to a Kubernetes cluster is by specifying its configuration in a YAML file and then applying it using kubectl. Each Ingress YAML must contain the following fields:

- apiVersion

- kind

- metadata

- spec

The metadata field contains the Ingress object's name, which must be a valid DNS subdomain name.

Below are a few examples of Ingress YAML files.

- Single Service Ingress:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: simple-ingress-example
spec:
  # All traffic goes to a single service
  defaultBackend:
    service:
      name: example-service
      port:
        number: 80
```

- Fanout Ingress:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fanout-ingress-example
spec:
  # Receives requests that match none of the rules below
  defaultBackend:
    service:
      name: example-service
      port:
        number: 80
  rules:
    # One host, several services selected by URL path
    - host: example.com
      http:
        paths:
          # example.com/service-a and anything under it
          - path: /service-a
            pathType: Prefix
            backend:
              service:
                name: service-a
                port:
                  number: 80
          # example.com/service-b and anything under it
          - path: /service-b
            pathType: Prefix
            backend:
              service:
                name: service-b
                port:
                  number: 80
```

Note: If an HTTP request does not match any of the rules listed in the rules field, Ingress routes it to the service specified in the defaultBackend field. In the example above, that is example-service.

- Name-based Virtual Hosting Ingress:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nbvh-example
spec:
  rules:
    # Requests with Host: service-a.example.com
    - host: service-a.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-a
                port:
                  number: 80
    # Requests with Host: service-b.example.com
    - host: service-b.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-b
                port:
                  number: 80
```

Note: Learn how to set up Kubernetes Ingress with MicroK8s.

Conclusion

After reading this guide, you should have a basic understanding of the concept of Ingress in Kubernetes. The guide explained how Ingress and Ingress controllers work, provided examples and diagrams, and presented the most important benefits of setting up Ingress in your cluster.

If you are interested in Kubernetes networking, read our Kubernetes Networking Guide.