Automation is one of the fundamental DevOps principles and an essential practice in every CI/CD pipeline. With automation, teams increase productivity and streamline workflows by eliminating repetitive tasks.

Both sides of the DevOps pipeline benefit from this practice: developers automate the build and test steps of the development phase, while operations engineers achieve greater agility by automating infrastructure.

This article discusses server automation and its role in the software development life cycle. The guide will also introduce you to the relevant tools and explain the benefits of server automation.

What Is Server Automation?

Server automation is the practice of automating server management tasks. It includes provisioning, deploying, monitoring, maintaining, and de-provisioning servers using automation software.

Modern software development projects frequently require complex server networks that combine on-premises, hybrid, and cloud infrastructure. Maintaining these networks manually takes more staff and time than most companies can afford.

The rise of Infrastructure-as-Code (IaC) solutions allowed DevOps infrastructure engineers to manage server infrastructure like software, using simple, human-readable code. Taking the manual work out of server management also reduced the problems caused by human error, such as misconfigured or inconsistently set up servers.

How Server Automation Works

There are two main approaches to automating server resources.

- The imperative approach involves executing a set of commands that instruct the system on how to create the desired server configuration.

- The declarative approach provides engineers with greater flexibility by requiring only a description of the desired configuration state. The system then compares the description with the current state and automatically performs all the necessary adjustments to achieve the desired configuration.
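To make the difference between the two approaches concrete, here is a minimal sketch in Python. It models a server as two sets (installed packages and running services); the state layout and package names are assumptions for illustration, not how any particular tool stores state. The imperative function runs a fixed list of commands, while the declarative function only receives the desired state and works out the adjustments itself.

```python
# Toy model of a server: sets of installed packages and running services.
# The state layout and package names are hypothetical.

def imperative_setup(state: dict) -> dict:
    """Imperative style: execute a fixed, ordered list of commands."""
    commands = [("install", "nginx"), ("start", "nginx")]
    for action, name in commands:
        if action == "install":
            state["packages"].add(name)
        elif action == "start":
            state["services"].add(name)
    return state


def declarative_apply(current: dict, desired: dict) -> list:
    """Declarative style: diff desired vs. current state, then make only
    the adjustments needed to reach the desired state."""
    changes = []
    for key in ("packages", "services"):
        kind = key[:-1]  # "package" / "service"
        for item in sorted(desired[key] - current[key]):
            current[key].add(item)
            changes.append(f"add {kind} {item}")
        for item in sorted(current[key] - desired[key]):
            current[key].discard(item)
            changes.append(f"remove {kind} {item}")
    return changes


server = {"packages": {"curl", "vim"}, "services": set()}
desired = {"packages": {"curl", "nginx"}, "services": {"nginx"}}
print(declarative_apply(server, desired))  # ['add package nginx', 'remove package vim', 'add service nginx']
print(declarative_apply(server, desired))  # [] because the server already matches
```

Running the declarative function a second time changes nothing, which is the property that lets declarative tools be applied repeatedly without side effects.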

Cloud-based servers are integrated into a DevOps workflow through cloud APIs. These APIs enable data exchange between users and cloud service providers, allowing users to:

- Provision and de-provision servers.

- Provision and manage storage options.

- Automate infrastructure billing.

- Monitor, track, and organize resources.

- Configure networking.
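The following sketch shows roughly what such API calls look like from a script. The endpoint, token, and payload fields are hypothetical placeholders, since every provider defines its own API; the code only builds the HTTP requests with Python's standard library and does not send them.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical endpoint and token: every provider defines its own URLs,
# payload fields, and authentication scheme.
API_BASE = "https://api.example.com/v1"
API_TOKEN = "REPLACE_ME"


def build_request(method: str, path: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON request to the cloud API."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        f"{API_BASE}{path}",
        data=data,
        method=method,
        headers={"Authorization": f"Bearer {API_TOKEN}", "Content-Type": "application/json"},
    )


# Provision a server, check on it, and de-provision it when it is no longer needed.
create = build_request("POST", "/servers", {"hostname": "web-01", "plan": "small", "location": "region-1"})
status = build_request("GET", "/servers/web-01")
delete = build_request("DELETE", "/servers/web-01")
# urllib.request.urlopen(create) would actually send the request.
```

Automation tools such as Terraform wrap calls like these behind providers, so engineers rarely write them by hand.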

Tools for Server Automation

Many tools on the market help set up server automation. They range from infrastructure provisioning platforms like Terraform to container orchestration solutions like Kubernetes. The following list features some of the most popular tools across these categories.

Terraform

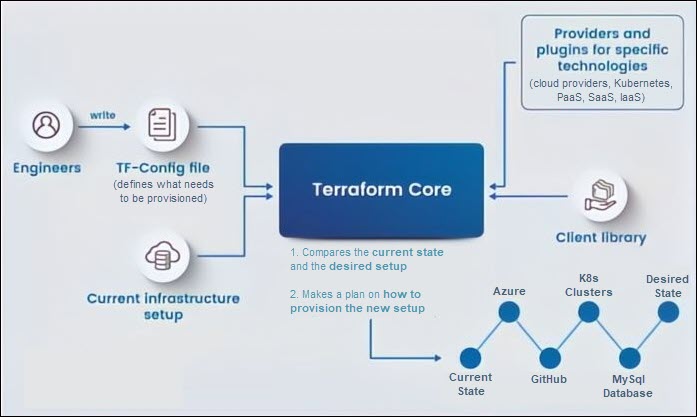

Terraform is an IaC platform for infrastructure task automation whose primary function is provisioning cloud resources. It is an open-source, Go-based tool that allows engineers to define their infrastructure in .tf configuration files written in the HashiCorp Configuration Language (HCL) or JSON.
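As a small illustration of the JSON option, the sketch below generates a configuration in Terraform's JSON syntax, which Terraform reads from files ending in .tf.json. It uses the AWS provider's aws_instance resource as an example; the region, AMI ID, and tag values are placeholders. The same resource in HCL would be written as a resource "aws_instance" "web" block.

```python
import json

# Terraform also reads JSON-syntax configuration from files ending in .tf.json.
# The region, AMI ID, and tag values are placeholders.
config = {
    "provider": {"aws": {"region": "us-east-1"}},
    "resource": {
        "aws_instance": {
            "web": {
                "ami": "ami-00000000000000000",
                "instance_type": "t3.micro",
                "tags": {"Name": "web-01"},
            }
        }
    },
}


def render_config() -> str:
    """Return the configuration as text, ready to be saved as main.tf.json."""
    return json.dumps(config, indent=2)


print(render_config())
```

Generating JSON like this is mainly useful when another program produces the configuration; hand-written configurations are usually kept in HCL.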

Terraform has two main components:

- Terraform Core is a statically compiled binary that provides the terraform command-line tool. Terraform Core reads the configuration files and compares the desired state with the current infrastructure state. This component also creates a plan for executing the necessary changes and communicates with plugins using remote procedure calls (RPC).

- Terraform Plugins are executable binaries that expose specific service and provisioner implementations. These implementations include various cloud service providers (such as phoenixNAP or AWS) and provisioners (like local-exec and remote-exec).
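The comparison Terraform Core performs can be sketched as a diff between two maps of resources. The model below is a deliberate simplification under stated assumptions: resource addresses and attributes are hypothetical, and real Terraform also tracks dependencies, unknown values, and forced replacements. The +, -, and ~ prefixes follow the symbols terraform plan uses for create, destroy, and in-place update.

```python
# Simplified model of a plan step: diff desired vs. current resource state.
# Addresses and attributes are hypothetical.

def make_plan(desired: dict, current: dict) -> list:
    plan = []
    for address in sorted(desired.keys() | current.keys()):
        if address not in current:
            plan.append(f"+ {address}")  # create
        elif address not in desired:
            plan.append(f"- {address}")  # destroy
        elif desired[address] != current[address]:
            old, new = current[address], desired[address]
            changed = sorted(k for k in old.keys() | new.keys() if old.get(k) != new.get(k))
            plan.append(f"~ {address} ({', '.join(changed)})")  # update in place
    return plan


desired = {
    "aws_instance.web": {"instance_type": "t3.small"},
    "aws_instance.db": {"instance_type": "t3.large"},
}
current = {
    "aws_instance.web": {"instance_type": "t3.micro"},
    "aws_instance.old": {"instance_type": "t3.micro"},
}
for line in make_plan(desired, current):
    print(line)
```

Separating the plan from its execution is what lets engineers review changes before Terraform applies them.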

The declarative approach to infrastructure configuration and the wide choice of providers make Terraform a reliable and efficient tool for provisioning cloud resources.

Note: Learn Terraform basics by reading How to Provision Infrastructure with Terraform.

Ansible

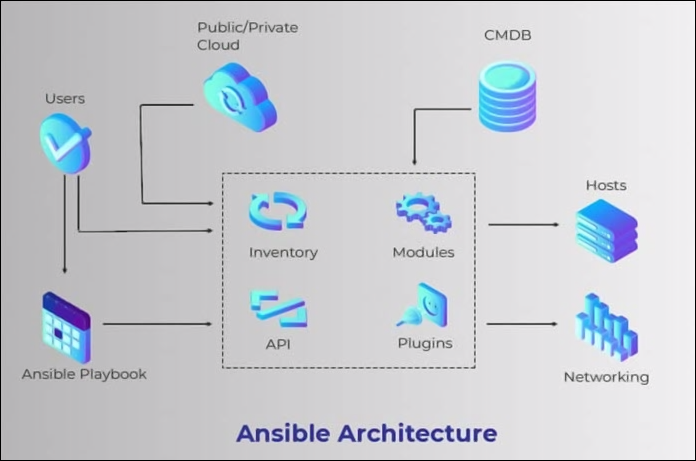

Ansible is an open-source software suite that automates provisioning, orchestration, software deployment, and other IT processes. The tool is particularly useful for managing and monitoring remote servers using a single control node.

The primary tool for passing job instructions in Ansible is called a playbook. Each Ansible playbook uses the YAML format to list plays, i.e., sets of tasks to be completed.

The following components comprise the Ansible engine:

- Modules are small programs that contain models of a desired state of the system. Ansible pushes modules to server nodes and executes them over SSH.

- Plugins extend Ansible's functionality. Users can choose between using premade plugins or writing extensions of their own.

- Host inventory is a file, commonly in INI or YAML format, that puts machines into custom, user-defined groups.

- The Ansible Python API can control nodes, create plugins, and manipulate inventory data from external sources.
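To show what grouping hosts in an INI inventory looks like, the sketch below reads a tiny inventory with Python's configparser. The host names are hypothetical, and configparser only understands the simplest form of the format. It does not interpret Ansible-specific syntax such as inline host variables, host ranges, or :children and :vars sections, so real inventories should be inspected with Ansible's own tooling (for example, ansible-inventory --list).

```python
import configparser

# A small INI-style inventory; host names are hypothetical.
INVENTORY = """
[webservers]
web1.example.com
web2.example.com

[dbservers]
db1.example.com
"""


def load_groups(text: str) -> dict:
    """Return {group: [hosts]} from a simple INI inventory (sketch only)."""
    parser = configparser.ConfigParser(allow_no_value=True, delimiters=("=",))
    parser.optionxform = str  # keep host names exactly as written
    parser.read_string(text)
    return {group: list(parser[group]) for group in parser.sections()}


print(load_groups(INVENTORY))
```

A play that targets webservers would then run its tasks on both web hosts.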

Ansible excels in multitier application deployments and is often used in scenarios involving zero-downtime CI/CD workflows. Since it is simple to learn, this tool represents a good entry point to the world of DevOps.

Note: Ansible is a Linux-based tool. If you need to run Ansible on Windows, read How to Install and Configure Ansible on Windows.

Pulumi

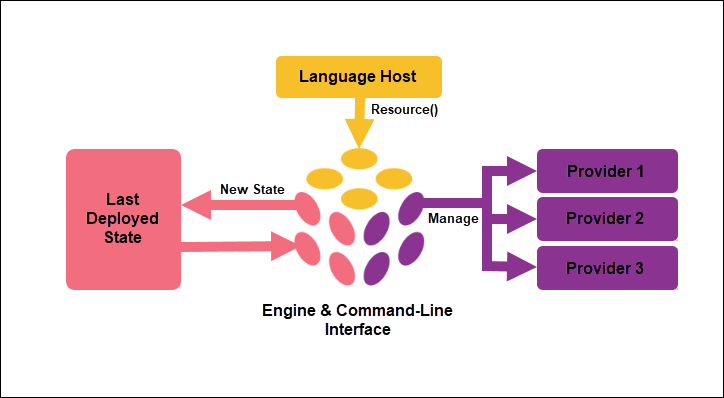

Pulumi is an open-source IaC platform that uses popular general-purpose programming languages to manage cloud infrastructure. Unlike Terraform, which uses its own domain-specific language (HCL), Pulumi lets engineers define their infrastructure using Python, JavaScript, TypeScript, Go, and .NET languages. As a result, engineers who already know one of these languages face a gentler learning curve than with tools that require a dedicated configuration language.

The typical Pulumi workflow has three stages:

- The language host executes a program with new resource parameters and creates an environment for interaction with the deployment engine.

- The deployment engine receives the desired infrastructure state and computes which changes the current resources need to reach it.

- The engine sends resource management instructions to the resource providers that host the affected resources.

Each resource provider has an SDK with resource bindings and a resource plugin that the deployment engine uses to communicate with the provider's resources.
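The three stages can be modeled in a few lines of Python. This is a toy sketch, not Pulumi's SDK: all classes and functions are hypothetical, the program simply returns its resources (real Pulumi programs register them with the engine as they run), and deletes are omitted. The only detail borrowed from Pulumi is the "package:module:Type" format of resource type tokens, whose package part identifies the provider.

```python
from dataclasses import dataclass, field

# Toy model of the three Pulumi stages. All names are hypothetical; only the
# "package:module:Type" token format mirrors Pulumi.


@dataclass
class Resource:
    type_token: str  # e.g. "aws:s3/bucket:Bucket"
    name: str
    props: dict


@dataclass
class Provider:
    package: str
    applied: list = field(default_factory=list)

    def apply(self, action: str, res: Resource) -> None:
        # A real provider would call the cloud API here.
        self.applied.append((action, res.name))


def deploy(program, current: dict, providers: dict) -> None:
    # 1. Language host: run the user's program to collect desired resources.
    desired = {res.name: res for res in program()}
    # 2. Deployment engine: decide what has to change (deletes omitted here).
    for name, res in desired.items():
        if name not in current:
            action = "create"
        elif current[name] != res.props:
            action = "update"
        else:
            continue  # already up to date
        # 3. Send the instruction to the provider named in the type token.
        providers[res.type_token.split(":")[0]].apply(action, res)


def my_program():
    return [
        Resource("aws:s3/bucket:Bucket", "site-bucket", {"acl": "private"}),
        Resource("random:index/randomPet:RandomPet", "pet", {"length": 2}),
    ]


providers = {"aws": Provider("aws"), "random": Provider("random")}
deploy(my_program, {"site-bucket": {"acl": "public-read"}}, providers)
print(providers["aws"].applied)     # [('update', 'site-bucket')]
print(providers["random"].applied)  # [('create', 'pet')]
```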

Puppet

Puppet is a tool for server configuration management and automation. Like all other tools listed above, Puppet requires users to provide the desired state of the managed server infrastructure, which is then automatically enforced and maintained.

Puppet's design includes the following important elements:

- Puppet code is written in a declarative language that describes infrastructure specifications in manifest files.

- The Puppet Master is a daemon that knows the configuration of the entire system and each connected agent node.

- The Puppet Agent is an application installed on each managed node. Its purpose is to collect information about the node and perform management actions.

- Facter is a standalone CLI tool that collects facts about nodes and reports them to the Master.

The standard Puppet workflow can be roughly divided into three steps:

- The Puppet Master detects an infrastructure change that a user initiated via a manifest file.

- The manifest is then compared against the data Facter supplied, and the Puppet Master calculates how the system needs to change.

- Finally, the master sends instructions to the relevant agents, which report back when finished.
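The workflow above can be sketched as two functions: one that combines a manifest with a node's facts (the master's job) and one that enforces the result and reports changes (the agent's job). The manifest structure below is hypothetical, since real manifests are written in Puppet's own language. The os.family fact and the apache2/httpd package split are a common real-world case of facts changing what gets installed.

```python
# Toy model: a "manifest" whose package name depends on the node's OS family.
# The data structures are hypothetical; real Puppet compiles manifests written
# in its own DSL into a catalog.

MANIFEST = {
    "package": {"Debian": "apache2", "RedHat": "httpd"},
    "service_running": True,
}


def compile_catalog(manifest: dict, facts: dict) -> dict:
    """Master side: combine the manifest with the facts Facter reported."""
    pkg = manifest["package"][facts["os"]["family"]]
    return {"package": pkg, "service": pkg if manifest["service_running"] else None}


def agent_apply(catalog: dict, node_state: dict) -> list:
    """Agent side: enforce the catalog and report what changed."""
    report = []
    if catalog["package"] not in node_state["packages"]:
        node_state["packages"].add(catalog["package"])
        report.append(f"installed {catalog['package']}")
    if catalog["service"] and catalog["service"] not in node_state["running"]:
        node_state["running"].add(catalog["service"])
        report.append(f"started {catalog['service']}")
    return report


catalog = compile_catalog(MANIFEST, {"os": {"family": "RedHat"}})
node = {"packages": set(), "running": set()}
print(agent_apply(catalog, node))  # ['installed httpd', 'started httpd']
print(agent_apply(catalog, node))  # [] because the node already matches
```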

Note: Understand the similarities and differences between some of the infrastructure tools listed above by referring to our article on Terraform vs. Puppet.

Jenkins

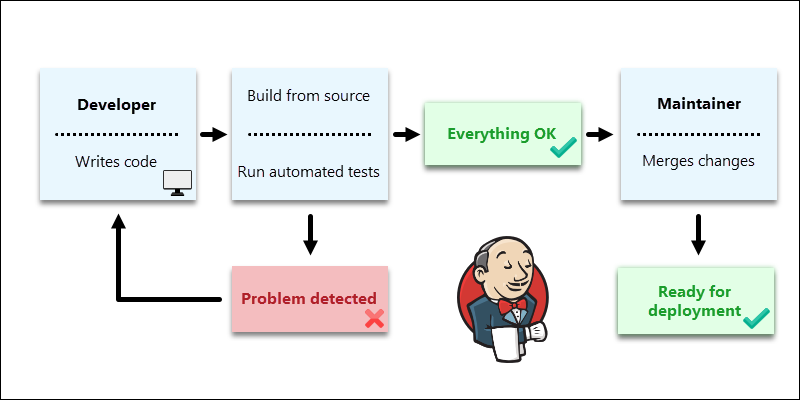

Jenkins is an open-source system-agnostic CI/CD platform that features integration, testing, and deployment technologies for pipeline automation. DevOps teams use Jenkins to:

- Automate building and testing with CI pipelines for applications and infrastructure.

- Automate delivery and achieve continuous code deployment via CD pipelines.

- Automate routine tasks, such as logging, backup, and stats collection.

The diagram below illustrates the continuous integration aspect of Jenkins and shows how test automation helps maintainers receive only relevant software builds.

A Jenkins installation typically spans multiple servers. The Jenkins CI server (Master) is the main machine that monitors the source code repository and pulls newly committed changes. The master then distributes the testing workload among different nodes, i.e., worker machines hosting the build agents.
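One way to split a testing workload among agents is a greedy "longest suite first" assignment, sketched below. This is an illustrative heuristic, not Jenkins' built-in behavior. The suite names, durations, and agent names are hypothetical, and in practice the durations would come from earlier test runs.

```python
import heapq


def split_tests(durations: dict, agents: list) -> dict:
    """Assign each test suite to the currently least-loaded agent,
    taking the longest suites first."""
    heap = [(0.0, agent) for agent in agents]  # (accumulated seconds, agent)
    heapq.heapify(heap)
    plan = {agent: [] for agent in agents}
    for suite, secs in sorted(durations.items(), key=lambda kv: (-kv[1], kv[0])):
        load, agent = heapq.heappop(heap)
        plan[agent].append(suite)
        heapq.heappush(heap, (load + secs, agent))
    return plan


durations = {"unit": 120, "integration": 300, "ui": 240, "lint": 30}
print(split_tests(durations, ["agent-1", "agent-2"]))
```

With these numbers, the agents finish after 330 and 360 seconds instead of a single machine needing 690 seconds.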

While Jenkins is not an IaC tool, it can integrate IaC tools and infrastructure deployments into the CI/CD pipeline. For example, running Terraform from Jenkins eliminates the need to apply TF files manually from the command line.
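In a Jenkins pipeline, Terraform commands usually run in sh steps. The Python sketch below is an assumed alternative that shows which non-interactive commands such a stage might execute. The -chdir, -input=false, and -out options are real Terraform CLI flags. The directory name and the auto_apply switch are hypothetical choices for this example.

```python
import subprocess


def terraform_commands(workdir: str, auto_apply: bool = False) -> list:
    """Non-interactive Terraform steps a CI job might run."""
    chdir = f"-chdir={workdir}"
    cmds = [
        ["terraform", chdir, "init", "-input=false"],
        ["terraform", chdir, "plan", "-input=false", "-out=tfplan"],
    ]
    if auto_apply:
        # Applying a saved plan file does not prompt for approval.
        cmds.append(["terraform", chdir, "apply", "-input=false", "tfplan"])
    return cmds


def run(cmds: list) -> None:
    for cmd in cmds:
        subprocess.run(cmd, check=True)  # fail the job on the first error


for cmd in terraform_commands("infra", auto_apply=True):
    print(" ".join(cmd))
```

Saving the plan and applying exactly that file ensures the pipeline applies the changes that were reviewed, not a freshly computed plan.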

Chef

Chef is an open-source configuration management tool for server automation. Unlike the previously mentioned infrastructure management tools, Chef uses imperative syntax. It features a Ruby-based domain-specific language (DSL) that allows users to specify the exact steps for reaching the desired state of infrastructure in files called recipes.

A typical Chef installation consists of the following components:

- The Chef Server is the system that stores recipes, cookbooks (recipe collections), and other critical infrastructure configuration data.

- The Nodes are physical and virtual servers running the Chef Client. The client communicates with the Chef Server through its REST API.

- The Chef Workstation is the application that enables communication between the users and the Chef Server. This developer toolkit provides all the necessary tools for creating and maintaining recipes, cookbooks, and policies.
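When a Chef Client runs, it works through an ordered run list of recipes, which may also reference roles that expand into further recipes. The sketch below models that expansion using Chef's recipe[...] and role[...] entry syntax. The role definitions are hypothetical, and real role objects carry more data than a plain list. Like Chef, the sketch keeps only the first occurrence of a recipe.

```python
import re

# Hypothetical role definitions, as they might be stored on a Chef Server.
ROLES = {
    "base": ["recipe[ntp]", "recipe[users]"],
    "webserver": ["role[base]", "recipe[nginx]", "recipe[nginx::ssl]"],
}

ENTRY = re.compile(r"^(recipe|role)\[([^\]]+)\]$")


def expand_run_list(run_list: list, roles: dict) -> list:
    """Expand roles recursively into an ordered, de-duplicated recipe list."""
    recipes = []

    def visit(entries, seen_roles):
        for entry in entries:
            match = ENTRY.match(entry)
            if not match:
                raise ValueError(f"invalid run-list entry: {entry}")
            kind, name = match.groups()
            if kind == "role":
                if name in seen_roles:
                    raise ValueError(f"role cycle at {name}")
                visit(roles[name], seen_roles | {name})
            elif name not in recipes:
                recipes.append(name)

    visit(run_list, frozenset())
    return recipes


print(expand_run_list(["role[webserver]", "recipe[monitoring]"], ROLES))
```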

One of the main benefits of Chef is an extensive database of over 3000 cookbooks available in the Chef Supermarket. The premade cookbooks facilitate and speed up the process of deploying basic frameworks and toolkits.

Kubernetes

Kubernetes is an open-source container orchestration tool with multiple important use cases in application development. In CI/CD, Kubernetes is frequently chosen as the primary orchestration solution due to its automation capabilities and efficient resource management.

Each server in a Kubernetes cluster has a role.

- The Master Node runs the API Server, which handles intra-cluster communication and exposes the Kubernetes API. The node stores the cluster data in the etcd key-value store and uses the Scheduler to assign pods to worker nodes.

- The Worker Nodes host workloads and perform the tasks assigned by the Master Node. Each node runs the kubelet, an agent that creates, monitors, and destroys the pods on that node. Pods host containers, which a container runtime (such as containerd) runs.
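The Scheduler's decision can be sketched in two phases: filter out nodes that cannot fit the pod, then score the remaining ones. The real kube-scheduler also works in filtering and scoring phases, but with many plugins and resource types. This sketch considers only requested CPU in millicores and uses a simple "most CPU left afterwards" score. Node names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int   # millicores
    cpu_requested: int  # millicores already requested by pods on the node


def schedule(pod_cpu: int, nodes: list) -> Optional[str]:
    # Filter: keep nodes with enough unrequested CPU for the pod.
    feasible = [n for n in nodes if n.cpu_capacity - n.cpu_requested >= pod_cpu]
    if not feasible:
        return None  # the pod stays Pending
    # Score: prefer the node with the largest fraction of CPU left afterwards.
    best = max(feasible, key=lambda n: (n.cpu_capacity - n.cpu_requested - pod_cpu) / n.cpu_capacity)
    best.cpu_requested += pod_cpu
    return best.name


nodes = [Node("worker-1", 4000, 3000), Node("worker-2", 2000, 500), Node("worker-3", 4000, 1000)]
print(schedule(1500, nodes))  # worker-3
print(schedule(5000, nodes))  # None
```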

Kubernetes is a practical solution for deployments in hybrid and multi-cloud environments. Its environment-agnostic approach and abstractions, such as services, volumes, and ingress controllers, decouple deployments from the underlying infrastructure, which makes applications portable across environments.

SUSE Rancher

Rancher is a software stack that provides an interface for Kubernetes cluster maintenance and app development. Since most cloud providers include Kubernetes as part of their standard infrastructure, Rancher can be a helpful tool for streamlining multi-cluster deployments on private and public clouds.

In the DevOps context, Rancher supports and complements many popular toolsets, such as GitLab, Istio, Prometheus, Grafana, and Jenkins.

Note: For a tutorial on how to use Rancher with Kubernetes, read How to Set Up a Kubernetes Cluster with Rancher.

Benefits of Server Automation

Server automation simplifies, speeds up, and reduces the cost of infrastructure management. The sections below provide more details on the specific benefits of server automation in the DevOps world.

Scalability and Flexibility

Automated server deployments make provisioning and deprovisioning a server trivial. As a result, scaling resources up and down to meet current project needs becomes much easier. This flexibility also streamlines infrastructure management and lets teams use the full potential of orchestration and automation.
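Automated scaling decisions are often simple formulas. As one real example, the Kubernetes Horizontal Pod Autoscaler computes desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric) and skips scaling when the ratio is within a tolerance (0.1 by default). The sketch below implements that formula. The minimum and maximum replica bounds are assumed values for illustration, and cloud autoscaling services for servers use their own policies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1, max_replicas: int = 10) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, 90, 60))  # CPU at 90% vs. a 60% target: scale out to 5
print(desired_replicas(4, 20, 60))  # CPU at 20% vs. a 60% target: scale in to 2
```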

Cost Reduction

The ability to scale resources also provides the benefit of cost reduction. Decommissioning unused servers and spinning them up when needed allows software companies to cut their expenses related to cloud infrastructure.

Another financial benefit of server automation lies in the savings accumulated through hiring fewer server maintenance workers.

DevOps Workload Optimization

DevOps teams that use IaC solutions are more productive because they can remove routine infrastructure maintenance from their workload, which leaves more time to focus on building software. The IaC model also ensures the same environment is deployed every time, including the same server configurations, networks, load balancers, VMs, etc.

Server Infrastructure Standardization

Different servers come with different hardware and software, which often makes manual maintenance difficult and time-consuming. The server-agent architecture of many configuration management tools, such as Puppet and Chef, standardizes server deployment and maintenance.

Using automation software to abstract most server maintenance tasks away from the hardware helps streamline management and improve efficiency. Furthermore, reusing configuration templates promotes infrastructure consistency across the entire SDLC.
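A minimal sketch of template reuse: one configuration template, rendered with per-environment values, so staging and production differ only in the values that are meant to differ. The nginx-style template and the environment values are hypothetical. Python's string.Template stands in for the templating features that tools such as Ansible, Puppet, and Chef provide.

```python
from string import Template

# One hypothetical template shared by every environment.
NGINX_TEMPLATE = Template(
    "server {\n"
    "    listen $port;\n"
    "    server_name $hostname;\n"
    "    root /var/www/$app;\n"
    "}\n"
)

ENVIRONMENTS = {
    "staging": {"port": 8080, "hostname": "staging.example.com", "app": "shop"},
    "production": {"port": 80, "hostname": "www.example.com", "app": "shop"},
}


def render(env: str) -> str:
    # substitute() raises KeyError when a variable is missing, which catches
    # incomplete environment definitions before anything is deployed.
    return NGINX_TEMPLATE.substitute(ENVIRONMENTS[env])


print(render("staging"))
```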

Speed and Ease of Use

Most server automation tools are designed to be simple and efficient. They enable engineers to further speed up the deployment process by quickly creating test environments, replicating them into production, and rolling back the changes in case of an error.
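Rolling back is straightforward when every applied configuration is kept, as in the sketch below. The Deployment class and the image tags are hypothetical; real tools implement the same idea in their own ways, for example Kubernetes keeps a rollout history that kubectl rollout undo can revert to.

```python
class Deployment:
    """Keep every applied configuration so a bad change can be rolled back."""

    def __init__(self) -> None:
        self.history = []

    def apply(self, config: dict) -> None:
        self.history.append(dict(config))  # store a copy of each version

    @property
    def current(self) -> dict:
        return self.history[-1] if self.history else {}

    def rollback(self) -> dict:
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.current


d = Deployment()
d.apply({"image": "app:1.0"})
d.apply({"image": "app:1.1"})  # suppose this release misbehaves
print(d.rollback())  # {'image': 'app:1.0'}
```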

This property of server automation strongly aligns with the DevOps philosophy and makes it an integral part of every efficient pipeline.

Security and Reliability

Server automation significantly lowers the chance of human error during server provisioning and maintenance. Once the infrastructure code is written and tested, it can be deployed as often as necessary with a much lower risk of misconfiguration.

The automated process also reduces the number of people who need access to sensitive parts of the infrastructure, which shrinks the attack surface and makes the system more secure.

Conclusion

After reading this guide, you should better understand server automation and its role in the DevOps framework. The article presented some of the most popular tools that enable or facilitate server automation and listed its benefits.