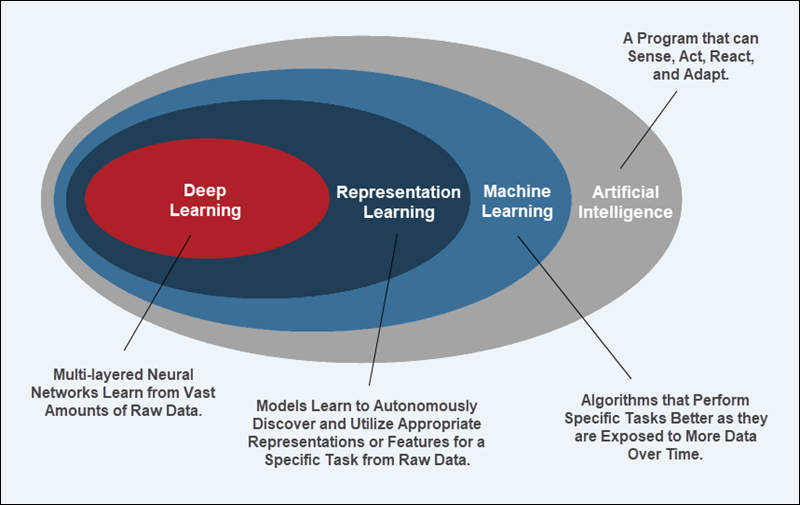

For many SMEs, once elusive concepts like AI, machine learning, and big data are now tangible business opportunities. To support this AI-driven transformation, organizations need significantly more processing power.

This is why Intel has equipped its latest Xeon Scalable processors with the AMX extension, designed to accelerate AI and other matrix-heavy workloads.

Learn more about AMX and use it to optimize your AI development pipelines.

What Is Intel AMX (Advanced Matrix Extensions)?

Intel Advanced Matrix Extensions (AMX) is an instruction set extension integrated into 4th Gen (Sapphire Rapids), 5th Gen (Emerald Rapids), and now 6th Gen (Granite Rapids) Intel Xeon Scalable CPUs. The AMX extension is designed to accelerate matrix-oriented operations, which are primarily used in training deep neural networks (DNNs) and AI inference.

By introducing new data types and instructions, AMX aims to streamline matrix multiplication and accumulation operations and reduce power consumption.

Deep learning frameworks, like PyTorch and TensorFlow, can leverage AMX data types and instructions. This enables developers to run and optimize AI inference routines without needing to handle hardware specifics.
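To check whether a framework is actually using AMX, you can run a PyTorch or TensorFlow script with the environment variable `ONEDNN_VERBOSE=1`. oneDNN, the Intel library these frameworks use for many CPU kernels, then prints one line per executed primitive, including the implementation it selected. The sketch below is a rough heuristic that counts executed primitives whose log fields mention AMX. It assumes lines shaped like the illustrative samples (the exact field layout differs between oneDNN versions), and `count_amx_primitives` is a hypothetical helper, not part of any framework.

```python
def count_amx_primitives(log_lines):
    """Return (amx_count, total_count) for oneDNN verbose 'exec' lines.

    Heuristic: a line counts as AMX if any comma-separated field
    contains "amx" (for example an implementation name such as
    "brg:avx512_core_amx"). Field layout differs between oneDNN versions.
    """
    amx = total = 0
    for line in log_lines:
        if not line.startswith("onednn_verbose"):
            continue  # skip the framework's own output
        fields = line.strip().split(",")
        if "exec" not in fields:
            continue  # skip info/create lines; only executed primitives count
        total += 1
        if any("amx" in field for field in fields):
            amx += 1
    return amx, total


# Illustrative, abbreviated lines (not captured from a real run).
SAMPLE_LOG = [
    "onednn_verbose,info,cpu,isa:Intel AVX-512 with Intel AMX",
    "onednn_verbose,primitive,exec,cpu,matmul,brg:avx512_core_amx,undef,src_bf16",
    "onednn_verbose,primitive,exec,cpu,eltwise,jit:avx512_core,forward_inference",
    "epoch 1 loss 0.42",
]

print(count_amx_primitives(SAMPLE_LOG))  # (1, 2)
```

In practice, you would redirect the script's output to a file and pass its lines to the function. A low AMX share usually means the model is still running in FP32 rather than in BF16, FP16, or INT8.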

AMX Architecture

AMX stores matrix data in two-dimensional tile registers, so a single instruction can operate on an entire block of a matrix instead of one vector at a time. This parallelism is the source of AMX's performance gains in AI and machine learning tasks.

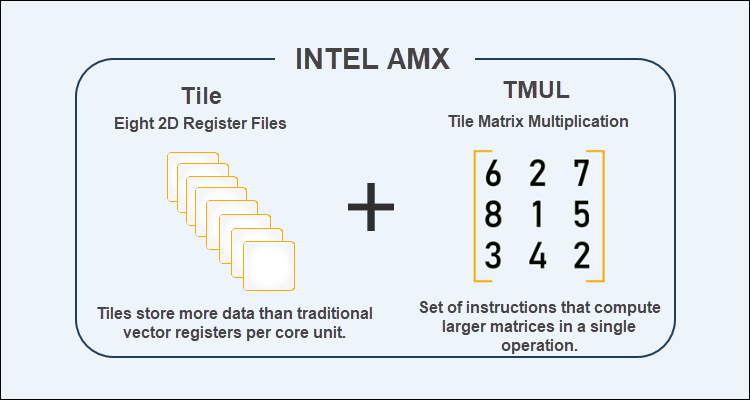

The most important components of Intel AMX architecture include:

1. Tile-Based Architecture: AMX adds eight two-dimensional (2D) registers called tiles (TMM0 through TMM7). Each tile holds up to 1 KB of data, arranged as up to 16 rows of 64 bytes. Tiles keep data near the execution units, which improves data reuse and reduces memory bandwidth requirements for matrix operations.

2. Tile Matrix Multiplication (TMUL): TMUL is the accelerator engine that operates on tiles. It executes matrix multiply-accumulate instructions, such as TDPBF16PS for BF16 and TDPBSSD for INT8, which power the dense linear algebra workloads essential for AI training and inference.
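To make the tile sizes concrete, the following sketch computes the largest shape a single tile can hold for each data type. It also counts the multiply-accumulates (MACs) that one full-size tile dot-product instruction performs, such as TDPBF16PS for BF16 or TDPBSSD for INT8. It assumes the palette-1 limits Intel documents (8 tiles, each up to 16 rows of 64 bytes) and 32-bit accumulators. The helper names are illustrative.

```python
# Palette-1 limits for AMX tile registers.
NUM_TILES = 8
MAX_ROWS = 16
MAX_BYTES_PER_ROW = 64  # 16 rows x 64 bytes = 1,024 bytes per tile

ELEMENT_BYTES = {"int8": 1, "bf16": 2, "fp16": 2, "fp32": 4, "int32": 4}


def tile_shape(dtype):
    """Largest (rows, columns) of dtype elements a single tile can hold."""
    return MAX_ROWS, MAX_BYTES_PER_ROW // ELEMENT_BYTES[dtype]


def macs_per_tile_op(input_dtype):
    """Multiply-accumulates in one full-size tile dot-product instruction.

    Models C (16 x 16 32-bit accumulators) += A (16 x K) @ B (K x 16),
    where K is the number of input elements that fit in one 64-byte row.
    """
    m, k = tile_shape(input_dtype)
    _, n = tile_shape("fp32")  # accumulator tile: 16 columns of 32-bit values
    return m * n * k


print("Total tile storage:", NUM_TILES * MAX_ROWS * MAX_BYTES_PER_ROW, "bytes")
for dtype in ("int8", "bf16"):
    print(dtype, "tile:", tile_shape(dtype), "MACs per instruction:", macs_per_tile_op(dtype))
```

Because INT8 values are half the size of BF16 values, each 64-byte row holds twice as many of them. This doubles the work per instruction, which matches the 2:1 ratio between the INT8 and BF16 AMX rows in the operations-per-cycle table.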

The tile-based architecture allows Intel AMX to store more data in each core and compute larger matrices in a single operation.
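The loop structure behind this can be modeled in plain Python. The sketch below is a conceptual model, not AMX code. It computes a large matrix product one 16 x 16 output block at a time and accumulates over K in chunks of 32, which mirrors how a BF16 tile dot product keeps an accumulator tile in registers while streaming input tiles. The block sizes are assumptions chosen to match the BF16 tile shape. Real AMX kernels, for example in oneDNN, also handle data layout, tile configuration, and prefetching.

```python
import random


def tiled_matmul(a, b, tile_m=16, tile_n=16, tile_k=32):
    """Multiply a (M x K) by b (K x N) one tile-sized block at a time."""
    m, k, n = len(a), len(a[0]), len(b[0])
    c = [[0] * n for _ in range(m)]
    for i0 in range(0, m, tile_m):
        for j0 in range(0, n, tile_n):
            # This output block plays the role of a resident accumulator tile
            # while matching blocks of A and B are streamed along K.
            for p0 in range(0, k, tile_k):
                for i in range(i0, min(i0 + tile_m, m)):
                    for j in range(j0, min(j0 + tile_n, n)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile_k, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


def naive_matmul(a, b):
    """Reference triple-loop product used to check the blocked version."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


random.seed(0)
A = [[random.randint(-3, 3) for _ in range(70)] for _ in range(40)]
B = [[random.randint(-3, 3) for _ in range(20)] for _ in range(70)]
print(tiled_matmul(A, B) == naive_matmul(A, B))  # True
```

The matrix dimensions deliberately are not multiples of the block sizes, so the `min(...)` bounds handle partial edge blocks. AMX handles these by configuring smaller tile shapes.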

AMX Data Types

The FP32 floating-point format is used in AI workloads for its precision. It is ideal for higher accuracy but requires more computing resources and longer computation times, which may not be practical for all applications.

AMX supports the lower-precision INT8, BF16, and FP16 data types:

- INT8 for Inferencing. AMX provides enhanced support for INT8 operations, which are critical for inferencing workloads. INT8 trades precision for throughput: because each value occupies only 8 bits, the hardware can process more operations in each compute cycle. It requires fewer computing resources, which makes it ideal for real-time applications and matrix multiplication tasks where speed and efficiency take precedence.

- Bfloat16 (BF16) Support. AMX provides native support for the BF16 floating-point format. BF16 occupies 16 bits of memory and keeps the same 8-bit exponent as FP32, so it covers the same numeric range with fewer mantissa bits. It offers sufficient accuracy for most AI training workloads and higher accuracy than INT8 for inferencing. It is particularly useful in ML because models can often be trained to nearly the same accuracy as with 32-bit floating-point numbers, at a fraction of the computational cost.

- FP16 (16-bit IEEE) for training and inference. 6th Gen Xeon (Granite Rapids) processors support the FP16 data type, which fully complies with the IEEE 754 half-precision standard. Since FP16 uses half the memory of FP32, more values can fit into the processor's cache, resulting in faster computations. Many AI frameworks already use FP16 on GPUs. This enables users to deploy FP16-trained models from GPU environments directly on CPUs, without needing to convert data types or retrain the model.
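These trade-offs become visible when you round the same values into each format. The sketch below uses only the Python standard library. It rounds to FP16 through `struct`'s `"e"` format and to BF16 by keeping the upper 16 bits of an FP32 value with round-to-nearest-even. For INT8, it applies simple symmetric per-tensor quantization. The helper names are illustrative, NaN and infinity handling is omitted, and production frameworks use more elaborate quantization schemes, such as per-channel scales and calibration.

```python
import struct


def to_fp32(x):
    """Round a Python float (FP64) to FP32."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_fp16(x):
    """Round to IEEE 754 half precision; raises OverflowError above 65504."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bf16(x):
    """Round to BF16: keep the upper 16 bits of FP32, round-to-nearest-even.

    NaN and infinity handling is omitted in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    bits = (((bits + rounding_bias) >> 16) << 16) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def quantize_int8(values):
    """Symmetric per-tensor quantization: q = round(x / scale), |q| <= 127."""
    scale = max(abs(v) for v in values) / 127 or 1.0
    return [max(-127, min(127, round(v / scale))) for v in values], scale


def dequantize_int8(q, scale):
    return [v * scale for v in q]


for value in (3.14159265, 70000.0):
    try:
        fp16 = to_fp16(value)
    except OverflowError:
        fp16 = "overflow"
    print(value, "FP32:", to_fp32(value), "BF16:", to_bf16(value), "FP16:", fp16)

weights = [0.5, -1.0, 0.25, 0.003]
q, scale = quantize_int8(weights)
errors = [abs(a - b) for a, b in zip(weights, dequantize_int8(q, scale))]
print("INT8:", q, "max error:", max(errors))
```

For example, 70000.0 becomes 70144.0 in BF16, because BF16 has FP32's exponent range but a coarser mantissa. Packing the same value as FP16 raises `OverflowError` because FP16's largest finite value is 65504. FP16 instead keeps more mantissa bits for values inside its narrower range.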

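Note: Open-source frameworks such as TensorFlow and PyTorch dispatch INT8, BF16, and FP16 operations to AMX automatically through Intel's oneDNN library when the hardware supports it. The model itself still needs to run in one of these data types, for example after quantization or with mixed-precision autocasting.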

The tiled architecture and native support for the BF16 and FP16 data types give Intel CPUs with integrated AMX acceleration a significant performance advantage over their predecessors.

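The table shows peak operations per cycle, per core, for each data type on 3rd Gen Intel Xeon processors (Intel AVX-512 VNNI) and on AMX-equipped Intel Xeon processors. AMX does not perform FP32 arithmetic itself, so the AMX values in the FP32 row reflect FP32 workloads converted to BF16.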

| Operations per Cycle (Data Type) | 3rd Gen Intel Xeon (Intel AVX-512 VNNI) | 4th Gen Intel Xeon (AMX) | 5th Gen Intel Xeon (AMX) | 6th Gen Intel Xeon (AMX+FP16) | Speed Increase |
|---|---|---|---|---|---|
| FP32 | 64 | 1024 | 1024 | 1024 | Up to 16x faster |
| INT8 | 256 | 2048 | 2048 | 2048 | Up to 8x faster |
| BF16 | N/A | 1024 | 1024 | 1024 | Up to 12x faster (when switching from FP32 on 3rd Gen to BF16 on AMX) |
| FP16 | N/A | N/A | N/A | 1024 | Only available in 6th Gen Xeon (Granite Rapids) processors. |

Raw operations per cycle for AMX remain constant from Gen 4 through Gen 6. However, 5th and 6th Gen Intel Xeon Scalable processors support faster DDR5 memory and higher memory bandwidth than 4th Gen, and they deliver more consistent performance during sustained workloads.
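The speed-increase column follows directly from the ratios of the operations-per-cycle figures. The same figures also give a rough theoretical peak for a whole socket. The sketch below assumes the table values are per core and uses a hypothetical 56-core socket at an assumed 2.0 GHz clock. Real workloads rarely sustain peak throughput on every core, so treat the result as an upper bound, not a benchmark.

```python
# Peak operations per cycle per core, taken from the table above.
OPS_PER_CYCLE = {
    "avx512_fp32": 64,
    "avx512_vnni_int8": 256,
    "amx_bf16": 1024,
    "amx_int8": 2048,
}


def speedup(new, old):
    """Ratio of peak ops/cycle between two instruction-set/data-type pairs."""
    return OPS_PER_CYCLE[new] / OPS_PER_CYCLE[old]


def peak_tera_ops(ops_per_cycle, cores, ghz):
    """Theoretical peak in tera-ops/s if every core sustains `ghz` GHz."""
    return ops_per_cycle * cores * ghz / 1000


print(speedup("amx_bf16", "avx512_fp32"))  # 16.0
print(speedup("amx_int8", "avx512_vnni_int8"))  # 8.0
# Hypothetical 56-core socket at an assumed 2.0 GHz AMX clock.
print(round(peak_tera_ops(OPS_PER_CYCLE["amx_int8"], 56, 2.0), 1))  # 229.4
```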

AMX Performance

Relative Throughput

Historically, the number of transistors on a chip has doubled roughly every two years (Moore's law), and computing power has grown along with it.

The following table shows that AMX-driven throughput gains far outpace core-count growth across Xeon processor generations. Although the core count has increased only about 2.3 times since the first Intel Xeon Scalable processor (from 28 to 64 cores), relative throughput has increased roughly 15 times.

Performance test parameters include:

- Reference Point: 1st Gen Intel Xeon Scalable processor (Intel AVX-512 Instruction Set).

- Deep Learning Model: ResNet-50 v1.5 (Batch Inferencing).

- Framework: TensorFlow.

- Data Type: INT8.

| Processor Generation | Instruction Set Extension | Cores | Relative Throughput |
|---|---|---|---|
| 1st Gen Intel Xeon Scalable CPU | Intel AVX-512 | 28 cores | 1x (baseline) |
| 2nd Gen Intel Xeon Scalable CPU | Intel DL Boost | 28 cores | 2x |
| 3rd Gen Intel Xeon Scalable CPU | Intel DL Boost | 40 cores | 4x |
| 4th Gen Intel Xeon Scalable CPU | Intel AMX | 56 cores | 11x |
| 5th Gen Intel Xeon Scalable CPU | Intel AMX | 56-64 cores | ~13x |
| 6th Gen Intel Xeon Scalable CPU | Intel AMX | 64 cores | ~15x |

Architectural enhancements, such as improved AMX tile utilization and higher sustained base frequencies, are the primary reasons for the relative throughput gains in 5th Gen (Emerald Rapids) and 6th Gen (Granite Rapids) Xeon processors.
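Dividing relative throughput by the growth in core count separates per-core gains from the effect of adding cores. The back-of-the-envelope sketch below uses the figures from the relative throughput table. For 5th Gen, it assumes the 64-core upper bound of the 56-64 range, and it treats the approximate (~) values as exact.

```python
# (generation, cores, relative throughput) from the table above.
# For 5th Gen, the upper end of the 56-64 core range is assumed.
GENERATIONS = [
    ("1st Gen", 28, 1.0),
    ("2nd Gen", 28, 2.0),
    ("3rd Gen", 40, 4.0),
    ("4th Gen", 56, 11.0),
    ("5th Gen", 64, 13.0),
    ("6th Gen", 64, 15.0),
]

BASE_CORES = 28  # 1st Gen baseline core count


def per_core_gain(cores, relative_throughput):
    """Throughput gain left after normalizing for core-count growth."""
    return relative_throughput / (cores / BASE_CORES)


for name, cores, rel in GENERATIONS:
    print(f"{name}: {cores / BASE_CORES:.2f}x cores, {per_core_gain(cores, rel):.2f}x per core")
```

By this measure, each 4th Gen core delivers about 5.5 times the baseline per-core throughput, while 3rd Gen delivers about 2.8 times. The jump between these two generations coincides with the move from Intel DL Boost to AMX.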

AI Training Performance Boost

This table illustrates the acceleration in PyTorch training performance across successive generations of Intel Xeon processors. The baseline is the 3rd Gen Intel Xeon Platinum 8380 processor, which uses FP32 precision on AVX-512. The 4th Gen Intel Xeon Platinum 8480+ processor introduces Intel AMX with BF16, while 6th Gen (Granite Rapids) further accelerates AI training throughput by adding support for the FP16 data type.

Performance test details are:

- Reference Point: 3rd Gen Intel Xeon Platinum 8380 processor (FP32).

- Framework Used: PyTorch.

- Data Types: FP32 (Gen 3), BF16 (Gen 4 & 5), FP16 (Gen 6).

| Task/Model | Category | Gen 4 (AMX+BF16) Performance Increase | Gen 5 (AMX+DDR5+BF16) Performance Increase | Gen 6 (AMX+DDR5+FP16) Performance Increase |
|---|---|---|---|---|
| ResNet-50 v1.5 | Image classification | 3x | 3.3x | 6x |
| BERT-large | Natural Language Processing (NLP) | 4x | 4.8x | 9.6x |
| DLRM | Recommendation system | 4x | 4.6x | 8.8x |
| Mask R-CNN | Image segmentation | 4.5x | 5.2x | 9.4x |
| SSD-ResNet-34 | Object detection | 5.4x | 6.2x | 11x |
| RNN-T | Speech recognition | 10.3x | 12.4x | 24x |
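Because speedups are ratios, the geometric mean is the conventional way to condense a column like these into a single figure. The sketch below applies it to the training table. It is derived arithmetic on the published numbers, not an additional benchmark result, and the helper names are illustrative.

```python
import math

# Training speedups over the 3rd Gen Xeon Platinum 8380 (FP32), from the table above.
# Tuple order: (Gen 4, Gen 5, Gen 6).
TRAINING_SPEEDUPS = {
    "ResNet-50 v1.5": (3.0, 3.3, 6.0),
    "BERT-large": (4.0, 4.8, 9.6),
    "DLRM": (4.0, 4.6, 8.8),
    "Mask R-CNN": (4.5, 5.2, 9.4),
    "SSD-ResNet-34": (5.4, 6.2, 11.0),
    "RNN-T": (10.3, 12.4, 24.0),
}


def geometric_mean(values):
    """nth root of the product, computed in log space for stability."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


def summarize(speedups):
    """Geometric mean of each generation's column."""
    return [round(geometric_mean(column), 2) for column in zip(*speedups.values())]


print(dict(zip(("Gen 4", "Gen 5", "Gen 6"), summarize(TRAINING_SPEEDUPS))))
```

The geometric mean keeps a single large ratio, such as RNN-T's 24x, from dominating the summary the way it would in an arithmetic mean.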

Real-Time Inference Performance Boost

As in the previous section, the 3rd Gen Intel Xeon Platinum 8380 processor (FP32) is used as a baseline to illustrate real-time inference performance across AMX-powered Intel Xeon processors. The 4th and 5th Gen Xeon processors introduce support for BF16, while the 6th Gen (Granite Rapids) adds FP16 support.

These lower-precision data types increase throughput with little or no loss of accuracy for most models. Combined with architectural improvements such as DDR5 memory, they enable faster real-time inference at a lower computational cost.

Performance test parameters are:

- Reference Point: 3rd Gen Intel Xeon Platinum 8380 processor (FP32).

- Framework: PyTorch.

- Data Types: FP32 (Gen 3), BF16 (Gen 4 & 5), FP16 (Gen 6).

| Task/Model | Category | Gen 4 (AMX+BF16) Performance Increase | Gen 5 (AMX+DDR5+BF16) Performance Increase | Gen 6 (AMX+DDR5+FP16) Performance Increase |
|---|---|---|---|---|
| ResNeXt101 32x16d | Image classification | 5.70x | 6.6x | 12.5x |
| ResNet-50 v1.5 | Image classification | 6.19x | 7.1x | 13.5x |
| BERT-large | Natural Language Processing (NLP) | 6.25x | 7.5x | 15x |
| Mask R-CNN | Image segmentation | 6.24x | 7.2x | 13x |
| RNN-T | Speech recognition | 8.61x | 10.3x | 20x |
| SSD-ResNet-34 | Object detection | 10.01x | 11.5x | 23x |
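Real-time inference is usually judged by per-request latency rather than batch throughput. When you compare servers, record tail latency as well as the median. The sketch below is a generic, framework-agnostic harness. `infer` stands for any hypothetical callable that runs one request through your model (for example, a PyTorch model in BF16 on an AMX-equipped server), and the warm-up count is an arbitrary choice.

```python
import statistics
import time


def measure_latency(infer, inputs, warmup=10):
    """Time one call per input; return median and 99th-percentile latency (ms)."""
    for x in inputs[:warmup]:
        infer(x)  # warm-up calls are not timed (caches, lazy initialization)
    samples = []
    for x in inputs:
        start = time.perf_counter()
        infer(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    cut_points = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {"p50_ms": statistics.median(samples), "p99_ms": cut_points[98]}


# Stand-in workload; replace with a real single-request model call.
def dummy_infer(x):
    return sum(i * x for i in range(10_000))


print(measure_latency(dummy_infer, list(range(200))))
```

Run the same harness with the same inputs on each instance type and data type you are comparing. Only the relative differences between runs are meaningful.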

Note: Check out our list of the best AI processors.

Intel AMX on phoenixNAP BMC Platform

Owning and maintaining AI infrastructure is not a viable option for many companies due to the high costs and lack of flexibility.

Transitioning to an AI-oriented environment with OpEx-based access to infrastructure has substantial financial and strategic benefits:

- Costs shift from capital expenditures to ongoing operational expenses.

- Businesses can scale their services seamlessly to meet their immediate needs.

- Expenses are more predictable, which is significant for cash flow management.

Note: AI-focused cloud environments that combine edge computing, multi-cloud orchestration, and OpEx-modeled access are increasingly being described as neocloud. Learn more about this emerging industry buzzword in our What Is Neocloud? article.

phoenixNAP's Bare Metal Cloud (BMC) is an OpEx-modeled platform that allows quick provisioning and scaling of dedicated servers via API, CLI, or Web UI.

BMC offers pre-configured server instances powered by Intel Xeon Scalable CPUs with built-in AMX accelerators. By combining the capabilities of Intel AMX and the BMC platform, users can:

- Deploy enterprise-ready environments optimized for processing large datasets in minutes.

- Leverage tools like Terraform and Ansible to automate deployments and scale AI infrastructure as needed.

- Accelerate matrix operations to speed up AI applications.

- Reduce time-to-insight with one-click access to CPUs and workload acceleration engines.
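After you provision a server, you can confirm that the CPU and kernel expose AMX by looking for the `amx_*` flags that Linux reports in `/proc/cpuinfo` (`lscpu` shows the same list). The sketch below is a minimal, Linux-only check, and `amx_flags` is an illustrative helper. Reported flags also depend on the kernel version, so older kernels may not list every flag (`amx_fp16` in particular) even when the hardware supports it.

```python
# Linux /proc/cpuinfo flag names for AMX features.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8", "amx_fp16"}


def amx_flags(cpuinfo_text):
    """Return the AMX-related flags found on the first 'flags' line."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            return AMX_FLAGS & set(value.split())
    return set()


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            found = amx_flags(f.read())
        print(sorted(found) if found else "No AMX flags found")
    except FileNotFoundError:
        print("/proc/cpuinfo not available (not running on Linux?)")
```

An empty result on a Sapphire Rapids or newer server usually points to an older kernel or a virtualization layer that hides the feature, rather than to missing hardware support.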

The latest generations of Intel Xeon Scalable processors available on Bare Metal Cloud deliver immediate value for the following use cases:

| Application Category | Use Case |
|---|---|
| Artificial Intelligence | Recommendation systems. Natural language processing. Image recognition. Object detection. Machine learning applications. Video analytics. |
| Data Analytics | Relational database management systems. In-memory databases. Big data analytics. Data warehousing. |
| Networking | Hardware cryptography. Packet processing. Content delivery network. Security gateway. |
| Storage Deployment | Distributed and virtual storage. |
| High-Performance Computing (HPC) | Computational fluid dynamics. Molecular dynamics. Weather simulation. Heavy-duty AI training and inference. FinTech. Drug discovery. |
| Data Security | Confidential computing. Regulatory or compliance workloads. Federated learning systems. |
| Ecommerce | Reduce transaction time. Manage peak demands. UX and behavior analysis. Automated customer support. |

Conclusion

AI-driven solutions will become the norm for most end-users. As the cost of performing matrix computations on large datasets continues to rise, companies must explore solutions that will keep them competitive without breaking the bank.

Use the phoenixNAP BMC platform and Intel AMX to deploy and manage a flexible and scalable AI-focused infrastructure. AMX tile shapes are configurable, so this combination supports a range of matrix sizes today and can adapt as newer Xeon generations add data types, as 6th Gen did with FP16.