Memory management is a vital and complex task in operating systems. It allows multiple processes to run at the same time without interfering with each other.

Understanding how memory management in operating systems works is crucial for system stability and performance.

This article explains key concepts of memory management in operating systems.

What Is Memory Management?

The main components in memory management are a processor and a memory unit. The efficiency of a system depends on how these two key components interact.

Efficient memory management depends on two factors:

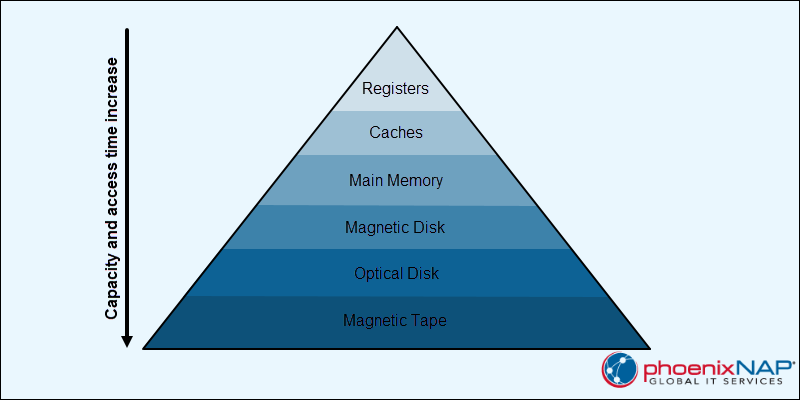

1. Memory unit organization. Several types of memory make up the memory unit. A computer's memory hierarchy and organization affect data access speeds and storage size. Faster, smaller caches are closer to the CPU, while slower, larger memory is further away.

2. Memory access. The CPU regularly accesses data stored in memory. Efficient memory access influences how fast a CPU completes tasks and becomes available for new tasks. Memory access involves working with addresses and defining access rules across memory levels.

Memory management balances trade-offs between speed, size, and power use in a computer. Primary memory allows fast access but no permanent storage. On the other hand, secondary memory is slower but offers permanent storage.
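The speed side of this trade-off can be estimated with the average memory access time (AMAT): a level's hit time plus its miss rate multiplied by the cost of falling through to the next, slower level. The Python sketch below applies that formula level by level. The latencies and miss rates in the example are assumptions chosen for illustration, not measurements of any real system.

```python
def average_access_time(levels, memory_time):
    """Average memory access time for a cache hierarchy.

    levels: list of (hit_time_ns, miss_rate) pairs, ordered from the fastest
            level (closest to the CPU) to the slowest.
    memory_time: main memory access time in ns, paid when every cache level misses.
    """
    time = memory_time
    # Work outward from main memory: each level costs its own hit time plus
    # its miss rate times the average cost of the level behind it.
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time


# Assumed numbers: L1 cache 1 ns with 10% misses, L2 cache 10 ns with 20% misses, RAM 100 ns.
print(average_access_time([(1, 0.10), (10, 0.20)], memory_time=100))
```

With these assumed numbers, the average works out to roughly 4 ns, much closer to the 1 ns cache than to the 100 ns RAM, which is why keeping frequently used data near the CPU pays off.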

Why Is Memory Management Necessary?

Main memory is an essential part of a computer system. It holds the data and instructions the CPU needs to run processes. However, frequent reads from and writes to slower secondary storage slow down the system.

Therefore, to improve CPU usage and computer speed, several processes reside in memory simultaneously. Memory management is necessary to divide memory between processes in the most efficient way possible.

As a result, memory management affects the following factors:

- Resource usage. Memory management is a crucial aspect of computer resource allocation. RAM is the central component, and processes use memory to run. An operating system decides how to divide memory between processes. Proper allocation ensures that every process receives the memory it needs to run in parallel.

- Performance optimization. Memory management mechanisms significantly impact system speed and stability. They aim to reduce slow memory and disk accesses, which stall the CPU while it waits for data.

- Security. Memory management protects data and processes. Isolation ensures that each process uses only the memory assigned to it. Memory management also enforces access permissions to prevent access to restricted memory regions.

Operating systems use memory addresses to track allocated memory across different processes.

Memory Addresses

Memory addresses are vital to memory management in operating systems. A memory address is a unique identifier for a specific memory or storage location. Addresses help find and access information stored in memory.

Memory management tracks every memory location, maps addresses, and manages the memory address space. Different contexts require different ways to refer to memory address locations.

The two main memory address types are explained in the sections below. Each type has a different role in memory management and serves a different purpose.

Physical Addresses

A physical address is a numerical identifier pointing to a physical memory location. It represents the actual location of data in hardware, which makes physical addresses crucial for low-level memory management.

Hardware components like the CPU or memory controller use physical addresses. The addresses are unique and fixed, allowing hardware to quickly locate any data. Physical addresses are not available to user programs.

Virtual Addresses

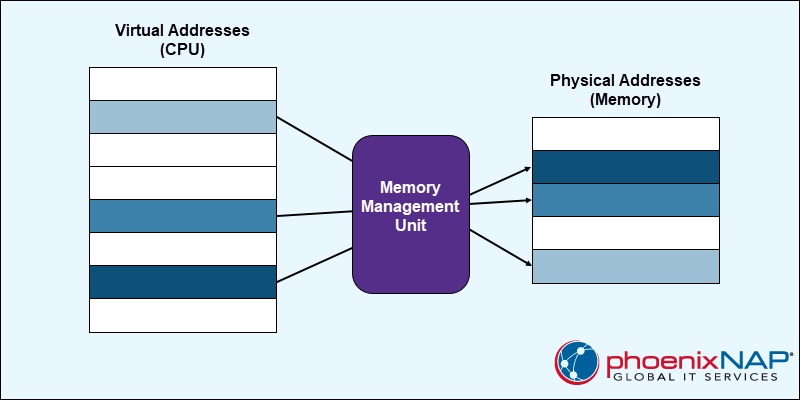

A virtual address is a program-generated address that represents an abstraction of physical memory. Each process sees its own virtual address space as if it were dedicated memory.

Virtual addresses do not directly match physical memory locations. Programs generate and use virtual addresses without knowing the physical address space. The memory management unit (MMU) maps virtual addresses to physical addresses and ensures valid memory access.

The virtual address space is split into segments or pages for efficient memory use.

Static vs. Dynamic Loading

Static and dynamic loading are two ways to allocate memory for executable programs. The two approaches differ in memory usage and resource consumption. The choice between them depends on available memory, performance requirements, and resource usage.

- Static loading allocates memory and resolves addresses at program launch. A program loads all necessary resources into memory in advance, which makes resource usage predictable but inefficient. System utilities and applications use static loading to simplify program distribution. Executables are typically larger because they include everything the program needs. Real-time operating systems, bootloaders, and legacy systems use static loading.

- Dynamic loading allocates memory and resolves addresses during program execution, as the program requests resources. Dynamic loading reduces memory consumption, which lets more processes share memory at the same time. Executables are smaller, but the approach adds run-time overhead and more room for errors, such as memory leaks and failures to load resources at run time. Modern operating systems (Linux, macOS, Windows), mobile operating systems (Android, iOS), and web browsers use dynamic loading.
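The memory difference between the two approaches can be sketched with a toy model in which a program either loads every module at launch or loads each module on first use. The module names, their sizes, and the `Program` class are hypothetical; real loaders work with pages and shared libraries rather than whole-module sizes.

```python
# Hypothetical module sizes in KB; names and numbers are illustrative only.
MODULE_SIZES_KB = {"core": 400, "spellcheck": 250, "pdf_export": 900, "plugins": 600}


class Program:
    """Toy model of a program whose modules are loaded statically or dynamically."""

    def __init__(self, modules, static):
        self.modules = modules
        self.loaded = {}  # module name -> KB currently held in memory
        if static:
            # Static loading: every module is loaded at launch, used or not.
            for name in modules:
                self._load(name)

    def _load(self, name):
        self.loaded[name] = self.modules[name]

    def use(self, name):
        # Dynamic loading: a module is loaded the first time it is needed.
        if name not in self.loaded:
            self._load(name)

    def memory_kb(self):
        return sum(self.loaded.values())


static_app = Program(MODULE_SIZES_KB, static=True)
dynamic_app = Program(MODULE_SIZES_KB, static=False)
for app in (static_app, dynamic_app):
    app.use("core")
    app.use("spellcheck")
print(static_app.memory_kb(), dynamic_app.memory_kb())  # 2150 650
```

Both programs do the same work, but the dynamically loaded one never pays for the modules it does not use.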

Static vs. Dynamic Linking

Static and dynamic linking are two different ways to handle libraries and dependencies for programs. The memory management approaches are similar to static and dynamic loading:

- Static linking copies libraries and dependencies into the executable at build time, so they load into memory together with the program at launch. Programs are self-contained and do not depend on external libraries at run time.

- Dynamic linking loads libraries and dependencies when the program starts or when it first needs them. Programs locate external shared libraries on the system at run time instead of carrying their own copies, which also lets several processes share one copy of a library in memory.
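Python's standard `ctypes` module offers a quick way to watch run-time linking at work: on POSIX systems it asks the system's dynamic loader (`dlopen`) to load a shared library while the program is running and looks up functions by name. The sketch assumes a POSIX system (Linux or macOS) and uses the C library's `abs` function only as an example; the helper name `load_c_library` is hypothetical.

```python
import ctypes
import ctypes.util


def load_c_library():
    """Locate and load the C standard library at run time (POSIX-only sketch)."""
    # find_library searches the system's library paths while the program runs,
    # e.g. returning "libc.so.6" on glibc-based Linux. It may return None.
    name = ctypes.util.find_library("c")
    # With name=None, CDLL falls back to the symbols already loaded into this
    # process, which include the C library on POSIX systems.
    return ctypes.CDLL(name)


libc = load_c_library()
# The address of abs() is resolved only now, by name, after the program started.
print(libc.abs(-42))  # 42
```

The executable carries no copy of `abs`; the loader finds it in the shared C library that is already mapped into memory for other processes.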

Static loading and linking typically unify into a memory management approach where all program resources are predetermined. Likewise, dynamic loading and linking create a strategy in which programs allocate and acquire resources as needed.

Combining different loading and linking strategies is possible to a certain extent. The mixed approach is complex to manage but also brings the benefits of both methods.

Swapping

Swapping is a memory management mechanism that operating systems use to free up RAM. The mechanism moves inactive processes or data between RAM and secondary storage (such as HDD or SSD).

Swapping works together with virtual memory to overcome RAM size limits, making it a crucial memory management technique in operating systems. The technique reserves part of a computer's secondary storage as swap space, either as a dedicated partition or as a swap file.

With swap space, the total memory in use can exceed the size of RAM. Memory is divided into fixed-size blocks called pages, and the operating system tracks which pages are in RAM and which are swapped out. When a process accesses a swapped-out page, a page fault occurs, and the OS loads the page back into RAM.

Excessive swapping degrades performance because secondary storage is much slower than RAM. Page replacement strategies and tuning parameters, such as the swappiness value on Linux, aim to minimize page faults while keeping frequently used data in RAM.
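How often page faults occur depends on which pages the OS chooses to swap out. The sketch below counts page faults for least recently used (LRU) replacement, one common policy that this article does not prescribe; the policy, the function name, and the page reference string are assumptions for illustration.

```python
from collections import OrderedDict


def count_page_faults_lru(references, num_frames):
    """Count page faults for a page reference string under LRU replacement."""
    frames = OrderedDict()  # pages in RAM, ordered from least to most recently used
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)  # hit: mark the page as most recently used
            continue
        faults += 1  # page fault: the page must be brought in from swap
        if len(frames) >= num_frames:
            frames.popitem(last=False)  # swap out the least recently used page
        frames[page] = None
    return faults


refs = [1, 2, 3, 1, 4, 2]
print(count_page_faults_lru(refs, num_frames=3))  # 5
print(count_page_faults_lru(refs, num_frames=4))  # 4
```

Giving the same workload one more frame of RAM removes a fault, a small-scale view of why too little RAM leads to excessive swapping.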

Fragmentation

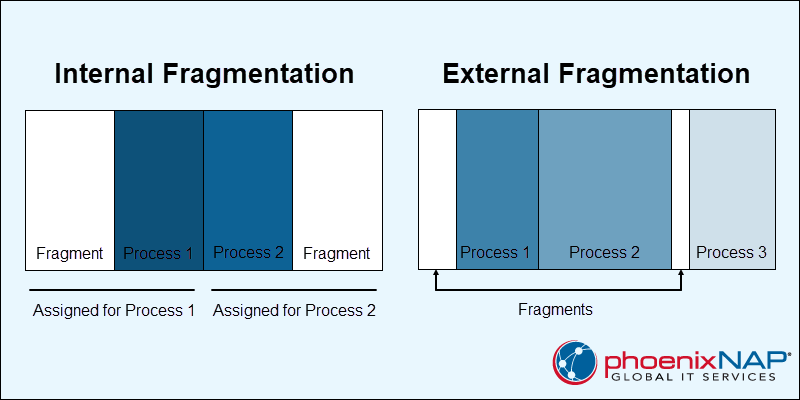

Fragmentation occurs when memory is partitioned. An operating system reserves a portion of main memory for itself and divides the rest into smaller partitions for processes. This partitioning scheme does not use virtual memory.

There are two approaches to partitioning remaining memory: fixed or dynamic partitions. Both approaches result in different fragmentation types:

- Internal. When the remaining memory is divided into fixed, equal-sized partitions, programs larger than the partition size require overlays, while a smaller program occupies an entire partition even though it needs less space. The unused space inside each partition is internal fragmentation.

- External. When the remaining memory is divided dynamically, partitions have variable sizes. A process receives only the memory it requests, and the space is freed when the process completes. Over time, small unused gaps form between allocated partitions, leading to external fragmentation.
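Both fragmentation types can be measured with simple arithmetic. The sketch below assumes sizes in KB and one process per fixed partition; the helper names are hypothetical.

```python
def internal_fragmentation(partition_size, process_sizes):
    """KB wasted inside fixed, equal-sized partitions, one process per partition."""
    # Processes larger than a partition would need overlays and are left out here.
    return sum(partition_size - size for size in process_sizes if size <= partition_size)


def external_fragmentation(free_holes, request):
    """True when enough memory is free in total, but no single hole fits the request."""
    return sum(free_holes) >= request and max(free_holes, default=0) < request


print(internal_fragmentation(100, [60, 90, 25]))   # 125 KB wasted inside partitions
print(external_fragmentation([30, 40, 50], 100))  # True: 120 KB free, largest hole 50 KB
```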

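Internal fragmentation requires design changes. Paging and segmentation reduce it: paging limits the waste to the unused part of a process's last page, and segmentation sizes each segment to fit its contents.

External fragmentation requires the operating system to periodically defragment memory (compaction), moving allocated blocks together so that the scattered free gaps merge into one larger block.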
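One common way to defragment memory is compaction: the OS slides allocated blocks toward one end of memory so the free gaps merge into a single hole. The sketch below models memory as a list of hypothetical `(name, start, size)` blocks. A real OS must also update each moved process's base address or address mappings, which this sketch omits.

```python
def compact(blocks, memory_size):
    """Slide allocated blocks to the lowest addresses, preserving their order.

    blocks: list of (name, start, size) tuples for allocated regions.
    Returns the new layout and the single free hole as (start, size).
    """
    layout = []
    next_start = 0
    for name, _old_start, size in sorted(blocks, key=lambda block: block[1]):
        layout.append((name, next_start, size))  # move the block down to next_start
        next_start += size
    return layout, (next_start, memory_size - next_start)


# Three allocated blocks leave gaps of 10, 20, and 15 units in 100 units of memory.
layout, hole = compact([("A", 0, 20), ("B", 30, 10), ("C", 60, 25)], memory_size=100)
print(layout)  # [('A', 0, 20), ('B', 20, 10), ('C', 30, 25)]
print(hole)    # (55, 45)
```

After compaction, the 45 free units form one hole instead of three small ones, so a 45-unit request that previously failed now fits.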

Memory Management Techniques

Different memory management techniques address the issues that arise from memory organization. One of the main goals is to improve resource usage on a system.

There are two main approaches to memory allocation and management: contiguous and non-contiguous. Both approaches have advantages, and the choice depends on system requirements and hardware architecture.

Contiguous Memory Management Schemes

Contiguous memory management schemes allocate a single continuous memory block to each process. Each process occupies one linear range of addresses, which makes these schemes simple to implement.

There are different ways to implement contiguous memory management schemes. The sections below provide a brief explanation of notable schemes.

Single Contiguous Allocation

Single contiguous allocation is one of the earliest memory management techniques. In this scheme, RAM is divided into two partitions:

- Operating system partition. A partition is reserved for the operating system. The OS loads into this partition during startup.

- User process partition. A second partition for loading a single user process and all related data.

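Early operating systems, such as MS-DOS, used a single contiguous allocation scheme. The system is simple and requires tracking only two partitions. However, the approach is too simple for multitasking: only one user process runs at a time, any memory that process does not use is wasted, and there is no isolation between the process and the operating system.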

Modern operating systems do not use this memory management technique. However, the scheme laid a foundation for advancements in memory management techniques.

Fixed-Partition Allocation

Fixed-partition allocation is a memory management scheme that divides RAM into equal-sized partitions. The partition size is predetermined, requiring knowledge of process sizes in advance.

The operating system tracks and allocates partitions to processes. Each process receives a dedicated partition, providing process isolation and security.

However, because the partitions have a fixed size, the scheme suffers from fragmentation. Internal fragmentation appears when a process is smaller than its partition, since the unused remainder cannot be given to another process. External fragmentation also makes larger processes harder to allocate, because free memory spread across separate partitions cannot be combined for a single process.
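The partition bookkeeping described above can be sketched as a table that records which process owns each partition. This is a minimal model assuming equal-sized partitions measured in KB and first-free placement; the class and method names are hypothetical.

```python
class FixedPartitionAllocator:
    """Equal-sized partitions; each partition holds at most one process."""

    def __init__(self, num_partitions, partition_size):
        self.partition_size = partition_size
        self.owner = [None] * num_partitions  # owner[i] is the PID in partition i, or None

    def allocate(self, pid, size):
        """Return the base address of the partition given to pid, or None."""
        if size > self.partition_size:
            return None  # no partition is large enough for this process
        for index, current in enumerate(self.owner):
            if current is None:  # the first free partition is used
                self.owner[index] = pid
                return index * self.partition_size
        return None  # every partition is in use

    def free(self, pid):
        self.owner = [None if owner == pid else owner for owner in self.owner]


memory = FixedPartitionAllocator(num_partitions=4, partition_size=256)
print(memory.allocate(pid=1, size=100))  # 0
print(memory.allocate(pid=2, size=300))  # None
```

Process 1 gets the partition at address 0, and the 156 KB it does not use stay idle (internal fragmentation). Process 2 cannot be loaded at all, even though three partitions with 768 KB in total are free.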

Buddy Memory Allocation

Buddy memory allocation is a dynamic memory management scheme. This technique divides RAM into variable-sized blocks whose sizes are typically powers of 2 (2KB, 4KB, 8KB, 16KB, etc.).

When a process requests memory, the allocator rounds the request up to the nearest block size and looks for the smallest free block that fits. If no block of that size is free, it splits a larger block in half, repeatedly if needed, until a piece matches the request. When memory is freed, the allocator checks whether the block's buddy (the other half produced by the same split) is also free and, if so, merges the two into a larger block.

The buddy memory allocation system uses a binary tree to track memory block status and to search for free blocks. The scheme strikes a balance between fragmentation and efficient memory allocation. Notable uses include the Linux kernel's physical page allocator and embedded systems.
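The split-and-merge logic can be sketched with one free list per block size instead of an explicit binary tree, an implementation choice assumed here for brevity. Offsets are relative to the start of the managed region, and the class name and `min_block` parameter are hypothetical. Because every block is aligned to its own size, a block's buddy is found by flipping a single bit of its offset (`offset ^ size`).

```python
class BuddyAllocator:
    """Binary buddy allocator over a region of total_size units (a power of two)."""

    def __init__(self, total_size, min_block=16):
        # Assumption: total_size and min_block are powers of two, min_block <= total_size.
        self.total_size = total_size
        self.min_block = min_block
        self.free_lists = {total_size: {0}}  # block size -> offsets of free blocks
        self.allocated = {}                  # offset -> size of the allocated block

    def allocate(self, request):
        size = self.min_block
        while size < request:  # round the request up to a power of two
            size *= 2
        block = size
        while block <= self.total_size and not self.free_lists.get(block):
            block *= 2  # look for the smallest free block that fits
        if block > self.total_size:
            return None  # no free block is large enough
        offset = min(self.free_lists[block])
        self.free_lists[block].remove(offset)
        while block > size:  # split in half until the block matches the request
            block //= 2
            self.free_lists.setdefault(block, set()).add(offset + block)  # upper half stays free
        self.allocated[offset] = size
        return offset

    def free(self, offset):
        size = self.allocated.pop(offset)
        while size < self.total_size:
            buddy = offset ^ size  # the buddy differs only in the bit equal to the size
            if buddy not in self.free_lists.get(size, set()):
                break  # the buddy is in use, so merging stops here
            self.free_lists[size].remove(buddy)
            offset = min(offset, buddy)  # the merged block starts at the lower address
            size *= 2
        self.free_lists.setdefault(size, set()).add(offset)


allocator = BuddyAllocator(1024)
a = allocator.allocate(100)  # rounded up to a 128-unit block
b = allocator.allocate(200)  # 256-unit block
c = allocator.allocate(64)   # 64-unit block split from the free 128-unit buddy of a
print(a, b, c)  # 0 256 128
for offset in (a, c, b):
    allocator.free(offset)  # buddies merge back step by step
print(allocator.free_lists[1024])  # {0}
```

After all three blocks are freed, the buddies merge back into the original 1024-unit block, which is how the scheme limits long-term fragmentation.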

Non-contiguous Memory Management Schemes

Non-contiguous memory management schemes allow a process to be scattered across memory. A process's memory does not have to occupy one continuous range of addresses, so the operating system can place its parts wherever free memory is available.

These memory management schemes aim to address fragmentation issues but are complex to implement. Most modern operating systems use non-contiguous memory management.

Below is a description of crucial non-contiguous memory management mechanisms.

Paging

Paging is a memory management approach for managing RAM and virtual memory. In this approach, memory is divided into blocks of the same fixed size:

- Pages. Virtual memory blocks that have logical addresses.

- Page frames. RAM blocks that have physical addresses.

The paging mechanism uses a page table for each process to track the mapping between pages and frames. When RAM runs low, the operating system moves pages between RAM and secondary storage using the swapping mechanism.

Paging eliminates external fragmentation because any free frame can hold any page. The mechanism is flexible, portable, and efficient for memory management. Many modern operating systems (Linux, Windows, and macOS) use paging to manage memory.
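A minimal sketch of the translation the memory management unit (MMU) performs with a per-process page table, assuming 4 KiB pages and a page table modeled as a Python dict from page number to frame number. A missing entry stands in for a page that is not in RAM and raises a hypothetical `PageFault` exception; a real OS would handle the fault by loading the page and retrying the access.

```python
PAGE_SIZE = 4096  # assumed 4 KiB pages


class PageFault(Exception):
    """Raised when the requested page has no frame in RAM."""


def translate(page_table, virtual_address):
    """Map a virtual address to a physical address using one process's page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table.get(page_number)
    if frame is None:
        raise PageFault(page_number)  # the OS would load the page, then retry
    return frame * PAGE_SIZE + offset  # same offset, inside the mapped frame


process_a = {0: 5, 1: 9}  # page number -> frame number
process_b = {0: 2}
print(translate(process_a, 0x123))  # 20771: frame 5 * 4096 + 0x123
print(translate(process_b, 0x123))  # 8483: same virtual address, different frame
try:
    translate(process_b, 5000)  # page 1 is not mapped for process B
except PageFault as fault:
    print("page fault on page", fault.args[0])
```

Because each process has its own page table, the same virtual address lands in different frames, which is how each process gets the illusion of dedicated memory.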

Segmentation

Segmentation is a memory management scheme where memory is divided into logical segments. Every segment corresponds to a specific area for a function or task within a process.

Unlike pages, segments vary in size. A logical address consists of a segment number and an offset within the segment. The operating system maintains a segment table whose entries, called segment descriptors, store each segment's base address and limit (length). The CPU checks the offset against the limit and adds it to the base address to calculate the physical address.
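Segment-table translation can be sketched in a few lines, assuming each table entry holds a base address and a limit (the segment's length). The `SegmentDescriptor`, `SegmentationFault`, and `segment_translate` names and the example addresses are hypothetical.

```python
from typing import NamedTuple


class SegmentDescriptor(NamedTuple):
    base: int   # physical start address of the segment
    limit: int  # segment length in bytes


class SegmentationFault(Exception):
    """Raised for an unknown segment or an offset outside the segment."""


def segment_translate(segment_table, segment, offset):
    """Translate a (segment, offset) logical address into a physical address."""
    descriptor = segment_table.get(segment)
    if descriptor is None or not 0 <= offset < descriptor.limit:
        raise SegmentationFault((segment, offset))  # access outside the segment is refused
    return descriptor.base + offset


table = {
    0: SegmentDescriptor(base=0x4000, limit=0x1000),  # e.g. a code segment
    1: SegmentDescriptor(base=0x9000, limit=0x400),   # e.g. a data segment
}
print(hex(segment_translate(table, 1, 0x10)))  # 0x9010
```

The limit check is what makes segmentation a protection mechanism: an offset past the end of a segment raises a fault instead of reaching another segment's memory.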

Segmentation provides a dynamic, secure, and logical approach to managing memory. However, the mechanism is complex, and variable-sized segments are prone to external fragmentation, so it suits only some use cases.

Conclusion

After reading this guide, you understand the key concepts of memory management in operating systems and the main techniques operating systems use to manage memory.

Next, read how stacks and heaps compare in variable memory allocation in our stack vs. heap guide.