A high-performance computing (HPC) cluster consists of multiple interconnected, powerful computers. Working together in parallel, these computers can quickly process immense amounts of data, which lets the cluster handle demanding workloads efficiently.

The following text explains what an HPC cluster is, its components, and how it works in practice.

What Is an HPC Cluster?

An HPC cluster is a network of interconnected computers working in parallel to solve complex computational tasks. Each computer is called a node. These server clusters combine processing power to efficiently analyze and process extensive datasets, simulate complex systems, and tackle intricate scientific and engineering challenges.

Each node is equipped with its own processors, memory, and storage. The nodes are linked via a high-speed network that enables rapid communication and data exchange between them.

HPC Cluster Architecture

In an HPC cluster architecture, a group of computers (nodes) collaborates on shared tasks. Each node accepts and processes its share of the tasks and computations, and the partial results are then combined.

Below is the list of HPC architecture components:

- Compute nodes. Individual computers, each with its own processors, local memory, and other resources, that collectively work on computations. Compute nodes focus on data processing, software execution, and problem-solving. Common node types are:

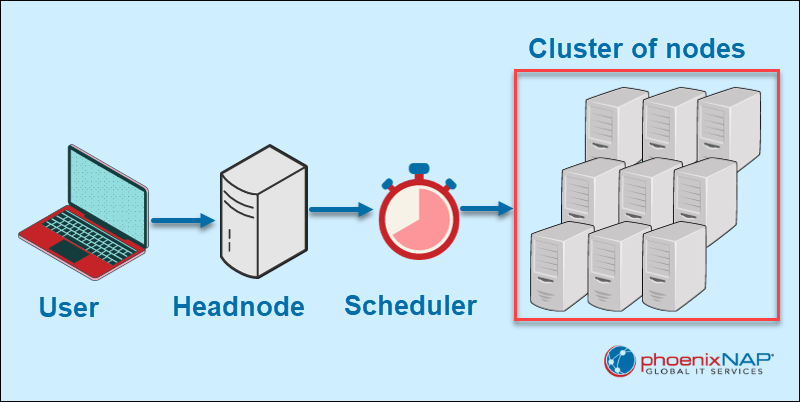

- Headnodes or login nodes. Serve as the entry point where users log in to access the cluster.

- Specialized data transfer nodes. Dedicated to moving data efficiently into, out of, and within the cluster.

- Regular compute nodes. Execute the computational tasks.

- Fat compute nodes. Have substantial memory capacity, typically at least 1 TB, to handle memory-intensive tasks.

- GPU nodes. Support computations on both CPU cores and Graphics Processing Units (GPUs), enhancing parallel processing capabilities.

- InfiniBand switch. A high-speed switch that connects all nodes in the cluster and carries the communication between them.

- Software. Includes tools for monitoring, provisioning, and managing the HPC cluster, along with development tools such as libraries, compilers, and debuggers.

- HPC scheduler. Manages jobs within the cluster and distributes computational workloads across the nodes.

- Networking infrastructure. Enables communication between nodes. A high-speed network moves data quickly between computing resources, to and from storage, and in and out of the cluster.

- Facilities. The physical space needed to house the HPC cluster and its server racks, together with sufficient power to operate and cool the servers.
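To make the compute node types above more concrete, the following Python sketch shows how a job's resource needs might map onto them. The `JobRequest` class, the `pick_node_type` function, and the 256 GB memory limit for regular nodes are hypothetical values chosen for illustration; real clusters define their own node classes and limits. Headnodes and data transfer nodes are left out because they are not meant to run compute jobs.

```python
from dataclasses import dataclass

# Hypothetical memory limit of a regular compute node (an assumption, not a standard).
REGULAR_NODE_MEMORY_GB = 256


@dataclass
class JobRequest:
    """Hypothetical, simplified description of what a job needs."""
    name: str
    memory_gb: int
    gpus: int = 0


def pick_node_type(job: JobRequest) -> str:
    """Return the compute node type that can satisfy the job's requirements."""
    if job.gpus > 0:
        # Only GPU nodes provide graphics processing units.
        return "gpu"
    if job.memory_gb > REGULAR_NODE_MEMORY_GB:
        # Jobs that exceed a regular node's memory go to fat nodes.
        return "fat"
    return "regular"


for job in (
    JobRequest("model-training", memory_gb=64, gpus=4),
    JobRequest("genome-assembly", memory_gb=800),
    JobRequest("parameter-sweep", memory_gb=16),
):
    print(job.name, "->", pick_node_type(job))
```

Checking for GPUs before memory is one design choice among several; a real scheduler would also confirm that the chosen GPU node has enough memory for the job.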

How Does an HPC Cluster Work?

HPC clusters operate by breaking down extensive computational tasks into smaller, more manageable segments distributed across the nodes.
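A minimal Python sketch of this decomposition, assuming the work is a range of independent items (for example, simulation steps or data records) that can be processed in any order. The function name `split_into_chunks` is illustrative; it gives each node a contiguous block whose size differs from the other blocks by at most one item.

```python
def split_into_chunks(total_items: int, num_nodes: int) -> list[tuple[int, int]]:
    """Split the index range [0, total_items) into num_nodes contiguous chunks.

    Chunk sizes differ by at most one item, so no node gets noticeably
    more work than the others.
    """
    if num_nodes <= 0:
        raise ValueError("num_nodes must be positive")
    base, remainder = divmod(total_items, num_nodes)
    chunks = []
    start = 0
    for node in range(num_nodes):
        # The first `remainder` nodes each take one extra item.
        size = base + (1 if node < remainder else 0)
        chunks.append((start, start + size))
        start += size
    return chunks


# Example: 10 simulation steps spread across 4 nodes.
print(split_into_chunks(10, 4))  # [(0, 3), (3, 6), (6, 8), (8, 10)]
```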

Users log in from their local computers to the HPC cluster headnode using the SSH (Secure Shell) protocol. The headnode is an access point to the cluster that allows users to submit and manage tasks. From the headnode, task requests are sent to the scheduler.

A job scheduler optimizes workload distribution by overseeing how resources are allocated. It aims to keep every node working near full capacity and to prevent bottlenecks that would slow down the cluster's operations.
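As a simplified illustration, the Python sketch below assigns jobs with the longest-processing-time-first (LPT) heuristic: jobs are sorted from longest to shortest, and each one goes to the node that will become free earliest. It assumes job runtimes are known in advance, and the job names and runtimes are made up. Production schedulers such as Slurm or PBS also weigh priorities, resource requests, and fairness, so this sketch does not describe how any particular scheduler works.

```python
import heapq


def schedule_jobs(job_runtimes: dict[str, float], num_nodes: int) -> dict[int, list[str]]:
    """Assign jobs to nodes using the longest-processing-time-first heuristic."""
    if num_nodes <= 0:
        raise ValueError("num_nodes must be positive")
    # Min-heap of (current load, node id): the least-loaded node is on top.
    loads = [(0.0, node) for node in range(num_nodes)]
    heapq.heapify(loads)
    assignment: dict[int, list[str]] = {node: [] for node in range(num_nodes)}

    # Place the longest jobs first, each on the node that frees up earliest.
    for job, runtime in sorted(job_runtimes.items(), key=lambda item: item[1], reverse=True):
        load, node = heapq.heappop(loads)
        assignment[node].append(job)
        heapq.heappush(loads, (load + runtime, node))
    return assignment


jobs = {"cfd": 8.0, "genome": 5.0, "render": 4.0, "etl": 3.0, "test": 2.0}
print(schedule_jobs(jobs, 2))
```

With these sample jobs, both nodes end up with 11 units of work, so neither node sits idle while the other is still busy.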

Each node executes its designated task independently, and the results are aggregated to produce the final output. This approach, known as parallel computing, is fundamental to how HPC clusters work.
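The split-process-aggregate pattern can be sketched in Python. In this hypothetical example, threads from `concurrent.futures.ThreadPoolExecutor` stand in for cluster nodes: each computes a partial sum of squares over its own slice of the data, and the partial results are then combined. On a real cluster, the workers would be separate machines, typically coordinated through a message-passing library such as MPI. In standard CPython builds, the global interpreter lock prevents these threads from speeding up CPU-bound work, so the example illustrates the structure of the computation rather than its performance.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(chunk: range) -> int:
    """The work one 'node' does: process only its own slice of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(n: int, num_workers: int) -> int:
    """Distribute the range [0, n) across workers, then aggregate their results."""
    # Distribute: give each worker a strided slice of the input.
    chunks = [range(start, n, num_workers) for start in range(num_workers)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(partial_sum_of_squares, chunks))
    # Aggregate: combine the partial results into the final output.
    return sum(partial_results)


print(parallel_sum_of_squares(1_000, 4))  # 332833500
```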

What Does an HPC Cluster Look Like in Practice?

HPC clusters are used in scientific research, engineering, financial analysis, medical research, machine learning, and other fields. As scientific and engineering challenges grow more complex and the volume of big data keeps increasing, the growing demand for HPC clusters is unsurprising.

HPC clusters are versatile tools with applications in:

- Automotive industry. Crash test simulations, virtual airbag testing, pedestrian impact analysis, etc.

- Urban planning. Smog level prediction, skyscraper design, traffic prediction, etc.

- Aircraft industry. Training simulations, aircraft design improvement, fuel efficiency, etc.

- Financial market. AI fraud detection, automated trading, cryptocurrency mining, etc.

- Medical research. Early disease detection, clinical trial processing, precision medicine, genomic sequencing, etc.

- Machine learning. Training complex models, handling large datasets, etc.

When to Use an HPC Cluster

HPC clusters have an advantage over regular computers in scenarios that require substantial computational power and parallel processing. They are essential when computational demands exceed what a single computer can deliver and when tasks benefit from parallelization, scalability, and efficient use of computing resources.
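Amdahl's law offers a rough way to judge whether a task benefits from parallelization: if a fraction p of a program's runtime can run in parallel on N nodes, the overall speedup is at most 1 / ((1 - p) + p / N). The Python sketch below evaluates that bound. It treats each node as one parallel worker and ignores communication overhead, so real speedups are usually lower. The function name `amdahl_speedup` is illustrative.

```python
def amdahl_speedup(parallel_fraction: float, num_nodes: int) -> float:
    """Upper bound on speedup according to Amdahl's law.

    parallel_fraction is the share of the runtime that can be parallelized
    (between 0 and 1); the remaining serial part does not get faster.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_nodes)


for p in (0.50, 0.95, 0.99):
    print(f"p={p:.2f}: {amdahl_speedup(p, 100):.1f}x on 100 nodes")
```

Even with 95% of the work parallelized, 100 nodes yield a speedup below 17x, because the serial 5% cannot be distributed. Tasks with a large serial portion gain little from a cluster.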

Examples of workloads where HPC clusters are used are:

- Complex simulations and modeling. HPC clusters excel in parallelizing computations, enabling faster and more efficient simulation processing when significant computational resources are needed.

- Large-scale data processing. HPC clusters distribute data processing tasks across multiple nodes, accelerating the analysis of extensive datasets.

- Scientific research and engineering. These clusters provide the computational power needed for scientific simulations, allowing researchers and engineers to tackle complex problems and optimize workflows.

- Parallel workloads. HPC clusters handle parallel processing efficiently and are well suited to machine learning training, where tasks are divided and executed concurrently.

- Time-critical applications. Shorter processing times make these clusters ideal for applications where quick results are crucial, such as financial modeling or real-time analytics.

- Resource-intensive applications. Scalable, robust computing resources allow users to match computational power to the demands of resource-intensive applications.

Conclusion

After reading this article, you now know what HPC clusters are, how they work, and where they are applied.

Next, learn about the power of combining AI with HPC.