High-performance computing (HPC) uses multiple interconnected, powerful computers operating in parallel to process and analyze data at high speed. HPC architecture refers to the design and structure that enable HPC clusters to handle tasks involving large datasets and complex calculations.

This article explains what HPC architecture is, its key components, the common architecture types, and their benefits and challenges.

Core Components of HPC Architecture

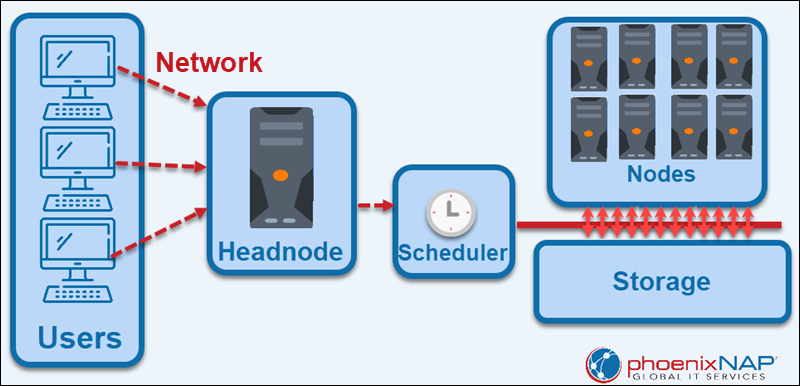

In an HPC architecture, a group of computers (nodes) collaborates on shared tasks. Each node accepts and processes its share of tasks and computations independently, and the nodes coordinate and synchronize their execution to produce a combined result.

The HPC architecture has mandatory and optional components.

Mandatory Components

Compute, storage, and network components form the basis of an HPC architecture, and a scheduler coordinates work across them. The following sections describe each component.

Compute

The compute component is dedicated to processing data, executing software or algorithms, and solving problems. It consists of clusters of individual computers called nodes. Each node has processors, local memory, and storage that work together to perform computations. Common node types include:

- Head or login nodes. Entry points where users log in to access the cluster.

- Regular compute nodes. Nodes that execute computational tasks.

- Specialized data management nodes. Nodes dedicated to efficient data transfer within the cluster.

- Fat compute nodes. Nodes for memory-intensive tasks, with large memory capacities that typically exceed 1 TB.
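To make the division of labor between node types concrete, the sketch below routes a job to a node role based on what it needs. It is a minimal illustration under stated assumptions: the `Job` class, the `pick_node_type` function, and the 256 GB memory limit for regular compute nodes are all hypothetical. Real schedulers expose node groups as partitions (Slurm) or queues (PBS) with their own selection rules.

```python
from dataclasses import dataclass

# Assumption for illustration: regular compute nodes have 256 GB of RAM.
REGULAR_NODE_MEMORY_GB = 256


@dataclass
class Job:
    name: str
    memory_gb: int               # peak memory the job needs
    interactive: bool = False    # e.g., editing or compiling after logging in
    data_transfer: bool = False  # e.g., staging input files into the cluster


def pick_node_type(job: Job) -> str:
    """Map a job to one of the node roles described above (illustrative only)."""
    if job.interactive:
        return "login"    # interactive work happens on head/login nodes
    if job.data_transfer:
        return "data"     # bulk transfers go to data management nodes
    if job.memory_gb > REGULAR_NODE_MEMORY_GB:
        return "fat"      # memory-intensive work needs a fat node
    return "compute"      # everything else runs on regular compute nodes


jobs = [
    Job("compile-code", memory_gb=4, interactive=True),
    Job("stage-inputs", memory_gb=2, data_transfer=True),
    Job("cfd-simulation", memory_gb=128),
    Job("genome-assembly", memory_gb=1500),
]
for job in jobs:
    print(f"{job.name} -> {pick_node_type(job)}")
```

In this sketch, `compile-code` lands on a login node, `stage-inputs` on a data node, `cfd-simulation` on a regular compute node, and the 1.5 TB `genome-assembly` job on a fat node.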

Storage

The storage component stores and retrieves the data that the compute component generates and processes.

HPC storage types are:

- Physical. Traditional HPC systems often use physical, on-premises storage, which can include high-performance parallel file systems, storage area networks (SANs), or network-attached storage (NAS) systems. Physical storage connects directly to the HPC infrastructure and provides low-latency data access for compute nodes in the local environment.

- Cloud storage. Cloud-based HPC storage solutions offer scalability and flexibility. External storage is traditionally the slowest component of a computer system, but cloud storage services built for HPC are designed to deliver high throughput to compute nodes.

- Hybrid. A hybrid HPC storage solution combines on-premises physical storage with cloud storage to create a flexible, scalable infrastructure. This approach enables organizations to address specific requirements, optimize costs, and balance on-site control with the scalability of the cloud.

Network

The HPC network component enables communication and data exchange among the various nodes within the HPC system.

HPC networks are designed for high bandwidth and low latency. Interconnect technologies such as InfiniBand and high-speed Ethernet, network topologies such as fat-tree and dragonfly, and tuning strategies all support the rapid transfer of large volumes of data between nodes.
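Why both bandwidth and latency matter can be estimated with the simple latency-bandwidth model (often called the alpha-beta or Hockney model): transfer time is a fixed startup latency plus message size divided by bandwidth. The sketch below assumes a hypothetical interconnect with 1 microsecond latency and 25 GB/s bandwidth; the parameter values are illustrative, not measurements of any specific product.

```python
def transfer_time_s(message_bytes: float, latency_s: float,
                    bandwidth_bytes_per_s: float) -> float:
    """Latency-bandwidth estimate: startup latency plus size / bandwidth."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


# Hypothetical interconnect parameters, chosen only for illustration.
LATENCY_S = 1e-6          # 1 microsecond per message
BANDWIDTH_BPS = 25e9      # 25 GB/s

small = transfer_time_s(1_000, LATENCY_S, BANDWIDTH_BPS)          # 1 KB message
large = transfer_time_s(1_000_000_000, LATENCY_S, BANDWIDTH_BPS)  # 1 GB message

print(f"1 KB: {small * 1e6:.2f} microseconds (latency-dominated)")
print(f"1 GB: {large * 1e3:.2f} milliseconds (bandwidth-dominated)")
```

For these assumed values, the 1 KB message takes about 1.04 microseconds, almost all of it latency, while the 1 GB message takes about 40 milliseconds, almost all of it bandwidth. Applications that exchange many small messages benefit most from low latency; those that move large datasets benefit most from high bandwidth.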

HPC Scheduler

Job requests submitted from the head node are directed to the scheduler.

A scheduler is a vital HPC component. This utility monitors available resources and allocates requests across the nodes to optimize throughput and efficiency.

The job scheduler balances workload distribution and ensures nodes are neither overloaded nor underutilized.
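The balancing idea can be illustrated with a greedy least-loaded policy: sort jobs by estimated runtime, then give each one to the node with the smallest current load. This is a minimal sketch of one classic heuristic (longest processing time first), not how any particular scheduler works; production schedulers such as Slurm or PBS also handle queues, priorities, reservations, and backfilling. The job names, runtimes, and node names are hypothetical.

```python
import heapq


def schedule(job_costs: dict[str, float], nodes: list[str]) -> dict[str, list[str]]:
    """Greedy least-loaded scheduling, placing the largest jobs first."""
    # Min-heap of (current_load, node_name): the least-loaded node is on top.
    heap = [(0.0, node) for node in nodes]
    heapq.heapify(heap)
    assignment: dict[str, list[str]] = {node: [] for node in nodes}

    # Placing long jobs first tends to even out the final node loads.
    for job, cost in sorted(job_costs.items(), key=lambda item: item[1], reverse=True):
        load, node = heapq.heappop(heap)
        assignment[node].append(job)
        heapq.heappush(heap, (load + cost, node))

    return assignment


# Hypothetical jobs with estimated runtimes in hours.
jobs = {"sim-a": 8, "sim-b": 5, "render": 4, "post-proc": 3, "analysis": 2}
plan = schedule(jobs, ["node01", "node02", "node03"])
for node, assigned in plan.items():
    print(node, assigned)
```

With these inputs, the three nodes end up with loads of 8, 7, and 7 hours, so no node sits idle while another is overloaded.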

Optional Components

Which optional components an organization includes depends on its requirements, applications, and budget. Common optional components include:

- GPU-accelerated systems. Accelerate highly parallel tasks, such as simulations, machine learning, and scientific modeling, by offloading them from CPU cores to Graphics Processing Units (GPUs). GPUs work alongside the CPUs to speed up large-scale processing across the broader system.

- Data management software. Systems that handle data storage, retrieval, organization, and movement within an HPC environment. Data management programs optimize system resource allocation to meet specific needs.

- InfiniBand switch. Connects and facilitates communication between all nodes in the cluster.

- Facilities and power. The physical space, power, and cooling required to house the HPC system.

- FPGAs (Field-Programmable Gate Arrays). Provide customizable hardware acceleration in environments where highly efficient, low-latency processing is essential.

- High-performance storage accelerators. Parallel file systems or high-speed storage controllers enhance data access and transfer speeds.

- Remote visualization nodes. Offload visualization tasks from the main compute nodes, which helps maintain computational efficiency when data visualization is critical to HPC workflows.

- Energy-efficient components. Energy-efficient processors, memory, and power supplies minimize the environmental impact and operational costs and improve data center sustainability.

- Scalable and flexible network fabric. Enhances node communication, improving overall system performance.

- Advanced security mechanisms. Include hardware-based encryption, secure boot processes, and intrusion detection systems.

Common Types of HPC Architecture

The main hardware and system types for processing demanding computational tasks in an HPC system include parallel, cluster, and grid computing.

Parallel Computing

In HPC architecture, parallel computing involves organizing multiple nodes to run computations simultaneously. The primary goal is to increase computational speed and efficiency by dividing tasks into smaller subtasks that can be processed concurrently.

There are different forms, including:

- Task parallelism. A complex computational task is split into smaller, independent subtasks, possibly performing different operations, that run concurrently with minimal dependencies between them.

- Data parallelism. Data is distributed across multiple nodes so that each node executes the same operation on a different subset of the data simultaneously. This approach is effective when the same operation must be applied to many data segments.

- Instruction-level parallelism. A single processor executes multiple instructions concurrently, using techniques such as pipelining and superscalar execution to improve overall computation speed and efficiency.

This architecture type is well-suited for handling large-scale computations, such as simulations, scientific modeling, and data-intensive tasks, as it enables simultaneous calculations by harnessing the power of hundreds of processors.
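The difference between task parallelism and data parallelism can be sketched with Python's `concurrent.futures`. In this minimal illustration, threads stand in for compute nodes; a real HPC code would typically distribute work across nodes with MPI. In CPython, threads do not speed up pure-Python CPU-bound work because of the global interpreter lock, so the example shows the structure of each decomposition rather than a performance gain. The helper names `sum_of_squares` and `split` are hypothetical. Instruction-level parallelism is not shown, because it happens inside the processor rather than in application code.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def sum_of_squares(chunk: list[int]) -> int:
    """The same operation every worker applies to its own data subset."""
    return sum(x * x for x in chunk)


def split(data: list[int], parts: int) -> list[list[int]]:
    """Divide data into `parts` contiguous chunks of near-equal size."""
    size, rem = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


data = list(range(1, 101))

with ThreadPoolExecutor(max_workers=4) as pool:
    # Data parallelism: one function, different chunks, partial results combined.
    partial_sums = list(pool.map(sum_of_squares, split(data, 4)))
    data_parallel_total = sum(partial_sums)

    # Task parallelism: different, independent functions run concurrently.
    futures = {
        "minimum": pool.submit(min, data),
        "maximum": pool.submit(max, data),
        "mean": pool.submit(mean, data),
    }
    task_results = {name: f.result() for name, f in futures.items()}

print(data_parallel_total)
print(task_results)
```

The data-parallel part combines four partial sums into the sum of squares of 1 to 100, while the task-parallel part computes three unrelated statistics at the same time.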

Cluster Computing

In HPC architecture, cluster computing involves connecting multiple individual computers (nodes) into a single resource. Cluster computing enables nodes to collaborate, handle computational tasks, and share resources. A scheduler typically oversees the task coordination and resource management within a cluster.

This architecture is used in HPC for its ability to provide substantial processing power by leveraging the collective capabilities of interconnected nodes. Cluster computing is cost-effective because clusters can be built from standard, off-the-shelf hardware and scaled to fit the budget and requirements.

Grid and Distributed Computing

Grid or distributed computing connects geographically dispersed computing resources to form a single, virtual HPC system. Unlike cluster computing, which typically involves a localized group of nodes, a grid spans multiple locations within a single organization or across various institutions.

Grid nodes don't necessarily work on the same or similar computations; each may handle a different part of a larger, more complex problem. This architecture allows the pooling of computational power from diverse sources, enabling resource sharing and collaborative problem-solving over large distances.

Benefits of HPC Architecture

HPC is a driving force for new scientific discoveries and innovation. HPC applications span healthcare, financial services, automotive and aircraft design, and space exploration.

Key benefits are:

- Faster tasks lead to lower costs. HPC accelerates application processing, delivering faster results and significant time and cost savings.

- Reduced need for physical testing. HPC enables detailed simulations that reduce the number of physical tests required, saving time and cutting costs in various industries.

- Fault tolerance. If a node fails, the remaining nodes can continue to run, although the system operates at reduced capacity.

- Processing speed. HPC delivers exceptional processing speed and completes extensive calculations far faster than a single computer could.
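The fault-tolerance behavior can be sketched as reassigning a failed node's work to the survivors. This minimal sketch assumes tasks are independent and can simply be rerun elsewhere; real systems typically combine scheduler requeueing with application checkpointing. The `redistribute` function and the node and task names are hypothetical.

```python
def redistribute(assignment: dict[str, list[str]], failed: str) -> dict[str, list[str]]:
    """Reassign a failed node's tasks round-robin to the surviving nodes."""
    survivors = [node for node in assignment if node != failed]
    if not survivors:
        raise RuntimeError("no surviving nodes to take over the work")
    # Copy the survivors' existing task lists so the input is not modified.
    new_assignment = {node: list(assignment[node]) for node in survivors}
    for i, task in enumerate(assignment.get(failed, [])):
        new_assignment[survivors[i % len(survivors)]].append(task)
    return new_assignment


# Hypothetical four-node cluster with two tasks per node.
cluster = {
    "node01": ["t1", "t2"],
    "node02": ["t3", "t4"],
    "node03": ["t5", "t6"],
    "node04": ["t7", "t8"],
}
after = redistribute(cluster, failed="node02")
print(after)
# All eight tasks still run, but on three nodes instead of four,
# so some surviving nodes carry extra work and the job finishes later.
```

The same amount of work spread over fewer nodes is why a cluster keeps running after a failure but at reduced capacity.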

Each HPC architecture type offers specific advantages and caters to different needs and use cases:

- Cluster computing. Offers scalability, cost-effectiveness, and high performance.

- Grid or distributed computing. Provides resource sharing, flexibility, and collaboration across geographically distributed resources.

- Parallel computing. Offers high processing speed, workload distribution, and task independence, making it suitable for tasks that can be divided into smaller, independent units for simultaneous execution.

Challenges of HPC Architecture Implementation

General HPC disadvantages include:

- High costs. Setting up an on-site HPC system requires a substantial budget, with high upfront costs for hardware, skilled technicians, and an on-premises data center. Maintaining HPC also entails high ongoing costs for cooling and power.

- Infrastructure maintenance. As HPC equipment ages, node failures become more frequent and require ongoing maintenance and hardware replacement.

- Long purchasing cycles. Acquiring HPC equipment takes time due to high demand.

Apart from common challenges, each architecture type also has specific issues:

- Cluster computing. Managing many nodes in a cluster is complex, and inefficient networking or poorly optimized parallelization leads to bottlenecks, limiting overall performance.

- Grid or distributed computing. Communication between geographically distributed resources introduces latency and overhead. Integrating resources across different administrative domains also raises security and data privacy concerns.

- Parallel computing. Coordinating parallel tasks introduces synchronization overhead and risks such as race conditions and deadlocks. Scalability is also limited: the portion of a workload that cannot be parallelized caps the achievable speedup, regardless of how many processors are added (Amdahl's law).
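The scalability limit of parallel computing can be quantified with Amdahl's law: if a fraction p of a workload can be parallelized across N processors, the speedup is S(N) = 1 / ((1 - p) + p / N). The sketch below evaluates it for an assumed workload that is 95% parallelizable; the 0.95 figure is illustrative only.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Amdahl's law: S(N) = 1 / ((1 - p) + p / N)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)


# Assumed workload: 95% of the runtime can be parallelized.
for n in (1, 8, 64, 512, 4096):
    print(f"{n:>5} processors: {amdahl_speedup(0.95, n):6.2f}x speedup")

# As N grows without limit, S(N) approaches 1 / (1 - p), which is 20x for p = 0.95.
```

Even with 4,096 processors, the speedup for this assumed workload stays just under 20x, because the 5% serial portion dominates once the parallel portion has been spread thin.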

HPC Architecture: General Design Principles

Regardless of the architecture type, several general principles apply when designing an HPC environment:

- Dynamic architecture. Avoid rigid, static architectures and cost estimates that assume a steady-state workload.

- Scalability. Create a scalable architecture that allows you to seamlessly add or remove computational resources, like nodes or processors.

- Data management. Devise an effective data management strategy to accommodate different data types, sizes, and access patterns. How data is stored, processed, and accessed impacts system performance.

- Heterogeneous computing. Combine hardware and software components, like GPUs, FPGAs, and accelerators, to streamline specific computational tasks.

- Automation. Use automated solutions to manage and provision system resources. Manual input is the least efficient component in an HPC environment.

- Collaboration. When working on a project that spans multiple organizations, it is vital to preselect collaborative tools, scripts, and data-sharing protocols during the design phase to avoid future incompatibility issues.

- Workload testing. HPC applications are complex and require comprehensive testing, which is the most reliable way to measure application performance across different environments.

- Cost vs. Time. For time-critical tasks, prioritize performance over cost. Focus on cost optimization for non-time-sensitive workloads.

Conclusion

This article explained HPC architecture types, the pros and cons of using different models, and the basic principles of designing HPC environments.

Next, learn how HPC and AI work together.