Huge amounts of personal information collected and analyzed by artificial intelligence models create risks of data breaches and re-identification. Traditional data anonymization techniques often do not hold up against re-identification attacks, which creates a need for stronger privacy methods.

Differential privacy is a framework that addresses this challenge by providing a mathematical approach to protecting individual privacy. It aims to preserve data utility while providing quantifiable privacy guarantees.

This article provides an overview of differential privacy and examines its advantages, limitations, and practical applications.

What Is Differential Privacy in AI?

Differential privacy is a mathematical framework that allows aggregate analysis of a dataset while protecting the privacy of the individuals in it. It adds controlled noise to the data, to query results, or to the training process of an AI system, which makes it difficult to extract sensitive information from the query results or the AI model.

Adding a calibrated amount of noise ensures that the presence or absence of any single individual's data does not significantly affect the result of an analysis.

Privacy protection is quantified using the following two concepts:

- Epsilon (ε), i.e., the privacy budget, bounds how much the output distribution can change when a single individual's data is added or removed. A smaller ε indicates a stronger privacy guarantee but reduced accuracy.

- Delta (δ) bounds the probability that the ε guarantee fails to hold. A δ value close to zero means a very low chance of information leakage. Selecting values for ε and δ involves a trade-off between privacy and data utility.

Note: Stronger privacy (lower ε and δ) reduces data utility and accuracy, and vice versa. Organizations must consider their risk tolerance and application to determine values for these parameters.
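The two parameters come together in the standard formal definition, stated here for reference. The symbols are notation introduced for this illustration: $M$ is a randomized mechanism (a query or a training procedure), $D$ and $D'$ are any two datasets that differ in one individual's record, and $S$ is any set of possible outputs. $M$ is $(\varepsilon, \delta)$-differentially private if:

```latex
\Pr[M(D) \in S] \le e^{\varepsilon} \cdot \Pr[M(D') \in S] + \delta
```

With $\delta = 0$ and $\varepsilon = 0.1$, for instance, one person's data can make any output at most $e^{0.1} \approx 1.105$ times more likely.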

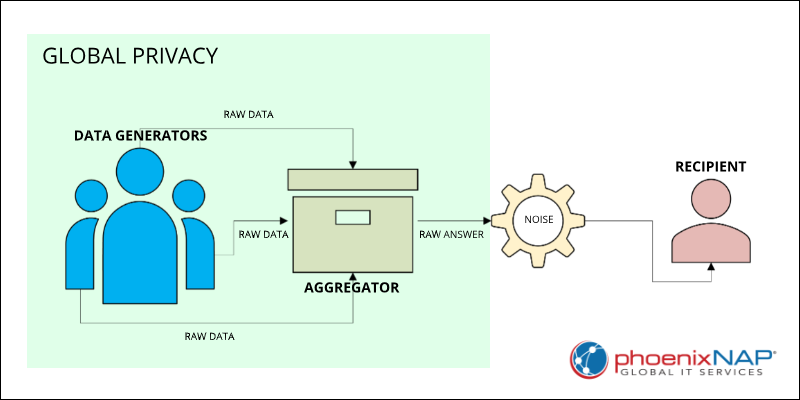

Global Differential Privacy

Global Differential Privacy (GDP) adds noise to the output of an algorithm that operates on the entire dataset. GDP is used when an aggregator holds the original data and adds noise before releasing query results or a trained model to a recipient.

For example, GDP can add noise to the final average value before sharing it. When training a machine learning model, noise can be added to the model's parameters after training to ensure differential privacy.
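The averaging example can be sketched with the Laplace mechanism, one common way to add calibrated noise. This is a minimal illustration and not a production implementation: the function names, the age data, and the bounds are hypothetical, and the sketch assumes the number of records is public and that neighboring datasets differ by replacing one record.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng=None):
    """Release an epsilon-DP estimate of the mean of `values`.

    Assumption: the record count is public and neighboring datasets
    differ by replacing one record.
    """
    rng = rng or random.Random()
    # Clip so that one record can shift the mean by at most (upper - lower) / n.
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    sensitivity = (upper - lower) / n
    true_mean = sum(clipped) / n
    # Noise scale grows with sensitivity and shrinks as epsilon grows.
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: the aggregator holds raw ages and shares only the noisy mean.
ages = [23, 35, 41, 29, 52, 47, 38, 61, 33, 45]
print(private_mean(ages, lower=0, upper=100, epsilon=1.0))
```

With only ten records the noise is large relative to the signal. The same ε on a dataset of thousands of records yields a much more accurate average, because the sensitivity shrinks as the number of records grows.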

GDP relies on a trustworthy aggregator, i.e., an aggregator that does not reveal the original data or the noise-free results. This reliance on a trusted curator can be a point of vulnerability when data sensitivity is high or trust is limited. Data owners may hesitate to entrust a single entity with raw data, even if noise is added later.

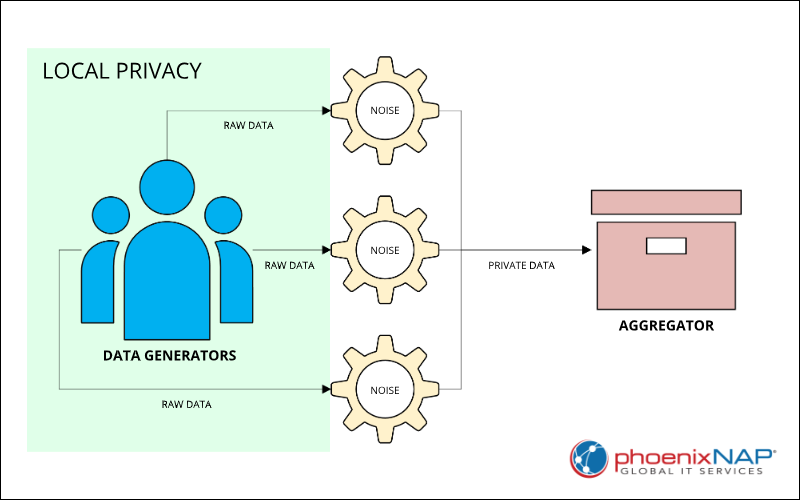

Local Differential Privacy

Local Differential Privacy (LDP) adds noise to raw data before sending it to a data aggregator. It provides a stronger privacy guarantee as it does not require individuals to trust a central entity with raw data.

An example of LDP is surveys or telemetry systems where users add noise to their data before submission to a central server. In federated learning, LDP can be applied by adding noise to model updates on each client's device.
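A classic way to realize the survey scenario is randomized response, shown below as a hedged sketch. Each user perturbs a yes/no answer on their own device, and the server corrects for the known flipping probability when aggregating. The function names and the simulated population are hypothetical.

```python
import math
import random


def randomized_response(true_bit, epsilon, rng):
    """Report the true bit with probability e^eps / (1 + e^eps), else flip it."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return true_bit if rng.random() < p_truth else 1 - true_bit


def estimate_rate(reports, epsilon):
    """Debias the observed share of 1s to estimate the true share."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # E[observed] = rate * (2p - 1) + (1 - p), solved for rate.
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)


# Simulated population: 30% of users hold the sensitive attribute.
rng = random.Random(42)
true_bits = [1] * 3000 + [0] * 7000
reports = [randomized_response(b, 1.0, rng) for b in true_bits]
print(estimate_rate(reports, 1.0))  # an estimate near 0.3, not an exact value
```

The server never sees a trustworthy individual answer, yet the aggregate estimate converges on the true rate as the number of users grows.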

While LDP offers enhanced privacy, it often reduces data utility and accuracy compared to GDP. Because noise is applied to each data point independently, the overall signal in the aggregated data may be weaker, and more data is needed to match GDP's level of accuracy.
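A back-of-the-envelope comparison illustrates the gap. Assume $n$ users who each hold a value in $[0, 1]$, and assume the Laplace mechanism in both settings (an illustrative choice; other mechanisms give different constants). In the global setting, noise is added once to the mean, whose sensitivity is $1/n$. In the local setting, every user adds noise of scale $1/\varepsilon$, and the aggregator averages $n$ independent noise terms. The standard deviation of the noise in the released mean is then:

```latex
\sigma_{\text{global}} = \frac{\sqrt{2}}{\varepsilon n},
\qquad
\sigma_{\text{local}} = \frac{\sqrt{2}}{\varepsilon \sqrt{n}},
\qquad
\frac{\sigma_{\text{local}}}{\sigma_{\text{global}}} = \sqrt{n}
```

Under these assumptions, with $n = 10{,}000$ users the local noise is 100 times larger than the global noise at the same $\varepsilon$.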

Differential Privacy Use Cases

Differential privacy techniques improve sensitive data analysis, which is why they are popular in the following industries:

- Healthcare. Differential privacy allows the analysis of patient records and drug efficacy assessment while ensuring patient confidentiality. Furthermore, researchers use it when training AI models on medical images, allowing the models to learn without exposing private health information.

- Finance. Financial institutions use differential privacy for fraud detection and secure data sharing, gaining insights from transaction data without exposing individual financial details.

- Technology. Tech companies apply differential privacy when collecting user behavior data, such as emoji preferences and browsing habits, to improve products and services without exposing individual users.

- Government. Agencies like the U.S. Census Bureau use differential privacy to protect census data confidentiality while releasing demographic information for public use.

The following is a list of AI-specific applications of differential privacy:

- Processing sensitive text data, such as medical records or personal conversations, during Large Language Model (LLM) training.

- Image classification by ML models, especially in domains like medical imaging and biometrics.

- Web page optimization for search engines and voice-based queries.

Differential Privacy Examples

The following organizations implement differential privacy to protect user data and enable data analysis:

- OpenAI. Alongside other methods like encryption and access control, OpenAI uses differential privacy to reduce the risk of its models memorizing sensitive training data. The company prioritizes a comprehensive approach to privacy and applies specific measures for enterprise clients to ensure their data is excluded from model training.

- Google. Uses differential privacy across products and services like Chrome, YouTube, and Maps to analyze user activity and improve user experience without linking noisy data with identifying information.

- Microsoft. Uses differential privacy when analyzing data from Windows, Office, and Xbox users to enhance products while protecting customer privacy.

- Apple. Uses local differential privacy to collect and analyze data from user devices, including keyboard usage, emoji preferences, and browsing patterns, without seeing or storing the raw data.

- The U.S. Census Bureau. Used the framework in the 2020 Census to prevent re-identification of individuals. It added statistical noise to census results to protect confidentiality.

Differential Privacy Advantages and Disadvantages

Differential privacy protects data privacy but presents challenges concerning data utility and implementation complexity. Understanding the advantages and disadvantages is essential for making informed decisions about its application.

Advantages

Below is a list of advantages of using differential privacy in a project:

- Mathematically proven. The mathematical foundation of differential privacy techniques ensures a quantifiable level of privacy. Other anonymization methods can be vulnerable to re-identification attacks.

- Linkage attack protection. Because the guarantee holds regardless of the auxiliary information an attacker has, differential privacy limits what linking the output with external datasets can reveal about an individual, while preserving the utility of data for aggregate analysis.

- Aggregated data accuracy. Individuals may be more willing to provide accurate and detailed information when confident that the aggregator protects their privacy.

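- Compositional security. Differential privacy helps privacy risk management because the privacy loss of multiple analyses adds up in a quantifiable way and can be tracked against a budget. Some differential privacy techniques adjust the accuracy of query results based on the query's privacy requirements.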

- Compliance. Differential privacy helps organizations comply with data privacy regulations like GDPR.

Disadvantages

Differential privacy has the following limitations and disadvantages:

- Reduced data quality and accuracy in small datasets. The noise added to protect privacy can obscure signals in the data and produce less accurate results.

- Increased technical complexity of data analysis. More complexity means increased costs and decreased accessibility for organizations without expertise.

- Lower data utility in some cases. The added noise can decrease the usefulness of data, particularly when individual-level insights are required.

- Multiple queries on the same dataset weaken privacy. This property makes it challenging to balance privacy and accuracy when multiple analyses are needed.

- Computational overhead. For complex AI models, such as deep neural networks, applying differential privacy can increase training time and memory use and reduce the model's accuracy.
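The weakening under repeated queries can be made concrete with basic sequential composition: if each query on the same data is ε_i-differentially private, the combined release is at most (ε_1 + ε_2 + ...)-differentially private. The sketch below tracks that running total against a fixed budget. The `PrivacyBudget` class is hypothetical, and tighter composition bounds exist that this simple accounting does not use.

```python
class PrivacyBudget:
    """Track cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon):
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    def spend(self, epsilon):
        """Charge one query's epsilon; refuse it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so floating-point rounding does not reject an exact fit.
        if self.spent + epsilon > self.total_epsilon + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon
        return self.total_epsilon - self.spent  # remaining budget


budget = PrivacyBudget(total_epsilon=1.0)
budget.spend(0.4)  # first query
budget.spend(0.4)  # second query
# A third query at epsilon=0.4 would raise RuntimeError.
```

Once the budget is spent, further queries must either be refused or answered from already released results, which is why analysts need to plan their queries in advance.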

How Does Differential Privacy Relate to Private AI?

Differential privacy addresses a critical AI-related issue by providing a mechanism to reconcile the need for training effective models with data privacy concerns. Allowing analysis and model training on sensitive data without revealing individual information enables responsible AI development and deployment.
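One widely used way to train models under differential privacy is differentially private stochastic gradient descent (DP-SGD), a technique the text above does not name. The sketch below shows only its core step under simplifying assumptions: gradients are plain Python lists, the function and parameter names are hypothetical, and the accounting that maps `noise_multiplier` to concrete (ε, δ) values is omitted.

```python
import math
import random


def dp_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        # Scale down so no single example contributes more than clip_norm.
        factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, g in enumerate(grad):
            total[i] += g * factor
    # Noise is calibrated to the largest possible single-example contribution.
    sigma = noise_multiplier * clip_norm
    n = len(per_example_grads)
    return [(t + rng.gauss(0.0, sigma)) / n for t in total]


# Hypothetical per-example gradients for a two-parameter model.
grads = [[3.0, 4.0], [0.3, 0.4], [-1.0, 0.5]]
print(dp_gradient(grads, clip_norm=1.0, noise_multiplier=1.1, rng=random.Random(0)))
```

Computing and clipping a separate gradient for every example, rather than one gradient per batch, is a main source of the extra training cost. Real projects typically rely on a maintained library for this step and for the privacy accounting.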

Private AI focuses on developing and deploying AI models while protecting data privacy in training and inference processes. It is an important factor in adopting AI across industries that handle vast amounts of personal data, such as healthcare, finance, and government.

Furthermore, differentially private synthetic data generation is emerging as another powerful approach to private AI.

The users of this approach can:

- Train generative models on sensitive data with differential privacy guarantees.

- Create synthetic datasets that mimic the statistical properties of the original data without revealing any individual records.

- Use the synthetic datasets for analysis and model development with a much lower privacy risk than the original data carries.
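The idea can be illustrated with a deliberately simple stand-in for a generative model: a histogram with Laplace noise, from which synthetic records are sampled. This is a sketch under stated assumptions, not a full synthetic data pipeline. The function names and the example data are hypothetical, the category list is assumed to be fixed in advance rather than derived from the data, and neighboring datasets are assumed to differ by adding or removing one record.

```python
import random
from collections import Counter


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_synthetic_sample(records, categories, epsilon, size, rng=None):
    """Sample synthetic records from a Laplace-noised histogram.

    One record changes exactly one count by 1, so the histogram has
    L1 sensitivity 1 and Laplace noise of scale 1/epsilon suffices.
    """
    rng = rng or random.Random()
    counts = Counter(records)
    # Clamping and sampling are post-processing and do not weaken the guarantee.
    noisy = [max(0.0, counts[c] + laplace_noise(1.0 / epsilon, rng))
             for c in categories]
    if sum(noisy) == 0:
        noisy = [1.0] * len(categories)  # fall back to a uniform distribution
    return rng.choices(categories, weights=noisy, k=size)


# Hypothetical sensitive column: one diagnosis code per patient.
records = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
synthetic = dp_synthetic_sample(records, ["A", "B", "C"], epsilon=1.0, size=1000)
print(Counter(synthetic))
```

The synthetic sample follows roughly the same category proportions as the original column, but no synthetic row corresponds to a real individual.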

Why Should You Implement Differential Privacy?

Differential privacy can offer new opportunities for organizations beyond simple risk mitigation. It enables them to securely share and analyze sensitive data for research, collaboration, and monetization without compromising individual privacy.

Consider implementing differential privacy for the following reasons:

- Preventing the re-identification of individuals from datasets. The method holds up even when potential attackers possess sophisticated data mining techniques or auxiliary information.

- Enhancing the protection of sensitive data through a quantifiable and provable privacy guarantee. This property enables multi-partner research projects and fosters innovation.

- Supporting compliance with strict regulations such as GDPR, which can also provide a competitive advantage in a privacy-conscious market.

- Enabling data monetization by allowing companies to share statistical insights derived from their data without revealing sensitive individual information.

Conclusion

This article presented differential privacy, a privacy-focused framework for analysis and the development of AI models. The overview included use cases, examples, and advantages and disadvantages of applying differential privacy to your project.

For more information about business aspects of working with AI, read Artificial Intelligence (AI) in Business.