Organizations across the globe are adopting a microservices-based, container-driven approach to software delivery. However, most software was designed and written before modern, image-based containers existed.

If you are planning to transition to a Docker container-deployment model, you must consider the effects that migration will have on your existing applications.

This tutorial outlines the preparation work required and basic Docker commands for containerizing legacy applications.

Why Containerize Legacy Apps?

Companies that adopt container software deployment need to restructure their organization to mirror the processes of the new container workflow. Analyzing the potential benefits of container deployment is useful to determine the best approach for your application.

Efficiency and Portability

Container deployment can be an effective solution because containers typically start in seconds. The container image holds all the binaries, libraries, and dependencies the application needs, so far less environment-specific configuration is required, and the same image is portable across multiple systems. Because the image does not depend on the host environment, deployments become more reliable, portable, and scalable. By containerizing applications, you separate the application's filesystem and runtime from the host.

Maintainability and Scaling

Instead of managing one large monolithic application, you can adopt an architectural pattern in which complex applications consist of small, independent processes that communicate with each other through APIs.

Because containers resolve conflicts between environments, developers can share their software and its dependencies with IT operations and production environments. Developers and IT operations can then work closely together and collaborate effectively, and the container workflow gives DevOps teams the continuity they need. Identifying issues early in the development cycle reduces the cost of a major overhaul at a later stage.

Container Management Tools

Third-party container management tools provide a mechanism for networking, monitoring, and persistent storage for containerized applications. Legacy applications can take advantage of state-of-the-art orchestration frameworks such as Kubernetes.

These tools improve uptime, extend analytic capabilities, and make it easier to monitor application health.


Plan for Containers

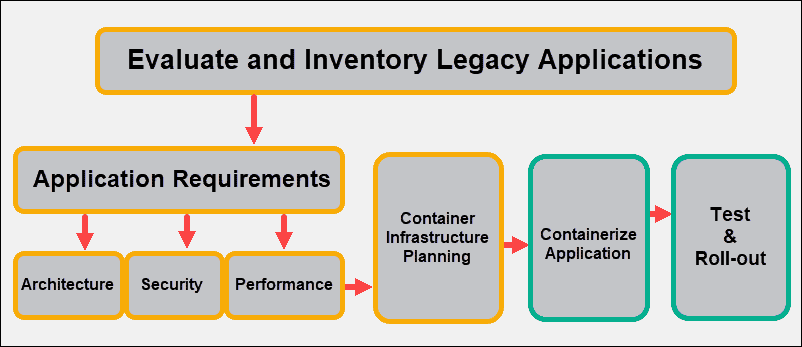

To migrate your applications successfully, you need a strategy that weighs the needs of your applications against the nature of containers. On a technical level, any application can be deployed in a container, and there are several possible approaches for a legacy application:

1. Entirely rewrite and redesign your legacy application.

2. Run an existing monolithic application within a single container.

3. Augment and reshape applications so they can take advantage of the new distributed architecture.

Regardless of the chosen path, it is crucial to determine whether an application is a good candidate for a container environment in the first place. Focus on architecture, performance, and security.

Architecture

Applications need to be deconstructed into individual services so they can be scaled and deployed individually. To fully utilize container deployment, determine if it’s possible to split your existing application into multiple containers.

Ideally, a single process should be assigned to a single container. Even cron jobs should be externalized into separate containers. This might require redoing the architecture of your app.
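Here is a minimal sketch of externalizing a scheduled job. It assumes a hypothetical cron_task.php script that is baked into the legacyapp image built later in this tutorial. The job runs as its own short-lived container, which the host's cron (or a Kubernetes CronJob) starts on a schedule. The DOCKER variable exists only for dry runs: set it to echo to print the command instead of running it.

```shell
#!/usr/bin/env bash
# Run a scheduled task as its own short-lived container instead of a cron
# daemon inside the web container. cron_task.php is a hypothetical script
# in the legacyapp image; --rm deletes the container when the task exits.
# Set DOCKER=echo to print the command instead of running it.
run_cron_task() {
  ${DOCKER:-docker} run --rm legacyapp php ./cron_task.php
}

# Example host crontab entry (hypothetical schedule: every 15 minutes):
#   */15 * * * * docker run --rm legacyapp php ./cron_task.php
```

The web container then runs only Apache, and the task uses the same image, so both always run the same code.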

Performance

Determine whether your application has specific hardware requirements. Containers share the host's kernel and rely on Linux kernel features (namespaces and control groups) to isolate processes and limit their resources. You might need to configure individual containers with unique parameters or allocate specific resources to them.

Furthermore, keep in mind that a container does not run an init daemon by default. Your application runs as PID 1, and nothing cleans up zombie processes. Make sure to provide a lightweight init as the ENTRYPOINT in your Dockerfile. Consider dumb-init as a possible lightweight solution.
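Docker can also inject an init process at run time. The --init option of docker run starts a small init (Docker ships tini for this purpose) as PID 1, which forwards signals and reaps zombie processes. The sketch below assumes the legacyapp image and the apache2 container name used later in this tutorial, plus an arbitrary host port of 8080. The comments show a dumb-init alternative for the Dockerfile, assuming the Debian-based php:apache image, whose default entrypoint is docker-php-entrypoint.

```shell
#!/usr/bin/env bash
# Start the legacy app with Docker's built-in init (--init) as PID 1, so that
# signals are forwarded and zombie processes are reaped.
# Set DOCKER=echo to print the command instead of running it.
start_with_init() {
  ${DOCKER:-docker} run -d --init --name apache2 -p 8080:80 legacyapp
}

# Dockerfile alternative using dumb-init (assumes the Debian-based php:apache image):
#   RUN apt-get update && apt-get install -y --no-install-recommends dumb-init \
#       && rm -rf /var/lib/apt/lists/*
#   ENTRYPOINT ["/usr/bin/dumb-init", "--", "docker-php-entrypoint"]
#   CMD ["apache2-foreground"]   # setting ENTRYPOINT resets the inherited CMD
```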

Security

Containers offer less isolation than VMs, so it’s important to define the level of security your application needs. Set strict constraints on user and service accounts. Keep secrets and passwords out of your container images, follow the principle of least privilege, and maintain defense in depth to secure Kubernetes clusters.
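This is a hedged sketch of two of these practices at run time. Secrets come from an env file supplied when the container starts, not from the image. The no-new-privileges option stops processes in the container from gaining extra privileges through setuid binaries. The file name prod.env is hypothetical. Environment variables remain visible through docker inspect, so for production secrets prefer Docker Swarm secrets or Kubernetes Secrets mounted as files.

```shell
#!/usr/bin/env bash
# Pass secrets at run time instead of baking them into the image, and stop
# processes in the container from gaining extra privileges.
# The env file path is hypothetical; keep it out of the image and out of version control.
# Set DOCKER=echo to print the command instead of running it.
start_hardened() {
  local env_file="${1:-./prod.env}"
  ${DOCKER:-docker} run -d --name apache2 -p 8080:80 \
    --env-file "$env_file" \
    --security-opt no-new-privileges:true \
    legacyapp
}
```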

Persistent Data Requirements

In container orchestration, persistent data is not saved in a container’s writable layer. Instead, it is saved to clearly defined persistent volumes. This approach ensures that persistent data does not increase the size of a container and exists independently of the container’s life cycle.
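Here is a minimal sketch with a named volume. It assumes the app writes its permanent data under a hypothetical /var/www/data directory. The data then survives when the container is removed and recreated.

```shell
#!/usr/bin/env bash
# Store permanent data in a named volume instead of the container's writable layer.
# The volume name (legacyapp-data) and mount path (/var/www/data) are hypothetical.
# Set DOCKER=echo to print the commands instead of running them.
run_with_volume() {
  local docker="${DOCKER:-docker}"
  $docker volume create legacyapp-data
  $docker run -d --name apache2 -p 8080:80 \
    --mount type=volume,source=legacyapp-data,target=/var/www/data \
    legacyapp
}
```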

If your legacy app’s persistent data is spread across the filesystem or written to paths shared with the application itself, we advise restructuring the application so that it writes all persistent data to a single path. That simplifies migrating app data to a containerized environment.
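Rewriting every file path in the application may not be practical right away. One interim approach (our suggestion, not a standard tool) is to move the scattered data directories under one root and leave symbolic links at the old locations. The function below sketches this. All paths are hypothetical, and each directory name is derived from its full source path so that two directories called uploads do not collide. Try it on a copy of the data first.

```shell
#!/usr/bin/env bash
# Hypothetical one-off migration: move scattered data directories under a single
# root and leave symlinks behind, so the unchanged app still finds its files.
# Usage: consolidate_data DATA_ROOT DIR...   (pass absolute paths)
consolidate_data() {
  local data_root="$1"
  shift
  mkdir -p "$data_root"
  local src name
  for src in "$@"; do
    # Skip paths that were already migrated (symlinks) or do not exist.
    if [[ -L "$src" || ! -d "$src" ]]; then
      continue
    fi
    # Derive a unique name from the full path: /var/www/uploads -> var_www_uploads
    name="${src#/}"
    name="${name//\//_}"
    mv "$src" "$data_root/$name"      # move the data under the single root
    ln -s "$data_root/$name" "$src"   # keep the old path working
  done
}
```

For example, consolidate_data /srv/legacyapp-data /var/www/html/uploads /var/www/html/cache leaves /srv/legacyapp-data as the only path that needs persistent storage.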

Externalize Services

Identify local services that can be externalized and run in separate containers. Caching and database services are usually the easiest to externalize. Alternatively, you may want to use managed services instead of configuring and managing them yourself.
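The following sketch runs a cache as its own container. It assumes the official redis image and a hypothetical CACHE_HOST environment variable that the legacy app reads to find its cache. On a user-defined network such as legacy-net, containers can reach each other by container name, so the app connects to the host name cache.

```shell
#!/usr/bin/env bash
# Run the cache as a separate container on a user-defined network.
# Network name, container names, and CACHE_HOST are hypothetical.
# Set DOCKER=echo to print the commands instead of running them.
start_with_external_cache() {
  local docker="${DOCKER:-docker}"
  $docker network create legacy-net
  $docker run -d --name cache --network legacy-net redis:7
  # The app finds the cache by its container name on the shared network.
  $docker run -d --name apache2 --network legacy-net -p 8080:80 \
    -e CACHE_HOST=cache legacyapp
}
```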

Prepare Image for Multiple Environments

Expect to use a single Docker image across development, QA, and production environments, so take environment-specific configuration variables into account. If you identify any, write a start-up script that updates the default application configuration files when the container starts.
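Here is a minimal start-up script along those lines, written as a hypothetical entrypoint.sh. It assumes the configuration ships as a template with {{VAR}} placeholders and that DB_HOST, DB_NAME, and APP_ENV are the environment-specific variables. Adjust the list and paths to your application.

```shell
#!/usr/bin/env bash
# Hypothetical start-up script (entrypoint.sh): fill environment-specific
# values into the app's config file, then hand off to the container's command.
# The config paths, the variable list, and the {{VAR}} placeholders are assumptions.
set -euo pipefail

render_config() {
  local template="$1" output="$2" var value escaped
  cp "$template" "$output"
  for var in DB_HOST DB_NAME APP_ENV; do
    value="${!var:-}"   # value of the variable named by $var; empty if unset
    # Escape characters that are special in a sed replacement (&, \ and the | delimiter).
    escaped="$(printf '%s' "$value" | sed 's/[&|\\]/\\&/g')"
    sed "s|{{${var}}}|${escaped}|g" "$output" > "$output.tmp"
    mv "$output.tmp" "$output"
  done
}

main() {
  render_config "${CONFIG_TEMPLATE:-/etc/legacyapp/config.ini.tmpl}" \
                "${CONFIG_FILE:-/etc/legacyapp/config.ini}"
  exec "$@"   # replace the shell with the main command, e.g. apache2-foreground
}

# Docker passes the CMD to the entrypoint as arguments; do nothing without one.
if (( $# > 0 )); then
  main "$@"
fi
```

To use it, copy the script into the image, make it executable, and set it as the ENTRYPOINT. Setting ENTRYPOINT resets the CMD inherited from the base image, so declare the main command again, for example CMD ["apache2-foreground"] for php:apache. Each environment then supplies its own values, for example with docker run -e APP_ENV=qa legacyapp.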

Deploy Legacy Application to Containers

Assuming that Docker is up and running on your system, there are several ways to create a Docker image:

- Use an empty image and add additional layers by importing a set of external files.

- Use the command line to interactively enter individual commands in a running container, and create a new image from it with docker commit.

- Employ a configuration management tool (e.g., Puppet or Chef) for complex deployments.

- Use an existing base image and specify a set of commands in a Dockerfile.

Dockerfiles are an excellent starting point to understand how to use a container runtime to create an image. If you make a mistake, you can easily amend the file and build a new container image with a single command.

Note: Consider using additional orchestration tools, such as Docker Swarm and Kubernetes. Docker Swarm is Docker's built-in orchestration mode and is relatively simple to set up. Kubernetes is a more feature-rich platform for orchestrating containers across clusters.

Legacy App Dockerfile

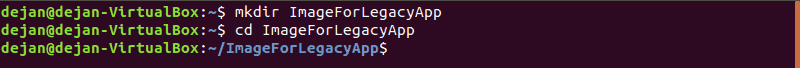

A Dockerfile is a simple text file with a set of commands run in strict order. Dockerfiles are usually based on existing images, with just a few additional settings. Use your command-line interface to create a directory that contains the files you need for your build:

mkdir ImageForLegacyApp
cd ImageForLegacyApp

When the build starts, the contents of that directory (the build context) are sent to the Docker daemon. Keeping only the files you need in the directory speeds up the build and saves disk space.
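Docker also reads a .dockerignore file from the root of the build context and leaves matching files out of what it sends to the daemon. This keeps the context small and keeps local secrets out of the image. The patterns below are examples, not a recommended set.

```shell
#!/usr/bin/env bash
# Write a .dockerignore into the build context directory (default: current dir).
# The patterns are examples: VCS metadata, local env files, and log files.
write_dockerignore() {
  local dir="${1:-.}"
  cat > "$dir/.dockerignore" <<'EOF'
.git
.env
*.log
EOF
}
```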

Use your preferred text editor (we use vim) to create an empty Dockerfile within the ImageForLegacyApp directory:

vim Dockerfile

Note: Each instruction adds a new layer to the existing image. When you run the docker build command again on the same Dockerfile, Docker reuses cached layers for every instruction that has not changed and rebuilds from the first instruction that has. ADD and COPY are the exception to a purely text-based comparison: Docker also checks the contents of the copied files, so changing those files invalidates the cache from that instruction onward.

The following build instructions are for a simple PHP application. We start from a base image that already has PHP and Apache installed.

FROM php:apache

FROM is the first instruction in the Dockerfile. It defines the base image, in this example Debian OS with PHP and Apache installed. If you want a specific version of a base image, be sure to use the corresponding tag (for example, php:7.0-apache).

COPY . /var/www/html

Use the COPY instruction to copy your PHP code from the build context into the image. Use ADD instead if you need a local tar archive extracted automatically.

WORKDIR /var/www/html

This defines the working directory. All subsequent instructions, such as RUN, CMD, ENTRYPOINT, COPY, and ADD, apply to this directory.

EXPOSE 80

The EXPOSE instruction documents the port the application listens on. It does not publish the port to the host. Other containers on the same Docker network can reach port 80, but to reach it from the host you must publish it with the -p option of docker run.

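CMD ["php", "./legacy_app.php"]

Use the CMD instruction to define the default command the container runs, along with the options you want to pass to it. A Dockerfile can contain more than one CMD line, but only the last one takes effect. Keep in mind that this CMD replaces the php:apache image's default command (apache2-foreground), so Apache does not start. If the application is a page meant to be served by Apache, omit CMD and let the image inherit the base image's default.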

LABEL version="1.1"

We use the LABEL instruction to add metadata to the image. Besides the labels you assign, the image inherits all labels from the parent image specified in the FROM instruction.

Note: The CMD value runs by default when you start a container from the image. If the Dockerfile also contains an ENTRYPOINT, the ENTRYPOINT runs instead, and the CMD values are passed to it as arguments (when both use the exec form). You can override the ENTRYPOINT at run time with the --entrypoint option of docker run.
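For reference, the instructions above can be collected into one Dockerfile. This sketch writes it with a heredoc. It deliberately leaves out the CMD line so the image keeps the php:apache default command (apache2-foreground) and Apache serves the copied PHP files. If legacy_app.php is a command-line script rather than a page, add the CMD line back.

```shell
#!/usr/bin/env bash
# Write the assembled Dockerfile into the given directory (default: current dir).
# CMD is omitted on purpose so the base image's apache2-foreground default applies.
write_dockerfile() {
  local dir="${1:-.}"
  cat > "$dir/Dockerfile" <<'EOF'
FROM php:apache
COPY . /var/www/html
WORKDIR /var/www/html
EXPOSE 80
LABEL version="1.1"
EOF
}
```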

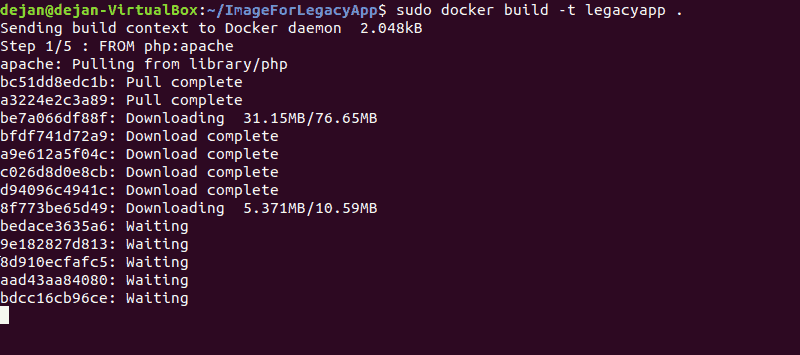

Building a Docker Image

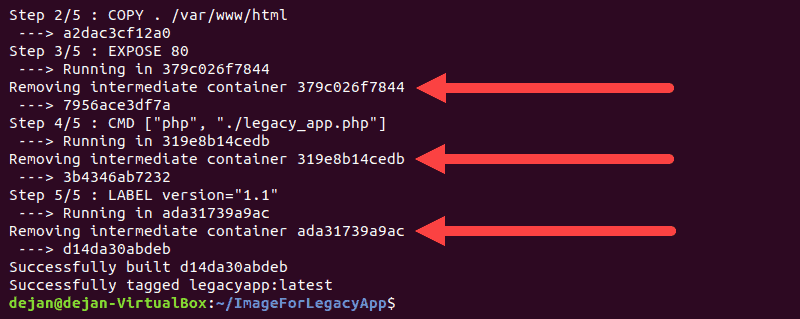

We have successfully defined the instructions within the Dockerfile. Start the build process by entering the following command in your command terminal:

docker build -t legacyapp [location_of_Dockerfile]

During the build process, each image receives a unique ID. To easily locate and identify the image locally or in a registry, use the -t option to name (tag) the image. In this example, the image is named legacyapp.

docker build -t legacyapp .

Note: As we are already in the directory we created for this purpose, we can use “.” instead of the full path.

- The docker build command instructs the Docker daemon to create an image based on the Dockerfile.

- The path supplied to the docker build command is the build context: its files and directories are uploaded to the daemon.

- Each build step is numbered as the Docker daemon executes the instructions from the Dockerfile.

- Each instruction results in a new intermediate image, and its ID is displayed on screen.

- Each intermediate container is removed before proceeding to the next step to save disk space. (This describes the classic builder; BuildKit, the default builder in recent Docker versions, shows more compact output.)

The Docker image is ready, and you have received a unique ID reference. Because we did not specify a version tag, Docker automatically tagged the image as :latest.
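Relying on :latest alone makes it hard to tell builds apart. The sketch below also tags the image with an explicit version. The version number 1.1 mirrors the LABEL used earlier and is an assumption. docker build accepts several -t options, and docker image ls filters its listing by repository name.

```shell
#!/usr/bin/env bash
# Build the image with an explicit version tag plus :latest, then list it.
# The default version (1.1) is an assumption; pass another as the first argument.
# Set DOCKER=echo to print the commands instead of running them.
build_versioned() {
  local docker="${DOCKER:-docker}" version="${1:-1.1}"
  $docker build -t "legacyapp:${version}" -t legacyapp:latest .
  $docker image ls legacyapp
}
```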

Running a Docker Image

The image is now ready to be deployed. The docker run sub-command starts the container:

sudo docker run --name apache2 -p 80:80 legacyapp

The --name argument gives the container a unique name, the -p 80:80 option publishes container port 80 on port 80 of the host, and the legacyapp argument is the name of the image we created.

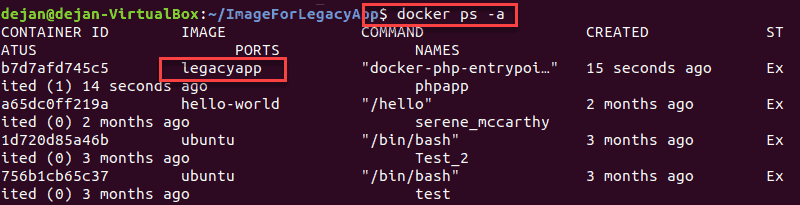

To view a list of all containers, including stopped ones, type the following command:

docker ps -a

The output provides an overview of each container, including its ID and status.

Navigate to localhost to check whether your app is being served properly by the Apache server.
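The check can also be scripted. The sketch below starts the container in the background, publishes it on an arbitrary host port 8080, queries it with curl, and prints the container's logs. If a container named apache2 already exists from the previous step, remove it first with docker rm -f apache2. DOCKER and CURL are dry-run conveniences, not Docker settings.

```shell
#!/usr/bin/env bash
# Start the container in the background, publish container port 80 on host
# port 8080, check that Apache answers, and show the container's logs.
# Port 8080 is an arbitrary choice. Set DOCKER=echo (and CURL=true) for a dry run.
start_and_check() {
  local docker="${DOCKER:-docker}" curl="${CURL:-curl}"
  $docker run -d --name apache2 -p 8080:80 legacyapp
  sleep 2   # give Apache a moment to start
  if $curl -fsS http://localhost:8080/ > /dev/null; then
    echo "legacyapp is responding on http://localhost:8080/"
  else
    echo "no response; check the logs below" >&2
  fi
  $docker logs apache2
}
```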

Conclusion

You have successfully written a Dockerfile, used it to create a Docker image, and used the Docker engine to deploy that image to a container.

The preparation steps in this guide help you maintain performance and security during the transition. Following the outlined advice should help you deliver higher-quality software faster and at lower cost.