In 2018, only 10% of all enterprise-generated data was created and processed outside a centralized cloud or data center. By 2025, this figure is expected to reach a staggering 75%.

As processing increasingly moves toward the edge of the network, edge computing is becoming more cloud-native. Cloud-native principles enable edge devices to process data locally and efficiently, reducing latency and improving real-time decision-making.

This article introduces cloud-native edge computing and explains how cloud-native software is changing what organizations can do at the network's edge. Read on to learn about the main benefits and challenges of applying cloud-native practices to edge computing environments.

What Does Cloud-Native Mean?

Cloud-native is a term for software designed to take full advantage of cloud computing resources. A typical cloud-native application is built as a collection of loosely coupled microservices, each one a self-contained unit you can work on, deploy, and scale independently.

A cloud-native architecture enables developers to build and operate apps more efficiently. Here are the main advantages of the cloud-native design:

- Scalability. Cloud-native architecture scales dynamically based on demand. Apps handle varying workloads effectively, ensuring optimal resource utilization and lowering IT costs.

- High agility. Microservices promote business agility. Developers get to work on smaller, independent software components, enabling faster development cycles and quicker releases.

- Resilience. Orchestration platforms for cloud-native apps provide features for automatic recovery, self-healing, and workload distribution.

- Consistency. Cloud-native apps run in the same packaged form (typically a container image) across development, testing, and production, which keeps these environments highly consistent.

- Security. Microservices isolate app components from one another, while cloud-native tools offer built-in features that improve security posture (e.g., role-based access control, or RBAC, and automated updates).

Cloud-native has slightly different meanings depending on the context. For IT admins, cloud-native means managing infrastructure as code (IaC), while software developers typically associate the term with techniques to write portable cloud-native apps. In the context of CPUs, a cloud-native processor is a piece of hardware optimized for running cloud-native apps.

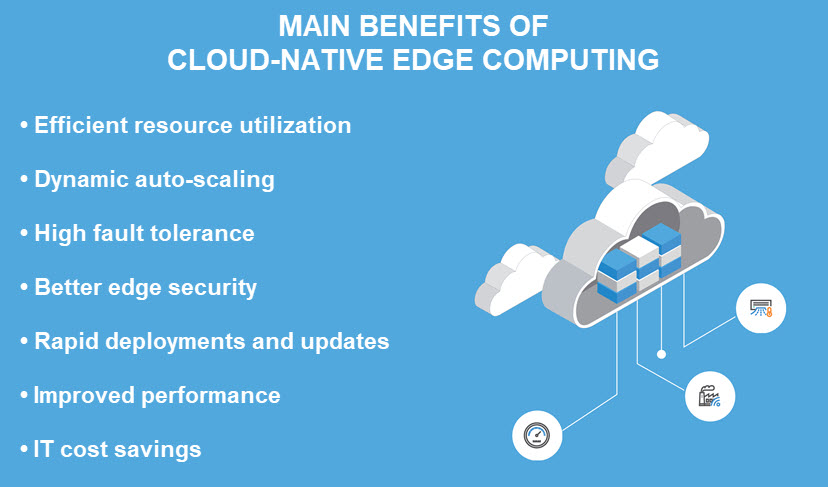

Benefits of Cloud Native for Edge Computing

The core concept of edge computing is to process data closer to or at the source of data generation. Cloud-native practices enhance the efficiency of edge processing, making cloud-native apps a natural choice for processing-heavy edge use cases.

Below is a closer look at the main benefits of cloud-native edge computing.

Efficient Resource Utilization

Cloud-native principles optimize the use of computing resources in edge environments. A typical cloud-native architecture leverages containers, lightweight and isolated runtime environments for apps.

Containers are a technology for packaging and deploying apps. They ensure consistent and reliable deployment across diverse environments by encapsulating the following into a single package:

- The executable code that forms the app's functionality.

- The dependencies required for the code to run seamlessly.

- Libraries that provide essential functionalities and support the execution of the code.

- All the necessary system tools and settings.

Containers share the kernel of the host's operating system instead of each running a full guest OS the way a virtual machine does. This reduces virtualization overhead and enables efficient resource utilization, which is highly advantageous in edge computing, where processing power and data storage are limited.

Container orchestration also helps with resource allocation. Orchestration tools place containers and allocate resources according to each app's declared requirements, ensuring every container gets what it needs without over- or under-provisioning.
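As a rough illustration of requirement-based allocation, the sketch below assigns containers to nodes with a simple first-fit rule, assuming each container declares CPU (millicores) and memory (MiB) requests in the spirit of Kubernetes resource requests. The `Node` class, the `place` function, and all node and container names are hypothetical; real schedulers such as the Kubernetes scheduler filter and score nodes using many more criteria.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_milli: int  # allocatable CPU in millicores
    mem_mib: int    # allocatable memory in MiB
    placed: list = field(default_factory=list)  # (container, cpu, mem) tuples

    def fits(self, cpu_milli: int, mem_mib: int) -> bool:
        """True if the request fits in what is left after existing placements."""
        used_cpu = sum(c for _, c, _ in self.placed)
        used_mem = sum(m for _, _, m in self.placed)
        return (used_cpu + cpu_milli <= self.cpu_milli
                and used_mem + mem_mib <= self.mem_mib)


def place(containers, nodes):
    """Assign each (name, cpu_milli, mem_mib) request to the first node with room.

    Returns a dict of container name -> node name. Containers that fit nowhere
    map to None instead of overcommitting a node.
    """
    assignment = {}
    for name, cpu, mem in containers:
        target = next((n for n in nodes if n.fits(cpu, mem)), None)
        if target is not None:
            target.placed.append((name, cpu, mem))
        assignment[name] = target.name if target else None
    return assignment
```

For example, with hypothetical gateways offering 2,000 and 1,000 millicores, a 1,200-millicore request that arrives after the others are placed is reported as unplaced (`None`) rather than overcommitting either device.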

Efficient resource utilization directly translates into cost savings. Savings are particularly noticeable when edge devices operate in remote or resource-constrained locations.

Dynamic Auto-Scaling

Cloud-native principles improve a system's ability to scale and adapt to varying demand. Microservices make it easy to add or remove instances of individual services as workloads change.

Dynamic scaling allows an edge system to adjust its capacity automatically in response to changes in demand. During peak usage periods or sudden rises in processing requirements, the system scales out to handle the additional load. During periods of low demand, the system scales back in to conserve resources.
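One widely used implementation of this behavior is the Kubernetes Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule with a tolerance band (the HPA default is 10%) and min/max bounds; the function name, bounds, and metric values are illustrative assumptions, not a real API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Return the replica count an HPA-style autoscaler would request."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds so the service never drops to zero or runs away.
    return max(min_replicas, min(max_replicas, proposed))
```

With a hypothetical 50% CPU target, two replicas averaging 90% CPU during rush hour would scale out to ceil(2 × 90 / 50) = 4, and four replicas averaging 20% overnight would scale in to ceil(4 × 20 / 50) = 2.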

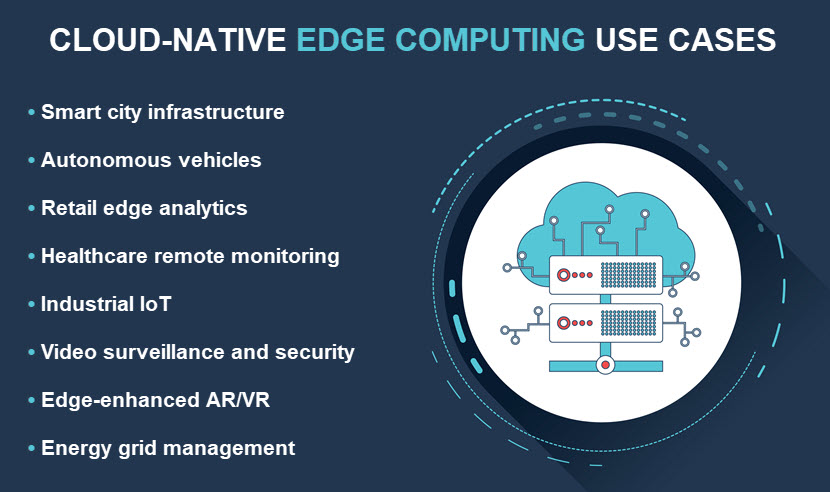

Here are a few edge use cases in which dynamic auto-scaling is highly beneficial:

- In a smart city app, the edge device handling traffic monitoring can dynamically scale its microservices for increased processing during rush hours and scale down at night.

- In manufacturing plants leveraging Industrial Internet of Things (IIoT), edge devices monitoring the machinery can scale processing based on the production load.

- Edge devices in healthcare apps can scale during emergencies or critical health events. Scaling down during normal conditions helps conserve device battery life.

- Edge devices for video surveillance can scale processing capabilities during events or situations requiring intensive video analysis (e.g., large crowds or security alerts). During quiet periods, the system scales down to save resources.

Cloud-native edge apps can also combine scaling methods, for example scaling horizontally across edge nodes and vertically in the cloud, resulting in a unified, flexible architecture that spans multiple deployment locations and strategies.

High Fault Tolerance

Distributed architecture and redundant microservices help keep the system running despite network disruptions or device failures. High fault tolerance is vital in scenarios where edge devices are prone to:

- Unreliable or intermittent connectivity.

- Hardware failures.

- Adverse environmental conditions.

The distributed design of cloud-native apps enhances resilience by preventing single points of failure (SPOFs) from disrupting the entire system. If one microservice encounters an issue or goes offline, the remaining services continue functioning independently.

Cloud-native edge computing incorporates various methods to ensure continuous operation. The three go-to strategies are setting up:

- Duplicate instances of critical components and microservices.

- Resilient communication patterns (e.g., circuit breakers or retries).

- Redundant routes for data transmission.
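The two communication patterns named above can be sketched in a few lines. This is a simplified illustration, assuming the protected operation is a callable that raises an exception on failure; the `CircuitBreaker` class, `call_with_retries` function, and thresholds are hypothetical, and production systems usually rely on a service mesh or an established resilience library instead.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when calls are short-circuited because the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("upstream marked unhealthy; failing fast")
            # Timeout elapsed: let one trial call through (half-open state).
            # Because the failure count is still at the threshold, one more
            # failure reopens the circuit immediately.
            self.opened_at = None
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit fully
        return result


def call_with_retries(func, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Retry transient failures with exponential backoff (0.1 s, 0.2 s, ...)."""
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # don't keep hammering an upstream the breaker has given up on
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

The patterns compose naturally: `call_with_retries(lambda: breaker.call(upload))` retries transient errors but fails fast once the breaker has opened, where `upload` stands for any hypothetical network call.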

Automated recovery mechanisms also boost fault tolerance. Orchestration tools like Kubernetes detect failures automatically and initiate corrective actions. For example, if a microservice on an edge device stops passing its health checks, Kubernetes restarts the container or replaces the failed instance with a healthy one. Reacting to subtler signals, such as a sudden rise in error rates, typically requires additional monitoring or service-mesh tooling.
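Kubernetes implements this kind of self-healing with liveness probes: the kubelet runs a health check periodically and restarts the container after `failureThreshold` consecutive failures (3 by default). The sketch below mimics that loop in plain Python; `supervise` is a hypothetical helper, and `check` and `restart` are placeholder callables standing in for a real health endpoint and container runtime.

```python
def supervise(check, restart, ticks, failure_threshold=3):
    """Run `check` once per tick and call `restart` after N consecutive failures.

    Returns the number of restarts performed so the behavior is easy to test.
    """
    consecutive_failures = 0
    restarts = 0
    for _ in range(ticks):
        if check():
            consecutive_failures = 0  # any success resets the streak
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restart()
            restarts += 1
            consecutive_failures = 0  # give the fresh instance a clean slate
    return restarts
```

Requiring several consecutive failures, rather than reacting to a single one, keeps a briefly slow device from being restarted unnecessarily.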

A Boost to Edge Security

Cloud-native edge computing leads to a more robust security posture in edge environments where devices often have varying levels of trustworthiness. Here's how the cloud-native design makes edge computing safer:

- Each microservice operates within a separate container, limiting the potential impact of security incidents.

- The isolated nature of containers means a vulnerability or flaw in one component is less likely to affect other parts of the system.

- Isolation makes it harder for intruders to move laterally from one container to another.

- The lightweight nature of containers enables rapid deployment of security patches.

The modular design of cloud-native edge computing also allows for fine-grained access control and authentication. Each microservice can have its own security policy, which reduces the attack surface and minimizes the impact of breaches.
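A minimal sketch of per-service, default-deny authorization, assuming each microservice is identified by a name (for example, derived from its certificate or service account) and that each policy lists the actions that service may perform. The service names, resources, and the `is_allowed` function are hypothetical; real deployments would express this in their platform's policy system, such as Kubernetes RBAC or a service mesh.

```python
from typing import Dict, FrozenSet, Tuple

# Hypothetical policy table: service identity -> (resource, verb) pairs it may use.
POLICIES: Dict[str, FrozenSet[Tuple[str, str]]] = {
    "sensor-ingest": frozenset({("readings", "write")}),
    "analytics": frozenset({("readings", "read"), ("alerts", "write")}),
    "dashboard": frozenset({("alerts", "read")}),
}


def is_allowed(service: str, resource: str, verb: str) -> bool:
    """Default-deny: anything not explicitly granted to this service is refused."""
    return (resource, verb) in POLICIES.get(service, frozenset())
```

Under this policy, a compromised dashboard container could read alerts but not raw sensor readings, which is the reduced blast radius the paragraph describes.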

Rapid Deployments and Updates

Cloud-native principles enable seamless rollouts of updates in dynamic, distributed edge environments. Continuous integration (CI) and continuous deployment (CD), two core concepts of cloud-native development, are what make rapid deployments and updates possible:

- CI involves regularly integrating code changes into a shared repository. It ensures that new features, bug fixes, or enhancements are continually tested and validated to reduce the likelihood of integration issues.

- CD automates the process of deploying validated code changes into a production environment.

In edge scenarios, where devices often have varying configurations, CI is crucial for maintaining the consistency and reliability of apps. CD, in turn, enables an organization to release updates to edge devices rapidly and reliably.

Cloud-native edge apps often leverage rolling updates, a strategy that gradually replaces old instances with new versions. This tactic minimizes downtime and ensures continuous operation.
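The strategy can be sketched as a loop that replaces a small batch of instances at a time and halts if a newly updated instance fails its health check. This assumes hypothetical `deploy` and `healthy` callables; Kubernetes Deployments implement the same idea through the `maxUnavailable` and `maxSurge` settings of the `RollingUpdate` strategy.

```python
def rolling_update(instances, new_version, deploy, healthy, max_unavailable=1):
    """Replace instances in batches of `max_unavailable`, halting on a failed health check.

    `instances` maps instance id -> running version and is updated in place.
    Returns True if every instance ended up on `new_version`.
    """
    pending = [i for i, v in instances.items() if v != new_version]
    for start in range(0, len(pending), max_unavailable):
        batch = pending[start:start + max_unavailable]
        for instance_id in batch:
            deploy(instance_id, new_version)
            instances[instance_id] = new_version
        if not all(healthy(i) for i in batch):
            # Stop the rollout; instances not yet touched keep serving the old version.
            return False
    return True
```

Because only `max_unavailable` instances change at once, the rest keep serving traffic throughout. A production rollout would also roll the failed batch back, which this sketch leaves out.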

Over-the-air (OTA) updates are also relevant in edge scenarios as they allow a team to remotely update and manage software on edge devices. OTA updates are vital in industries like manufacturing, where an edge device on the factory floor must receive updates without disrupting the production process.
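A common safeguard in OTA pipelines is to verify an update image's integrity before installing it, and to write it to an inactive slot so the device can fall back to its current firmware. The sketch below assumes the update server publishes a SHA-256 digest for each image and that the device has two hypothetical slots, "A" and "B"; `verify_image` and `apply_update` are illustrative names. It does not cover signature verification, which real OTA systems also need in order to authenticate the publisher.

```python
import hashlib
import hmac


def verify_image(image: bytes, expected_sha256_hex: str) -> bool:
    """Compare the image's SHA-256 digest with the published one."""
    actual = hashlib.sha256(image).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(actual, expected_sha256_hex.lower())


def apply_update(slots: dict, active: str, image: bytes, expected_sha256_hex: str) -> str:
    """Write a verified image to the inactive slot and return the slot to boot next.

    If verification fails, the active slot is returned unchanged, so the device
    keeps running its current firmware.
    """
    if not verify_image(image, expected_sha256_hex):
        return active
    inactive = "B" if active == "A" else "A"
    slots[inactive] = image
    return inactive
```

Writing to the inactive slot means a corrupted or interrupted download never overwrites the firmware that is currently keeping the production line running.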

Improved Performance

Cloud-native principles, combined with thoughtful network design, help deliver reliable, low-latency connectivity in dynamic edge environments.

Cloud-native edge computing relies on communication protocols tailored for efficient edge interactions. The two go-to protocols are:

- MQTT (Message Queuing Telemetry Transport). MQTT is a lightweight, efficient messaging protocol designed for constrained devices and low-bandwidth, high-latency, or unreliable networks.

- CoAP (Constrained Application Protocol). CoAP provides lightweight communication suitable for edge environments. The protocol ensures efficient data exchange while conserving bandwidth and minimizing latency.
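MQTT routes messages by topic: devices publish to slash-separated topic names, and subscribers use topic filters in which `+` matches exactly one level and `#` matches the parent level and everything below it. The function below is a minimal sketch of those matching rules from the MQTT specification, not a broker implementation; `topic_matches` and the factory topic names are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Filters starting with a wildcard should not match system topics such as $SYS/...
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent and all children.
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch
    # Without a trailing '#', the filter and topic must have the same depth.
    return len(f_levels) == len(t_levels)
```

For example, a gateway subscribed to `factory/+/temperature` receives `factory/line1/temperature` but not `factory/line1/cell2/temperature`, while `factory/#` receives both.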

Decentralized processing and edge intelligence also boost performance. A cloud-native edge device possesses the computational capabilities to perform advanced local processing, which minimizes the need for frequent data transfers and reduces network congestion.

Dynamic load balancing is another contributor to the boost in performance. Load balancers distribute traffic across multiple edge devices or instances of microservices, preventing network bottlenecks. In scenarios like smart grids, where devices monitor and control energy distribution, load balancing ensures that the network can handle fluctuations in demand while maintaining optimal performance.
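One common balancing policy is least connections: each new request goes to the instance currently handling the fewest. The sketch below assumes the balancer tracks open connections per instance itself; the class and instance names are hypothetical, and other policies (round robin, weighted, latency-aware) are equally valid choices.

```python
class LeastConnectionsBalancer:
    def __init__(self, instances):
        # Open-connection count per backend instance, in the order given.
        self.active = {name: 0 for name in instances}

    def acquire(self) -> str:
        """Pick the least-loaded instance; ties go to the first one listed."""
        target = min(self.active, key=self.active.get)
        self.active[target] += 1
        return target

    def release(self, name: str) -> None:
        """Call when a request finishes so the counts stay accurate."""
        self.active[name] -= 1
```

Unlike plain round robin, this policy adapts when some requests (such as a burst of demand-response commands in a smart grid) take much longer than others.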

IT Cost Savings

Effective utilization of computing resources directly impacts the economic viability of deploying apps in an edge environment. Here's an overview of how cloud-native edge computing lowers IT expenses:

- Auto-scaling allows an app to adapt to varying workloads by automatically allocating resources based on current demand. Edge devices efficiently utilize resources during periods of high activity and prevent unnecessary costs during idle times.

- The lightweight nature of containers minimizes overhead. Multiple containers can share a single device without degrading performance, as long as their combined resource needs fit the hardware, so you make the most of available resources without costly duplication.

- Organizations can use serverless computing and only pay for the actual compute resources consumed during the execution of functions or services. This pay-as-you-go model is particularly advantageous in edge scenarios with sporadic bursts of computation.

- Cloud-native edge apps filter, aggregate, and compress data locally. These steps minimize the amount of data that must traverse the network, reducing bandwidth expenses.
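The last point can be sketched as a small edge-side pipeline: discard implausible readings, reduce a window of samples to a summary, and compress the result before uplink. The valid range (-40 to 85, a hypothetical sensor spec), the summary fields, and the function names are assumptions for illustration.

```python
import json
import statistics
import zlib


def summarize_window(readings, low, high):
    """Drop out-of-range readings and reduce the rest to a compact summary."""
    valid = [r for r in readings if low <= r <= high]
    if not valid:
        return {"count": 0, "dropped": len(readings)}
    return {
        "count": len(valid),
        "min": min(valid),
        "max": max(valid),
        "mean": round(statistics.fmean(valid), 2),
        "dropped": len(readings) - len(valid),
    }


def encode_for_uplink(summary):
    """Serialize compactly and compress the summary before sending it upstream."""
    return zlib.compress(json.dumps(summary, separators=(",", ":")).encode("utf-8"))
```

For example, `summarize_window([21.0, 21.5, 22.0, 999.0, 21.5], low=-40.0, high=85.0)` discards the 999.0 glitch and sends four numbers and two counts upstream instead of every raw sample.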

Challenges of Cloud Native for Edge Computing

Cloud-native principles offer numerous benefits for edge computing, but this deployment strategy has a few must-know drawbacks. Here are the main challenges of cloud-native edge computing:

- Limited resources on edge devices. A typical edge device has limited processing power, memory, and storage. Deploying a cloud-native app on such a device requires careful containerization to minimize the impact on performance.

- Network-related concerns. Adopters of cloud-native edge computing must design apps that operate effectively within the constraints of network latency and limited bandwidth.

- Diverse device ecosystems. Variations in hardware, operating systems, protocols, interfaces, and capabilities between edge devices pose a challenge for uniform deployment. Developing a cloud-native app that seamlessly runs on various edge devices is a common challenge.

- Orchestration complexity. Orchestrating and automating microservices across a distributed edge environment is complex, especially when the use case involves a high number of devices.

- Data governance. Implementing cloud-native edge solutions requires a careful balance between processing data locally for efficiency and ensuring compliance with data protection and privacy laws.

- Environment variability. Edge environments are highly variable, with factors like temperature, humidity, and power availability influencing device performance. Teams often struggle to design cloud-native apps that adapt to such variability.
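For the device-diversity challenge, a widely used mitigation is to publish multi-architecture container images: one tag points to an index of per-platform variants, and the runtime pulls the variant that matches the host CPU. The sketch below shows that selection step, assuming a hypothetical manifest list with placeholder digests; the architecture names follow the OCI convention (`amd64`, `arm64`, `arm` with a `v7` variant), while `select_variant` and the alias table are illustrative.

```python
from typing import Dict, List, Optional, Tuple

# Map kernel-reported machine names (e.g., from `uname -m`) to OCI architecture/variant.
MACHINE_TO_OCI: Dict[str, Tuple[str, Optional[str]]] = {
    "x86_64": ("amd64", None),
    "amd64": ("amd64", None),
    "aarch64": ("arm64", None),
    "arm64": ("arm64", None),
    "armv7l": ("arm", "v7"),
    "armv6l": ("arm", "v6"),
}


def select_variant(manifests: List[dict], machine: str) -> str:
    """Return the digest of the image variant built for this machine type."""
    try:
        arch, variant = MACHINE_TO_OCI[machine.lower()]
    except KeyError:
        raise LookupError(f"unsupported machine type: {machine}") from None
    for entry in manifests:
        # A None variant means any variant of that architecture is acceptable.
        if entry["architecture"] == arch and (variant is None or entry.get("variant") == variant):
            return entry["digest"]
    raise LookupError(f"no image built for {arch}{'/' + variant if variant else ''}")
```

On the device itself, `platform.machine()` from Python's standard library returns the kernel's machine name (for example, `aarch64` on 64-bit ARM Linux), which can be passed in as `machine`.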

Innovation in Cloud-Native Processors

A cloud-native processor is a general-purpose CPU designed for running cloud-native workloads and apps. These energy-efficient processors can handle advanced tasks locally, reducing the need for constant communication with a central processing server.

Here's what makes a cloud-native processor an excellent choice for deployment on the network's edge:

- Low power consumption. Edge devices are often powered by batteries or have limited access to power sources. Cloud-native processors are energy-efficient and can operate for extended periods without depleting the device's power source.

- High performance. The combination of single-threaded cores (no simultaneous multithreading), large caches, and a non-blocking interconnect fabric gives cloud-native processors sufficient and predictable performance.

- Edge AI and inference capabilities. Cloud-native processors with built-in capabilities for edge AI and inference enable devices to perform real-time analysis without using cloud resources.

- Workload optimization. Adopters can tune a cloud-native processor, and the software running on it, for tasks commonly found in edge apps (e.g., image processing or sensor data analysis).

- Built-in security features. A typical cloud-native processor has several valuable security features, such as hardware-based encryption or secure boot mechanisms.

Many edge computing architectures use cloud-native processors to power edge gateways. A gateway acts as an intermediary between edge devices and the central server. Because gateways must efficiently manage communication, data aggregation, and local processing, efficient cloud-native processors are a natural fit for the role.

Use Cloud-Native Edge Computing to Do More at the Network's Edge

Cloud-native principles and processors enable edge devices to perform more work before sending data to on-prem or cloud servers. Adopters expand the capabilities of their edge deployments while also saving costs in the long run, thanks to efficient resource usage and fewer data transfers. If you have a suitably processing-heavy use case, cloud-native edge computing is well worth the investment.