Traditional encryption protects data at rest and in transit but not during processing. Data must be decrypted into plaintext during computation, which poses a significant risk in untrusted or shared environments. Homomorphic encryption (HE) addresses this long-standing data security gap by enabling the processing of information while it remains in encrypted form.

The ability to process encrypted data is vital for artificial intelligence (AI) models that handle sensitive information. HE enables third-party AI to analyze, infer from, and make predictions on encrypted inputs without ever accessing raw data.

This article explains the role of homomorphic encryption in AI and shows how the ability to perform computations on scrambled inputs is paving the way for privacy-preserving AI applications.

Homomorphic encryption is a leading approach to encryption in use, alongside technologies like trusted execution environments (TEEs) and secure multi-party computation (SMPC).

How Is Homomorphic Encryption Used With AI?

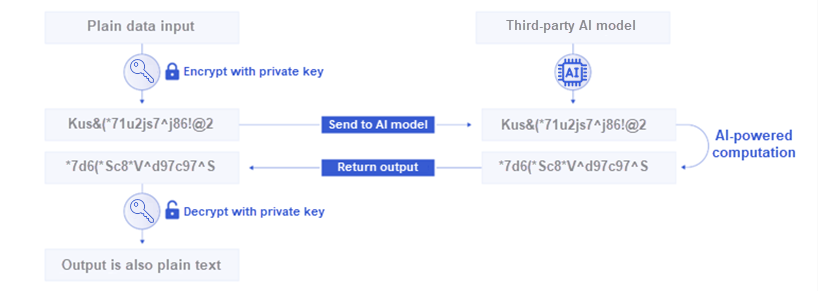

Homomorphic encryption enables AI systems to perform computations directly on encrypted data without decrypting it first. The model never sees raw data; instead, it processes ciphertext and returns an encrypted output. Upon decryption, the final output is functionally equivalent to what the model would produce if it had operated on plaintext.

Here are the most notable benefits of applying homomorphic encryption to AI use cases:

- Privacy preservation. With HE, companies reduce the risk of exposure by ensuring data remains encrypted even during input processing.

- Regulatory compliance. HE helps organizations comply with data protection regulations by ensuring sensitive files are never exposed as plaintext at any point during processing.

- An increase in user trust. End users gain greater confidence in AI systems when they know models operate without accessing raw data.

- External processing. HE enables organizations to safely rely on cloud-based AI inference and use powerful third-party models that cost a fortune to train and deploy on-site.

- Enhanced security posture. The lack of plaintext data drastically lowers the chance of costly data breaches and leaks.

There are three different types of homomorphic encryption:

- Partially homomorphic encryption (PHE). PHE enables a system to perform either additions or multiplications on encrypted data, but not both.

- Somewhat homomorphic encryption (SHE). SHE allows a system to perform both additions and multiplications on encrypted data. However, each operation adds noise to the ciphertext, and too many operations can render the result undecryptable.

- Fully homomorphic encryption (FHE). FHE allows a system to perform an unlimited number of additions and multiplications on encrypted data, in any order.
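
To make the "additions or multiplications, but not both" property of PHE concrete, here is a minimal sketch using textbook (unpadded) RSA, which happens to be multiplicatively homomorphic. The tiny primes and the helper names are illustrative assumptions. Unpadded RSA is not secure and appears here only to demonstrate the homomorphic property.

```python
# Textbook RSA with tiny, insecure parameters (illustration only).
P, Q = 61, 53
N = P * Q                            # public modulus
E = 17                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (needs Python 3.8+)

def encrypt(m: int) -> int:
    return pow(m, E, N)

def decrypt(c: int) -> int:
    return pow(c, D, N)

def multiply_encrypted(c1: int, c2: int) -> int:
    # Multiplying two ciphertexts multiplies the hidden plaintexts (mod N).
    return (c1 * c2) % N

# The party doing the multiplication never sees 7 or 6.
product = decrypt(multiply_encrypted(encrypt(7), encrypt(6)))
print(product)  # 42
```

The same scheme offers no way to add two ciphertexts, which is exactly the limitation that SHE and FHE schemes remove.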

FHE schemes are currently computationally too expensive to be feasible for large-scale AI use cases. However, the promise is clearly there, and ongoing advancements in hardware acceleration and algorithmic efficiency continue to bring FHE closer to practical use in real-world systems.

As with encryption at rest and in transit, in-use encryption is only as effective as your key management. Check out phoenixNAP's Encryption Management Platform if you are in the market for an all-in-one EMP that enables you to control all keys from a single pane of glass.

Examples of Homomorphic Encryption in AI

While FHE is still an emerging technology, more limited forms of homomorphic encryption are already proving useful in select AI applications. The following ten examples showcase real-world use cases where HE helps AI systems preserve privacy during data processing.

Privacy-Preserving Medical Image Analysis

Medical image classification is the process of using AI to interpret X-rays, MRIs, and CT images. HE allows healthcare providers to encrypt scans before they leave the hospital or medical device and get processed by a remote AI model.

Once the AI system performs inference on an encrypted image, it returns an encrypted prediction, like a class label or confidence score. Only the data owner (e.g., a hospital or imaging center) can decrypt the result to see the diagnosis.

Without homomorphic encryption, this process would require the data holder to send images to a third party in plaintext, which raises serious concerns about HIPAA compliance and undermines patient trust.

Here's why privacy-preserving medical image classification matters so much in the healthcare industry:

- Patient privacy. Plaintext medical images can become personally identifiable when paired with metadata or contextual information.

- Compliance. Many countries prohibit transferring raw health data across borders or into public clouds.

- Cloud-based AI. Homomorphic encryption allows hospitals to leverage powerful third-party AI models without compromising data privacy.

As for the issues surrounding this use case, real-time diagnosis of encrypted images remains impractical due to computational overhead. Deep convolutional neural networks (CNNs) are too heavy to run over encrypted data without major performance trade-offs.

The most promising solutions to these issues are image preprocessing (i.e., flattening and converting images into numeric arrays before encryption) and intentional model limitations (e.g., compressed or simplified neural networks). Libraries like SEAL and TenSEAL also help due to SIMD-style batching that packs multiple pixels into a single ciphertext.
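
The preprocessing and batching ideas above can be sketched in plain Python. This example only prepares the data; it does not encrypt anything. The slot count of 4096 and the function names are assumptions made for illustration, and a real library would report its own slot capacity based on the chosen encryption parameters.

```python
from typing import List

SLOTS = 4096  # hypothetical number of values one ciphertext can hold

def flatten_and_normalize(image: List[List[int]]) -> List[float]:
    """Flatten a 2D grayscale image (0-255) into floats in [0, 1]."""
    return [pixel / 255.0 for row in image for pixel in row]

def pack_into_slots(values: List[float], slots: int = SLOTS) -> List[List[float]]:
    """Split values into slot-sized vectors, zero-padding the last one."""
    batches = []
    for start in range(0, len(values), slots):
        chunk = values[start:start + slots]
        chunk += [0.0] * (slots - len(chunk))
        batches.append(chunk)
    return batches

# A synthetic 28x28 image stands in for a real scan.
image = [[(r * 28 + c) % 256 for c in range(28)] for r in range(28)]
batches = pack_into_slots(flatten_and_normalize(image))
print(len(batches), len(batches[0]))  # 1 4096
```

Each inner list would become one ciphertext, so a 784-pixel image fits in a single encrypted vector instead of 784 separate ones.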

Secure Health Diagnostics

While privacy-preserving medical image classification focuses specifically on imaging, AI-powered diagnostics can involve a wide range of clinical data. Common examples are:

- Lab results.

- Symptoms.

- Disease history.

- Wearable sensor data (e.g., blood pressure or heart rate).

- EHRs (electronic health records).

- Genetic markers.

HE enables machine learning (ML) models to make inferences on highly sensitive data without ever decrypting it. Models can diagnose diseases or recommend treatments while working in a third-party cloud environment, all without seeing the patient's raw health info.

While deep learning is not a practical choice for this use case, several model types are compatible with HE-based diagnostics, such as:

- Logistic regression. This type of regression works well with encrypted data because it's a relatively simple, linear model that does not require DNNs or complex operations.

- Decision trees (with depth constraints). Decision trees are typically more computationally efficient and can be adapted to work with encrypted data when constrained to shallow depths.

- Shallow neural networks. Shallow models (i.e., those with fewer layers) may work with encrypted data, though the complexity of HE could still pose challenges for training.

- Linear models and classifiers. These are well-suited for encrypted data because they rely on relatively simple calculations (mostly additions and multiplications) that map directly to HE operations.

These AI technologies and techniques can work with homomorphic encryption and are sufficient for initial triage, risk scoring, or population-level health screening based on structured data (e.g., lab results or wearable metrics). However, due to the necessary model simplicity, HE-based diagnostics are currently best suited for supportive roles, not full clinical decision-making.
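
As a rough illustration of why linear models fit encrypted inference, the sketch below scores encrypted features with a plaintext logistic regression model. It swaps in a toy Paillier scheme (an additively homomorphic PHE scheme) because it fits in a few lines of standard-library Python. The key size, the fixed-point scale, the feature values, and the weights are all assumptions, and the parameters are far too small for real use. In this arrangement, the server computes only the linear part, and the client applies the sigmoid after decryption.

```python
import math
import secrets

# Toy Paillier key material from two known Mersenne primes (illustration only).
P, Q = 2**31 - 1, 2**61 - 1
N, N2 = P * Q, (P * Q) ** 2                          # public key: N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)    # private key
MU = pow(LAM, -1, N)                                 # private key
SCALE = 1000                                         # fixed-point: 3 decimals

def encrypt(m: int) -> int:
    """Encrypt an integer with the public key (negatives wrap modulo N)."""
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return (1 + (m % N) * N) * pow(r, N, N2) % N2

def decrypt(c: int) -> int:
    """Decrypt with the private key and map back to a signed integer."""
    m = (pow(c, LAM, N2) - 1) // N * MU % N
    return m - N if m > N // 2 else m

def encrypted_linear_score(enc_features, weights, bias):
    """Server side: compute Enc(w . x + b) without the private key."""
    acc = encrypt(round(bias * SCALE * SCALE))
    for c, w in zip(enc_features, weights):
        # Enc(x)^k = Enc(k * x); multiplying ciphertexts adds plaintexts.
        acc = acc * pow(c, round(w * SCALE) % N, N2) % N2
    return acc

# Client: encode and encrypt hypothetical, already-normalized features.
features = [0.8, -1.5, 2.0]
enc_features = [encrypt(round(x * SCALE)) for x in features]

# Server: plaintext model, encrypted input.
enc_score = encrypted_linear_score(enc_features, [0.5, 0.25, -0.1], 0.1)

# Client: decrypt, undo the scaling, apply the sigmoid locally.
score = decrypt(enc_score) / (SCALE * SCALE)
probability = 1.0 / (1.0 + math.exp(-score))
print(round(score, 6), round(probability, 4))
```

Weights and features are both scaled by `SCALE`, so the decrypted score carries a factor of `SCALE * SCALE` that the client divides out.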

Check out our guide to AI in healthcare to see how artificial intelligence is driving advancements in medical imaging, predictive clinical analytics, and robotic surgery.

Encrypted Voice Recognition

Encrypted voice recognition enables an AI system to analyze and identify speech or speakers from encrypted audio data. Whether it is speech-to-text transcription or speaker identification, the model performs its task without decrypting the input features extracted from the audio.

This type of voice recognition involves processing high-dimensional, time-series audio signals. Here's an overview of how this homomorphic encryption AI use case works:

- Audio preprocessing. The system with access to the user's raw voice (user device, smart speaker, virtual assistant, healthcare hotline, etc.) converts audio files into a feature vector to reduce data complexity. The system then encrypts the features using an HE scheme (most commonly CKKS).

- Encrypted inference. A remote voice recognition AI model receives encrypted voice features. The model, which is either a simplified neural network, logistic regression model, or linear classifier, performs inference directly on the ciphertext.

- Result decryption. The server returns an encrypted output (e.g., a recognized keyword or speaker ID). Only the authorized system can decrypt the result.

GDPR and other frameworks treat voice recordings as personally identifiable information (PII), which makes encrypted voice recognition highly valuable for many organizations.

Homomorphic encryption's computational cost currently limits the complexity of voice recognition models. To be viable, models must be compressed or quantized, and inference must occur on preprocessed audio features (e.g., MFCCs or filterbanks) rather than raw waveforms.

Fraud Detection on Encrypted Data

Fraud detection is one of the most compelling homomorphic encryption AI use cases due to the high value and sensitivity of financial information.

In this use case, banks, payment processors, and fintech firms use ML models to analyze encrypted financial data (e.g., credit card transactions, transfers, and account activity) to detect fraud. Homomorphic encryption allows models to perform analysis without requiring access to raw data.

As a result, institutions can identify anomalies or high-risk behaviors while guaranteeing the confidentiality of customer data throughout the entire process. Here's a short overview of how this analysis works:

- Feature extraction. The user's device or internal system extracts relevant transaction features (e.g., transaction frequency, amount spikes, geolocation patterns) from plaintext data. These features are then encrypted using a scheme like BFV (for integer values) or CKKS (for floating-point metrics).

- Encrypted inference. The encrypted feature vectors go to a remote fraud detection model (a decision tree, logistic regression, or shallow neural network) that performs inference directly on the ciphertext.

- Result decryption. The model returns an encrypted output, such as a fraud risk score or binary classification (e.g., "Fraud likely"). Once decrypted, the output can trigger real-time alerts, flag transactions for review, or initiate automated fraud prevention action.
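
Integer-oriented schemes compute modulo a fixed plaintext modulus, so decimal features must first be encoded as scaled integers that stay within range. The sketch below simulates that arithmetic in plaintext, with no encryption involved. The modulus value, the scale factors, the feature values, and the weights are hypothetical.

```python
PLAIN_MODULUS = 65537  # illustrative plaintext modulus (assumption)

def to_fixed(x: float, scale: int) -> int:
    """Encode a decimal value as a scaled integer."""
    return round(x * scale)

def centered(v: int, t: int) -> int:
    """Map a residue modulo t to the signed range around zero."""
    v %= t
    return v - t if v > t // 2 else v

def simulated_score(features, weights, scale, t=PLAIN_MODULUS):
    """Plaintext simulation of an integer dot product computed modulo t."""
    acc = 0
    for x, w in zip(features, weights):
        acc = (acc + to_fixed(x, scale) * to_fixed(w, scale)) % t
    # Each product carries scale * scale, so divide it out when decoding.
    return centered(acc, t) / (scale * scale)

features = [2.35, 4.0, 0.75]   # hypothetical: amount z-score, tx/hour, distance ratio
weights = [0.6, 0.1, 0.4]      # hypothetical model weights

ok = simulated_score(features, weights, scale=100)
overflowed = simulated_score(features, weights, scale=1000)
print(ok)          # 2.11
print(overflowed)  # wrong: the scaled result wrapped around the modulus
```

The second call shows the trade-off: a larger scale keeps more decimal precision but can push the result past the modulus, which silently corrupts the decoded score.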

This use case is valuable since fraud detection systems handle highly sensitive data (e.g., location and device metadata, spending behavior, transaction timestamps and amounts, merchant identifiers). Homomorphic encryption enables AI to analyze patterns and correlations without seeing any raw inputs, which lowers the risk of data exposure.

Encrypted Credit Scoring

Encrypted credit scoring refers to the use of homomorphic encryption to assess an individual's or organization's creditworthiness without exposing sensitive info like:

- Income history.

- Repayment behavior.

- Account balances.

- Transaction-level behavioral data.

An AI model processes encrypted financial records (e.g., loan repayments, income patterns, and spending habits) and generates an encrypted credit score that only an authorized party can decrypt. That way, lenders, banks, and fintech companies make informed decisions without any third party accessing or storing raw personal data.

Most AI models used for encrypted credit scoring are regression models or gradient-boosted trees simplified for encrypted inference. These systems analyze the ciphertext directly and can help financial institutions with:

- Calculating debt-to-income ratios.

- Predicting credit default risk.

- Identifying thin-file borrowers.

- Detecting anomalies such as missed payments.

- Applying custom scoring rules.

- Enabling secure multi-lender scoring.

Our article on the use of machine learning in finance explains how ML's capability to analyze vast data sets is changing the way financial institutions operate and make decisions.

Privacy-Preserving Smart Grid Analytics

Smart grids often rely on AI to analyze data from smart meters, appliances, and other IoT devices. The high level of detail, such as real-time energy usage at the individual household level, raises major privacy concerns.

Homomorphic encryption allows utility companies and third-party providers to process encrypted energy usage data without ever decrypting it. This strategy enables all the benefits of smart grid analytics without compromising customer privacy.

Here's an overview of how this type of smart grid analysis works:

- Smart meters or edge devices encrypt energy consumption data locally before transmission. This data can include usage frequency, time-of-day patterns, appliance-level breakdowns, and consumption anomalies.

- The device sends encrypted data to utility providers or analytics platforms.

- An AI model with time-series forecasting or anomaly detection algorithms analyzes the encrypted data.

- The encrypted result of the analysis is returned to the device, which holds the decryption key (the key never leaves the device or travels with the data). The device decrypts insights and uses them to generate load forecasts, trigger alerts, or inform billing and demand-response systems.

This approach preserves consumer privacy and helps meet stringent privacy standards. HE also enables utility vendors to outsource analytics to cloud providers or research institutions without exposing any raw data.

A few pilot programs in Europe and Japan already use HE for load forecasting and consumption pattern analysis. Some organizations are also exploring hybrid approaches using both homomorphic encryption and TEEs for secure processing.

Despite this progress, HE in smart grids still faces challenges due to computational overhead and the need for hardware capable of efficient encrypted processing.

Federated Learning with Homomorphic Gradient Aggregation

Federated learning is an ML technique that allows multiple parties to train a shared AI model. Each participant trains a local version of the model on their own data and sends updates (such as gradients or weights) to a central server.

For instance, a hospital can train a model on patient records and transmit only the resulting model updates to a central server coordinating with other healthcare providers.

When combined with homomorphic encryption, participants encrypt their model updates before transmission. The central server aggregates these encrypted updates using HE's mathematical properties without ever accessing the underlying plaintext.

The aggregated result is decrypted either by a trusted authority or collaboratively by the participants before updating the global model. At no point do participants exchange raw data, and the central server never sees individual updates in an unencrypted form.
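
The aggregation flow described above can be sketched with a toy Paillier scheme, where multiplying ciphertexts adds the underlying plaintexts. This is a minimal illustration, not a production design: the key size is far too small, the gradient values are made up, and a single key holder stands in for the trusted authority.

```python
import math
import secrets

# Toy Paillier key material from two known Mersenne primes (illustration only).
P, Q = 2**31 - 1, 2**61 - 1
N, N2 = P * Q, (P * Q) ** 2                          # public key: N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)    # private key
MU = pow(LAM, -1, N)                                 # private key
SCALE = 10_000                                       # fixed-point: 4 decimals

def encrypt(m: int) -> int:
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return (1 + (m % N) * N) * pow(r, N, N2) % N2

def decrypt(c: int) -> int:
    m = (pow(c, LAM, N2) - 1) // N * MU % N
    return m - N if m > N // 2 else m

def encrypt_update(gradient):
    """Participant side: encode and encrypt each gradient component."""
    return [encrypt(round(g * SCALE)) for g in gradient]

def aggregate(encrypted_updates):
    """Server side: element-wise homomorphic sum of all updates."""
    total = list(encrypted_updates[0])
    for update in encrypted_updates[1:]:
        total = [a * b % N2 for a, b in zip(total, update)]
    return total

def decrypt_average(enc_sum, participants):
    """Key holder: decrypt the sum and turn it into an average."""
    return [decrypt(c) / SCALE / participants for c in enc_sum]

local_gradients = [[0.12, -0.30], [0.08, 0.10], [-0.05, 0.20]]  # hypothetical
enc_updates = [encrypt_update(g) for g in local_gradients]
enc_sum = aggregate(enc_updates)
average = decrypt_average(enc_sum, len(local_gradients))
print([round(v, 4) for v in average])  # [0.05, 0.0]
```

The server only ever handles `enc_updates` and `enc_sum`, so it learns neither the individual gradients nor their average.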

This type of federated learning enables organizations like banks, hospitals, and governments to jointly train AI models without risking confidentiality. It also enables smaller-scale use cases, such as encrypted model updates from smartphones for use in applications like keyboard prediction or fitness tracking.

Learn what tasks and processes your company can automate with artificial intelligence in our guide to the use of AI in business.

Encrypted Sentiment Analysis

Sentiment analysis is a natural language processing (NLP) technique used to determine whether a piece of text is positive, negative, or neutral in tone. This technique is widely used in:

- Customer feedback systems.

- HR tools.

- Compliance monitoring.

- Social media analytics.

Sentiment analysis raises privacy concerns since the analyzed data often contains PII, sensitive opinions, or confidential business content. Homomorphic encryption enables companies to perform sentiment analysis directly on encrypted text representations, which is critical in highly regulated sectors like healthcare, finance, or enterprise communications.

Encrypted sentiment analysis starts with text preprocessing and encryption. The input text (e.g., a customer support ticket or user review) is tokenized and transformed into numerical embeddings (e.g., using Bag-of-Words, TF-IDF, or word vectors). Embeddings are then encrypted using an HE scheme.
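
A minimal sketch of the preprocessing step, assuming a Bag-of-Words representation over a small fixed vocabulary. The vocabulary, the tokenizer pattern, and the sample ticket are hypothetical. The resulting numeric vector is what the client would encrypt before sending it for analysis.

```python
import re
from collections import Counter

VOCABULARY = ["great", "helpful", "slow", "broken", "refund"]  # hypothetical

def tokenize(text: str):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def bag_of_words(text: str, vocabulary=VOCABULARY):
    """Count how often each vocabulary word appears in the text."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]

ticket = "Support was helpful, but the app is slow. Really slow."
vector = bag_of_words(ticket)
print(vector)  # [0, 1, 2, 0, 0]
```

Because the vector has a fixed length regardless of the input text, a linear model can score it with the same sequence of operations every time, which suits encrypted evaluation.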

A pre-trained sentiment analysis model (most commonly logistic regression, SVMs, or shallow MLPs) then processes encrypted embeddings. The model can calculate a sentiment score or make a classification without decrypting the input.

Once the encrypted sentiment prediction returns to the original system, the authorized party decrypts the data. Here are a few examples of where this process is most useful:

- Business owners analyzing team morale or detecting burnout signals in internal messaging platforms without reading employees' messages.

- Hospitals analyzing patient feedback to improve services without exposing any individual's personal experience.

- Brands analyzing support ticket sentiment to prioritize responses while preserving privacy.

- Educational platforms assessing student sentiment in course feedback while protecting student identity.

- Government agencies evaluating public sentiment on policy proposals using encrypted survey data.

- E-commerce platforms detecting shifts in customer sentiment across reviews while adhering to data protection regulations.

Encrypted sentiment analysis keeps sensitive text data hidden even during analysis. As a result, organizations can use powerful cloud-based NLP services without worrying about exposing private text to third-party vendors.

Privacy-Preserving Recommendation Systems

Privacy-preserving recommendation systems use homomorphic encryption to deliver personalized recommendations (e.g., movies on Netflix, products on Amazon, news articles) without accessing users' raw data.

Traditional recommendation engines must see personal data (user preferences, browsing behavior, ratings, purchase history, etc.) to make predictions. With HE, users encrypt their data before sending it to the recommendation engine. The model then computes recommendations without decrypting inputs.

The result of this process is a fully personalized recommendation pipeline where only the user knows their data and suggested recommendations.

To work within HE constraints, AI recommendation systems use simplified models like linear predictors, shallow matrix factorization, or low-degree polynomial approximations. Here are a few real-world examples of how organizations can use privacy-preserving recommendation systems:

- Healthcare providers recommending treatment plans or preventative care options based on encrypted patient history.

- Retailers offering personalized shopping suggestions based on encrypted transaction data.

- Streaming service providers delivering custom content suggestions without logging or storing user watch history.

- Education institutions offering adaptive learning platforms that recommend materials without accessing raw student performance data.

Similar to other homomorphic encryption AI use cases, latency is the main issue with privacy-preserving recommendation systems. Complex recommendation algorithms are currently too computationally expensive for in-use encryption in latency-sensitive use cases.
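
The low-degree polynomial approximations mentioned earlier in this section exist because HE schemes natively evaluate only additions and multiplications, so functions like the sigmoid must be replaced by polynomials. The plaintext sketch below uses the degree-3 Taylor expansion of the sigmoid around zero as one possible stand-in. Other approximations (e.g., least-squares fits over a wider interval) are also used in practice, and the function names here are illustrative.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_taylor3(x: float) -> float:
    """Degree-3 Taylor approximation: 1/2 + x/4 - x^3/48.

    Written so that it needs only additions, subtractions, and
    multiplications by constants or by x.
    """
    return 0.5 + x * (0.25 - (x * x) * (1.0 / 48.0))

# Compare the approximation with the exact sigmoid on [-1, 1].
grid = [i / 100 for i in range(-100, 101)]
max_error = max(abs(sigmoid(x) - sigmoid_taylor3(x)) for x in grid)
far_error = abs(sigmoid(4.0) - sigmoid_taylor3(4.0))

print(f"max error on [-1, 1]: {max_error:.4f}")
print(f"error at x = 4:       {far_error:.2f}")
```

The approximation stays close to the exact function near zero and degrades quickly outside that range, which is one reason inputs are typically normalized before encryption.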

Secure Document Analysis

Homomorphic encryption enables organizations to analyze sensitive legal, regulatory, and contractual documents without ever exposing information to third parties. With HE, legal and compliance departments can encrypt legal documents (contracts, court filings, policy manuals, internal audit reports, regulatory guidelines) before sending them to a third-party AI analysis platform.

Secure document analysis also enables organizations to run queries, classifications, and risk assessments directly on the encrypted data. Most models are tasked with detecting clauses of interest (e.g., indemnity, non-compete), classifying document types, or flagging anomalies.

Here are a few examples of how organizations can use AI-based document analysis protected with HE:

- Flagging problematic clauses, missing disclosures, or outdated terms in encrypted contracts.

- Analyzing internal documents to detect compliance gaps without making the data visible to external systems.

- Processing legal documents across entities without either side needing to share unencrypted content.

- Pre-screening reports and filings for non-compliance, inconsistencies, or sensitive disclosures.

This type of AI analysis enables secure outsourcing of legal workflows via public cloud services. Companies get to leverage powerful third-party cloud infrastructure without exposing sensitive strategies or deal terms. Secure AI analysis also supports data privacy mandates and confidentiality obligations (e.g., attorney-client privilege).

Considering AI adoption at your organization? If so, ensure your teams know how to avoid the most common AI risks and dangers.

The Future of Homomorphic Encryption in AI

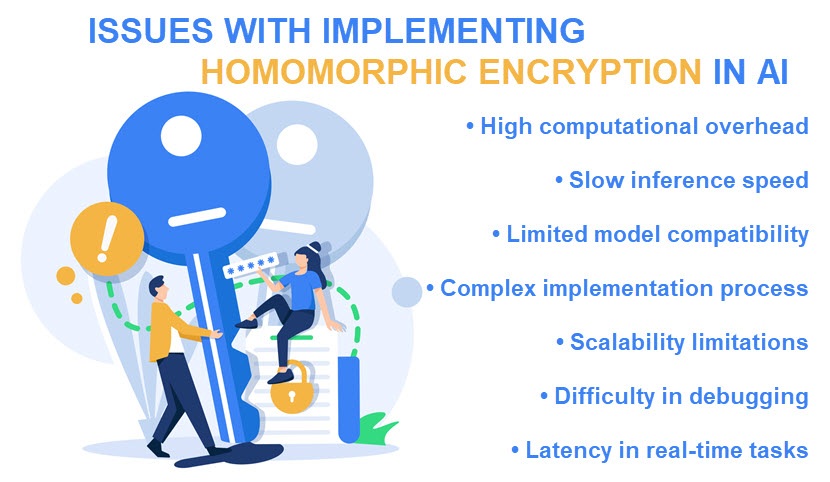

While HE holds immense promise for privacy-preserving AI, its practical use in mainstream systems remains limited for now. Fully homomorphic encryption, in particular, is still computationally too intensive and introduces significant latency for most AI use cases.

To put it into perspective, FHE can be hundreds to tens of thousands of times slower than equivalent plaintext computations. This performance gap currently restricts the use of FHE to highly specialized scenarios or proof-of-concept deployments, and prevents us from using this type of homomorphic encryption for:

- Deep neural networks (DNNs) with high-dimensional data.

- Real-time or low-latency AI systems (e.g., autonomous driving or high-risk monitoring systems).

- Large-scale model training from scratch on encrypted data.

Luckily, research in this space is advancing quickly. Hardware acceleration, algorithmic optimizations, and hybrid cryptographic approaches are steadily improving the efficiency of FHE schemes. Open-source libraries like Microsoft SEAL, PALISADE, and HElib are also speeding up progress.

While FHE isn't yet practical for large-scale or latency-sensitive AI workloads, there are already niche use cases where homomorphic encryption can be used reliably in AI today. In the coming years, we'll see homomorphic encryption primarily gain traction in AI applications where data privacy is non-negotiable, such as healthcare, finance, and government systems.

In the meantime, expect companies to start investing in private and enterprise AI models that preserve confidentiality by keeping all data processing on-site. Many adopters will also experiment with hybrid approaches that combine FHE with techniques like trusted execution environments and secure multi-party computation.

Our confidential computing offering enables clients to apply end-to-end data encryption (at rest, in transit, and in use) to their sensitive files. This service is available for dedicated servers, Bare Metal Cloud (BMC) instances, and on our Data Security Cloud (DSC) platform.

A New Era of Privacy-Preserving AI

Homomorphic encryption offers a solution to one of AI's most persistent privacy challenges: how to process sensitive data while respecting user privacy. It enables computations on encrypted data without exposing the underlying information, maintaining confidentiality throughout the process.

As this technology matures and grows more practical, it's almost a guarantee that HE will become a core component in privacy-first AI architectures. Its adoption is likely to expand across industries that handle sensitive information, such as healthcare, finance, and government sectors.