Hybrid cloud combines on-prem and third-party cloud resources to provide flexibility and cost-efficiency. Many organizations are now exploring and adopting hybrid cloud environments, moving from a single on-prem or cloud solution to the best of both worlds.

According to the 2020 Cloud Computing Study by IDG, more than half of today’s organizations are using multi-cloud environments, with 21% saying they use three or more. Despite the increased popularity of hybrid cloud strategies, deploying and managing multiple clouds brings its own challenges.

The Challenges

Although cloud security and orchestration technologies have matured, many of the key challenges of hybrid cloud adoption still keep organizations from realizing its full potential. These include:

- Compliance and governance issues.

- Compatibility issues.

- Data migration complexity.

- Over- and under-provisioning.

- Poor visibility and overview.

- Skills gap.

- Lack of control.

- Poorly defined SLAs.

Additionally, building a hybrid cloud environment involves managing multiple software and hardware licenses, as well as addressing inconsistencies across different platforms. This often requires applications to be rebuilt from scratch, increasing developer workloads.

How to Securely Connect Applications to Multiple Environments

Modern hybrid cloud solutions address the challenges mentioned above. They provide application management without locking applications into a single infrastructure or platform, which makes workloads portable. One such solution is Google Anthos, a comprehensive suite enabling safe, flexible, and portable application development and deployment in multi-cloud and hybrid cloud environments.

What Is Google Anthos?

Anthos is an application platform that bridges Google Cloud services and user environments, bringing automation into the hybrid cloud. It is a suite of solutions that can be deployed on-prem (on top of VMware or bare metal), at the edge (bare metal), or across multiple different clouds.

Benefits Anthos Brings to the Hybrid Cloud

- Deployment in any cloud environment.

- Consistency across environments.

- Developer velocity acceleration.

- Increased observability and SLO monitoring.

- Secure, cloud-native environment.

- Increased workload mobility.

- DevOps across the organization.

- Portability and flexibility.

- Multi-cloud readiness.

Anthos Under the Hood

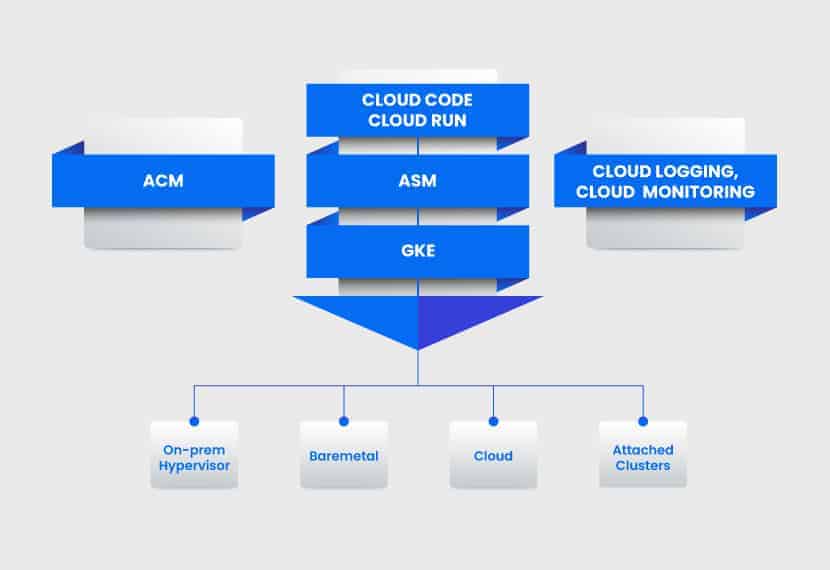

Google Anthos is built on a modular architecture. The following are some of the key Anthos components that enable hybrid and multi-cloud application management:

Google Kubernetes Engine (GKE)

At the core of Anthos lies Google Kubernetes Engine (GKE). While Kubernetes has become the standard for container management, setting it up and maintaining it remains a challenge, especially for Day 2 operations.

GKE reaches its full potential by automating and simplifying cluster creation. Once the code is placed in a container, a GKE cluster can be created through the console, the Google Cloud command line, or the API, without in-depth Kubernetes knowledge.
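As a rough illustration of the command-line route, the sketch below builds a `gcloud container clusters create` invocation in Python without running it. The cluster name, zone, and node count are hypothetical placeholders, and the helper `build_create_cluster_command` is an illustrative name, not part of any Google tooling.

```python
import shlex
from typing import List


def build_create_cluster_command(name: str, zone: str, num_nodes: int) -> List[str]:
    """Return the argument list for creating a zonal GKE cluster with gcloud.

    Hypothetical helper: it only assembles the command, it does not run it.
    """
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    return [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,
        "--num-nodes", str(num_nodes),
    ]


if __name__ == "__main__":
    # Placeholder values, chosen for illustration only.
    cmd = build_create_cluster_command("demo-cluster", "us-central1-a", 3)
    # Print the shell command instead of executing it.
    print(shlex.join(cmd))
    # To create the cluster for real (requires an authenticated gcloud CLI):
    #   import subprocess; subprocess.run(cmd, check=True)
```

Keeping the command as a list rather than a single string avoids shell quoting problems if it is later passed to `subprocess.run`.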

Anthos also enables attaching non-Google Kubernetes clusters, providing a single-pane-of-glass overview of all user clusters.

Additionally, GKE offers logging, monitoring, AI-driven auto-scaling, auto-repair, and auto-upgrade capabilities, ensuring clusters remain healthy and highly responsive, even during massive resource spikes.

Anthos Config Management (ACM)

Clusters need policies and security guardrails established in advance. This is manageable for a single cluster controlled by a single team. However, an organization usually has multiple teams, each using clusters that run either on-prem or on various cloud platforms. Managing all these clusters is challenging and time-consuming.

ACM is an automation suite providing policy and configuration management for the entire multi-cloud infrastructure. It enables setting policies for specific clusters across Kubernetes deployments, ensuring the desired state of clusters is constantly maintained at scale. It also prevents other teams or individuals from making modifications that are harmful to the clusters.
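The idea of maintaining a desired state can be pictured with a small toy model. This is not ACM's API or implementation; it is a minimal sketch that assumes each cluster's configuration is a flat key-value map, and all names and settings (`find_drift`, `reconcile`, `network-policy`, `pod-security`) are hypothetical.

```python
from typing import Dict, List

Config = Dict[str, str]


def find_drift(desired: Config, actual: Config) -> List[str]:
    """List the declared keys whose live value differs from the desired one."""
    return sorted(k for k, v in desired.items() if actual.get(k) != v)


def reconcile(desired: Config, actual: Config) -> Config:
    """Return the cluster state after the declared settings are re-applied.

    Declared keys are overwritten with their desired value, which undoes
    manual changes. Keys that are not declared are left untouched.
    """
    fixed = dict(actual)
    fixed.update(desired)
    return fixed


if __name__ == "__main__":
    # One declared policy set, applied to every cluster (hypothetical values).
    desired = {"network-policy": "deny-all", "pod-security": "restricted"}
    clusters = {
        "on-prem-1": {"network-policy": "deny-all", "pod-security": "privileged"},
        "gke-1": {"network-policy": "deny-all", "pod-security": "restricted", "team": "web"},
    }
    for name, state in clusters.items():
        print(name, "drift:", find_drift(desired, state))
        clusters[name] = reconcile(desired, state)
```

Running the same loop on a schedule is what keeps every cluster converging on one declared configuration, regardless of where it is hosted.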

Anthos Service Mesh (ASM)

A service mesh ensures fast and secure service-to-service communication in a microservice architecture. Anthos has its own service mesh that automates operations inside a network and provides:

- Logging, metrics, and SLO monitoring

- Traffic management: routing and load balancing

- Service identity, authentication, and encryption

- AI-driven curated insights, recommendations, and operating analytics

ASM establishes a clear network overview, with zero-trust, policy-driven security. It does the heavy lifting for developers, who would otherwise need to code this logic into the application itself.

Managing Hybrid Cloud Networks

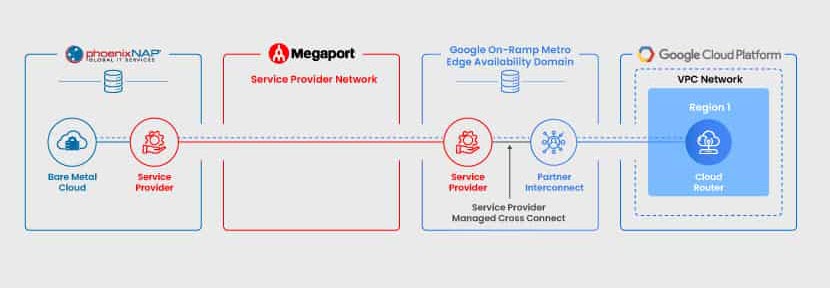

Managing multi-cloud networks is another critical challenge in hybrid cloud deployments. Using GKE, a direct connection between on-prem workloads and the multi-cloud environment can be created with little effort. This was explained at our recent webinar with Megaport, Google, and Qwinix, where David McDaniel from Qwinix demoed how Google Anthos works on Bare Metal Cloud.

The demo showcased how a website is loaded from either BMC or GKE, based on load balancing or preset routing rules, to illustrate the utilization of a hybrid cloud platform that is safe, fast, and reliable.

The previously described ASM works both on-prem and in the cloud, making sure security and policy requirements are met.

When it comes to network management, Megaport Cloud Router (MCR) is a solution that allows for easy and automated orchestration of different networks. As discussed in the webinar, MCR enables organizations to leverage the existing Megaport infrastructure to connect their on-prem environment to multiple cloud providers securely. With already functional APIs available for various cloud providers, setup and management are possible across the entire network and different deployments.

How Anthos Works on Bare Metal Cloud

Built to enable automation-driven IT, phoenixNAP’s Bare Metal Cloud servers are a quickly deployable, cost-efficient infrastructure ideal for hybrid cloud environments. Providing physical servers with cloud-like deployment options, Bare Metal Cloud makes dedicated resources available in minutes. It can be used to complement organizations’ existing infrastructure for workloads that require higher performance.

Running both on BMC and in GKE, Anthos provides a comprehensive, unified overview of all workloads, including connections and their statuses. As such, it provides a single pane of glass for managing multi-cloud networks.

Once an application is deployed on-prem and in GKE, the Google Cloud Load Balancer chooses whether the app is loaded from the cloud or BMC.

This routing can prioritize either failover or performance and latency. For example, an app deployed on Bare Metal Cloud can serve internal users on-prem, while the GCP deployment can be the faster and more reliable option for external users.
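The difference between the two routing priorities can be sketched in a few lines. This is a simplified model, not how Google Cloud Load Balancing is implemented or configured; the `Backend` type, the backend names, and the latency figures are assumptions made for illustration.

```python
from typing import List, NamedTuple, Optional


class Backend(NamedTuple):
    """A deployment target, e.g. the BMC or GKE copy of the app (hypothetical)."""
    name: str
    healthy: bool
    latency_ms: float


def pick_failover(backends: List[Backend]) -> Optional[Backend]:
    """Failover routing: use the first healthy backend in priority order."""
    for backend in backends:
        if backend.healthy:
            return backend
    return None


def pick_lowest_latency(backends: List[Backend]) -> Optional[Backend]:
    """Latency routing: use the healthy backend with the lowest latency."""
    healthy = [b for b in backends if b.healthy]
    return min(healthy, key=lambda b: b.latency_ms, default=None)


if __name__ == "__main__":
    # Illustrative numbers as seen by an external user.
    backends = [Backend("bmc", True, 40.0), Backend("gke", True, 20.0)]
    print("failover choice:", pick_failover(backends).name)
    print("latency choice:", pick_lowest_latency(backends).name)
```

With failover routing the list order expresses preference, so the secondary backend only receives traffic when the primary is unhealthy. With latency routing the same two backends can both serve traffic, depending on where each user sits.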

Abstraction and Automation Facilitate Hybrid Cloud

The widespread adoption of hybrid cloud environments, while offering flexibility and efficiency, introduces significant complexities surrounding governance, visibility, and consistent network management. To overcome these challenges, platform solutions like Google Anthos provide a crucial layer of abstraction and automation.