The EU AI Act, the world's first comprehensive regulatory framework for AI, came into force on August 1, 2024. This regulation governs the use of artificial intelligence in the European Union by enforcing strict risk management and transparency rules for high-risk AI projects.

The Act imposes fewer rules on lower-risk projects and outright prohibits certain AI practices. If you'd like to know exactly what the EU AI Act entails and whether your business is affected by this regulation, this is the right post for you.

This article provides a comprehensive overview of the EU AI Act. Jump in to learn about the Act's risk-based approach to AI projects and see how companies with high-risk AI systems can achieve compliance with the new regulation.

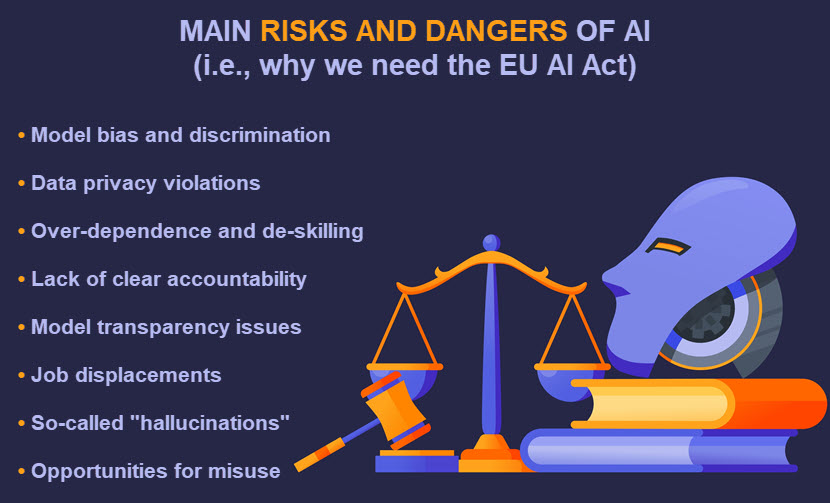

Want to learn why the EU AI Act is such a welcome addition? Check out our article on the main risks of AI to get a better sense of how dangerous it would be to not regulate this technology.

What Is the EU AI Act?

The EU AI Act is a first-of-its-kind regulation that dictates how companies operating within the EU can and cannot use AI technologies. The goal of the EU AI Act is to promote the development of human-centric and trustworthy AI, as well as improve the functioning of the AI market within the EU.

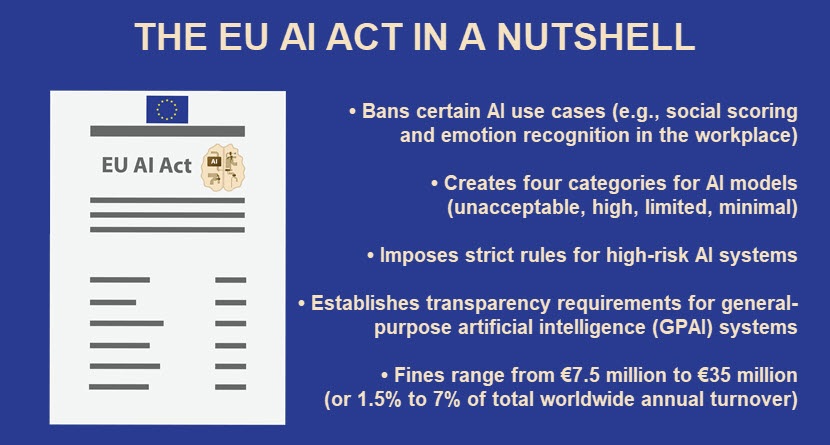

The EU AI Act classifies AI applications based on the likelihood and severity of potential harm they can cause to EU citizens. This risk-based approach categorizes AI systems into four classes:

- Minimal-risk applications, not subject to specific obligations under the Act.

- Limited-risk applications, which must only comply with transparency obligations.

- High-risk applications, which must comply with strict security, transparency, and quality obligations.

- Unacceptable-risk applications, which are banned in the EU.
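The four classes above can be sketched as a small lookup table. This is only an illustration: the `RiskTier` enum, the `OBLIGATIONS` mapping, and the `may_be_placed_on_market` helper are hypothetical names, and the obligation summaries simply restate the list above rather than the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk classes described in the list above."""
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Short summaries copied from the list above (not legal wording).
OBLIGATIONS = {
    RiskTier.MINIMAL: "no specific obligations under the Act",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.HIGH: "strict security, transparency, and quality obligations",
    RiskTier.UNACCEPTABLE: "banned in the EU",
}


def may_be_placed_on_market(tier: RiskTier) -> bool:
    """Only the unacceptable-risk class is banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


for tier in RiskTier:
    print(f"{tier.value}: {OBLIGATIONS[tier]}")
```

Keeping the tier as an enum rather than a free-text string makes it harder for an internal inventory of AI systems to end up with misspelled or ad hoc categories.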

The Act also introduces special rules for general-purpose AI (GPAI). All GPAI models must comply with transparency obligations, although open-source models face a lighter set of these obligations. High-capability GPAI models that pose systemic risk must undergo additional evaluations to meet safety, transparency, and accountability standards.

Businesses that fail to comply with the rules set by the EU AI Act will face hefty fines (Article 99). Here is an overview of non-compliance penalties:

- Penalties for using prohibited AI practices will be up to EUR 35 million or 7% of worldwide annual turnover (whichever is higher).

- Fines for other violations (including non-compliance with high-risk AI requirements) will be up to EUR 15 million or 3% of worldwide annual turnover (whichever is higher).

- Penalties for supplying incorrect, incomplete, or misleading information to authorities responsible for enforcing the Act will be up to EUR 7.5 million or 1% of worldwide annual turnover (whichever is higher).

The penalty system in the EU AI Act is comparable to the one used in the GDPR, an EU regulation that dictates how companies use, process, and store personal user data.
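The "whichever is higher" rule is simple arithmetic, and a short sketch makes the effect of company size visible. The function name `max_fine_eur` and the two example turnovers are assumptions made for illustration; the EUR 35 million and 7% figures are the prohibited-practice tier quoted above. The result is a ceiling, not a prediction of the fine a regulator would actually set.

```python
def max_fine_eur(fixed_cap_eur: int, turnover_percent: float,
                 annual_turnover_eur: int) -> float:
    """Return the ceiling of a fine: the higher of a fixed amount
    or a percentage of worldwide annual turnover."""
    turnover_based = annual_turnover_eur * turnover_percent / 100
    return max(fixed_cap_eur, turnover_based)


# Prohibited-practice tier: EUR 35 million or 7% of turnover.
# Hypothetical company with EUR 100 million turnover: 7% is EUR 7 million,
# so the fixed amount is the higher figure.
smaller_company = max_fine_eur(35_000_000, 7, 100_000_000)

# Hypothetical company with EUR 2 billion turnover: 7% is EUR 140 million,
# so the turnover-based amount is the higher figure.
larger_company = max_fine_eur(35_000_000, 7, 2_000_000_000)

print(smaller_company, larger_company)
```

For large companies the percentage dominates, which is why the ceiling scales with company size instead of staying at a flat amount.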

Who Does the EU AI Act Apply To?

The EU AI Act applies to all AI systems whose output is used within the EU (regardless of whether the system operator is based inside or outside the EU). The Act defines an AI system as any machine-based system that can autonomously process inputs and generate outputs (predictions, recommendations, decisions, content, etc.) that influence physical or virtual environments (Article 3 (1)).

The EU AI Act's scope distinguishes between different entities responsible for complying with the new regulation:

- Providers. A provider develops, markets, and places an AI system on the EU market or puts it into service under their name or trademark (whether for payment or free of charge).

- Deployers. A deployer uses an AI system within the EU for its intended purpose, whether for their own use or for providing a service.

- Importers. An importer places an AI system from a third country on the EU market.

- Distributors. A distributor makes an AI system available on the EU market but is not responsible for developing, marketing, or importing it.

- Authorized representative. An authorized representative is an EU-based natural or legal person appointed by a provider outside the EU to act on their behalf in relation to specific tasks under the EU AI Act.

Keep in mind that some entities can have overlapping roles. For example, a company can both develop and use an AI system within the EU, which would make that business both a provider and deployer.
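Because one company can hold several roles at once, a bit-flag type is a natural way to model them in an internal compliance inventory. The sketch below is illustrative only: the `Role` flag and the `roles_for` helper are hypothetical, and the two boolean inputs are a deliberate simplification of the role definitions above.

```python
from enum import Flag, auto


class Role(Flag):
    """Entity roles from the list above; a company may combine several."""
    PROVIDER = auto()
    DEPLOYER = auto()
    IMPORTER = auto()
    DISTRIBUTOR = auto()
    AUTHORIZED_REPRESENTATIVE = auto()


def roles_for(develops_and_markets: bool, uses_in_eu: bool) -> Role:
    """Simplified mapping: developing and marketing a system makes a company
    a provider, and using a system within the EU makes it a deployer."""
    roles = Role(0)  # start with no roles
    if develops_and_markets:
        roles |= Role.PROVIDER
    if uses_in_eu:
        roles |= Role.DEPLOYER
    return roles


# A company that both builds and uses its own AI system holds both roles.
company_roles = roles_for(develops_and_markets=True, uses_in_eu=True)
print(Role.PROVIDER in company_roles, Role.DEPLOYER in company_roles)
```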

Some users of AI are exempt from the EU AI Act (Article 2). The most notable exceptions are AI models designed purely for personal use and systems used only for scientific research and development. Models used solely for military and national security purposes are also exempt from the EU AI Act.

EU AI Act: AI Risk Categories

The EU AI Act defines four risk levels for AI systems (minimal, limited, high, and unacceptable). Let's take a closer look at each risk category.

Minimal Risk

The minimal risk class is the lowest level of risk in the EU AI Act. These systems do not pose any realistic threat to EU citizens. Common examples of applications within this category are:

- AI-powered spam filters.

- AI systems used in video games.

- Personalized content recommendation engines.

- AI-based grammar and spell checkers.

- Customer support chatbots that handle basic tasks, such as answering simple questions or guiding users through processes without making decisions.

AI systems that fall in the minimal risk category are not subject to any specific restrictions or mandatory obligations under the EU AI Act. However, the Act strongly suggests that these systems follow general principles of ethical AI, such as:

- Ensuring sufficient human oversight over the system.

- Eliminating discrimination and bias issues from the model.

- Ensuring adequate levels of fairness during system deployment and use.

Most AI applications currently on the market will end up in this category. EU Member States cannot impose additional regulations on minimal-risk applications, and the EU AI Act overrides any existing national laws regarding the design or use of such systems.

Limited Risk

Limited-risk applications are AI systems that pose a risk of manipulation or deception to users who are unaware they are interacting with an AI. This category primarily includes the following AI systems:

- Models that generate or manipulate images, sound, or videos.

- AI-based virtual assistants that provide personalized recommendations or advice.

- Sentiment analysis tools that categorize user emotions or opinions.

- Automated content moderation systems that flag or remove content based on predefined rules.

- AI chatbots that engage in complex interactions, especially those that influence user decisions or simulate human conversation to the point where users might not realize they are interacting with an AI.

AI systems within the limited-risk category are subject to strict transparency obligations. Providers and deployers must inform users that they are interacting with an AI system. Additionally, any content created by these systems must be clearly marked as AI-generated to prevent users from being misled or deceived by the output.
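In practice, these two duties (tell users they are talking to an AI, and mark generated content) often come down to a thin wrapper around the model's output. The following is a minimal sketch under stated assumptions: the wording of `AI_DISCLOSURE` and `AI_LABEL`, and the `LabeledOutput` type, are hypothetical and not prescribed by the Act.

```python
from dataclasses import dataclass

# Hypothetical wording; the Act does not prescribe these exact strings.
AI_DISCLOSURE = "You are interacting with an AI system."
AI_LABEL = "[AI-generated]"


@dataclass(frozen=True)
class LabeledOutput:
    """Content together with a flag stating whether an AI produced it."""
    text: str
    ai_generated: bool = True

    def render(self) -> str:
        """Prefix AI-generated content with a visible label."""
        if self.ai_generated:
            return f"{AI_LABEL} {self.text}"
        return self.text


def start_session() -> str:
    """Return the disclosure shown before the first exchange."""
    return AI_DISCLOSURE


print(start_session())
print(LabeledOutput("Here is a summary of your order.").render())
```

Carrying the `ai_generated` flag with the content, instead of adding the label as late as the user interface, keeps the marking intact when the content is stored or passed to another service.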

High Risk

AI applications in the high-risk category pose significant threats to the health, safety, or fundamental rights of EU citizens.

An AI system is considered high-risk if it is used as a product (or the safety component of a product) covered by specific product-related EU legislation in Annex 1 (civil aviation, vehicle safety, marine equipment, toys, lifts, pressure equipment, personal protective equipment). AI systems that operate in the following areas also belong in the high-risk category (Annex 3):

- Remote biometric identification.

- Critical infrastructure.

- Education.

- Employment.

- Credit scoring.

- Law enforcement.

- Migration.

- Administration of justice and democratic processes.

High-risk AI systems are the most heavily regulated systems allowed in the EU market. These systems are subject to strict quality, transparency, oversight, and safety obligations.

The EU AI Act allows exceptions from this category if an AI system falls within the bounds of Annex 3 but does not pose a significant threat to the health, safety, or rights of individuals. This exception applies to systems companies use to:

- Perform a narrow procedural task.

- Improve the result of a previously completed human activity.

- Detect deviations from prior decision-making patterns.

- Perform a preparatory task for an assessment.

Companies that believe their AI system is not a high-risk model must document their assessment before placing the AI system on the market. This assessment must be readily available if requested by regulatory bodies.

If the assessment review confirms that the AI system is not high-risk, the company can operate under the rules for the minimal or limited-risk categories. However, if regulators conclude that the system is high-risk after all, the company may face penalties or fines for non-compliance during the period it operated under incorrect assumptions. The specific penalties depend on the extent of non-compliance and the regulator's findings.

Unacceptable Risk

AI applications that fall under the unacceptable risk category are banned from the EU. Systems within this class are incompatible with EU values and fundamental human rights. Here are a few examples of such AI systems:

- AI systems for mass surveillance that violate privacy rights.

- Social scoring models that infringe on personal freedoms.

- Deepfake creation tools intended for malicious purposes (e.g., misinformation, phishing attacks, blackmail).

Most unacceptable-risk AI systems aim to manipulate human behavior or are inherently discriminative and unsafe. The deployment, use, or even the development of unacceptable-risk AI systems is prohibited in the EU.

EU AI Act: Compliance Requirements

Minimal-risk AI systems have no legal obligations under the EU AI Act, while limited-risk models only require that providers disclose the use of AI to their users. High-risk systems, however, must meet a long list of requirements. Let's see how companies with high-risk AI systems can achieve compliance.

Is your business using an AI model that might be deemed a high-risk system? Use the official Compliance Checker to better understand what legal obligations you have under the EU AI Act.

Risk Management

All operators of high-risk AI applications must establish, implement, document, and maintain a risk management strategy (Article 9). The Act obligates affected companies to perform the following tasks:

- Identify risks that the AI system can pose to the health, safety, or fundamental rights of users.

- Evaluate the consequences that may emerge when the high-risk AI system is used according to its intended purpose.

- Assess risks based on the analysis of data gathered from post-market monitoring referred to in Article 72.

Once companies identify and document potential threats, they must adopt appropriate and targeted precautions to address all reasonably foreseeable risks. These measures can include technical solutions like fail-safes and procedural steps like regular risk assessments.
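One common way to organize the identify, evaluate, and mitigate steps above is a risk register that scores each risk by likelihood and severity. The sketch below is an assumption-heavy illustration: the 1 to 5 scales, the multiplication-based `score`, the threshold of 10, and all names are hypothetical conventions, not requirements from Article 9.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical risk register."""
    description: str
    likelihood: int       # 1 (rare) to 5 (almost certain); illustrative scale
    severity: int         # 1 (negligible) to 5 (critical); illustrative scale
    mitigation: str = ""  # empty string means no measure documented yet

    @property
    def score(self) -> int:
        """Simple likelihood-times-severity score."""
        return self.likelihood * self.severity


def open_risks(register, threshold=10):
    """Return risks at or above the threshold that have no documented
    mitigation, highest score first."""
    pending = [r for r in register if r.score >= threshold and not r.mitigation]
    return sorted(pending, key=lambda r: r.score, reverse=True)


register = [
    Risk("biased output for a protected group", likelihood=4, severity=4),
    Risk("short service outage", likelihood=2, severity=3),
    Risk("training data leak", likelihood=3, severity=5,
         mitigation="encryption at rest"),
]

for risk in open_risks(register):
    print(risk.score, risk.description)
```

Re-running a check like this whenever post-market monitoring adds new entries keeps the register from becoming a one-off document.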

Data Governance

Companies must develop high-risk AI systems using high-quality data sets for training, validation, and testing (Article 10). Organizations must abide by the following data management practices:

- Companies must carefully plan the design of the AI system and have a full understanding of how the system uses data.

- Each piece of data must have a documented source and stated purpose.

- Companies must document limitations or constraints related to the data.

- Operations like labeling, cleaning, and updating the data must be conducted carefully and with consideration for data privacy.

- There must be an assessment of whether the available data is sufficient and appropriate for the intended purpose.

- Operators must thoroughly examine data sets for biases that could harm the fundamental human rights of EU citizens.

- Companies must have measures for active detection, prevention, and mitigation of identified biases (e.g., diversifying training data or adjusting model parameters).

These practices ensure that companies develop and maintain high-risk AI systems with a strong emphasis on data quality, transparency, and fairness.
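As a small example of the bias checks described above, the sketch below measures how well each group is represented in a data set and flags groups that fall under a chosen share. It is a starting point only: the `region` attribute, the 20% threshold, and the function names are assumptions, and representation share is just one of many possible bias indicators.

```python
from collections import Counter


def representation_shares(records, attribute):
    """Return each group's share of the records for the given attribute."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(records, attribute, min_share=0.2):
    """List groups whose share is below min_share (hypothetical threshold)."""
    shares = representation_shares(records, attribute)
    return sorted(group for group, share in shares.items() if share < min_share)


# Toy data set: nine records from one region and one from another.
training_records = [{"region": "north"}] * 9 + [{"region": "south"}]

print(representation_shares(training_records, "region"))
print(underrepresented(training_records, "region"))
```

A flagged group does not prove the model is biased, but it tells the team where to look first and gives them something concrete to record in the data documentation.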

Technical Documentation

Companies must prepare detailed technical documentation before they deploy a high-risk AI system (Article 11). Organizations must update this documentation whenever they upgrade or make changes to the system.

Documentation should prove that the AI system meets the Act's requirements and provide clear information for authorities to check compliance. It should cover the key elements listed in Annex 4, such as:

- The methods and steps performed during the development of the AI system.

- The design specifications of the system.

- Diagrams and flowcharts that illustrate the system's architecture.

- Datasheets describing the training methodologies and techniques.

- An overview of all the validation and testing procedures.

- Information about data sources, preprocessing methods, and training algorithms.

- An overview of all implemented security measures.

- Detailed information about the monitoring, functioning, and control of the AI system.

If a high-risk AI system is related to a product covered by other EU laws, one set of documentation should include all necessary information. SMBs and start-ups will be able to provide this information in a simpler way. The EU Commission plans to create a simplified form for this purpose.

Record-Keeping

The EU AI Act requires providers of high-risk AI systems to maintain comprehensive records related to their AI systems (Article 12). This requirement ensures accountability and traceability and enables regulatory authorities to verify compliance with the Act.

Here is what the Act expects companies to document and keep track of:

- Operational records that cover the different phases of the AI system, including any modifications, updates, and maintenance activities.

- Compliance records that demonstrate alignment with all relevant obligations under the EU AI Act.

- Records of the data used for training, validation, and testing of the AI model (including sources, processing methods, and any measures taken to ensure data quality and address biases).

- Incident records that document any issues encountered during the operation of the AI system (failures, security breaches, etc.).

All records must be made available to regulatory authorities upon request. Proper record-keeping also helps providers manage their systems effectively and address issues if and when they arise.
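A simple way to keep such records traceable is an append-only log with one timestamped JSON entry per line. The sketch below is a minimal illustration, not a compliant logging system: the four record kinds mirror the list above, while the field names, the `credit-model-v2` identifier, and the helper functions are hypothetical.

```python
import io
import json
from datetime import datetime, timezone

# Record kinds mirror the four bullet points above; the names are illustrative.
RECORD_KINDS = {"operational", "compliance", "data", "incident"}


def make_record(system_id: str, kind: str, detail: str) -> dict:
    """Build one timestamped record and reject unknown record kinds."""
    if kind not in RECORD_KINDS:
        raise ValueError(f"unknown record kind: {kind!r}")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "kind": kind,
        "detail": detail,
    }


def append_record(stream, record: dict) -> None:
    """Write the record as one JSON line; earlier lines are never rewritten."""
    stream.write(json.dumps(record, sort_keys=True) + "\n")


def read_records(stream) -> list:
    """Parse every non-empty line back into a dict, e.g. for an audit request."""
    return [json.loads(line) for line in stream if line.strip()]


# Usage: an in-memory stream stands in for a file opened in append mode.
log = io.StringIO()
append_record(log, make_record("credit-model-v2", "operational", "retrained on new data"))
append_record(log, make_record("credit-model-v2", "incident", "scoring service timeout"))
log.seek(0)
records = read_records(log)
print(len(records), records[1]["kind"])
```

One self-contained JSON object per line keeps the log easy to export when an authority asks for records covering a specific period or system.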

Transparency

The operation of high-risk AI systems must be transparent enough for users to understand and use the output correctly (Article 13).

High-risk AI systems must provide users with clear, complete, and relevant instructions about the system. User instructions must include contact details of the provider and their representative (if applicable), plus provide the following details about the AI system:

- The specific function and goals of the AI system.

- Potential risks to health and safety arising from its use or misuse.

- Information necessary for understanding the system's output.

- Performance metrics relevant to the target users (if applicable).

- Details about the data used for training, validation, and testing.

- Required computational and hardware resources, expected lifespan, and maintenance needs.

- Information on how the system collects, stores, and interprets logs.

User instructions must be clear and complete, but also presented in a way that is accessible and understandable to the intended users. For some use cases, companies will have to provide multilingual support or accessible formats for users with disabilities.

Human Oversight

High-risk AI systems must operate with appropriate human oversight (Article 14). Humans must be able to intervene or override decisions made by an AI system.

The purpose of this requirement is to ensure that high-risk AI systems do not operate entirely autonomously without human control. Companies with high-risk AI systems must ensure that:

- Humans have the ability to stop or alter the AI system's operations.

- Operators can review decisions or outputs produced by the AI system to ensure they align with ethical and legal standards.

Users and operators of high-risk AI systems must be adequately trained to understand and manage the system. The training must include guidance on how to use human oversight mechanisms.
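The two abilities listed above (stopping the system and reviewing or overriding its outputs) can be sketched as a gate that every proposed decision must pass through. This is one possible design under stated assumptions: `Decision`, `OversightGate`, and the reviewer callback are hypothetical names, and a real system would also need authentication, logging, and escalation paths.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Decision:
    """An outcome proposed by the AI system for a given subject."""
    subject: str
    outcome: str
    overridden_by_human: bool = False


# A reviewer returns a replacement outcome, or None to accept the proposal.
Reviewer = Callable[[Decision], Optional[str]]


class OversightGate:
    """Every proposed decision passes through a human reviewer, and an
    operator can halt the system entirely."""

    def __init__(self) -> None:
        self.halted = False

    def halt(self) -> None:
        """Stop switch: no further decisions are finalized after this call."""
        self.halted = True

    def finalize(self, proposed: Decision, reviewer: Reviewer) -> Decision:
        if self.halted:
            raise RuntimeError("system halted by a human operator")
        replacement = reviewer(proposed)
        if replacement is None:
            return proposed  # the human accepted the AI proposal
        return Decision(proposed.subject, replacement, overridden_by_human=True)


gate = OversightGate()
proposal = Decision(subject="application-17", outcome="reject")
final = gate.finalize(proposal, lambda decision: "refer to manual review")
print(final.outcome, final.overridden_by_human)
```

Recording whether a human changed the outcome also gives the record-keeping process evidence that the oversight mechanism is actually being used.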

Accuracy, Robustness, and Cyber Security

Article 15 of the EU AI Act pertains to expected standards in terms of accuracy, robustness, and cyber security. Here's an overview of the requirements outlined in this part of the Act:

- Accuracy. Providers must use appropriate methods to validate and verify the accuracy of their AI systems.

- Robustness. AI systems must be robust enough to handle a wide range of inputs and scenarios without malfunctioning. Providers should conduct stress testing and simulate different conditions to ensure the system's robustness.

- Cyber security. High-risk AI systems must have adequate cyber security measures in place to protect against unauthorized access, data breaches, leakage, and other cyber threats. Providers must implement strong security measures to safeguard the AI system and its data.

The requirements listed in Article 15 are crucial for maintaining trust in AI systems and safeguarding the interests of EU-based users.

The list of requirements for high-risk AI systems will likely change and expand over the next few years. We recommend subscribing to the EU AI Act Newsletter if you want to stay updated about your obligations.

EU AI Act: Prohibited Practices and Applications

The EU AI Act explicitly prohibits certain AI practices that are too risky for the EU market (Article 5). The Act bans the following practices:

- Distorting user behavior and impairing informed decision-making with subliminal, manipulative, or deceptive techniques (e.g., a system that influences people to vote for a particular political party without their knowledge or consent).

- Exploiting vulnerabilities related to age, disability, or socio-economic circumstances (e.g., a toy with a voice assistant that encourages children to do dangerous things).

- Using biometric data to categorize people by sensitive attributes (race, political opinions, trade union membership, religious beliefs, philosophical views, sex life, or sexual orientation).

- General-purpose social scoring (i.e., evaluating or classifying individuals or groups based on social behavior or personal traits).

- Assessing the risk of someone committing criminal offenses solely based on profiling or personality traits.

- Creating or expanding facial recognition databases by scraping images from the internet or CCTV footage.

- Inferring emotions in workplaces or educational institutions (unless the model serves a vital safety purpose, such as detecting if a driver falls asleep).

The EU AI Act also prohibits the use of real-time remote biometric identification (RBI) in publicly accessible spaces for law enforcement, except when authorities are trying to:

- Find missing persons, such as abduction victims or targets of human trafficking.

- Prevent a substantial and imminent threat to life (e.g., a foreseeable terrorist attack).

- Identify suspects in serious crimes (murder, armed robbery, narcotics, illegal weapons trafficking, etc.).

The list of prohibited practices will likely expand over time. The EU Commission reserves the right to alter the list of prohibited practices in the Act, so we can expect some changes in the future.

EU AI Act Enforcement Plan

The European Parliament passed the Act on March 13, 2024, and it entered into force on August 1, 2024. Although enforcement does not start on that date, the EU AI Act encourages voluntary compliance from all affected companies.

The enforcement of the Act's provisions will occur gradually over 36 months:

- After six months (February 2, 2025), the prohibitions on banned AI practices will take effect.

- After 12 months (August 2, 2025), the transparency obligations for GPAI will take effect for new models. GPAI models already on the market before that date must comply with the new rules by August 2, 2027.

- At 24 months (August 2, 2026), the grace period for high-risk AI systems ends, and the bulk of the operative provisions come into effect.

If you deploy a new high-risk AI system before August 2, 2026, it will be under a grace period. The system will not be subject to the full set of new requirements until August 2, 2026. The grace period allows companies to adjust and bring their systems into compliance over time rather than having to meet the new standards immediately.

Any high-risk AI system deployed after August 2, 2026, must meet all the requirements of the EU AI Act from the moment it is put on the market.
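To check which of these milestones already apply on a given day, the schedule can be encoded as a list of dates. The sketch below covers only the three start dates named above and uses shortened labels; the `MILESTONES` list and the `in_effect` helper are hypothetical, and the sketch ignores transition rules for systems already on the market.

```python
from datetime import date

# Start dates taken from the schedule above; labels are shortened summaries.
MILESTONES = [
    (date(2025, 2, 2), "prohibitions on banned AI practices"),
    (date(2025, 8, 2), "transparency obligations for new GPAI models"),
    (date(2026, 8, 2), "requirements for high-risk AI systems"),
]


def in_effect(on: date) -> list:
    """Return the labels of all milestones that have started by the given date."""
    return [label for start, label in MILESTONES if on >= start]


print(in_effect(date(2024, 8, 1)))  # the day the Act entered into force
print(in_effect(date(2025, 3, 1)))
print(in_effect(date(2026, 8, 2)))
```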

EU AI Act: FAQ

Below are the answers to some of the most frequently asked questions about the EU AI Act.

Who Will Enforce the EU AI Act?

The EU AI Act will be enforced by EU member states. Each member state must establish a market surveillance authority and a notifying authority to oversee the implementation of the regulation. The EU Commission's newly formed European Artificial Intelligence Board (EAIB) will advise and assist national authorities to ensure compliance with the regulations.

Is the EU AI Act in Force?

Yes, the EU AI Act has been in force since August 1, 2024. The enforcement of rules for high-risk AI systems will start on August 2, 2026, while the use of prohibited AI practices will become punishable on February 2, 2025.

When Was the EU AI Act Passed?

The EU AI Act was passed by the European Parliament on March 13, 2024, and was unanimously approved by the EU Council on May 21, 2024. The European Commission initially proposed the Act on April 21, 2021.

Paving the Way for Safe AI Use and Innovation

Like any technology, AI can be used irresponsibly, unethically, or with malicious intent. The EU AI Act is the first attempt to legally ensure this cutting-edge technology does not do more social harm than economic good.

The Act promotes safe AI innovation while preventing use cases that violate human rights or expose EU residents to unacceptable levels of risk.